GPU Application Analysis on Intel® HD Graphics and Intel® Iris® Graphics

Use the Intel® VTune™ Profiler to profile graphics applications and correlate activities on both the CPU and GPU.

Consider following these steps for GPU analysis with the VTune Profiler:

Set up your system for GPU analysis.

Run the GPU Offload analysis to identify whether your application is GPU bound and how effectively your code is offloaded to the GPU.

Run the GPU Compute/Media Hotspots analysis for detailed analysis of the GPU-bound application with explicit support of SYCL, Intel® Media SDK, and OpenCL™ software technology:

- Investigate execution of SYCL computing tasks (supported with VTune Profiler 2021)

You may also configure a custom analysis to collect GPU usage data. To do this, select the GPU Utilization option in the analysis configuration. This option introduces the least overhead during collection, the Analyze Processor Graphics hardware events option adds medium overhead, and the Trace GPU Programming APIs option adds the most overhead.
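The two collection steps above can also be driven from the command line. The following is a minimal sketch that only builds and prints the commands. It assumes the `vtune` command-line tool is installed and that the analysis types are named `gpu-offload` and `gpu-hotspots` in your version (check `vtune -help collect`). The application path, its arguments, and the result directory names are hypothetical, and no analysis-specific knobs are set.

```python
import shlex


def vtune_command(analysis, app_argv, result_dir):
    """Build an argv list for one VTune Profiler command-line collection."""
    return ["vtune", "-collect", analysis, "-result-dir", result_dir, "--", *app_argv]


# Hypothetical target application and arguments.
app = ["./my_gpu_app", "--frames", "100"]

# Step 1: check whether the application is GPU bound.
# Step 2: drill into the GPU-bound part with GPU Compute/Media Hotspots.
steps = [
    vtune_command("gpu-offload", app, "r_offload"),
    vtune_command("gpu-hotspots", app, "r_hotspots"),
]

for argv in steps:
    # Print a copy-pasteable shell command; pass argv to subprocess.run to execute it.
    print(shlex.join(argv))
```

Printing the commands instead of running them keeps the sketch safe to try on a machine without a supported GPU.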

Analyze GPU Usage for GPU-Bound Applications

If you have already identified that your application or some of its stages are GPU bound, run the GPU Compute/Media Hotspots analysis in the Characterization mode to see whether the GPU engines are used effectively and whether there is room for improvement. This analysis relies on hardware metrics that the VTune Profiler collects for the Render and GPGPU engine of Intel Graphics.

Explore GPU Hardware Metrics

GPU hardware metrics provide the next level of detail for analyzing GPU activity and identifying whether any performance improvements are possible. You may configure the GPU Compute/Media Hotspots analysis to collect the following types of GPU event metrics on the Render and GPGPU engine of Intel Graphics:

- Overview (default) group analyzes general activity of GPU execution units, sampler, general memory, and cache accesses.

- Compute Basic (with global/local memory accesses) group analyzes accesses to different types of GPU memory.

- Compute Extended (for Intel® Core™ M processors and higher)

- Full Compute group combines metrics from the Overview and Compute Basic presets and presents them in the same view, which helps explore the reasons why the GPU execution units were waiting. To use this event set, make sure to enable the multiple runs mode in the target properties.

Start with the Overview events group and then move to the Compute Basic (global/local memory accesses) group. Compute Basic metrics are most effective when you analyze computing work on a GPU with the GPU Utilization events option enabled (default for the GPU Compute/Media Hotspots analysis), which allows you to correlate GPU hardware metrics with an exact GPU load.

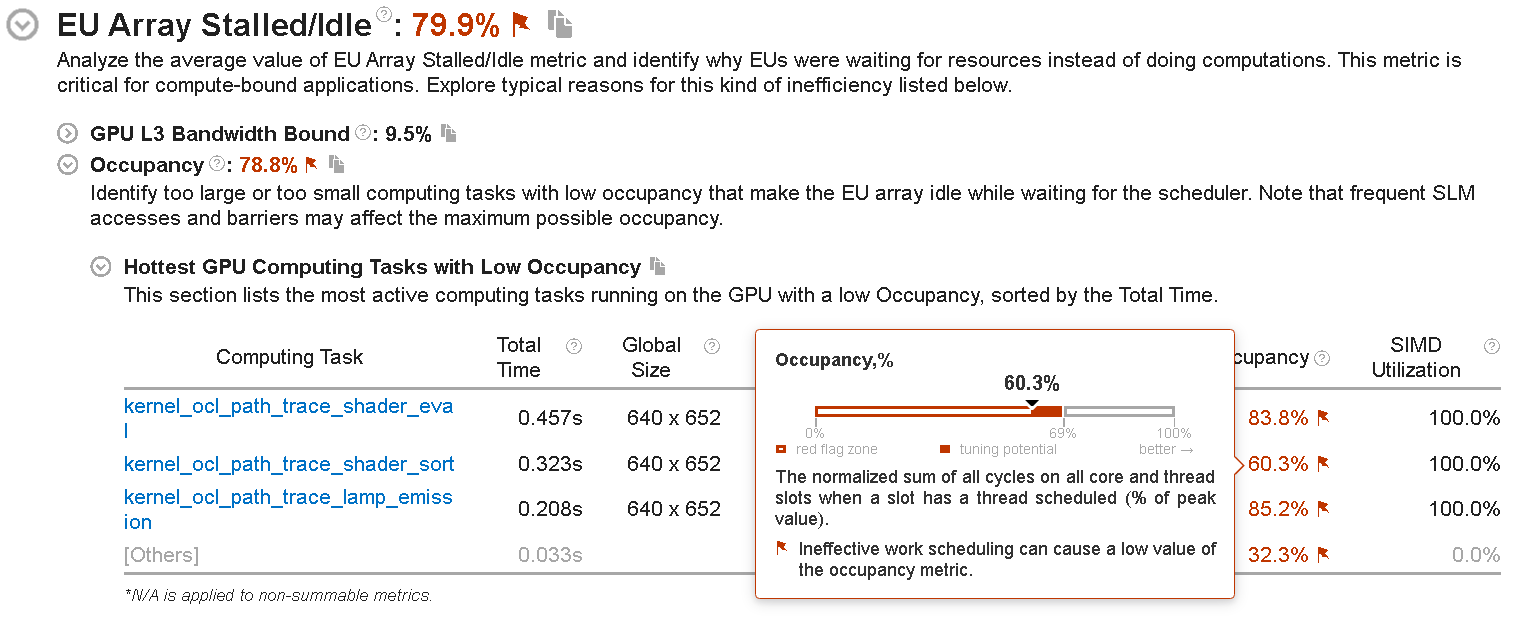

When the data is collected, explore the EU Array Stalled/Idle section of the Summary window to identify the most typical reasons why the execution units could be waiting.

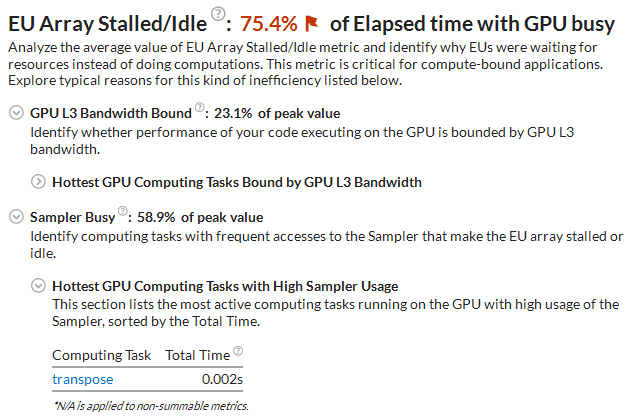

Depending on the event preset you used for the configuration, the VTune Profiler analyzes metrics for stalled/idle execution units. By default, the GPU Compute/Media Hotspots analysis collects the Overview preset, which includes the metrics that track general GPU memory accesses, such as Sampler Busy and Sampler Is Bottleneck, and GPU L3 bandwidth. As a result, the EU Array Stalled/Idle section displays the Sampler Busy section with a list of GPU computing tasks that frequently access the Sampler, and the hottest GPU computing tasks bound by GPU L3 bandwidth:

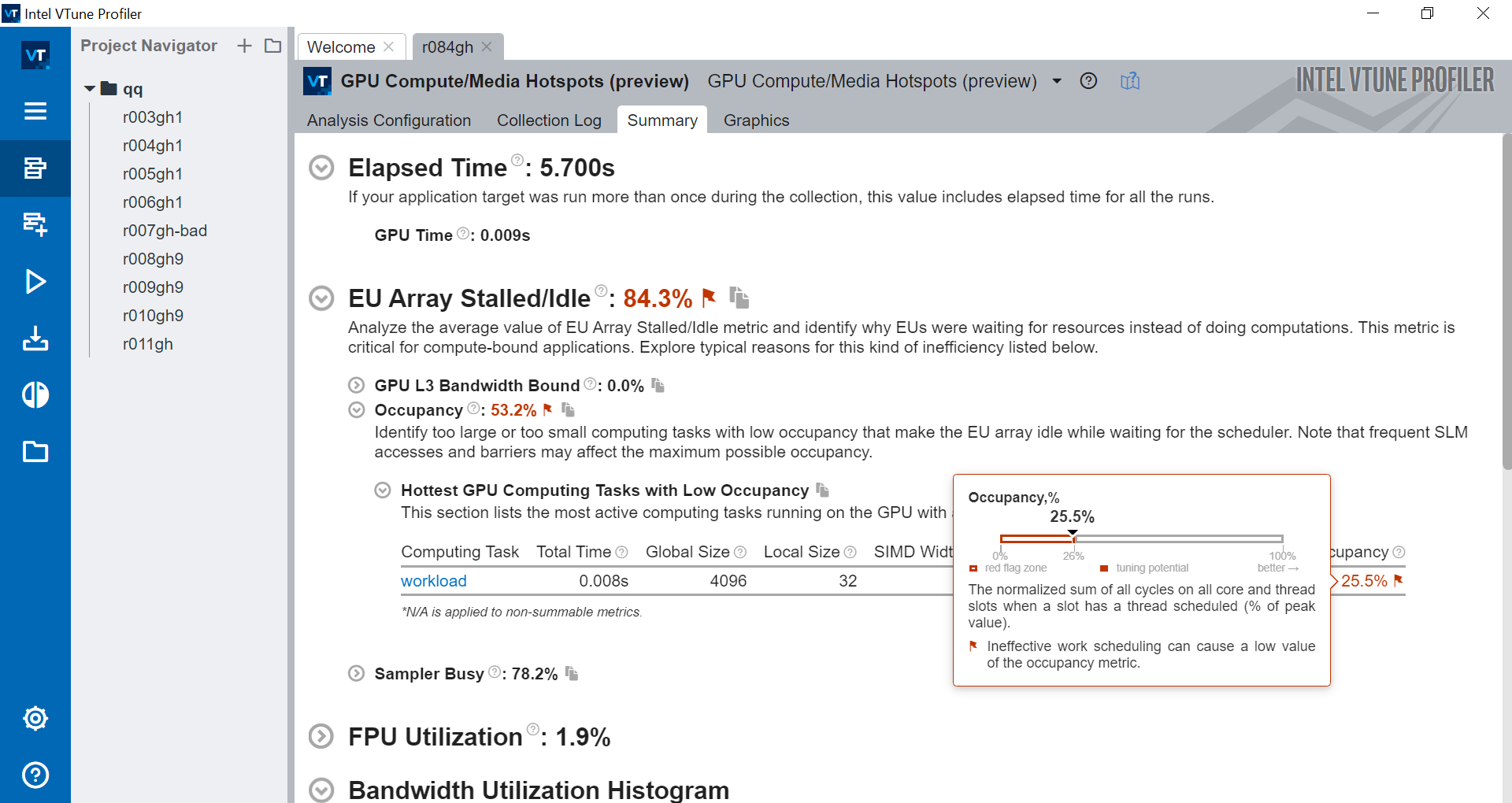

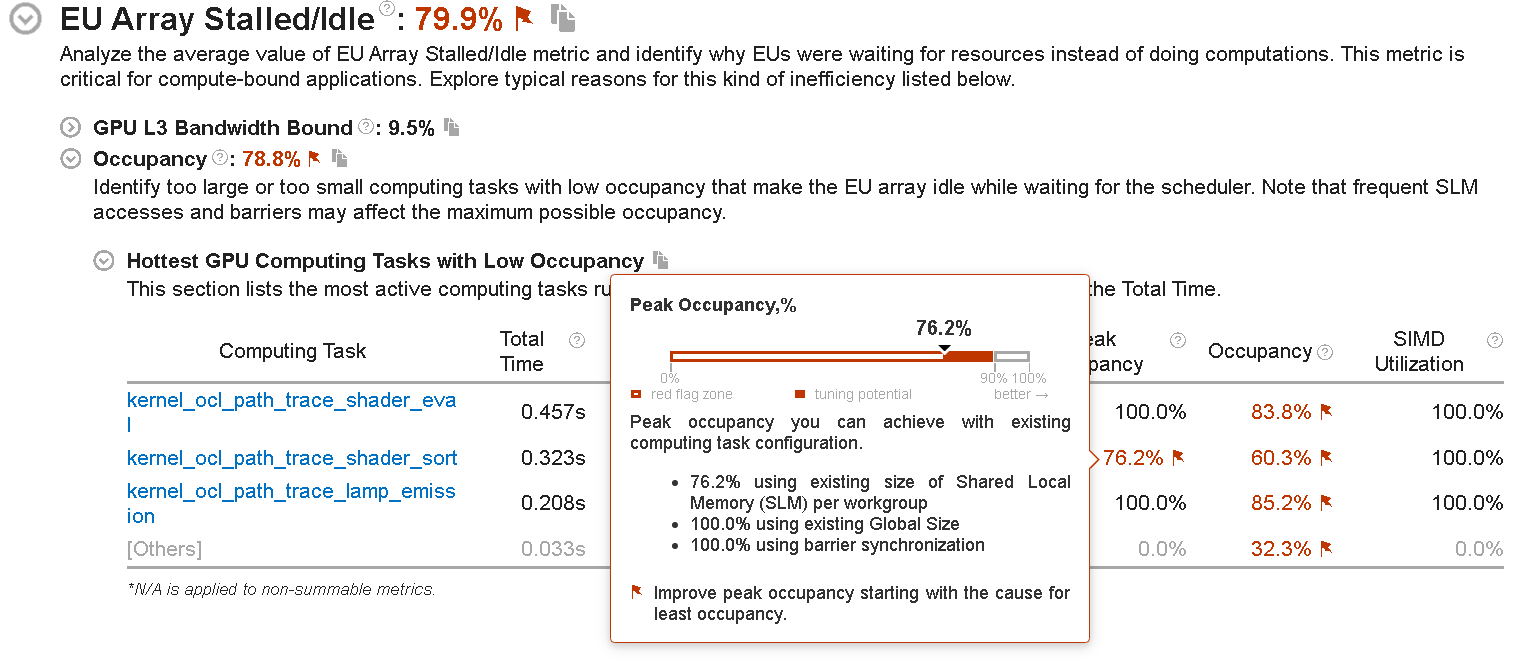

If you select the Compute Basic preset during the analysis configuration, VTune Profiler analyzes metrics that distinguish accessing different types of data on a GPU and displays the Occupancy section. See information about GPU tasks with low occupancy and understand how you can achieve peak occupancy:

If the peak occupancy is flagged as a problem for your application, inspect factors that limit the use of all the threads on the GPU. Consider modifying your code with corresponding solutions:

| Factor responsible for Low Peak Occupancy | Solution |
|---|---|
| SLM size requested per workgroup in a computing task is too high | Decrease the SLM size or increase the Local size |
| Global size (the number of working items to be processed by a computing task) is too low | Increase Global size |
| Barrier synchronization (the sync primitive can cause low occupancy due to a limited number of hardware barriers on a GPU subslice) | Remove barrier synchronization or increase the Local size |
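To see how the three factors in the table interact, the sketch below estimates peak occupancy from a simplified model. It is not the formula VTune Profiler uses. All hardware limits in `Subslice`, the SIMD width, and the subslice count are hypothetical placeholders, and the model assumes each workgroup runs entirely on one subslice.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Subslice:
    # Hypothetical per-subslice limits; look up the real values for your GPU.
    eus: int = 8
    threads_per_eu: int = 7
    slm_bytes: int = 64 * 1024
    barriers: int = 16


def peak_occupancy(global_size, local_size, slm_bytes_per_wg=0, uses_barrier=False,
                   simd_width=16, subslices=3, hw=Subslice()):
    """Return (estimated peak occupancy in [0, 1], limiting factor or None)."""
    threads_per_wg = math.ceil(local_size / simd_width)
    hw_threads = hw.eus * hw.threads_per_eu  # hardware threads per subslice

    # How many workgroups fit on one subslice under each hardware limit.
    limits = {"local size (thread granularity)": hw_threads // threads_per_wg}
    if slm_bytes_per_wg:
        limits["SLM size"] = hw.slm_bytes // slm_bytes_per_wg
    if uses_barrier:
        limits["barriers"] = hw.barriers

    factor = min(limits, key=limits.get)
    capacity_wgs = limits[factor] * subslices

    # A small Global size may not supply enough workgroups to fill the GPU.
    total_wgs = math.ceil(global_size / local_size)
    if total_wgs < capacity_wgs:
        factor, resident_wgs = "global size", total_wgs
    else:
        resident_wgs = capacity_wgs

    occupancy = resident_wgs * threads_per_wg / (hw_threads * subslices)
    return occupancy, (None if occupancy >= 1.0 else factor)


print(peak_occupancy(1 << 20, 128))                              # no limiting factor
print(peak_occupancy(1 << 20, 128, slm_bytes_per_wg=32 * 1024))  # limited by SLM size
print(peak_occupancy(1 << 20, 16, uses_barrier=True))            # limited by barriers
print(peak_occupancy(512, 128))                                  # limited by global size
```

In this model, increasing the Local size raises the number of threads per workgroup, so the same SLM or barrier limit on workgroups admits more threads. That matches the solutions in the table.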

If the occupancy is flagged as a problem for your application, change your code to improve hardware thread scheduling. These are some reasons that may be responsible for ineffective thread scheduling:

- A tiny computing task could cause considerable overhead when compared to the task execution time.

- There may be high imbalance between the threads executing a computing task.
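Both scheduling problems can be put into numbers. The sketch below is a rough illustration with hypothetical helper names and made-up timings. It computes the share of time lost to per-task overhead and a simple imbalance ratio (slowest thread time divided by mean thread time). VTune Profiler does not report these exact quantities.

```python
from statistics import mean


def launch_overhead_ratio(overhead_us, execution_us):
    """Fraction of a task's wall time spent on overhead rather than execution."""
    return overhead_us / (overhead_us + execution_us)


def imbalance(thread_times_us):
    """Slowest thread time relative to the mean; 1.0 means perfectly balanced."""
    return max(thread_times_us) / mean(thread_times_us)


# A tiny task: 5 us of work behind 20 us of overhead (made-up numbers).
print(launch_overhead_ratio(20, 5))

# One thread takes far longer than the others, so the rest wait for it.
print(imbalance([10, 10, 10, 50]))
```

A high overhead ratio suggests merging small tasks into larger ones, and an imbalance ratio well above 1.0 suggests redistributing work across threads.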

The Compute Basic preset also enables an analysis of the DRAM bandwidth usage. If the GPU workload is DRAM bandwidth-bound, the corresponding metric value is flagged. You can explore the table with GPU computing tasks heavily using the DRAM bandwidth during execution.

If you select the Full Compute preset and the multiple runs mode during the analysis configuration, the VTune Profiler uses both the Overview and Compute Basic event groups for data collection and shows all types of reasons for the EU array stalled/idle issues in the same view.

To analyze Intel® HD Graphics and Intel® Iris® Graphics hardware events, make sure to set up your system for GPU analysis.

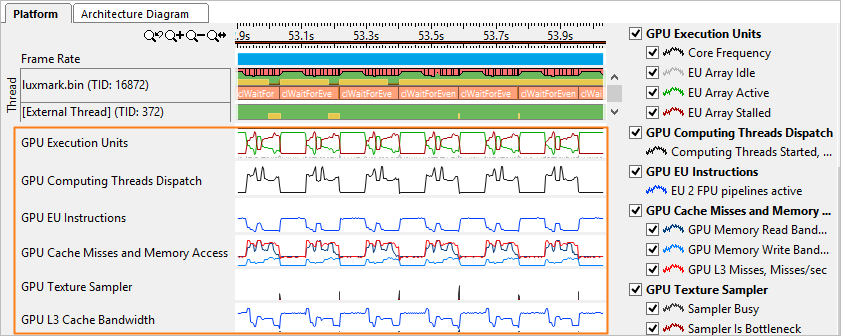

To analyze GPU performance data per hardware metric over time, open the Graphics window and focus on the Timeline pane. The list of GPU metrics displayed in the Graphics window depends on the hardware events preset selected during the analysis configuration.

The example below shows the Overview group of metrics collected for the GPU bound application:

The first metric to look at is the GPU Execution Units: EU Array Idle metric. Idle cycles are wasted cycles: no threads are scheduled and the computational resources of the EUs are not being used. If EU Array Idle is zero, the GPU is reasonably loaded and all EUs have threads scheduled on them.

In most cases the optimization strategy is to minimize the EU Array Stalled metric and maximize the EU Array Active. The exception is memory bandwidth-bound algorithms and workloads where optimization should strive to achieve a memory bandwidth close to the peak for the specific platform (rather than maximize EU Array Active).

Memory accesses are the most frequent reason for stalls. The importance of memory layout and carefully designed memory accesses cannot be overestimated. If the EU Array Stalled metric value is non-zero and correlates with the GPU L3 Misses, and if the algorithm is not memory bandwidth-bound, you should try to optimize memory accesses and layout.

Sampler accesses are expensive and can easily cause stalls. Sampler accesses are measured by the Sampler Is Bottleneck and Sampler Busy metrics.
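The order of checks described above can be written as a small triage function. This is a sketch only. The function name, the threshold values, and the `bandwidth_fraction_of_peak` input are assumptions made for illustration and are not values used by VTune Profiler.

```python
def triage_eu_metrics(active, stalled, idle, bandwidth_fraction_of_peak=None,
                      idle_threshold=0.05, stalled_threshold=0.20,
                      bandwidth_threshold=0.80):
    """Suggest a next step from EU Array Active/Stalled/Idle fractions (0..1).

    All thresholds are hypothetical; tune them for your workload.
    """
    if idle > idle_threshold:
        return "idle: EUs have no threads scheduled, check occupancy and task sizes"
    if (bandwidth_fraction_of_peak is not None
            and bandwidth_fraction_of_peak >= bandwidth_threshold):
        return "bandwidth-bound: aim for peak memory bandwidth, not EU Array Active"
    if stalled > stalled_threshold:
        return "stalled: correlate with GPU L3 Misses and Sampler metrics, rework memory accesses"
    return "active: EUs are mostly busy"


print(triage_eu_metrics(active=0.30, stalled=0.10, idle=0.60))
print(triage_eu_metrics(active=0.40, stalled=0.58, idle=0.02))
print(triage_eu_metrics(active=0.40, stalled=0.58, idle=0.02,
                        bandwidth_fraction_of_peak=0.90))
```

The bandwidth check comes before the stall check because a workload that already runs near peak memory bandwidth is expected to show stalls, and reducing them is not the goal there.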

Explore Execution of OpenCL™ Kernels

If you know that your application uses OpenCL software technology and the GPU Computing Threads Dispatch metric in the Timeline pane of the Graphics window confirms that your application is doing substantial computation work on the GPU, you may continue your analysis and capture the timing (and other information) of OpenCL kernels running on Intel Graphics. To run this analysis, enable the Trace GPU Programming APIs option during analysis configuration. The GPU Compute/Media Hotspots analysis enables this option by default.

The Summary view shows OpenCL kernels running on the GPU in the Hottest GPU Computing Tasks section and flags the performance-critical kernels. Clicking such a kernel name opens the Graphics window grouped by Computing Task (GPU) / Instance. You may also want to group the data in the grid by the Computing Task. VTune Profiler identifies the following computing task purposes: Compute (kernels), Transfer (OpenCL routines responsible for transferring data from the host to a GPU), and Synchronization (for example, clEnqueueBarrierWithWaitList).

The corresponding columns show the overall time a kernel ran on the GPU and the average time for a single invocation (corresponding to one call of clEnqueueNDRangeKernel), work group sizes, and averaged GPU hardware metrics collected for the kernel. Hover over a metric column header to read the metric description. If a metric value for a computing task exceeds a threshold set up by Intel architects for the metric, this value is highlighted in pink, which signals a performance issue. Hover over such a value to read the issue description.

Analyze and optimize hot kernels with the longest Total Time values first. These include kernels with long average time values and kernels whose average time is not long but which are invoked more frequently than the others. Both groups deserve attention.
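The following sketch shows why both groups matter: Total Time is the average time multiplied by the number of invocations, so a short but frequently invoked kernel can outrank a long one. The kernel names and timings are made up for illustration.

```python
from collections import namedtuple

Kernel = namedtuple("Kernel", ["name", "avg_time_us", "instances"])

# Hypothetical kernels: one slow, one short but invoked very often, one negligible.
kernels = [
    Kernel("blur", avg_time_us=4000, instances=10),
    Kernel("reduce", avg_time_us=50, instances=2000),
    Kernel("init", avg_time_us=1000, instances=1),
]


def total_time_us(kernel):
    """Total GPU time of a kernel across all of its invocations."""
    return kernel.avg_time_us * kernel.instances


# Optimize in this order: longest Total Time first.
ranked = sorted(kernels, key=total_time_us, reverse=True)
for k in ranked:
    print(f"{k.name}: total {total_time_us(k)} us "
          f"({k.instances} x {k.avg_time_us} us)")
```

Here `reduce` comes first even though a single invocation takes only 50 us, because its 2000 invocations add up to more GPU time than the ten invocations of `blur`.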

To view details on OpenCL kernels submission and analyze the time spent in the queue, explore the Computing Queue data in the Timeline pane of the Graphics or Platform window.

Explore Execution of Intel Media SDK Tasks

If you enabled both the GPU Utilization and Trace GPU Programming APIs options for the Intel Media SDK program analysis, use the Graphics window to correlate data for the Intel Media SDK tasks execution with the GPU software queue data.

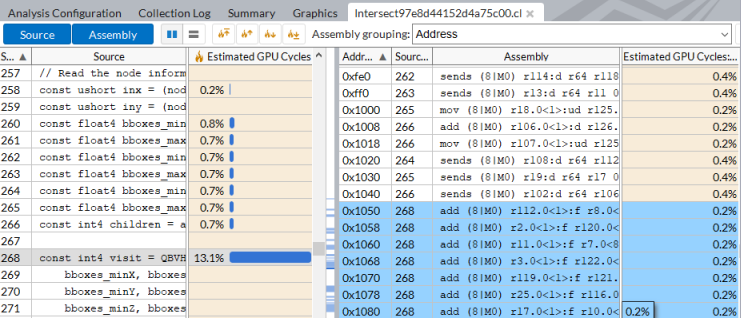

Analyze GPU Kernels Per Code Line

You can run the GPU Compute/Media Hotspots analysis in the Code-Level Analysis mode to narrow down your GPU analysis to a specific hot GPU kernel identified with the GPU Offload analysis. This analysis helps identify performance-critical basic blocks or issues caused by memory accesses in the GPU kernels by providing performance statistics per code line/assembly instruction: