Visible to Intel only — GUID: tof1696535020995

Ixiasoft

5.8. Bandwidth Sharing At Each Switch

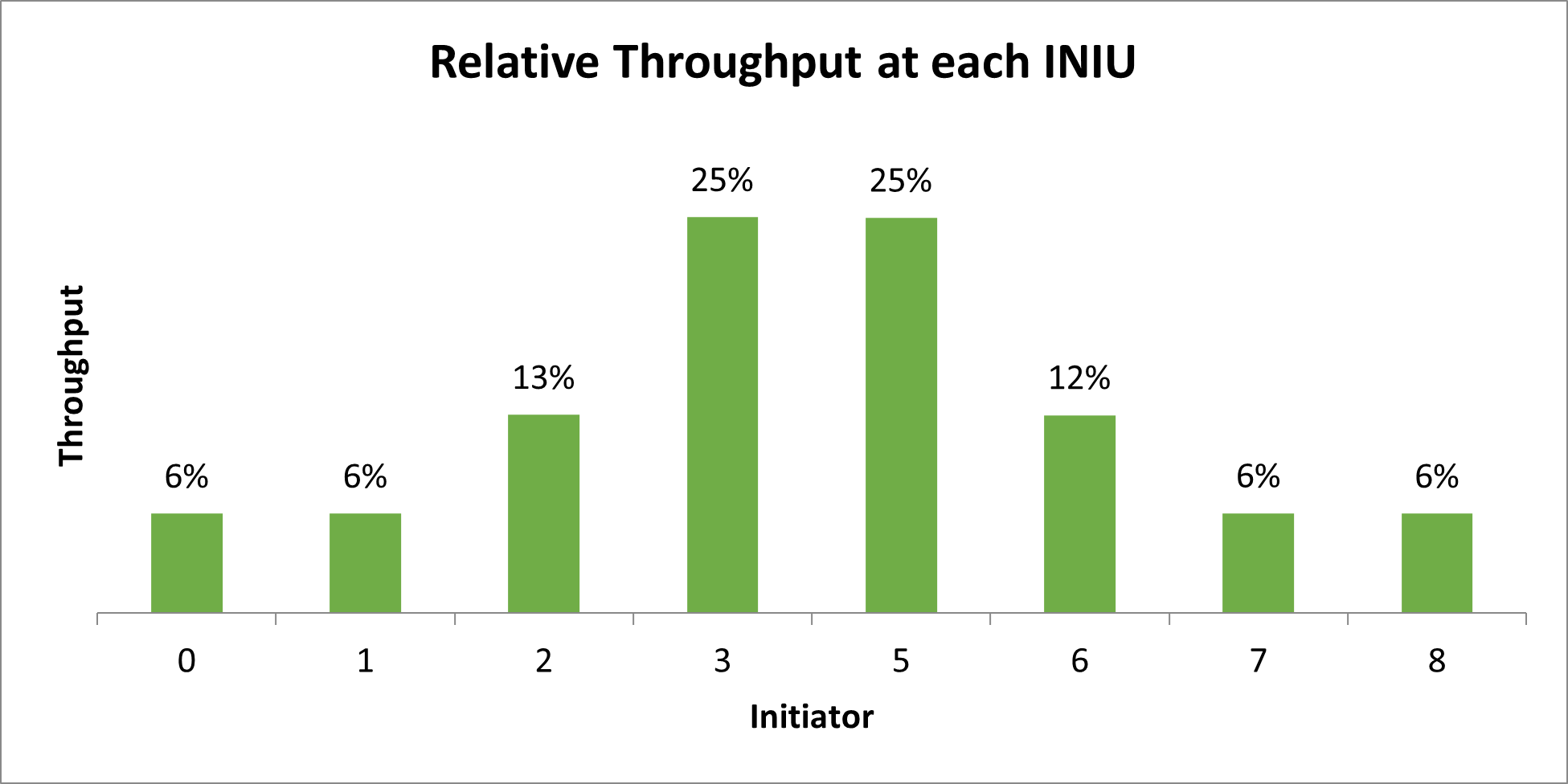

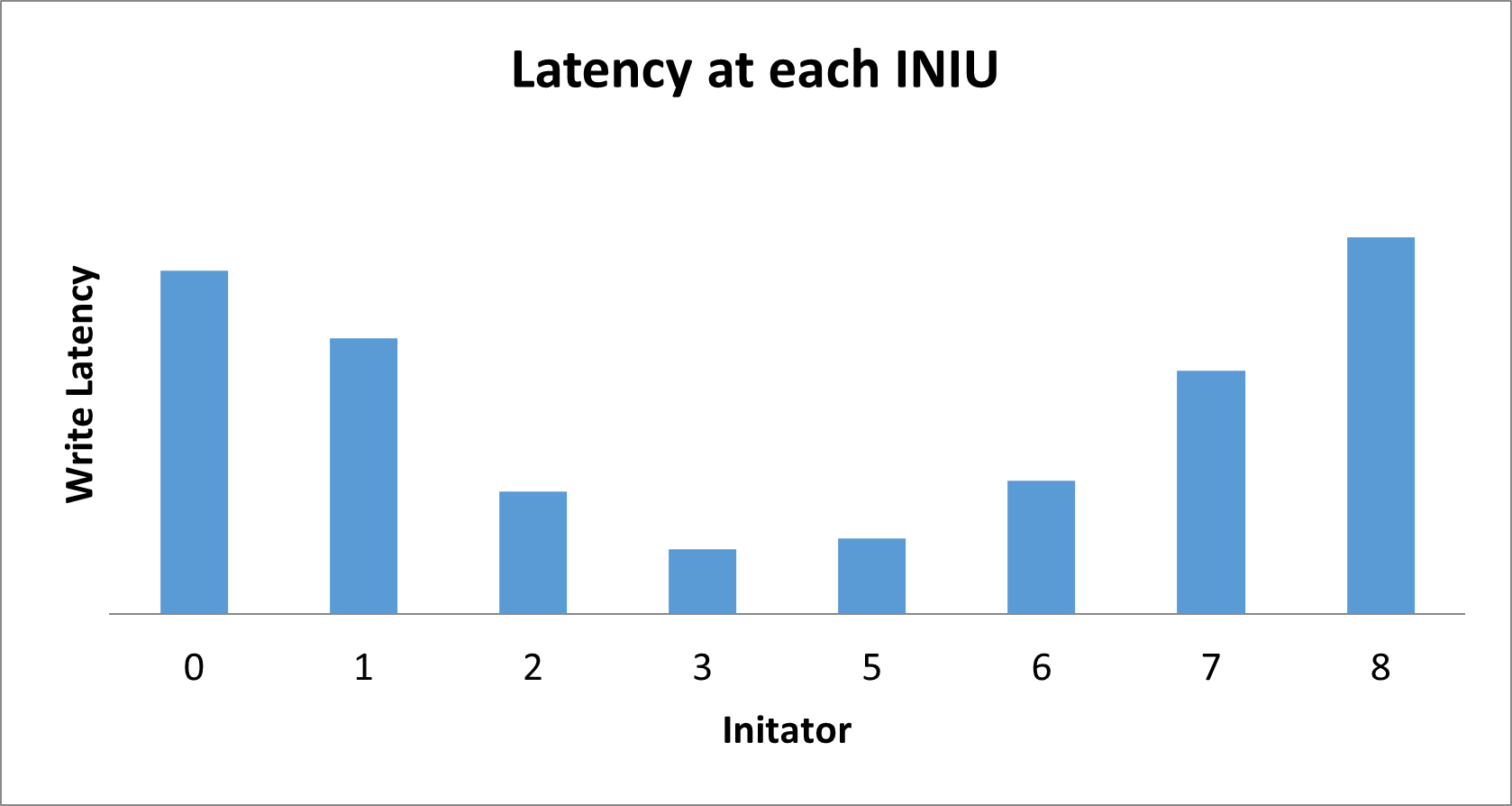

Bandwidth sharing at each switch can affect NoC performance. When fully loaded, each switch in the NoC divides access evenly among its inputs. This even split can starve connections that travel long distances across the NoC. Consider its impact on the following topology, in which 8 initiators (INIU) write to one target (TNIU). Because all of the initiators access the same target, they all share the same link into it.

At the middle switch, the available bandwidth is divided equally between the left and right halves of the initiators. Therefore, you can expect equal aggregate performance from the two halves: initiators 1, 2, 3, and 4 collectively receive the same bandwidth as initiators 6, 7, 8, and 9.

However, this division continues along the left side (and similarly down the right side). At the switch above initiator 4, the bandwidth is split evenly between initiator 4 and the set of initiators 1, 2, and 3. At the next switch (above initiator 3), the bandwidth is split evenly between initiator 3 and the set of initiators 1 and 2. Finally, initiators 1 and 2 share the remaining bandwidth equally. Under an ideal even split, initiator 4 therefore receives 1/4 of the link bandwidth, initiator 3 receives 1/8, and initiators 1 and 2 receive 1/16 each. The collective result is a continuous reduction of bandwidth down the NoC, as Relative Throughput At Each Initiator (INIU) shows.
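The halving can be checked with a short calculation. The following Python sketch assumes an idealized, fully loaded chain in which every switch has exactly two inputs (one local initiator and the rest of the chain) and splits bandwidth exactly 50/50. `chain_shares` is a hypothetical helper for illustration, not part of any Intel tool. It returns each initiator's fraction of the target link for one side of the middle switch, outermost initiator first.

```python
from fractions import Fraction


def chain_shares(num_initiators: int) -> list[Fraction]:
    """Fraction of the target link each initiator on one side receives.

    Hypothetical model: the middle switch gives this side 1/2 of the link,
    and every switch along the chain splits its bandwidth evenly between
    its local initiator and the initiators farther out.
    """
    shares = []
    remaining = Fraction(1, 2)  # this side's half of the middle switch
    # Walk outward from the initiator nearest the middle switch.
    for _ in range(num_initiators - 1):
        remaining /= 2             # this switch splits evenly
        shares.append(remaining)   # the local initiator takes one half
    shares.append(remaining)       # the outermost initiator keeps the rest
    return list(reversed(shares))  # outermost initiator first


# Initiators 1, 2, 3, 4 on the left side of the example topology.
print([str(s) for s in chain_shares(4)])  # ['1/16', '1/16', '1/8', '1/4']
```

Using `Fraction` keeps the shares exact, so it is easy to confirm that they add up to the 1/2 the middle switch grants to each side.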

In systems where all of the initiators issue transactions at an unbounded rate, expect reduced performance for connections that span long distances and merge with high-throughput traffic at switches along the path. Latency also increases for the outer initiators because of the added queuing at each switch.
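A small cycle-level model can contrast the unbounded-rate case with initiators that issue at a sustainable rate. The following Python sketch rests on assumptions and does not describe the actual NoC switch microarchitecture. It treats every switch as a two-input, work-conserving round-robin arbiter, lets the target link accept one transaction per cycle, and numbers the right side so that initiator 6 is nearest the middle switch. `Initiator`, `Switch`, `build_side`, and `simulate` are hypothetical names. The `interval` parameter sets how often each initiator queues a new transaction, and `interval=1` models an unbounded issue rate.

```python
class Initiator:
    """Traffic source (INIU) that queues one transaction every `interval` cycles."""

    def __init__(self, name: str, interval: int) -> None:
        self.name = name
        self.interval = interval  # 1 = unbounded rate: a new request every cycle
        self.pending = 0          # queued transactions still waiting for a grant
        self.granted = 0          # transactions delivered to the target

    def tick(self, cycle: int) -> None:
        if cycle % self.interval == 0:
            self.pending += 1

    def has_request(self) -> bool:
        return self.pending > 0

    def grant(self) -> None:
        self.pending -= 1
        self.granted += 1


class Switch:
    """Two-input, work-conserving round-robin arbiter (assumed switch model)."""

    def __init__(self, first, second) -> None:
        self.inputs = [first, second]
        self.last = 1  # index granted most recently, so input 0 wins first

    def has_request(self) -> bool:
        return any(node.has_request() for node in self.inputs)

    def grant(self) -> None:
        # Prefer the input that was not granted last time; fall back to the
        # same input if the other one is idle (work-conserving).
        for offset in (1, 2):
            idx = (self.last + offset) % 2
            if self.inputs[idx].has_request():
                self.last = idx
                self.inputs[idx].grant()
                return


def build_side(initiators: list[Initiator]) -> "Initiator | Switch":
    """Chain initiators (outermost first); return the switch nearest the middle."""
    node = initiators[0]
    for initiator in initiators[1:]:
        node = Switch(initiator, node)  # local initiator vs. everything farther out
    return node


def simulate(interval: int, cycles: int) -> dict[str, int]:
    left = [Initiator(str(n), interval) for n in (1, 2, 3, 4)]
    right = [Initiator(str(n), interval) for n in (9, 8, 7, 6)]
    middle = Switch(build_side(left), build_side(right))
    for cycle in range(cycles):
        for initiator in left + right:
            initiator.tick(cycle)
        if middle.has_request():
            middle.grant()  # the target link accepts one transaction per cycle
    everyone = sorted(left + right, key=lambda i: int(i.name))
    return {i.name: i.granted for i in everyone}


print(simulate(interval=1, cycles=1600))
# {'1': 100, '2': 100, '3': 200, '4': 400, '6': 400, '7': 200, '8': 100, '9': 100}
print(simulate(interval=16, cycles=1600))
# {'1': 100, '2': 100, '3': 100, '4': 100, '6': 100, '7': 100, '8': 100, '9': 100}
```

With saturated sources, the outermost initiators receive a quarter of the throughput of the initiators nearest the middle switch. Their `pending` counters also grow fastest, which is a rough stand-in for the added queuing latency. When every initiator issues at a rate the link can sustain (`interval=16` in this model), the arbiters serve every request and all initiators receive equal throughput.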

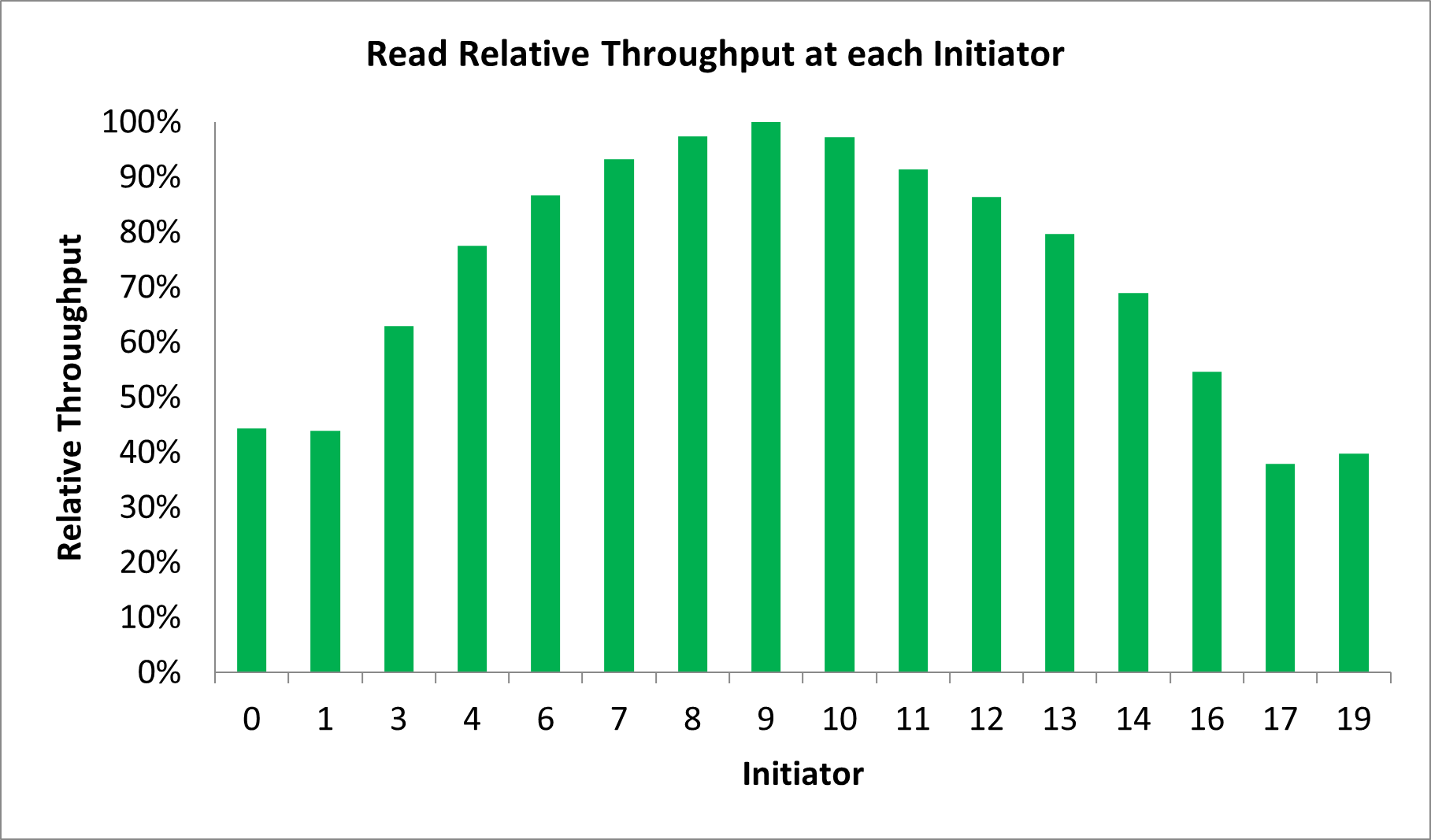

More complex interactions are possible, but the net effect is still a reduction in bandwidth for initiators that are farther from their targets. Consider an example of a 16x16 crossbar on HBM, and note the reduced throughput of the outer initiators even though all initiators attempt identical access to all targets.

You can use the QoS priority features to improve performance in these scenarios. It can also help to use IP that limits how fast it issues transactions on the NoC, or to add system-wide synchronization so that other parts of the system get bandwidth:

- Self-limiting means that a block attached to each individual initiator monitors how much traffic that initiator generates, and throttles the number of transactions it issues in a given time window.

- System-wide synchronization means that a centralized control block coordinates the initiators, so that one initiator cannot issue more transactions until the other initiators complete their transactions. This centralized coordination guarantees fair sharing of bandwidth.
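The two policies in the list can be sketched in Python as follows. Both classes are hypothetical illustrations of the policies, not Intel IP or APIs. `WindowThrottle` models a self-limiting block as a fixed-window counter that allows at most `max_txns` transactions per `window` cycles. `RoundCoordinator` models a centralized control block that allows each initiator `quota` transactions per round and opens the next round only after every initiator has completed its quota.

```python
class WindowThrottle:
    """Self-limiting block for one initiator: fixed-window transaction counter."""

    def __init__(self, max_txns: int, window: int) -> None:
        self.max_txns = max_txns  # transactions allowed per window
        self.window = window      # window length in cycles
        self.current_window = 0
        self.issued = 0

    def try_issue(self, cycle: int) -> bool:
        """Return True (and count the transaction) if it may issue this cycle."""
        window_id = cycle // self.window
        if window_id != self.current_window:
            # A new window has started: reset the count.
            self.current_window = window_id
            self.issued = 0
        if self.issued < self.max_txns:
            self.issued += 1
            return True
        return False


class RoundCoordinator:
    """Centralized control block: every initiator gets `quota` transactions
    per round, and no initiator starts a new round until all have finished."""

    def __init__(self, names: list[str], quota: int) -> None:
        self.quota = quota
        self.issued = {name: 0 for name in names}
        self.completed = {name: 0 for name in names}

    def try_issue(self, name: str) -> bool:
        """Return True (and count the transaction) if `name` is under its quota."""
        if self.issued[name] < self.quota:
            self.issued[name] += 1
            return True
        return False

    def complete(self, name: str) -> None:
        """Record a completed transaction; start a new round when all are done."""
        self.completed[name] += 1
        if all(done == self.quota for done in self.completed.values()):
            for key in self.issued:
                self.issued[key] = 0
                self.completed[key] = 0


# Self-limiting: two transactions per 8-cycle window.
throttle = WindowThrottle(max_txns=2, window=8)
print([throttle.try_issue(c) for c in range(10)])
# [True, True, False, False, False, False, False, False, True, True]

# Synchronization: initiator "1" must wait for initiator "2" before issuing again.
coord = RoundCoordinator(["1", "2"], quota=1)
print(coord.try_issue("1"))  # True
coord.complete("1")
print(coord.try_issue("1"))  # False: the round is still open for "2"
print(coord.try_issue("2"))  # True
coord.complete("2")          # both are done, so a new round begins
print(coord.try_issue("1"))  # True
```

The throttle needs only local state, so each initiator can enforce it independently. The round-based coordinator gives strict fairness at the cost of coupling: in this sketch, an initiator that never completes its quota stalls every other initiator.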