Single Program Multiple Data

This section describes the concepts and objects that support distributed computations using the Single Program Multiple Data (SPMD) model.

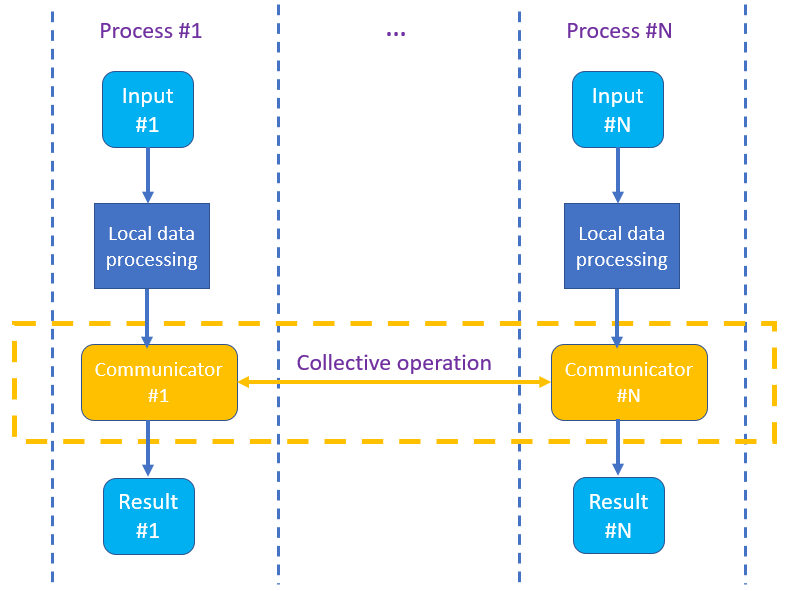

Distributed computation using the SPMD model

In a typical usage scenario, the user passes a communicator object as the first parameter of a free function to indicate that the algorithm can process data simultaneously across processes. All inter-process communication at synchronization points is hidden from the user.

The general expectation is that the input dataset is distributed among processes. Results are distributed in accordance with the input.
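
The pattern described above (each process works on its own part of the data, and a hidden synchronization point combines the partial results) can be sketched as a single-process simulation. This sketch does not use the library's communicator API: `partial`, `local_partial`, `simulated_allreduce`, and `distributed_mean` are hypothetical names chosen for illustration, and the "ranks" are plain vector entries rather than real processes.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Per-rank partial result: local sum and local element count.
// (Hypothetical type, not part of any library API.)
struct partial {
    double sum = 0.0;
    std::size_t count = 0;
};

// Step 1: each rank reduces only its own chunk of the dataset.
partial local_partial(const std::vector<double>& chunk) {
    partial p;
    for (double v : chunk) {
        p.sum += v;
    }
    p.count = chunk.size();
    return p;
}

// Step 2 (simulated sync point): combine the partials of all ranks and
// hand the same combined value back to every rank, as an allreduce would.
std::vector<partial> simulated_allreduce(const std::vector<partial>& per_rank) {
    partial total;
    for (const partial& p : per_rank) {
        total.sum += p.sum;
        total.count += p.count;
    }
    return std::vector<partial>(per_rank.size(), total);
}

// Runs the same program once per simulated rank and returns the global
// mean as seen by each rank.
std::vector<double> distributed_mean(
    const std::vector<std::vector<double>>& chunks_by_rank) {
    std::vector<partial> partials;
    for (const auto& chunk : chunks_by_rank) {
        partials.push_back(local_partial(chunk));
    }
    const std::vector<partial> combined = simulated_allreduce(partials);
    std::vector<double> means;
    for (const partial& p : combined) {
        assert(p.count > 0 && "the distributed dataset must not be empty");
        means.push_back(p.sum / static_cast<double>(p.count));
    }
    return means;
}
```

In a real SPMD run each process would hold only its own chunk and the combination step would happen inside the algorithm call, so the caller would never see `simulated_allreduce` or anything like it.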

Supported Collective Operations

The following collective operations are supported:

bcast: Broadcasts data from a specified process.

allreduce: Reduces data among all processes.

allgatherv: Gathers data from all processes and shares the result among all processes.

sendrecv_replace: Sends and receives data using a single buffer.
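
To make the data movement of these four operations concrete, here is a minimal single-process simulation of their semantics. It is only a sketch under stated assumptions: the `sim_*` functions and the `rank_buffers` type are hypothetical and are not the library's interface, the reduction is assumed to be a sum, and the ring-shaped exchange used for `sendrecv_replace` is just one possible pairing of senders and receivers.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using buffer = std::vector<int>;
// bufs[r] is the buffer owned by simulated rank r.
using rank_buffers = std::vector<buffer>;

// bcast: every rank ends up with a copy of the root's buffer.
void sim_bcast(rank_buffers& bufs, std::size_t root) {
    assert(root < bufs.size());
    const buffer root_data = bufs[root];
    for (buffer& b : bufs) {
        b = root_data;
    }
}

// allreduce (sum assumed as the reduction): buffers are combined
// element-wise and every rank receives the combined result.
void sim_allreduce_sum(rank_buffers& bufs) {
    if (bufs.empty()) return;
    buffer total(bufs[0].size(), 0);
    for (const buffer& b : bufs) {
        assert(b.size() == total.size());
        for (std::size_t i = 0; i < b.size(); ++i) {
            total[i] += b[i];
        }
    }
    for (buffer& b : bufs) {
        b = total;
    }
}

// allgatherv: per-rank contributions of possibly different lengths are
// concatenated in rank order, and every rank receives the full result.
rank_buffers sim_allgatherv(const rank_buffers& send) {
    buffer gathered;
    for (const buffer& b : send) {
        gathered.insert(gathered.end(), b.begin(), b.end());
    }
    return rank_buffers(send.size(), gathered);
}

// sendrecv_replace: each rank sends its buffer to one peer and receives
// into the same buffer from another peer. The ring shift used here
// (send to rank + 1, receive from rank - 1) is an assumed pattern.
void sim_sendrecv_replace_ring(rank_buffers& bufs) {
    if (bufs.empty()) return;
    const rank_buffers old = bufs;
    const std::size_t n = bufs.size();
    for (std::size_t r = 0; r < n; ++r) {
        bufs[(r + 1) % n] = old[r];
    }
}
```

For example, with three simulated ranks holding `{10}`, `{20}`, and `{30}`, the ring exchange leaves them holding `{30}`, `{10}`, and `{20}`: each buffer has been overwritten in place by the data received from the previous rank.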

Backend-Specific Restrictions

oneCCL: allgatherv does not support arbitrary displacements. The result is expected to be packed contiguously, without gaps between the blocks received from different processes.

oneMPI: Collective operations in oneMPI do not support asynchronous execution. They block the calling process until completion.
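
As an illustration of the oneCCL restriction above, a gap-free layout means that the displacement of each process's block equals the total size of all preceding blocks, that is, an exclusive prefix sum of the receive counts. The sketch below computes and checks such a layout. `packed_displacements` and `is_closely_packed` are hypothetical helper names, not library functions, and the sketch assumes blocks are laid out in rank order.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// The displacement of rank r is the sum of the counts of ranks 0..r-1
// (an exclusive prefix sum), so consecutive blocks sit back to back.
std::vector<std::size_t> packed_displacements(
    const std::vector<std::size_t>& recv_counts) {
    std::vector<std::size_t> displs(recv_counts.size());
    std::size_t offset = 0;
    for (std::size_t r = 0; r < recv_counts.size(); ++r) {
        displs[r] = offset;
        offset += recv_counts[r];
    }
    return displs;
}

// Returns true when the given displacements describe a layout in rank
// order with no gaps and no overlaps.
bool is_closely_packed(const std::vector<std::size_t>& recv_counts,
                       const std::vector<std::size_t>& displs) {
    if (recv_counts.size() != displs.size()) {
        return false;
    }
    return displs == packed_displacements(recv_counts);
}
```

With receive counts `{3, 0, 2, 4}`, the packed displacements are `{0, 3, 3, 5}`. A layout such as `{0, 4, 4, 6}` leaves a one-element gap after the first block and would fall outside what the restriction allows.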