How it Works

The Edge Insights for Autonomous Mobile Robots (EI for AMR) modules are deployed via Docker* containers for enhanced Developer Experience (DX), support of Continuous Integration and Continuous Deployment (CI/CD) practices and flexible deployment in different execution environments, including robot, development PC, server, and cloud.

This section provides an overview of the modules and services featured with Edge Insights for Autonomous Mobile Robots.

Modules and Services

The middleware layered architecture in the Intel® oneAPI Base Toolkit (Base Kit) and Intel® Distribution of OpenVINO™ toolkit (OpenVINO™) abstracts hardware dependencies from the algorithm implementation.

The ROS 2 with data distribution service (DDS) is used as a message bus. This Publisher-Subscriber architecture based on ROS 2 topics decouples data providers from consumers.

Camera and LIDAR sensor data is abstracted with ROS 2 topics.

Video streaming processing pipelines are supported by GStreamer*. It decouples sensor ingestion, video processing and AI object detection via OpenVINO™ toolkit DL Streamer framework.

Also, more complex computational graphs that decouple Sense-Plan-Act autonomous mobile robot applications can be implemented using ROS 2 topic registration.

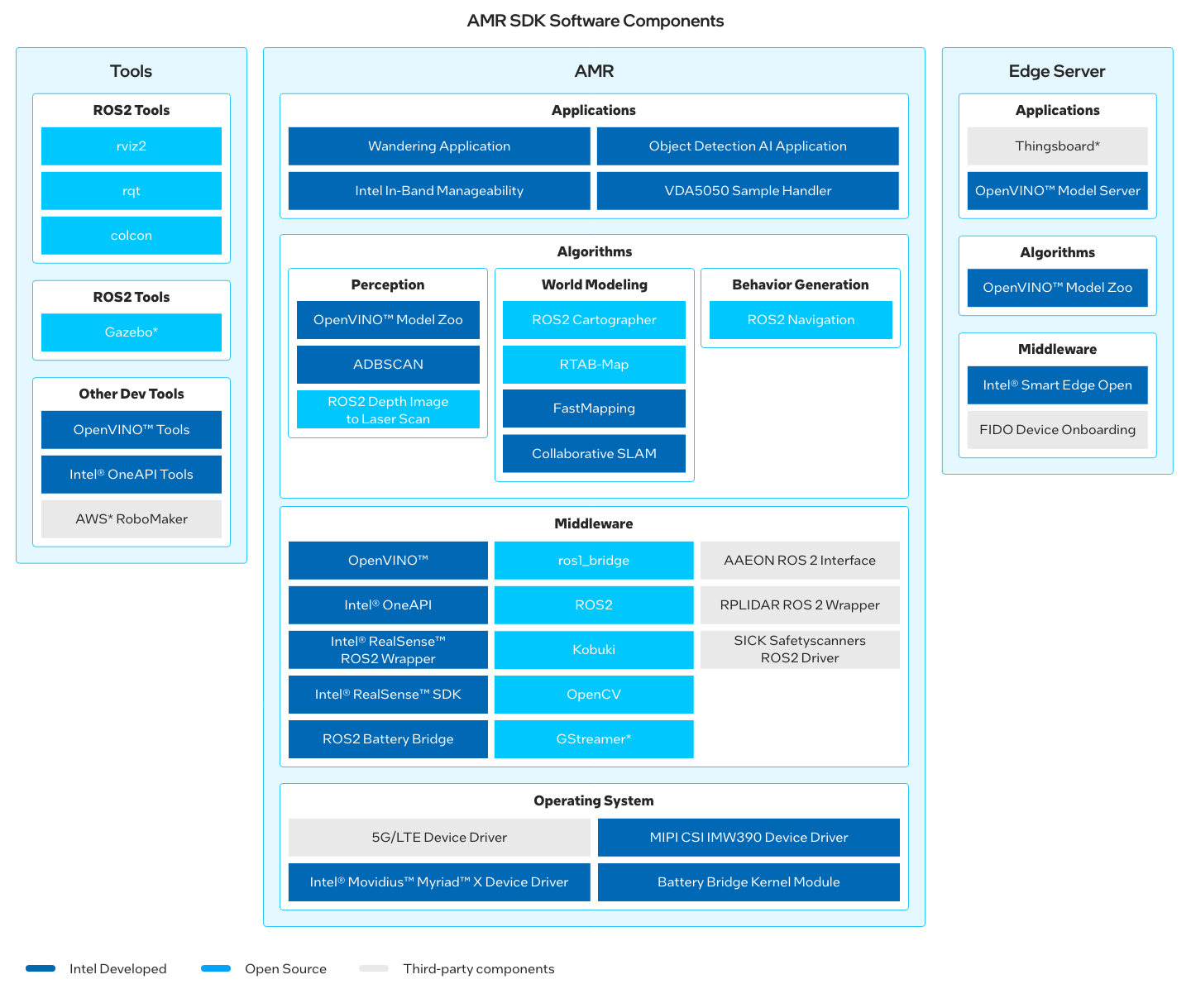

This diagram shows the software components included in the EI for AMR package. The software stack keeps evolving iteratively with additional algorithms, applications, and third-party ecosystem software components.

The EI for AMR software stack is based on software supported by and part of the underlying hardware platform, their respective Unified Extensible Firmware Interface (UEFI) based boot, and their supported Linux* operating system. For requirement details, see:

EI for AMR Drivers

Edge Insights for Autonomous Mobile Robots relies on standard Intel® Architecture Linux* drivers included and upstreamed in the Linux* kernel from kernel.org and included in Ubuntu* distributions. These drivers are not included in the EI for AMR package. Some notable drivers that are specifically important for EI for AMR include:

5G/LTE Device Drivers for 5G and LTE connectivity.

Battery Bridge Kernel Module, which allows user-space applications to feed battery and power information into the Linux kernel’s power supply subsystem. It has been designed to be used together with the ROS 2 Battery Bridge to allow ROS 2-based AMR software stacks to forward battery information from an AMR’s microcontroller into the Linux kernel.

MIPI CSI IMX390 Device Driver, for cameras that are using the Sony* IMX390 sensor and are connected to a Tiger Lake platform SoC via a MIPI CSI connection.

Device Drivers for Intel® Movidius™ Myriad™ X VPUs.

EI for AMR Middleware

EI for AMR integrates the following middleware packages on AMRs:

AAEON* ROS 2 interface, the ROS 2 driver node for AAEON AMRs

GStreamer*, which includes support for libv4l2 video sources, GStreamer* “good” plugins for video and audio, and GStreamer* plugin for display to display a video stream in a window

Kobuki, the ROS 2 driver node for Cogniteam’s Pengo AMRs

Intel® oneAPI Base Toolkit, which is able to execute Intel® oneAPI Base Toolkit sample applications. The Intel® oneAPI Base Toolkit is a core set of tools and libraries for developing high-performance, data-centric applications across diverse architectures. It features an industry-leading C++ compiler and the Data Parallel C++ (DPC++) language, an evolution of C++ for heterogeneous computing. For Intel® oneAPI Base Toolkit training and a presentation of the CUDA* converter, refer to:

OpenCV (Open Source Computer Vision Library), an open-source library that includes several hundred computer vision algorithms

Intel® Distribution of OpenVINO™ toolkit, which is a comprehensive toolkit for quickly developing applications and solutions that solve a variety of tasks including emulation of human vision, automatic speech recognition, natural language processing, recommendation systems, and many others. Based on latest generations of artificial neural networks, including Convolutional Neural Networks (CNNs), recurrent and attention-based networks, the toolkit extends computer vision and non-vision workloads across Intel hardware, maximizing performance. It accelerates applications with high-performance, AI and deep learning inference deployed from edge to cloud.

Intel® RealSense™ ROS 2 Wrapper node, used for Intel® RealSense™ cameras with ROS 2

Intel® RealSense™ SDK, used to implement software for Intel® RealSense™ cameras

ROS 2 ros1_bridge, which provides a network bridge allowing the exchange of messages between ROS1 and ROS 2. This lets users evaluate the EI for AMR SDK on AMRs or with sensors for which only ROS1 driver nodes exist.

ROS 2, Robot Operating System (ROS), which is a set of open source software libraries and tools for building robot applications

ROS 2 depends on other middleware, like the Object Management Group (OMG) Data Distribution Service (DDS) connectivity framework that is using a publish-subscribe pattern. The standard ROS 2 distribution includes eProsima Fast DDS implementation.

ROS 2 Battery Bridge, which utilizes the Battery Bridge Kernel Module to forward battery information from an AMR’s microcontroller into the Linux kernel

RPLIDAR ROS 2 Wrapper node, for using RPLIDAR LIDAR sensors with ROS 2

SICK Safetyscanners ROS 2 Driver, which reads the raw data from the SICK Safety Scanners and publishes the data as a laser_scan msg

EI for AMR Algorithms

Edge Insights for Autonomous Mobile Robots includes reference algorithms as well as deep learning models as working examples for the following automated robot control functional areas:

DBSCAN (Density-Based Spatial Clustering of Applications with Noise) is an unsupervised clustering algorithm that clusters high dimensional points based on their distribution density. Adaptive DBSCAN (ADBSCAN) has clustering parameters that are adaptive based on range and are especially suitable for processing LIDAR data. It improves the object detection range by 20-30% on average.

Collaborative SLAM, a collaborative visual simultaneous localization and mapping (SLAM) framework for service robots. With an edge server maintaining a map database and performing global optimization, each robot can register to an existing map, update the map, or build new maps, all with a unified interface and low computation and memory cost. The Collaborative SLAM system consists of at least two elements:

The tracker is a visual SLAM system with support for inertial and odometry input. It estimates the camera pose in real-time, and maintains a local map. It can work without a server, but if it has one configured, it will communicate with the server to query and update the map. The tracker represents a robot. There can be multiple trackers running at the same time.

The server maintains the maps and communicates with all trackers. For each new keyframe from a tracker, it detects possible loops, both intra-map and inter-map. Once detected, the server will perform map optimization or map merging, and distribute the updated map to corresponding trackers.

For collaborative SLAM details, refer to A Collaborative Visual SLAM Framework for Service Robots paper.

FastMapping, which is an algorithm to create a 3D voxel map of a robot’s surrounding, based on Intel® RealSense™ depth sensor data.

OpenVINO™ Model Zoo, optimized deep learning models and a set of demos to expedite development of high-performance deep learning inference applications. A developer can use these pre-trained models instead of training their own models to speed-up the development and production deployment process.

ROS 2 Cartographer, a system that provides real-time simultaneous localization and mapping (SLAM) based on real-time 2D LIDAR sensor data. It is used to generate as-built floor plans in the form of occupancy grids.

ROS 2 Depth Image to Laser Scan, which converts a depth image to a laser scan for use with navigation and localization.

ROS 2 Navigation stack, which seeks a safe way to have a mobile robot move from point A to point B. This will complete dynamic path planning, compute velocities for motors, detect and avoid obstacles, and structure recovery behaviors. Nav2 uses behavior trees to call modular servers to complete an action. An action can be to compute a path, control effort, recovery, or any other navigation related action. These are separate nodes that communicate with the behavior tree (BT) over a ROS action server.

RTAB-Map (Real-Time Appearance-Based Mapping), a RGB-D, Stereo and Lidar Graph-Based SLAM approach based on an incremental appearance-based loop closure detector. The loop closure detector uses a bag-of-words approach to determinate how likely a new image comes from a previous location or a new location. When a loop closure hypothesis is accepted, a new constraint is added to the map’s graph, then a graph optimizer minimizes the errors in the map. A memory management approach is used to limit the number of locations used for loop closure detection and graph optimization, so that real-time constraints on large-scale environnements are always respected. RTAB-Map can be used alone with a handheld Kinect, a stereo camera or a 3D lidar for 6DoF mapping, or on a robot equipped with a laser rangefinder for 3DoF mapping.

EI for AMR Applications

Intel In-Band Manageability, monitors device(s) and updates software and firmware of the device(s) remotely.

Object Detection AI Application, detects objects in video data using a deep learning neural network model from the OpenVINO™ Model Zoo.

VDA5050 Sample Handler, processes selected commands from the VDA5050 AMR/AGV interoperability standard and forwards the AMR’s software components for autonomous navigation.

Wandering Application, included in the EI for AMR SDK to demonstrate the combination of the middleware, algorithms, and the ROS 2 navigation stack to move a robot around a room avoiding hitting obstacles, updating a local map in real time exposed as ROS topic, and publish AI-based objects detected in another ROS topic. It uses the robot’s sensors and actuators that are available from the robot’s hardware configuration.

Edge Server Middleware

FIDO Device Onboarding, an automatic onboarding protocol for IoT devices. Permits late binding of device credentials, so that one manufactured device may onboard, without modification, to many different IoT platforms.

Intel® Smart Edge Open, a software toolkit for building edge platforms. It speeds up development of edge solutions that host network functions alongside AI, media processing, and security workloads with reference solutions optimized for common use cases powered by a Certified Kubernetes* cloud native stack.

Edge Server Algorithms

OpenVINO™ Model Zoo, which includes optimized deep learning models and a set of demos to expedite development of high-performance deep learning inference applications. A developer can use these pre-trained models instead of training their own models to speed-up the development and production deployment process.

Edge Server Applications

OpenVINO™ Model Server (OVMS), a high-performance system for serving machine learning models. It is based on C++ for high scalability and optimized for Intel solutions, so that you can take advantage of all the power of the Intel® Xeon® processor or Intel’s AI accelerators and expose it over a network interface. OVMS uses the same architecture and API as TensorFlow Serving, while applying OpenVINO for inference execution. Inference service is provided via gRPC or REST API, making it easy to deploy new algorithms and AI experiments.

ThingsBoard*, an open-source IoT platform for data collection, processing, visualization, and device management. It enables device connectivity via industry standard IoT protocols - MQTT, CoAP and HTTP and supports both cloud and on-premises deployments.

Tools

ROS Tools

Edge Insights for Autonomous Mobile Robots is validated using ROS 2 nodes. ROS 1 is not compatible with EI for AMR components. A ROS 1 bridge is included to allow EI for AMR components to interface with ROS 1 components.

From the hardware perspective of the supported platforms, there are no known limitations for ROS 1 components.

For information on porting ROS 1 applications to ROS 2, here is a guide from the ROS community.

Edge Insights for Autonomous Mobile Robots includes:

colcon (collective construction), a command line tool to improve the workflow of building, testing, and using multiple software packages. It automates the process, handles the ordering, and sets up the environment to use the packages.

rqt, a software framework of ROS 2 that implements the various GUI tools in the form of plugins.

rviz2, a tool used to visualize ROS 2 topics.

Simulation

Edge Insights for Autonomous Mobile Robots includes:

The Gazebo* robot simulator, making it possible to rapidly test algorithms, design robots, perform regression testing, and train AI systems using realistic scenarios. Gazebo offers the ability to simulate populations of robots accurately and efficiently in complex indoor and outdoor environments.

An industrial simulation room model for Gazebo*, the Open Source Robotics Foundation (OSRF) Gazebo Environment for Agile Robotics (GEAR) workcell that was used for the ARIAC competition in 2018.

Other Tools

Edge Insights for Autonomous Mobile Robots includes:

Intel® oneAPI Base Toolkit, which includes the DPC++ compiler and compatibility tool, as well as debugging and profiling tools like VTune™ Profiler, etc. (formerly known as Intel System Studio).

OpenVINO™ Tools, including the model optimization tool.

Deployment

All application, algorithm, and middleware components which are executed as standalone processes are deployed in their own Docker container. This allows to selectively pull these components onto an AMR or Edge Server and launch them there.

For development purposes, the middleware libraries and all tools are deployed in a single container called Full SDK. This container is constructed hierarchically by extending the OpenVINO SDK container, which itself extends the ROS2 SDK container. For storage space savings, you can choose to run any of the containers depending on the needs of your application.

The ROS2 SDK container includes the ROS 2 middleware and tools, Intel® RealSense™ SDK and ROS 2 wrapper, GStreamer* and build tools, ROS 2 packages (Cartographer, Navigation, RTAB_MAP) and the Fast Mapping application (the Intel-optimized version of octomap).

The OpenVINO SDK container includes the ROS2 SDK, as well as the OpenVINO™ development toolkit, the OpenVINO™ DL GStreamer* plugins and the Wandering demonstration application.

The Full SDK container includes the OpenVINO™ container, as well as the Intel® oneAPI Base Toolkit, the Data Parallel C++ (DPC++) compatibility tool and profiler, analyzer tools.