Intel® Integrated Performance Primitives (Intel® IPP) Developer Guide and Reference


Cache Optimizations

To get better performance, group work so that it takes advantage of data locality in the lowest (fastest) cache level possible. The same principle applies to both threading and cache-blocking optimizations.

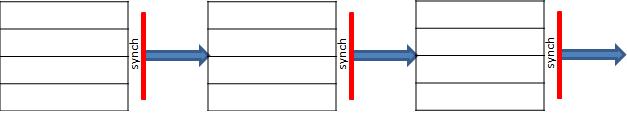

For example, when the operations on each pixel in an image processing pipeline are independent, it is common to process the entire image in one step before moving on to the next step. This approach can cause several inefficiencies, as shown in the figure.

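In this case, by the time a step finishes a large image, the data the next step needs first has already been evicted from the cache, so it must be re-read from memory. If threading is used, there are also more synchronization points (barriers) than the algorithm actually requires, because every thread must finish a step on the whole image before any thread can start the next step.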
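The following minimal sketch illustrates the unblocked pattern described above, using plain C rather than Intel IPP calls. The two steps (`add_const_sat` and `threshold`), the constants 40 and 128, and `pipeline_full_image` are hypothetical names and values chosen for illustration; the image is assumed to be 8-bit single-channel with a row stride in bytes, in the spirit of the pointer/step/ROI convention IPP image functions use.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical step 1: add a constant to every pixel, saturating to [0, 255]. */
static void add_const_sat(uint8_t *img, int width, int height, ptrdiff_t stride, int value)
{
    for (int y = 0; y < height; ++y) {
        uint8_t *row = img + (ptrdiff_t)y * stride;
        for (int x = 0; x < width; ++x) {
            int v = row[x] + value;
            row[x] = (uint8_t)(v < 0 ? 0 : (v > 255 ? 255 : v));
        }
    }
}

/* Hypothetical step 2: binary threshold (>= level becomes 255, else 0). */
static void threshold(uint8_t *img, int width, int height, ptrdiff_t stride, uint8_t level)
{
    for (int y = 0; y < height; ++y) {
        uint8_t *row = img + (ptrdiff_t)y * stride;
        for (int x = 0; x < width; ++x)
            row[x] = (uint8_t)(row[x] >= level ? 255 : 0);
    }
}

/* Unblocked pipeline: each step sweeps the whole image. For an image larger
   than the cache, the rows written first by step 1 are evicted before step 2
   reads them, so step 2 has to fetch them from memory again. */
void pipeline_full_image(uint8_t *img, int width, int height, ptrdiff_t stride)
{
    add_const_sat(img, width, height, stride, 40);
    /* With threads, this point is a barrier: every thread must finish
       step 1 on its part of the image before step 2 can start. */
    threshold(img, width, height, stride, 128);
}
```

Padding bytes between `width` and `stride` are left untouched, so the functions work on a sub-image (ROI) of a larger buffer as well as on a whole image.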

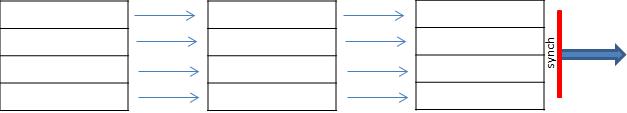

You can get better performance by combining steps on local data, as shown in the figure. In this case, each thread or cache-blocking iteration operates on regions of interest (ROIs) rather than on the full image.
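A minimal sketch of the combined approach, again in plain C and assuming an 8-bit single-channel image: the image is split into horizontal strips, and both steps run on one strip (ROI) before moving to the next. The step functions, constants, and `pipeline_strips` are hypothetical names for illustration, not IPP APIs. Because the steps are per-pixel, the result is identical to running each step over the full image.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical step 1 on an ROI of `rows` rows: saturating add of a constant. */
static void add_const_sat_roi(uint8_t *roi, int width, int rows, ptrdiff_t stride, int value)
{
    for (int y = 0; y < rows; ++y) {
        uint8_t *row = roi + (ptrdiff_t)y * stride;
        for (int x = 0; x < width; ++x) {
            int v = row[x] + value;
            row[x] = (uint8_t)(v < 0 ? 0 : (v > 255 ? 255 : v));
        }
    }
}

/* Hypothetical step 2 on an ROI: binary threshold. */
static void threshold_roi(uint8_t *roi, int width, int rows, ptrdiff_t stride, uint8_t level)
{
    for (int y = 0; y < rows; ++y) {
        uint8_t *row = roi + (ptrdiff_t)y * stride;
        for (int x = 0; x < width; ++x)
            row[x] = (uint8_t)(row[x] >= level ? 255 : 0);
    }
}

/* Cache-blocked pipeline: both steps run on one strip of `strip_rows` rows
   while that strip is still in cache. Strips are independent, so they could
   also be distributed across threads with no barrier between the steps. */
void pipeline_strips(uint8_t *img, int width, int height, ptrdiff_t stride, int strip_rows)
{
    if (strip_rows < 1)
        strip_rows = 1;
    for (int y = 0; y < height; y += strip_rows) {
        int rows = (height - y < strip_rows) ? height - y : strip_rows; /* last strip may be shorter */
        uint8_t *roi = img + (ptrdiff_t)y * stride;
        add_const_sat_roi(roi, width, rows, stride, 40);
        threshold_roi(roi, width, rows, stride, 128);
    }
}
```

The strip height is the tuning knob: it should be small enough that one strip's data stays in the target cache level between the two steps.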

Subdivide work into smaller regions sized according to the cache, especially for very large images or buffers.
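One way to turn cache size into a region size is sketched below. The helper `strip_rows_for_cache` is hypothetical, and the heuristic of using only half of the cache (leaving room for other data) is an assumption, not an IPP recommendation; the real cache size should be queried for the target CPU rather than hard-coded.

```c
#include <stddef.h>

/* Hypothetical helper: number of rows per strip so that one strip's working
   set fits in a cache budget.
   cache_bytes : size of the target cache level (assumed known)
   row_bytes   : bytes per row of one buffer (width * bytes per pixel)
   num_buffers : buffers touched per strip (1 for in-place, 2 for src + dst)
   height      : image height; the result never exceeds it */
int strip_rows_for_cache(size_t cache_bytes, size_t row_bytes, size_t num_buffers, int height)
{
    if (height < 1)
        return 0;
    size_t budget = cache_bytes / 2;          /* heuristic: use half the cache */
    size_t per_row = row_bytes * num_buffers; /* bytes touched per strip row */
    size_t rows = per_row ? budget / per_row : (size_t)height;
    if (rows < 1)
        rows = 1;                             /* always make progress */
    if (rows > (size_t)height)
        rows = (size_t)height;
    return (int)rows;
}
```

For example, with an assumed 256 KiB cache, 4096-byte rows, and separate source and destination buffers, the helper returns 16 rows per strip; the result can then serve as the strip height in a strip-by-strip processing loop.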