Softmax

General

The softmax primitive performs a forward or backward softmax or logsoftmax operation along a particular axis of data with arbitrary dimensions. All other axes are treated as independent (batch) dimensions.

Forward

In general form, the operation is defined by the following formulas (the variable names follow the standard Naming Conventions).

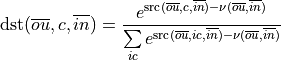

Softmax:

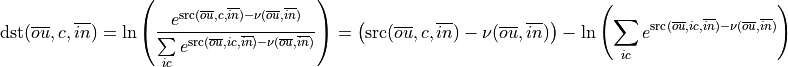

Logsoftmax:

Above

is the axis over which the operation is computed on,

is the axis over which the operation is computed on, is the outermost index (to the left of the axis),

is the outermost index (to the left of the axis), is the innermost index (to the right of the axis), and

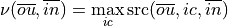

is the innermost index (to the right of the axis), and is used to produce numerically stable results and defined as:

is used to produce numerically stable results and defined as:
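The forward computation can be sketched as a plain C++ reference loop. This is a minimal sketch, not the oneDNN implementation: `softmax_ref` is a hypothetical helper, and it assumes a dense row-major tensor viewed as [outer, channels, inner], with the maximum along the softmax axis subtracted before exponentiation for numerical stability.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Reference softmax / logsoftmax over a dense row-major tensor viewed as
// [outer, channels, inner]. Hypothetical helper, not a oneDNN function.
std::vector<float> softmax_ref(const std::vector<float> &src,
        std::size_t outer, std::size_t channels, std::size_t inner,
        bool log_softmax) {
    assert(channels > 0);
    assert(src.size() == outer * channels * inner);
    std::vector<float> dst(src.size());
    for (std::size_t ou = 0; ou < outer; ++ou) {
        for (std::size_t in = 0; in < inner; ++in) {
            // Flat offset of element (ou, c, in).
            auto at = [&](std::size_t c) {
                return (ou * channels + c) * inner + in;
            };
            // Maximum along the softmax axis for this (ou, in) pair.
            float nu = src[at(0)];
            for (std::size_t c = 1; c < channels; ++c)
                nu = std::max(nu, src[at(c)]);
            // Denominator: sum of the shifted exponentials.
            float sum = 0.f;
            for (std::size_t c = 0; c < channels; ++c)
                sum += std::exp(src[at(c)] - nu);
            for (std::size_t c = 0; c < channels; ++c) {
                const float shifted = src[at(c)] - nu;
                dst[at(c)] = log_softmax ? shifted - std::log(sum)
                                         : std::exp(shifted) / sum;
            }
        }
    }
    return dst;
}
```

Because the maximum is subtracted first, inputs as large as 1000 do not overflow the exponential; every (ou, in) pair is processed independently, which is what treating the other axes as batch dimensions means in practice.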

Difference Between Forward Training and Forward Inference

There is no difference between the dnnl_forward_training and dnnl_forward_inference propagation kinds.

Backward

The backward propagation computes $\mathrm{diff\_src}(ou, c, in)$ based on $\mathrm{diff\_dst}(ou, c, in)$ and $\mathrm{dst}(ou, c, in)$.
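The page does not spell out the backward formulas, so the following is a minimal sketch that assumes the standard softmax and logsoftmax gradients expressed in terms of the forward output. `softmax_bwd_ref` is a hypothetical helper, and the tensor is again assumed to be dense row-major, viewed as [outer, channels, inner].

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Reference backward pass (assumed standard gradients, not oneDNN code):
//   softmax:    diff_src = dst * (diff_dst - sum_ic(diff_dst * dst))
//   logsoftmax: diff_src = diff_dst - exp(dst) * sum_ic(diff_dst)
std::vector<float> softmax_bwd_ref(const std::vector<float> &dst,
        const std::vector<float> &diff_dst, std::size_t outer,
        std::size_t channels, std::size_t inner, bool log_softmax) {
    assert(dst.size() == outer * channels * inner);
    assert(diff_dst.size() == dst.size());
    std::vector<float> diff_src(dst.size());
    for (std::size_t ou = 0; ou < outer; ++ou) {
        for (std::size_t in = 0; in < inner; ++in) {
            // Flat offset of element (ou, c, in).
            auto at = [&](std::size_t c) {
                return (ou * channels + c) * inner + in;
            };
            // Reduction along the softmax axis.
            float sum = 0.f;
            for (std::size_t c = 0; c < channels; ++c) {
                const std::size_t i = at(c);
                sum += log_softmax ? diff_dst[i] : diff_dst[i] * dst[i];
            }
            for (std::size_t c = 0; c < channels; ++c) {
                const std::size_t i = at(c);
                diff_src[i] = log_softmax
                        ? diff_dst[i] - std::exp(dst[i]) * sum
                        : dst[i] * (diff_dst[i] - sum);
            }
        }
    }
    return diff_src;
}
```

Only dst and diff_dst are read, which matches the inputs listed for backward propagation; the original src is not needed.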

Execution Arguments

When executed, the inputs and outputs should be mapped to an execution argument index as specified by the following table.

| Primitive input/output | Execution argument index |
|---|---|
| src | DNNL_ARG_SRC |
| dst | DNNL_ARG_DST |
| diff_src | DNNL_ARG_DIFF_SRC |
| diff_dst | DNNL_ARG_DIFF_DST |
| src scale | DNNL_ARG_ATTR_SCALES \| DNNL_ARG_SRC |
| dst scale | DNNL_ARG_ATTR_SCALES \| DNNL_ARG_DST |
| binary post-op | DNNL_ARG_ATTR_MULTIPLE_POST_OP(binary_post_op_position) \| DNNL_ARG_SRC_1 |

Implementation Details

General Notes

Both forward and backward propagation support in-place operations, meaning that src can be used as input and output for forward propagation, and diff_dst can be used as input and output for backward propagation. In case of in-place operation, the original data will be overwritten. This support is limited to cases when data types of src / dst or diff_src / diff_dst are identical.

Post-ops and Attributes

Attributes enable you to modify the behavior of the softmax primitive. The following attributes are supported by the softmax primitive:

| Propagation | Type | Operation | Description | Restrictions |
|---|---|---|---|---|
| forward | attribute | Scales | Scales the corresponding tensor by the given scale factor(s). | Supported only for int8 softmax; only one scale per tensor is supported. |
| forward | post-op | Binary | Applies a Binary operation to the result. | General binary post-op restrictions |
| forward | post-op | Eltwise | Applies an Eltwise operation to the result. | |
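To make the effect of per-tensor scales on an int8 softmax concrete, here is a minimal sketch. It rests on assumptions the page does not state: the source scale multiplies the s8 input, the destination scale divides the floating-point result, and the quantized value is rounded to nearest and saturated to the u8 range. `softmax_s8u8_ref` is a hypothetical helper that handles a single row.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical reference for one row: s8 source, u8 destination,
// one scale per tensor. The scale semantics are an assumption.
std::vector<std::uint8_t> softmax_s8u8_ref(
        const std::vector<std::int8_t> &src, float src_scale,
        float dst_scale) {
    assert(!src.empty() && dst_scale > 0.f);
    // Dequantize the source with its single scale.
    std::vector<float> x(src.size());
    for (std::size_t i = 0; i < src.size(); ++i)
        x[i] = src_scale * static_cast<float>(src[i]);
    // Numerically stable softmax in f32.
    const float nu = *std::max_element(x.begin(), x.end());
    float sum = 0.f;
    for (float v : x)
        sum += std::exp(v - nu);
    // Requantize: divide by the destination scale, round, saturate.
    std::vector<std::uint8_t> dst(src.size());
    for (std::size_t i = 0; i < src.size(); ++i) {
        const float q = std::nearbyint(std::exp(x[i] - nu) / sum / dst_scale);
        dst[i] = static_cast<std::uint8_t>(std::min(255.f, std::max(0.f, q)));
    }
    return dst;
}
```

With a destination scale of 1/255, the probability range [0, 1] maps onto the full u8 range, which is one common choice for softmax outputs.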

Data Type Support

The softmax primitive supports the following combinations of data types:

| Propagation | Source | Destination |
|---|---|---|
| forward | f32, f64, bf16, f16, u8, s8 | f32, bf16, f16, u8, s8 |
| backward | f32, f64, bf16, f16 | f32, bf16, f16 |

Data Representation

Source, Destination, and Their Gradients

The softmax primitive works with arbitrary data tensors. There is no special meaning associated with any logical dimensions. However, the softmax axis is typically referred to as channels (hence the formulas use $c$).

Implementation Limitations

Refer to Data Types for limitations related to data type support.

GPU

Only tensors of 6 or fewer dimensions are supported.

Post-ops are not supported.

Performance Tips

Use in-place operations whenever possible.

Currently the softmax primitive is optimized for the cases where the dimension of the softmax axis is physically dense. For instance:

Optimized: 2D case, tensor $A \times B$, softmax axis 1 (B), format tag dnnl_ab

Optimized: 4D case, tensor $A \times B \times C \times D$, softmax axis 3 (D), format tag dnnl_abcd

Optimized: 4D case, tensor $A \times B \times C \times D$, softmax axis 1 (B), format tag dnnl_abcd, and $C = D = 1$

Optimized: 4D case, tensor $A \times B \times C \times D$, softmax axis 1 (B), format tag dnnl_acdb or dnnl_aBcd16b, and $C \cdot D \ne 1$

Non-optimized: 2D case, tensor $A \times B$, softmax axis 0 (A), format tag dnnl_ab, and $B \ne 1$

Non-optimized: 2D case, tensor $A \times B$, softmax axis 1 (B), format tag dnnl_ba, and $A \ne 1$

Non-optimized: 4D case, tensor $A \times B \times C \times D$, softmax axis 2 (C), format tag dnnl_acdb, and $D \cdot B \ne 1$
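One way to reason about whether the softmax axis is physically dense is to compute its stride in memory: the axis is dense when consecutive elements along it are adjacent, that is, when the stride is 1. The sketch below covers plain (non-blocked) layouts only, so blocked tags such as dnnl_aBcd16b are out of scope. `axis_stride` and `axis_is_dense` are hypothetical helpers, and the `order` vector is an illustrative stand-in for a format tag rather than a oneDNN type.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Stride, in elements, of logical dimension `axis` for a plain layout.
// `order` lists logical dimensions from outermost to innermost in memory,
// for example {0, 1} for "ab", {1, 0} for "ba", {0, 2, 3, 1} for "acdb".
std::size_t axis_stride(const std::vector<std::size_t> &dims,
        const std::vector<std::size_t> &order, std::size_t axis) {
    assert(dims.size() == order.size());
    std::size_t stride = 1;
    // Walk from the innermost physical dimension outwards, accumulating
    // the sizes of all dimensions that sit inside `axis`.
    for (std::size_t i = order.size(); i-- > 0;) {
        if (order[i] == axis) return stride;
        stride *= dims[order[i]];
    }
    assert(false && "axis not present in order");
    return 0;
}

// The axis is physically dense when its stride is exactly one element.
bool axis_is_dense(const std::vector<std::size_t> &dims,
        const std::vector<std::size_t> &order, std::size_t axis) {
    return axis_stride(dims, order, axis) == 1;
}
```

For a 2 x 3 x 4 x 5 tensor in abcd order, axis 3 has stride 1 while axis 1 has stride 20; the same axis 1 becomes dense in acdb order, or in abcd order when the two trailing dimensions are both 1.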

Example

Softmax Primitive Example

This C++ API example demonstrates how to create and execute a Softmax primitive in forward training propagation mode.

Key optimizations included in this example:

In-place primitive execution;

Softmax along axis 1 (C) for 2D tensors.