LeakyReLU

General

The LeakyReLU operation is an activation function based on ReLU. It applies a small slope to negative values, so it produces a small, non-zero, constant gradient for negative inputs instead of a zero gradient. The slope is also called the coefficient of leakage.

Unlike PReLU, the coefficient $\alpha$ is constant and defined before training.
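To illustrate the constant-gradient property described above, here is a minimal sketch of the derivative of LeakyReLU with respect to its input. The helper name `leaky_relu_grad` is hypothetical and is not part of the oneDNN API. The derivative is mathematically undefined at exactly zero, so the choice of 1 at that point is an assumption made for this sketch.

```cpp
// Derivative of LeakyReLU with respect to its input x.
// Hypothetical helper for illustration only; not a oneDNN function.
// For negative x the gradient is the constant alpha (the coefficient of
// leakage); for non-negative x it is 1. Returning 1 at x == 0 is a
// convention assumed here, since the derivative is undefined at that point.
float leaky_relu_grad(float x, float alpha) {
    return x >= 0.0f ? 1.0f : alpha;
}
```

With `alpha = 0.01f`, every negative input yields the same gradient `0.01f` regardless of its magnitude, whereas plain ReLU would yield zero.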

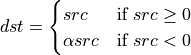

The LeakyReLU operation applies the following formula to every element of the src tensor (the variable names follow the standard Naming Conventions):

$$
dst = \begin{cases} src & \text{if } src \ge 0 \\ \alpha \cdot src & \text{if } src < 0 \end{cases}
$$
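The element-wise behavior can be sketched as a plain reference loop. This is a minimal, non-optimized illustration under the standard LeakyReLU definition (non-negative values pass through, negative values are scaled by alpha). The function name `leaky_relu_ref` is hypothetical, and the code does not use or represent the oneDNN implementation.

```cpp
#include <cstddef>
#include <vector>

// Reference LeakyReLU over a flat buffer of f32 values.
// Hypothetical helper for illustration only; not a oneDNN function.
// dst[i] = src[i]          if src[i] >= 0
// dst[i] = alpha * src[i]  otherwise
std::vector<float> leaky_relu_ref(const std::vector<float> &src, float alpha) {
    std::vector<float> dst(src.size());
    for (std::size_t i = 0; i < src.size(); ++i) {
        dst[i] = src[i] >= 0.0f ? src[i] : alpha * src[i];
    }
    return dst;
}
```

For example, calling `leaky_relu_ref({-2.0f, 0.0f, 3.0f}, 0.5f)` scales only the negative element and leaves the others unchanged. The output has the same number of elements as the input, matching the single src and single dst arguments of the operation.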

Operation attributes

| Attribute Name | Description | Value Type | Supported Values | Required or Optional |
|---|---|---|---|---|
| alpha | Alpha is the coefficient of leakage. | f32 | Arbitrary f32 value, but usually a small positive value. | Required |

Execution arguments

When constructing an operation, the inputs and outputs must be provided in the index order given below.

Inputs

| Index | Argument Name | Required or Optional |
|---|---|---|
| 0 | src | Required |

Outputs

| Index | Argument Name | Required or Optional |
|---|---|---|
| 0 | dst | Required |

Supported data types

The LeakyReLU operation supports the following data type combinations.

| Src | Dst |
|---|---|
| f32 | f32 |
| bf16 | bf16 |
| f16 | f16 |