oneAPI has come a long way. Five years ago, in November 2019, Bill Savage (then VP, Intel Architecture, Graphics and Software and GM Compute Performance and Developer Products) announced the creation of the oneAPI industry initiative at SC19 in Denver, Colorado.

The latest 2025.0 release of the Intel® Developer Tools represents our tremendous progress on oneAPI’s promise of an open cross-architecture software development platform for highly parallel accelerated Edge, AI, and HPC compute which scales without vendor lock-in.

You can find the complete detailed news update listing all the key feature improvements at this link:

→ The Intel® Software Development Tools 2025.0 Are Here

Our current product releases put an emphasis on strengthening developer productivity through a wholehearted embrace of open industry standards like LLVM*, SPIR-V*, OpenMP*, SYCL*, Fortran*, MPI*, and Python*. We focus on expanding support for the latest parallel programming extensions, optimizations, and tuning for the latest AI and compute platforms. We always pursue these improvements with productivity, software scaling, maintainability, and flexibility in mind.

The result is a comprehensive software development stack covering everything from parallel hardware runtimes to Fortran, C/C++, and Python tools to data analytics, ML and AI models and frameworks.

Figure 1: Software Development Stack

Of course, this software development tools stack includes optimized support for the latest Intel platforms: Intel® Xeon® 6 processors with E-cores, Intel® Xeon® 6 processors with P-cores and Intel® Core™ Ultra processors (Series 2) with integrated GPU and NPU.

Five Years of oneAPI: The Movement Evolves

The vision of oneAPI is to provide a comprehensive set of libraries, open source repositories, SYCL*-based C++ language extensions, and optimized reference implementations to accelerate the following goals:

- Define a common unified and open multiarchitecture multivendor software platform.

- Ensure functional code and performance portability across hardware vendors and accelerator technologies.

- Maintain and nurture a comprehensive set of library APIs to cover programming domain needs across sectors and use cases.

- Provide a developer community and open forum to drive unified API functionality and interfaces that meet the needs of a unified multiarchitecture software development model.

- Encourage collaboration on oneAPI projects and compatible oneAPI implementations across the developer ecosystem.

With millions of installations and a constantly growing active developer community, including the more than 30 research institutions and universities comprising the oneAPI Academic Centers of Excellence and a long list of awesome-oneapi projects, oneAPI has evolved into a cornerstone for the Unified Acceleration Foundation (UXL) established over a year ago.

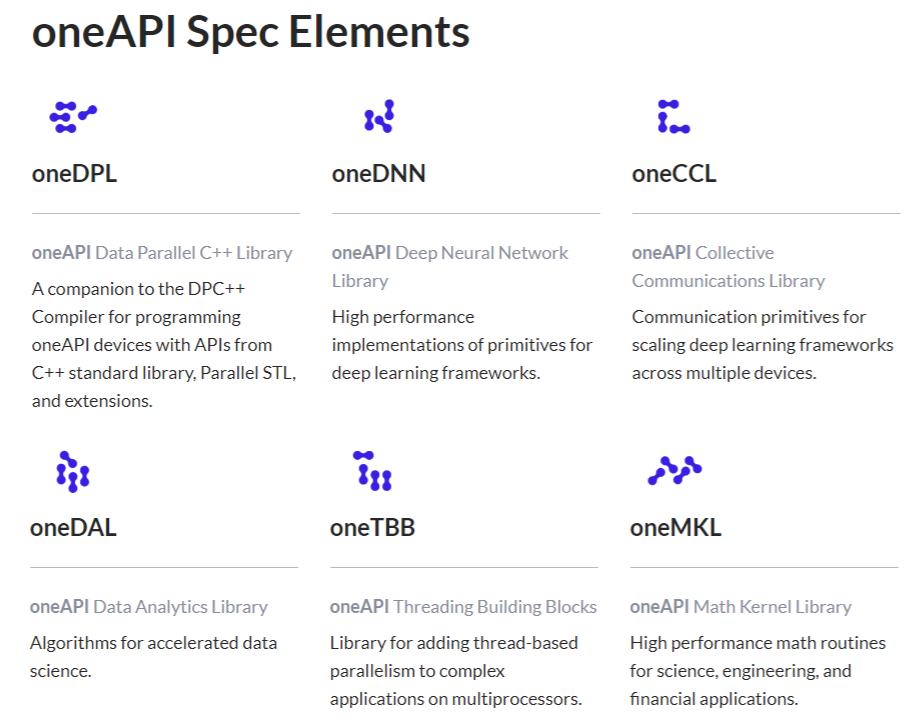

The oneAPI software platform and open multiarchitecture programming model, with its community of over 150 active participants, its libraries, and its specifications, complete the rich set of building blocks the UXL Foundation is expanding on:

Figure 2: oneAPI Specification Elements

oneAPI, along with the SYCL and OpenCL projects at Khronos Group* and UXL Foundation’s other affiliate partner, the Autoware Foundation*, assists the foundation's more than 30 members (silicon vendors, software vendors, original design manufacturers, AI solution providers, and automotive companies) in achieving their goals. Its reach expands beyond open accelerated parallel computing into Artificial Intelligence, Visual Computing, Edge Computing, and more.

A Community Celebrates

oneAPI changed the way the software developer community can scale entire application software stacks across various hardware configurations. It frees software developers from vendor lock-in, minimizing the need for code rewrites when moving between hardware platforms with diverse heterogeneous architectures and compute capabilities.

Below, some of our fellow travelers and contributors in the oneAPI community share their experience adopting the open standards paradigm that defines oneAPI as well as the UXL Foundation:

Celebrating five years of oneAPI. In ExaHyPE, oneAPI has been instrumental in implementing the numerical compute kernels for hyperbolic equation systems, making a huge difference in performance with SYCL providing the ideal abstraction and agnosticism for exploring these variations. This versatility enabled our team, together with Intel engineers, to publish three distinct design paradigms for our kernels.

oneAPI has revolutionized the way we approach heterogeneous computing by enabling seamless development across architectures. Its open, unified programming model has accelerated innovation in fields from AI to HPC, unlocking new potential for researchers and developers alike. Happy 5th anniversary to oneAPI!

Intel's commitment to their oneAPI software stack is testament to their developer-focused, open-standards commitment. As oneAPI celebrates its 5th anniversary, it provides comprehensive and performant implementations of OpenMP and SYCL for CPUs and GPUs, bolstered by an ecosystem of library and tools to make the most of Intel processors.

Happy 5th anniversary, oneAPI! We've been partners since the private beta program in 2019. We are currently exploring energy-efficient solutions for simulations in material science and data analysis in bioinformatics with different accelerators. For that, the components of oneAPI, its compilers with back ends for various GPUs and FPGAs, oneMKL, and the performance tools Intel® VTune™ Profiler and Intel® Advisor are absolutely critical.

Flexibility Through Open Industry Standards

Let us discuss some of the new and extended capabilities that help you take software development productivity to the next level.

Intel was not only a founding member of oneAPI and the UXL Foundation; our commitment and contribution to the open source software ecosystem also has a long history. With the 2025.0 release we continue to be at the forefront of adopting the latest open industry standard features and proposals.

LLVM

Let us start by having a look at LLVM Sanitizers. They help identify and pinpoint undesirable or undefined behavior in your code. They provide a convenient way for software developers to verify code changes before submitting them to a repository branch. Intel Compilers support the following sanitizers:

- AddressSanitizer - detect memory safety bugs.

- UndefinedBehaviorSanitizer - detect undefined behavior bugs.

- MemorySanitizer - detect use of uninitialized memory bugs.

- ThreadSanitizer - detect data races.

- Device-Side AddressSanitizer - detect memory safety bugs in SYCL device code.

Among these, the ThreadSanitizer and the Device-Side AddressSanitizer are new additions:

- The new ThreadSanitizer allows you to catch data races in OpenMP and threaded applications. You can enable the sanitizer via the -fsanitize=thread flag.

- The AddressSanitizer, a tool for detecting memory errors in C/C++ code, now includes support for SYCL device code. To activate this feature for the device code, use the flag -Xarch_device -fsanitize=address. The flag -Xarch_host -fsanitize=address should be used to identify memory access problems in the host code. This new SYCL accelerator extension thus provides a Device-Side AddressSanitizer.
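As a minimal sketch of the kind of code the ThreadSanitizer is meant to check, the function below sums a vector in an OpenMP parallel loop. The function name `sum_values` is hypothetical, and the build line in the comment is an assumption apart from the `-fsanitize=thread` flag named above; check the compiler documentation for the exact options on your system.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Assumed build line (only -fsanitize=thread is taken from the article):
//   icpx -fsanitize=thread -qopenmp -g example.cpp
//
// Sums the vector in an OpenMP parallel loop.
// The reduction clause gives every thread a private partial sum, so the loop
// is race-free. Dropping `reduction(+ : total)` would let all threads update
// `total` concurrently, which is the kind of data race the ThreadSanitizer is
// designed to report.
long long sum_values(const std::vector<int>& values) {
    long long total = 0;
    #pragma omp parallel for reduction(+ : total)
    for (std::size_t i = 0; i < values.size(); ++i) {
        total += values[i];
    }
    return total;
}
```

Calling `sum_values(std::vector<int>(1000, 2))` returns 2000 whether or not OpenMP is enabled, because the pragma is simply ignored in a build without OpenMP support.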

Find out more in the article:

→ Find Bugs Quickly Using Sanitizers with the Intel® oneAPI DPC++/C++ Compiler

oneTBB

The collaboration between Intel and the oneAPI Center of Excellence (COE) at Durham University on oneTBB is a prime example of our community engagement. Professor Tobias Weinzierl and his team develop ExaHyPE, a generic collection of state-of-the-art numerical ingredients to write new solvers for hyperbolic equation systems.

The most widely used oneTBB generic parallel algorithms, such as parallel_for, take a range as an argument. Together with Intel engineers, the Durham team developed and proposed a new oneTBB range type, blocked_nd_range, targeted for inclusion in the official oneTBB specification. You can find more details about this extension in the Unified Acceleration Foundation (UXL) GitHub* oneAPI Specification.

Multithreaded applications run faster thanks to the improved scalability of task_group, flow_graph, and parallel_for_each in Intel® oneAPI Threading Building Blocks (oneTBB).

Durham University is also working with the oneTBB development team on enhancements to the task_group API. Among them is an extension to the types of the objects returned by task_group (task_handle objects) to represent tasks for the purpose of adding dependencies. With the new handles, you can submit tasks straightaway and set them as predecessors to other tasks later. This dramatically increases the theoretical concurrency, avoiding sequential task graph assembly.

Find out more in the article:

→ The oneAPI Center of Excellence at Durham University Brings Its Experience from ExaHype into oneTBB

In addition, oneTBB flow graph now enables you to process overlapping messages on a shared graph, waiting for a specific message using the new try_put_and_wait experimental API.

OpenMP

In the latest releases of the Intel® oneAPI DPC++/C++ Compiler and Intel® Fortran Compiler, we are implementing many new features introduced as part of OpenMP 5.2 enhancements and the latest proposals for OpenMP 6.0. This includes support for the latest generation Intel® Arc™ Graphics GPU, Intel® Data Center GPU, and the integrated Intel® Arc™ Xe2 Graphics GPU.

Looking specifically at GPU execution control, OpenMP provides:

- Data management in heterogeneous memory architecture.

- Execution policy/configuration of GPU thread management.

- Leveraging existing APIs to GPU-optimized libraries or other compilation units.

- GPU instructions selection/optimization.

- Control flow/branch control of concurrent thread execution.

With the Intel® Compilers 2025.0 we introduce the following new OpenMP features:

- GROUPPRIVATE directive (in OpenMP 6.0) for data management on GPU shared local memory.

- LOOP directive,

- REDUCTION clause on TEAMS directive,

- NOWAIT clause on TARGET directive (OpenMP 5.1) for execution policy.

- INTEROP clause on DISPATCH directive (OpenMP 6.0) for leveraging existing APIs and enabling SYCL* and OpenMP interoperability.
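To make the execution-policy items more concrete, here is a small sketch that combines a reduction across teams with an asynchronous target region (NOWAIT). It uses only long-established OpenMP syntax; GROUPPRIVATE and the INTEROP clause on DISPATCH are not shown. The function name `dot` is hypothetical, and the sketch assumes both vectors have the same length.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Dot product written as an OpenMP target region.
// Precondition (assumed): a and b have the same number of elements.
double dot(const std::vector<double>& a, const std::vector<double>& b) {
    const double* pa = a.data();
    const double* pb = b.data();
    const std::size_t n = a.size();
    double result = 0.0;

    // The reduction combines partial sums across teams and threads.
    // `nowait` turns the target region into a deferred task, so the host
    // thread could do other work before the taskwait below.
    #pragma omp target teams distribute parallel for reduction(+ : result) \
        map(to : pa[0:n], pb[0:n]) map(tofrom : result) nowait
    for (std::size_t i = 0; i < n; ++i) {
        result += pa[i] * pb[i];
    }

    // Wait for the deferred target task before reading the result.
    #pragma omp taskwait
    return result;
}
```

When the code is built without OpenMP support, the pragmas are ignored and the loop runs serially on the host, which makes the sketch easy to try before moving to an offload-capable build.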

Find out more in the article:

→ Advanced OpenMP* Device Offload with Intel® Compilers

MPI

Intel® MPI now offers a full MPI 4.0 implementation including partitioned communication and improved error handling.

New optimizations for MPI_Allreduce improve scale-up and scale-out performance for Intel GPUs.

Cryptography

In today’s digital environment, ensuring the security of cryptographic modules is critical, especially for organizations that manage sensitive information. Be ready for FIPS compliance and the new challenges posed by bad actors in a post-quantum computing world. The Intel® Cryptography Primitives Library stays at the forefront of security and privacy by following the latest open standards and providing thread-safe, parallelism-enabled cryptographic algorithms.

Speed Through Parallelism

The emphasis on parallel compute acceleration does not stop there, however.

oneMKL

The Intel® oneAPI Math Kernel Library (oneMKL), in its 30th year, continues to be at the forefront of math libraries.

- Workloads using single-precision 3D real in-place FFTs see significant improvements when executing on Intel® Data Center GPUs.

- The SYCL Discrete Fourier Transform API is easier to use and to debug, with more compilation messages added for type safety. This reduces application development time, especially when targeting Intel GPUs.

- The sparse domain of the SYCL API now supports sparse matrices in Coordinate Format (COO). This format is widely used for fast sparse matrix construction, and it can be easily converted to other popular formats such as Compressed Sparse Row (CSR) and Compressed Sparse Column (CSC).

- New distribution models and data types are available for Random Number Generation (RNG) using the SYCL device API.
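To illustrate why COO is convenient for construction and easy to convert, the following sketch turns a COO triplet list into CSR using plain standard C++. It is not the oneMKL sparse API; the `Csr` struct and the `coo_to_csr` function are hypothetical names introduced only for this example, and the sketch assumes all row indices are in the range [0, n_rows).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical CSR container for this illustration only.
struct Csr {
    std::vector<int> row_ptr;    // n_rows + 1 offsets into col_idx/values
    std::vector<int> col_idx;    // column index of each stored entry
    std::vector<double> values;  // value of each stored entry
};

// Converts COO triplets (rows[k], cols[k], vals[k]) into CSR.
// Entries of the same row keep their original COO order.
Csr coo_to_csr(int n_rows,
               const std::vector<int>& rows,
               const std::vector<int>& cols,
               const std::vector<double>& vals) {
    Csr csr;
    csr.row_ptr.assign(static_cast<std::size_t>(n_rows) + 1, 0);
    csr.col_idx.resize(vals.size());
    csr.values.resize(vals.size());

    // Step 1: count the entries in each row.
    for (int r : rows) {
        ++csr.row_ptr[r + 1];
    }
    // Step 2: a prefix sum turns the counts into row start offsets.
    for (int r = 0; r < n_rows; ++r) {
        csr.row_ptr[r + 1] += csr.row_ptr[r];
    }
    // Step 3: scatter each entry into the next free slot of its row.
    std::vector<int> next(csr.row_ptr.begin(), csr.row_ptr.end() - 1);
    for (std::size_t k = 0; k < vals.size(); ++k) {
        const int dst = next[rows[k]]++;
        csr.col_idx[dst] = cols[k];
        csr.values[dst] = vals[k];
    }
    return csr;
}
```

COO entries can be appended in any order, which is what makes construction fast; the conversion itself is a counting pass, a prefix sum, and a scatter.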

One specific example of how oneMKL algorithm performance benefits from our laser focus on increased parallelism is the introduction of sub-sequence parallelism for random number generation on GPU.

Random number generators are essential in many applications, such as cryptography, simulation, and scientific computing. They play a key role in providing the random seeds for many different prediction scenarios, from predictive maintenance and quantitative finance risk assessment to earthquake and tsunami emergency response planning.

Random number generation performance improves even further when the work is split into several smaller tasks that can be processed simultaneously.
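The general idea of splitting one random sequence into independently generated sub-sequences can be sketched with the C++ standard library. This is an illustration only, not the oneMKL implementation: it uses `std::mt19937` rather than MRG32k3a, the function names are hypothetical, and `discard()` stands in for a skip-ahead operation that a production generator would typically compute far more cheaply than by stepping through every value.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <random>
#include <vector>

// Reference: generate `count` values from a single engine, one after another.
std::vector<std::uint32_t> generate_serial(std::uint32_t seed, std::size_t count) {
    std::mt19937 engine(seed);
    std::vector<std::uint32_t> out(count);
    for (auto& v : out) {
        v = static_cast<std::uint32_t>(engine());
    }
    return out;
}

// Generate the same sequence in independent chunks. Each chunk creates its
// own engine, skips ahead to its starting position, and fills its slice.
// The chunks share no state, so they can run in parallel.
std::vector<std::uint32_t> generate_in_chunks(std::uint32_t seed,
                                              std::size_t count,
                                              std::size_t chunks) {
    if (chunks == 0) {
        chunks = 1;  // guard against division by zero
    }
    std::vector<std::uint32_t> out(count);
    const std::size_t chunk_size = (count + chunks - 1) / chunks;

    #pragma omp parallel for
    for (std::size_t c = 0; c < chunks; ++c) {
        const std::size_t begin = std::min(c * chunk_size, count);
        const std::size_t end = std::min(begin + chunk_size, count);
        std::mt19937 engine(seed);
        engine.discard(begin);  // skip ahead to this chunk's sub-sequence
        for (std::size_t i = begin; i < end; ++i) {
            out[i] = static_cast<std::uint32_t>(engine());
        }
    }
    return out;
}
```

Because every chunk starts from the same seed and skips to its own offset, the chunked result is identical to the serial one, so the parallel version can be validated against the serial version directly.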

Find out how it works in the article:

→ Fast Sub-Stream Parallelization for oneMKL MRG32k3a Random Number Generator

Intel® Distribution for Python

Streamlining parallel Python execution is at the heart of a lot of our work upstreaming and contributing our optimizations to the PyTorch ecosystem.

The latest updates to the Intel® Distribution for Python come with the following new capabilities:

- Drop-in, near-native performance on CPU and GPU for numeric compute, powered by oneAPI.

- Data Parallel Extension for Python (dpnp) expands compatibility, adding NumPy* 2.0 support in the runtime and providing asynchronous execution of offloaded operations. This update provides significant new functionality, with support for more than 25 new functions and keywords, providing 90% functional compatibility with CuPy*.

- Data Parallel Control (dpctl) expands compatibility, adding NumPy 2.0 support in the runtime and providing asynchronous execution of offloaded operations. This update expands the functionality of Python Array API support.

PyTorch Optimizations

Native support for PyTorch 2.5 is accessible on Intel’s Data Center GPUs, Core Ultra processors, and client GPUs, where it can be used to develop on Windows with out-of-the-box support for Intel® Arc™ Graphics and Intel® Iris® Xe Graphics GPUs.

The latest in CPU performance for PyTorch inference is now available in PyTorch 2.5, with support for torch.compile with optimizations for the current processor generation and the ability to compile TorchInductor output with the Intel oneAPI DPC++/C++ Compiler.

Optimized for the Latest AI and Compute Platforms

With the latest release of Intel Developer Tools, we can thus:

- Take full advantage of the PC for AI development with Intel developer tools support for Intel® Core™ Ultra processors (Series 2), formerly code-named Lunar Lake.

- Maximize performance for compute-intensive HPC and AI workloads with Intel developer tools support for Intel® Xeon® 6 processors with P-cores, formerly code-named Granite Rapids.

- Scale performance across the entire portfolio of Intel CPUs and GPUs.

Focus on Developer Productivity

It is not only about performance, however, but also about productivity.

Compiler Optimization Reports

You can improve the performance of your application early during software development. Intel® oneAPI DPC++/C++ Compiler and Intel® Fortran Compiler 2025.0 come with substantially enhanced optimization reports covering:

- Inlining

- Profile Guided Optimization

- Loop Optimization

- SIMD Vectorization

- OpenMP

- Code Generation

Let the compiler tell you how to streamline your code as you are writing it.

Find out more in the article:

→ Develop Highly Optimized Applications Faster with Compiler Optimization Reports

Tuning AI Workload Performance

Application tuning and profiling with Intel® VTune™ Profiler 2025.0 is not limited to accelerated computing. PyTorch and OpenVINO™ AI workloads running on the CPU, the GPU, and the NPU of Intel Core Ultra processors (Series 2) can be profiled as well.

Identify performance bottlenecks, streamline parallel execution of deep learning inference and training workloads, and eliminate memory and cache latency, all by simply running the Intel VTune Profiler on your workload and leveraging the Intel® Instrumentation and Tracing Technology API (ITT API) as well as Python awareness and OpenVINO integration.

With Intel VTune Profiler you can indeed streamline and accelerate ML and AI performance across CPU, GPU and NPU.

Find out more in the following cookbook recipes:

→ Profiling Large Language Models on Intel® Core™ Ultra 200V

→ Profiling OpenVINO™ Applications

→ Profiling Data Parallel Python* Applications

Download the Software

The 2025.0 releases of our Software Development Tools are available for download here:

- Intel® oneAPI Base Toolkit - Multiarchitecture C++ and Python* Developer Tools for Open Accelerated Computing

- Intel® oneAPI HPC Toolkit - Deliver Fast Applications That Scale across Clusters

- AI Tools Selector - Achieve End-to-End Performance for AI Workloads, Powered by oneAPI.

Looking for smaller download packages?

Streamline your software setup with our toolkit selector to install full kits or new, right-sized sub-bundles containing only the components needed for specific use cases! Save time, reduce hassle, and get the perfect tools for your project with Intel® C++ Essentials, Intel® Fortran Essentials, and Intel® Deep Learning Essentials.

Additional Resources

Compilers

- Advanced OpenMP* Features for Device Offload in Intel® Compilers

- Develop Highly Optimized Applications Faster with Compiler Optimization Reports

- Find Bugs Quickly Using Sanitizers with the Intel® oneAPI DPC++/C++ Compiler

- Intel® oneAPI DPC++/C++ Compiler Boosts PyTorch* Inductor Performance on Windows* for CPU Devices

Libraries and APIs

- The oneAPI Center of Excellence at Durham University Brings Its Experience from ExaHype into oneTBB

- Fast Sub-Stream Parallelization for oneMKL MRG32k3a Random Number Generator

- Faster Core-to-Core Communications

- Be Ready for Post-Quantum Security with Intel® Cryptography Primitives Library

- Intel® Tools for OpenCL™ Software

Performance Profiling

- Boost the Performance of AI/ML Applications using Intel® VTune™ Profiler

- Profiling Large Language Models on Intel® Core™ Ultra 200V

- Profiling Applications in Performance Monitoring Unit (PMU)-Enabled Google Cloud* Virtual Machines

- Introduction to Intel® Data Direct I/O Technology (Intel® DDIO) Analysis with Performance Monitoring