10/18/2024

Introduction

PyTorch-based AI applications can gain considerable performance and scalability across platforms when the native C++ kernels they use take full advantage of the underlying hardware’s capabilities. This can be achieved by building these native kernels with compilers that support the latest architecture extensions and code-path optimizations. That way, you can ensure the desired level of performance and responsiveness of your AI application on the hardware platform of your choice.

In this article, we discuss how to achieve this on a 12th Gen Intel® Core™ processor-based computer running Microsoft Windows*. We will build Python reference benchmark applications using the Microsoft* Visual C++ Compiler as well as the Intel oneAPI DPC++/C++ Compiler.

TorchInductor is the default compiler backend of PyTorch* 2.x compilation [1]. It compiles a PyTorch program into C++ kernels for CPU and offers a significant speedup over eager-mode execution through graph-level optimization. The compiler applied to the C++ kernels in TorchInductor can therefore play an important role in accelerating compute-intensive AI and ML workloads and scaling them on x86 platforms.

In this tutorial, we introduce the steps for utilizing Intel® oneAPI DPC++/C++ Compiler [2] to speed up TorchInductor (C++ kernels) on Windows for CPU devices. We also provide some performance testing results for popular PyTorch benchmarks (i.e., Torchbench*, HuggingFace*, and TIMM* models) on typical x86 client machines.

Software Installation

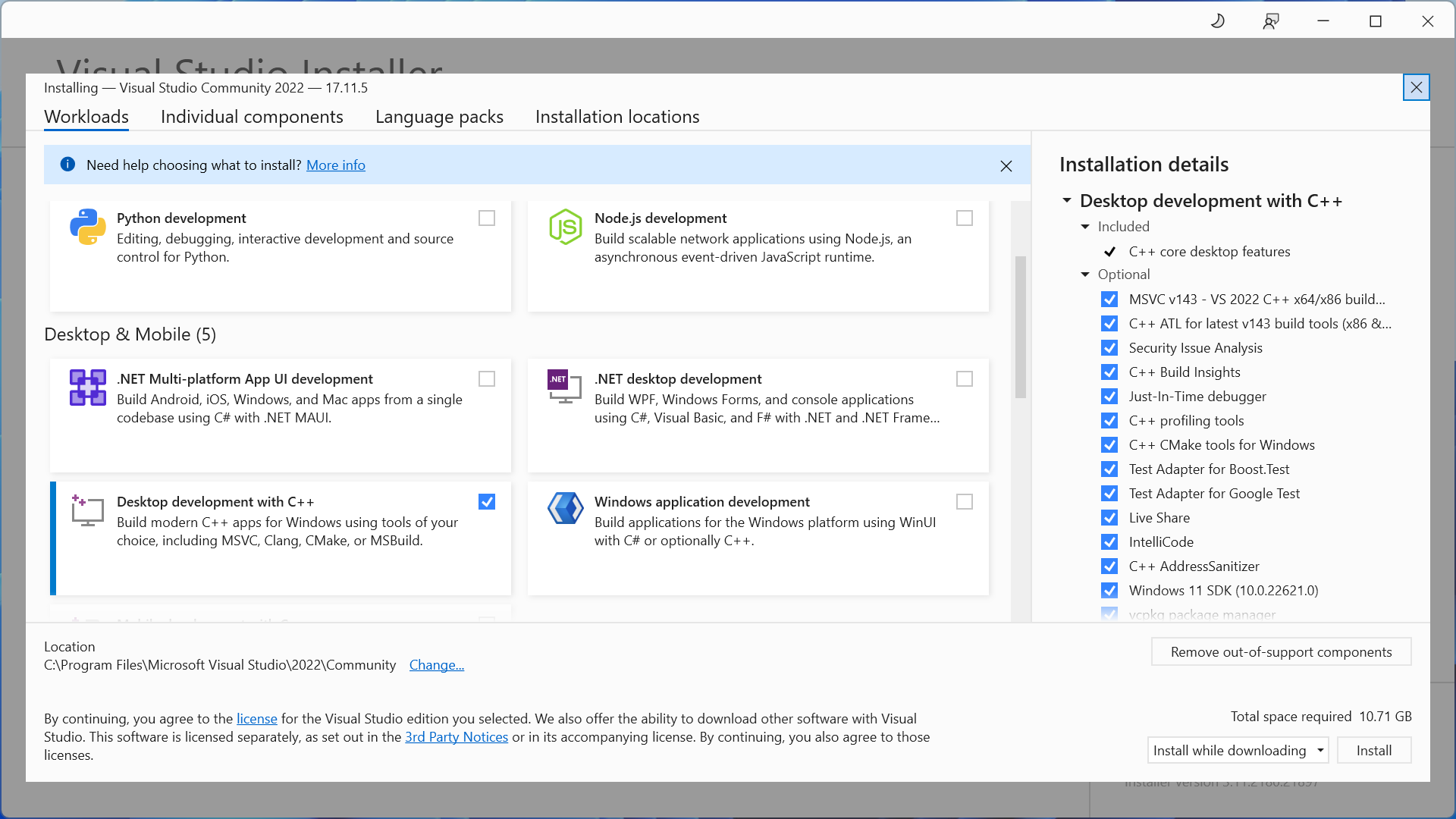

- Download and install Microsoft* Visual Studio Community 2022:

Install runtime libraries from Microsoft Visual C++

Download link: https://visualstudio.microsoft.com/downloads/

Select "Desktop development with C++" and install.

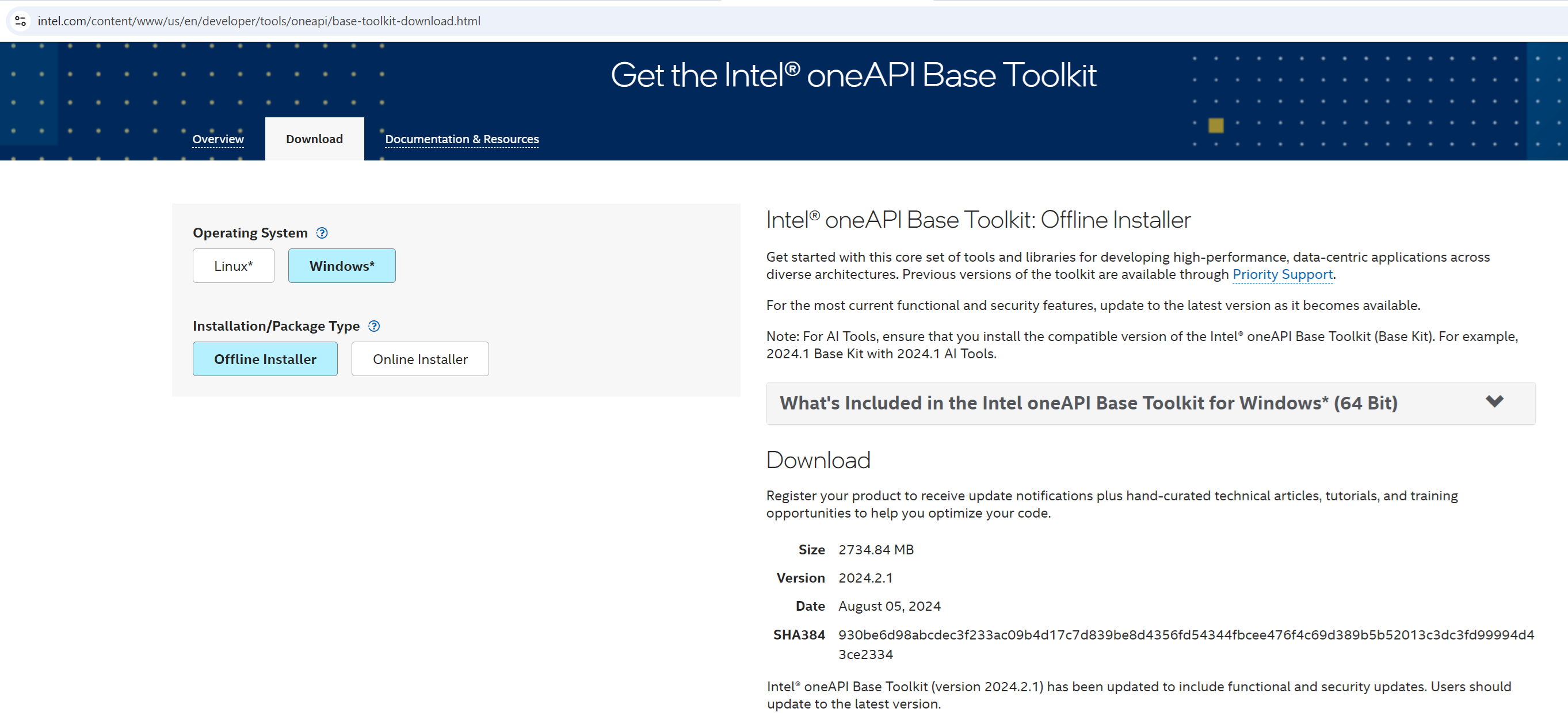

- Download and install the Intel oneAPI DPC++/C++ Compiler:

Download link: https://www.intel.com/content/www/us/en/developer/tools/oneapi/base-toolkit-download.html

Select "Intel oneAPI Base Toolkit for Windows* (64 Bit)" and install.

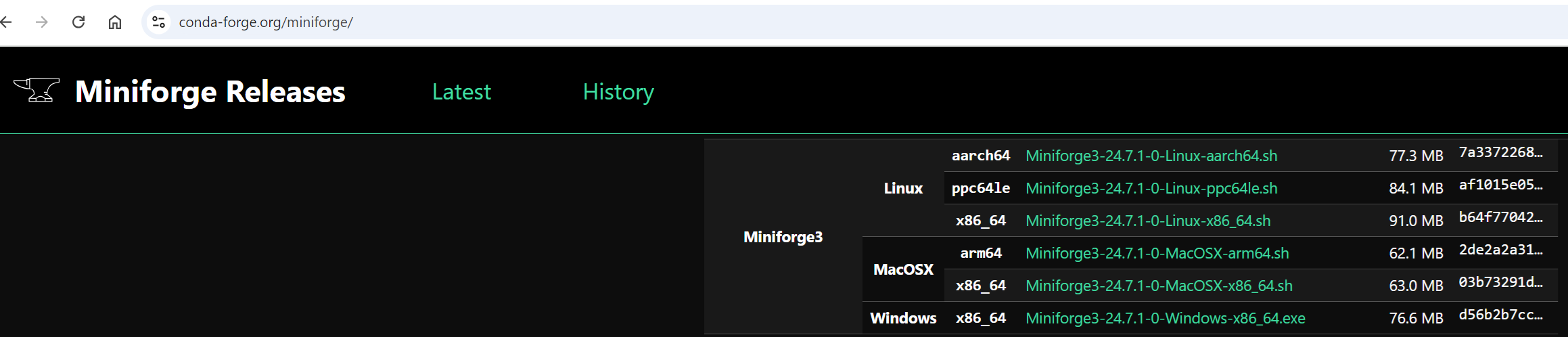

- Download and install Miniforge 64-bit for Windows

Download link: https://conda-forge.org/miniforge/

Select "Miniforge3 Windows" and install.

Set Up the Intel® oneAPI DPC++/C++ Compiler with the PyTorch Environment

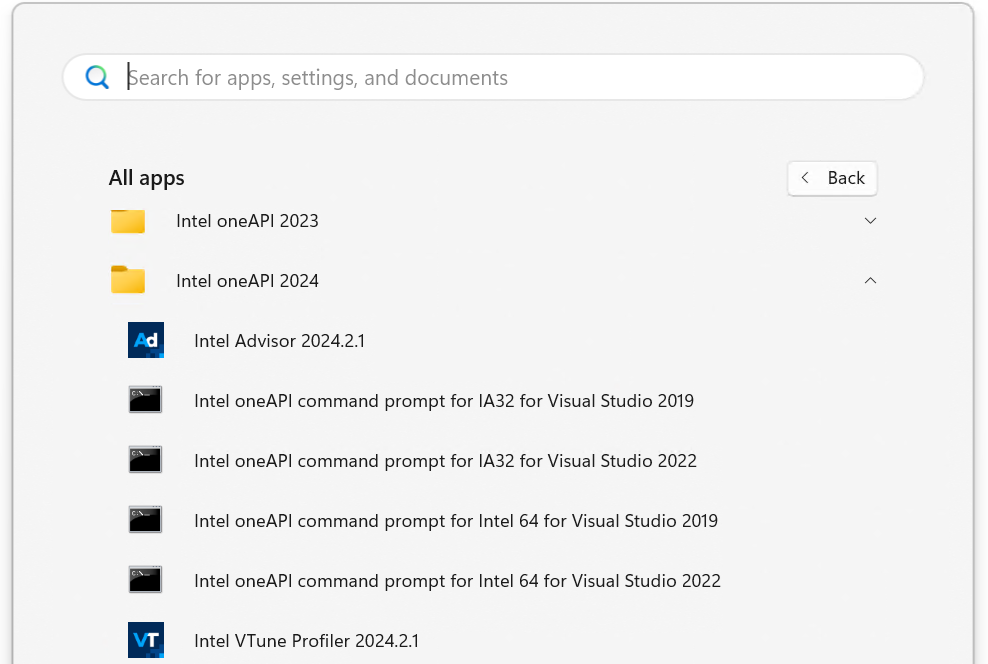

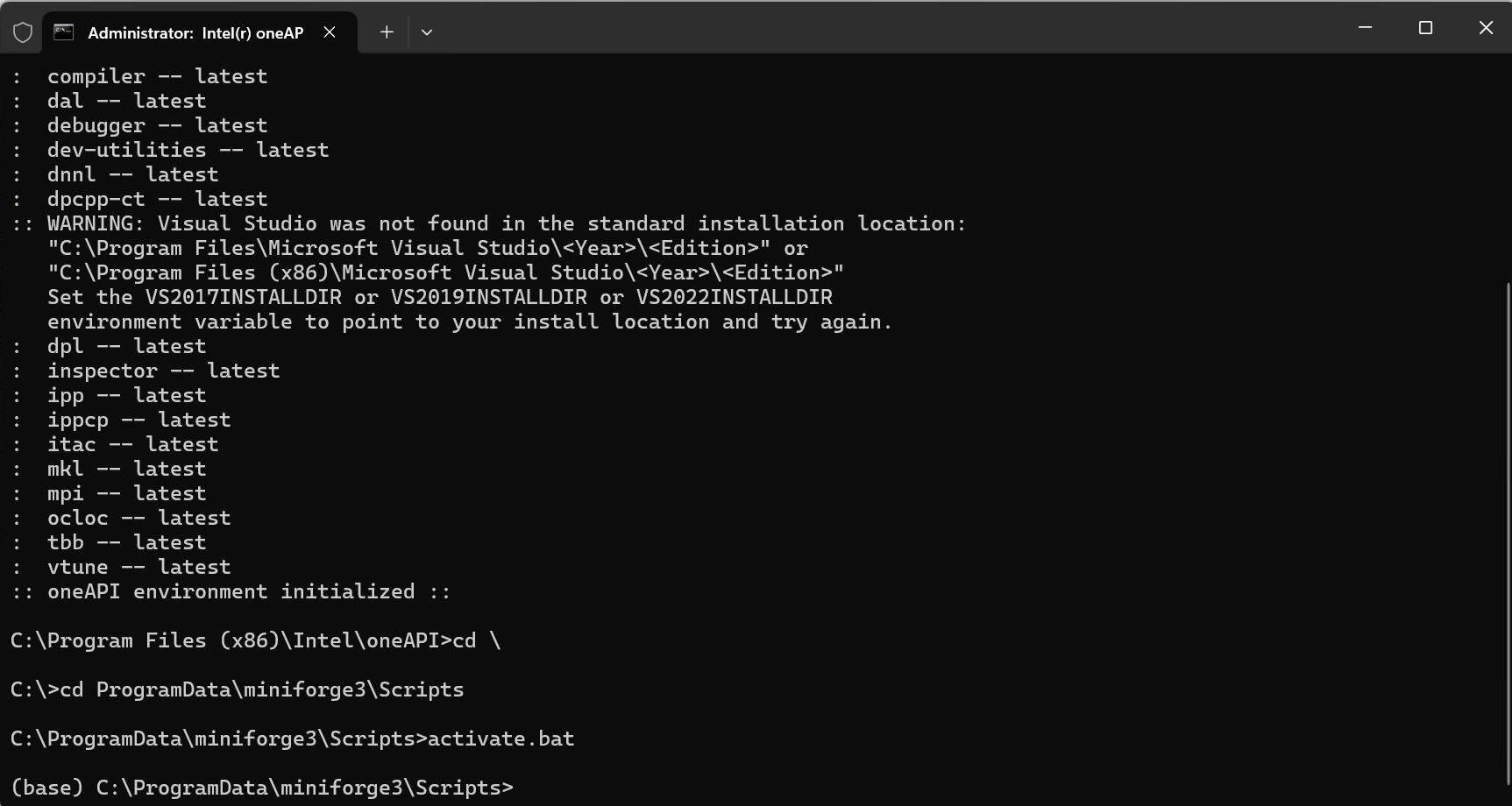

- Launch the build console:

Launch the “Intel oneAPI command prompt for Intel 64 for Visual Studio 2022” console.

- Activate Conda-Forge:

Miniforge installs conda, a command-line tool for package and environment management that runs on Windows, preconfigured to use the conda-forge channel.

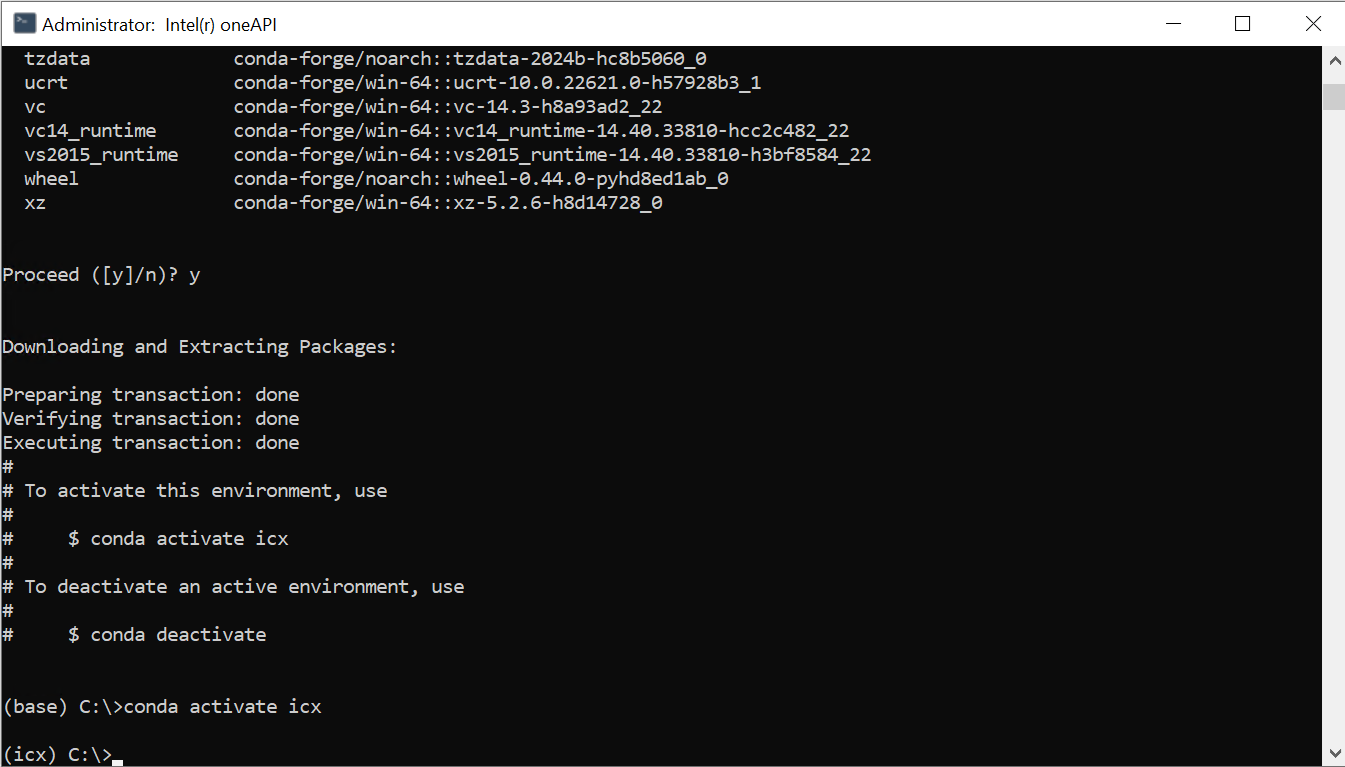

- Create a conda environment for use with the Intel® oneAPI DPC++/C++ Compiler:

Create a separate environment in which to install PyTorch:

conda create -n icx python==3.11

conda activate icx

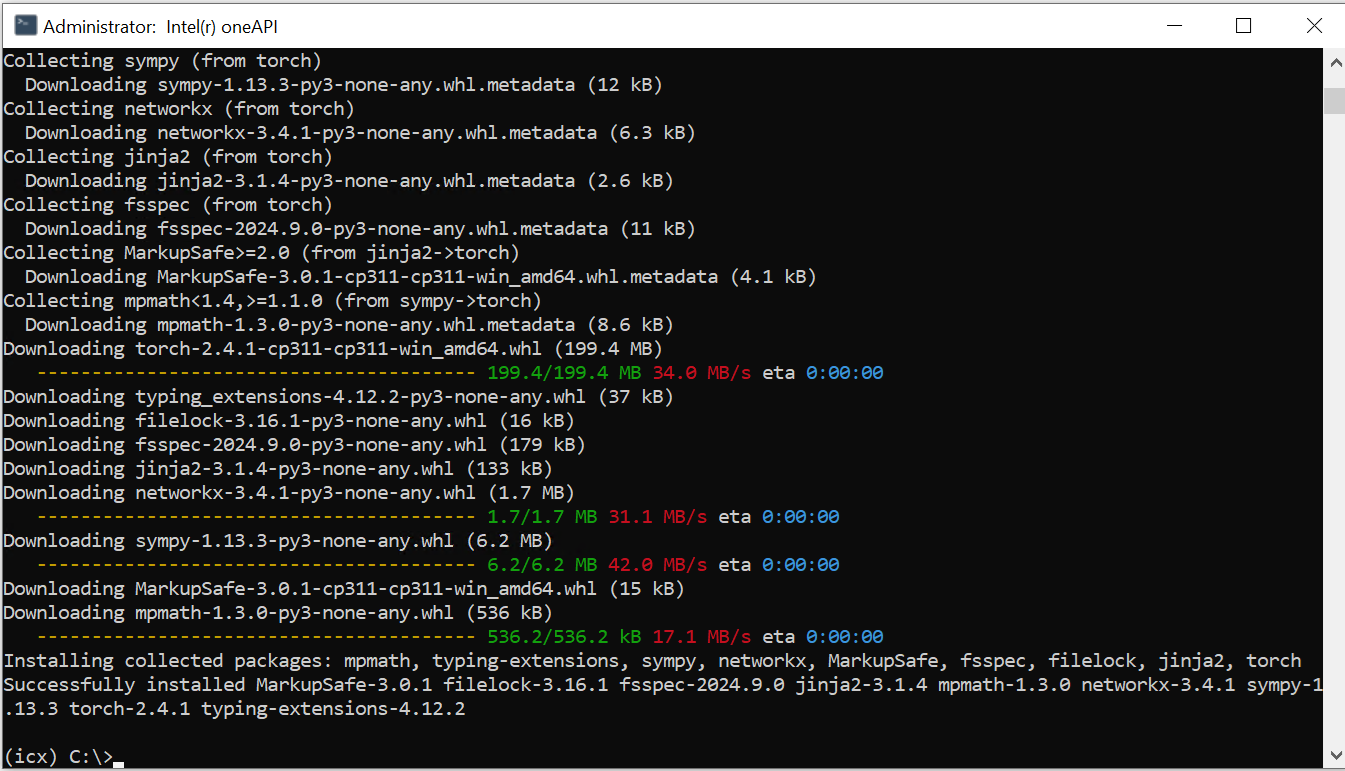

- Install PyTorch 2.5 or later:

Note: Only PyTorch 2.5 and later support TorchInductor on Windows.

pip install torch
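As a quick sanity check after installation, the version requirement above can be expressed as a small helper. This is a minimal sketch: the function name `supports_windows_inductor` is hypothetical, and it only parses a version string, so in practice you would pass it `torch.__version__`.

```python
import re


def supports_windows_inductor(version: str) -> bool:
    """Return True if a PyTorch version string denotes 2.5 or later."""
    # Only the leading "major.minor" part matters; suffixes such as "+cpu" are ignored.
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    major, minor = int(match.group(1)), int(match.group(2))
    return (major, minor) >= (2, 5)


# Version string taken from the test configuration listed in this article.
print(supports_windows_inductor("2.5.0+cpu"))  # True
```

Comparing `(major, minor)` as a tuple of integers avoids the pitfall of string comparison, where "2.10" would sort before "2.5".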

Run PyTorch with Intel® oneAPI DPC++/C++ Compiler

- Set the Intel® oneAPI DPC++/C++ Compiler as the Windows Inductor C++ compiler through the “CXX” environment variable. The Microsoft Visual C++ Compiler is the default compiler for PyTorch on Windows, and PyTorch expects compiler flags in that compiler’s syntax. Therefore, set “CXX” to the “icx-cl” compiler command-line driver, which accepts Microsoft-compatible options, as described in the Intel oneAPI DPC++/C++ Compiler Developer Guide and Reference.

- Using TorchInductor with Intel® oneAPI DPC++/C++ Compiler on Windows CPU: Here’s a simple example to demonstrate how to use Intel® oneAPI DPC++/C++ Compiler for TorchInductor.
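The two steps above can be sketched together in Python. This is an illustration under stated assumptions, not the exact commands from the original walkthrough: the helper names `select_inductor_compiler` and `run_inductor_demo` are hypothetical, and setting `CXX` from inside the script is assumed to be equivalent to running `set CXX=icx-cl` in the console, provided it happens before the first compilation.

```python
import os
import shutil


def select_inductor_compiler(driver: str = "icx-cl") -> bool:
    """Set CXX to the given compiler driver; return True if it is found on PATH."""
    os.environ["CXX"] = driver
    return shutil.which(driver) is not None


def run_inductor_demo() -> bool:
    """Compile a small function with torch.compile and compare it with eager mode."""
    import torch  # imported here so the helper above works without PyTorch

    def foo(x, y):
        return torch.sin(x) + torch.cos(y)

    compiled_foo = torch.compile(foo)  # Inductor is the default backend
    x, y = torch.randn(10, 10), torch.randn(10, 10)
    # The first call triggers C++ code generation and compilation.
    return torch.allclose(compiled_foo(x, y), foo(x, y), atol=1e-5)


# Select the compiler before anything is compiled.
compiler_found = select_inductor_compiler()
print(f"CXX={os.environ['CXX']} (found on PATH: {compiler_found})")
```

Calling `run_inductor_demo()` from the Intel oneAPI command prompt, with the `icx` environment active, should return `True` when the compiled result matches eager mode within the tolerance. If `compiler_found` is `False`, the console was probably not launched from the oneAPI shortcut.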

Use Intel® oneAPI DPC++/C++ Compiler to Boost PyTorch Performance

The Intel® oneAPI DPC++/C++ Compiler generates optimized binaries for x86 and can provide higher performance for PyTorch Inductor. We tested popular PyTorch benchmarks (Torchbench*, HuggingFace*, and TIMM* models) and report the speedup over eager mode below:

Speedup = Inductor_Perf/Eager_Perf

Default Wrapper Speedup Over Eager Mode (Higher is Better)

| Workload | Microsoft Visual C++ Compiler (default) | Intel® oneAPI DPC++/C++ Compiler |
|---|---|---|
| Torchbench | 1.132 | 1.389 |
| HuggingFace | 0.911 | 1.351 |
| Timm_models | 1.365 | 1.720 |
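To compare the two compilers directly, the speedups in the table can be divided by each other. The sketch below assumes that eager-mode performance is the same in both runs, so the eager term in the speedup formula cancels and the ratio reflects only the Inductor kernels. The helper name `relative_gain` is hypothetical.

```python
# Speedup over eager mode, copied from the table above.
speedups = {
    "Torchbench": {"msvc": 1.132, "icx": 1.389},
    "HuggingFace": {"msvc": 0.911, "icx": 1.351},
    "Timm_models": {"msvc": 1.365, "icx": 1.720},
}


def relative_gain(msvc: float, icx: float) -> float:
    """Ratio of the two speedups: icx-built kernels relative to MSVC-built kernels."""
    return icx / msvc


for workload, s in speedups.items():
    print(f"{workload}: {relative_gain(s['msvc'], s['icx']):.2f}x")
```

A speedup below 1, as in the HuggingFace row for the Microsoft Visual C++ Compiler, means the compiled path was slower than eager mode for that suite.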

- Torchbench Performance Results:

- HuggingFace Performance Results:

- Timm_models Performance Results:

Product and Performance Information

Testing Date: Performance results are based on testing by Intel as of October 11, 2024 and may not reflect all publicly available security updates.

Configuration Details:

OS version: Windows 11 Enterprise Version 22H2 (OS Build 22621.4169)

Compiler versions:

Microsoft Visual C++ Compiler: 19.41.34120

Intel oneAPI DPC++/C++ Compiler: 2024.2.1

Python version: 3.11.0

Torch version: 2.5.0+cpu

Machine configurations: 12th Gen Intel® Core™ i9-12900K Processor, Performance-core Base Frequency: 3.20 GHz, Sockets: 1, Total Cores: 16, Total Threads: 24, Virtualization: Enabled, L1 cache: 1.4 MB, L2 cache: 14.0 MB, L3 cache: 30.0 MB, Memory: 32.0 GB, Speed: 4800 MT/s, Slots used: 2 of 4, Form factor: DIMM

Conclusion

This tutorial shows how to use the Intel® oneAPI DPC++/C++ Compiler to speed up PyTorch* Inductor on Windows* for CPU devices. In addition, we share some performance testing results for popular PyTorch benchmarks (i.e., Torchbench*, HuggingFace*, and TIMM* models) on typical x86 Windows client machines with both Microsoft Visual C++ Compiler and Intel® oneAPI DPC++/C++ Compiler.

Download the Compiler Now

You can download the Intel oneAPI DPC++/C++ Compiler on Intel’s oneAPI Developer Tools product page.

This version is also in the Intel® oneAPI Base Toolkit, which includes an advanced set of foundational tools, libraries, analysis, debug and code migration tools.

You may also want to check out our contributions to the LLVM compiler project on GitHub.

Additional Resources

[2] Intel® oneAPI DPC++/C++ Compiler

[3] Get the Intel® oneAPI Base Toolkit

[4] Intel oneAPI DPC++/C++ Compiler Developer Guide and Reference

Notices & Disclaimers:

Performance varies by use, configuration, and other factors. Learn more on the Performance Index site. Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. See backup for configuration details. No product or component can be absolutely secure. Your costs and results may vary. Intel technologies may require enabled hardware, software, or service activation.

Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.

AI Disclaimer:

AI features may require software purchase, subscription or enablement by a software or platform provider, or may have specific configuration or compatibility requirements. Details at www.intel.com/AIPC. Results may vary.