3. Fronthaul Compression IP Functional Description

Compression and Decompression

A preprocessing block-based bit shift block generates the optimum bit-shifts for a resource block of 12 resource elements (REs). The block reduces the quantization noise, especially for low-amplitude samples. Hence, it reduces the error vector magnitude (EVM) that compression introduces. The compression algorithm is almost independent of the power value. Assuming the complex input samples is x = x1 + jxQ, the maximum absolute value of the real and imaginary components for the resource block is:

The maximum value of the resource block n is:

Having the maximum absolute value for the resource block, the following equation determines the left shift value assigned to that resource block:

Where bitWidth is the input bit width.

The IP supports compression ratios of 8, 9, 10, 11, 12, 13, 14, 15, 16.

For a compression ratio of 16 (udCompParam field = 0, according to the O-RAN WG specification), the IP does not perform compression or decompression.

Mu-Law Compression and Decompression

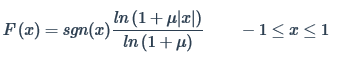

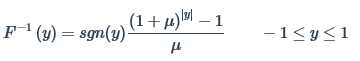

The algorithm uses Mu-law companding technique, which speech compression widely uses. This technique passes the input uncompressed signal, x, through a compressor with function, f(x), before rounding and bit-truncation. The technique sends compressed data, y, over the interface. The received data passes through an expanding function (which is the inverse of the compressor, F-1(y). The technique reproduces the uncompressed data with minimal quantization error.

The Mu-law IQ compression algorithm follows the O-RAN specification.