Pooling

General

The pooling primitive performs forward or backward max or average pooling operations on 1D, 2D, or 3D spatial data.

Forward

The pooling operation is defined by the following formulas. The formulas are shown for 2D spatial data only; they generalize straightforwardly to higher and lower dimensions. Variable names follow the standard Naming Conventions.

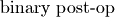

Max pooling:

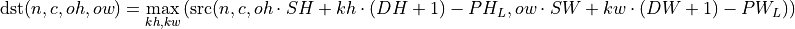

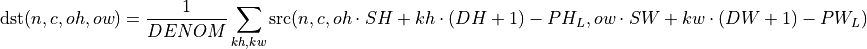

Average pooling:

Here output spatial dimensions are calculated similarly to how they are done in Convolution.

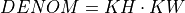
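As a rough illustration of how an output spatial size could be computed, the sketch below assumes the usual convolution-style formula with floor division, and assumes that a dilation of 0 means a dense (non-dilated) window. The helper name `pooled_extent` and its parameter names are hypothetical and are not part of the library API.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper (not a oneDNN function): output extent along one
// spatial axis. Assumes the convolution-style formula; dilation d == 0 is
// taken to mean a dense window.
//   in: input size, k: kernel size, s: stride, d: dilation,
//   pl / pr: left and right padding.
std::int64_t pooled_extent(std::int64_t in, std::int64_t k, std::int64_t s,
        std::int64_t d, std::int64_t pl, std::int64_t pr) {
    // Span of the window once the gaps introduced by dilation are counted.
    const std::int64_t effective_k = (k - 1) * (d + 1) + 1;
    assert(s > 0 && in + pl + pr >= effective_k);
    // Operands are non-negative, so integer division is a floor.
    return (in + pl + pr - effective_k) / s + 1;
}
```

For example, under these assumptions a 7-element axis with a kernel of 3, a stride of 2, and one element of padding on each side yields 4 output elements.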

Average pooling supports two algorithms:

- dnnl_pooling_avg_include_padding, in which case the averaging denominator is \(DENOM = KH \cdot KW\),
- dnnl_pooling_avg_exclude_padding, in which case \(DENOM\) equals the size of the overlap between an averaging window and the image.
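The difference between the two averaging algorithms shows up only near the borders, where part of the window lies in the padding. The following is a minimal reference sketch for a single 2D plane, not the library implementation. The `AvgPool2D` struct and `avg_pool_plane` function are hypothetical names, dilation is left out for brevity, and a window that lies entirely in padding is assumed to produce 0.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical parameter bundle for this sketch (not a oneDNN type).
struct AvgPool2D {
    int KH, KW;     // kernel height and width
    int SH, SW;     // strides
    int PH_L, PW_L; // top and left padding
};

// Averages one H x W plane (row-major) into an OH x OW plane.
std::vector<float> avg_pool_plane(const std::vector<float> &src, int H, int W,
        int OH, int OW, const AvgPool2D &p, bool exclude_padding) {
    assert(OH > 0 && OW > 0 && p.KH > 0 && p.KW > 0);
    std::vector<float> dst(static_cast<std::size_t>(OH) * OW, 0.f);
    for (int oh = 0; oh < OH; ++oh) {
        for (int ow = 0; ow < OW; ++ow) {
            float sum = 0.f;
            int overlap = 0; // window elements that fall inside the image
            for (int kh = 0; kh < p.KH; ++kh) {
                for (int kw = 0; kw < p.KW; ++kw) {
                    const int ih = oh * p.SH + kh - p.PH_L;
                    const int iw = ow * p.SW + kw - p.PW_L;
                    if (ih < 0 || ih >= H || iw < 0 || iw >= W) continue;
                    sum += src[static_cast<std::size_t>(ih) * W + iw];
                    ++overlap;
                }
            }
            // include_padding divides by the full kernel size,
            // exclude_padding by the number of in-image elements.
            const int denom = exclude_padding ? overlap : p.KH * p.KW;
            if (denom > 0)
                dst[static_cast<std::size_t>(oh) * OW + ow] = sum / denom;
        }
    }
    return dst;
}
```

With a 2x2 input, a 2x2 kernel, stride 1, and padding 1, the corner window covers a single input element: including the padding divides that element by 4, while excluding it divides by 1. Windows that lie fully inside the image give the same result under both algorithms.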

TODO: a picture would be nice here.

Difference Between Forward Training and Forward Inference

Max pooling requires a workspace for the dnnl_forward_training propagation kind, and does not require it for dnnl_forward_inference (see details below).

Backward

The backward propagation computes diff_src based on diff_dst and (in case of max pooling) workspace.
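To make the role of the workspace concrete, here is a simplified sketch of max pooling on one 2D plane without padding. It stores the flat source index of each window maximum and uses those indices on the backward pass to route gradients. This is only an illustration: the library's actual workspace format is opaque, and the names `MaxPoolForward`, `max_pool_forward`, and `max_pool_backward` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical result type: pooled values plus an index "workspace".
struct MaxPoolForward {
    std::vector<float> dst;
    std::vector<std::size_t> workspace; // flat src index of each window maximum
};

// Forward max pooling over one H x W plane (row-major),
// K x K window, stride S, no padding.
MaxPoolForward max_pool_forward(const std::vector<float> &src,
        int H, int W, int K, int S) {
    assert(K > 0 && S > 0 && H >= K && W >= K);
    const int OH = (H - K) / S + 1;
    const int OW = (W - K) / S + 1;
    MaxPoolForward out;
    for (int oh = 0; oh < OH; ++oh) {
        for (int ow = 0; ow < OW; ++ow) {
            // Start from the top-left element of the window.
            std::size_t best = static_cast<std::size_t>(oh * S) * W + ow * S;
            for (int kh = 0; kh < K; ++kh) {
                for (int kw = 0; kw < K; ++kw) {
                    const std::size_t idx
                            = static_cast<std::size_t>(oh * S + kh) * W
                            + (ow * S + kw);
                    if (src[idx] > src[best]) best = idx;
                }
            }
            out.dst.push_back(src[best]);
            out.workspace.push_back(best);
        }
    }
    return out;
}

// Backward: each diff_dst value is added to the src position that won the max.
std::vector<float> max_pool_backward(const std::vector<float> &diff_dst,
        const std::vector<std::size_t> &workspace, int H, int W) {
    assert(diff_dst.size() == workspace.size());
    std::vector<float> diff_src(static_cast<std::size_t>(H) * W, 0.f);
    for (std::size_t o = 0; o < diff_dst.size(); ++o)
        diff_src[workspace[o]] += diff_dst[o];
    return diff_src;
}
```

In this sketch every position of diff_src that did not hold a window maximum stays zero, which is why the backward pass needs information saved during the forward pass.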

Execution Arguments

When executed, the inputs and outputs should be mapped to an execution argument index as specified by the following table.

| Primitive input/output | Execution argument index |
|---|---|
| src | DNNL_ARG_SRC |
| dst | DNNL_ARG_DST |
| workspace | DNNL_ARG_WORKSPACE |
| diff_src | DNNL_ARG_DIFF_SRC |
| diff_dst | DNNL_ARG_DIFF_DST |
| binary post-op | DNNL_ARG_ATTR_MULTIPLE_POST_OP(binary_post_op_position) \| DNNL_ARG_SRC_1 |

Implementation Details

General Notes

During training, max pooling requires a workspace on forward (dnnl_forward_training) and backward passes to save indices where a maximum was found. The workspace format is opaque, and the indices cannot be restored from it. However, one can use backward pooling to perform up-sampling (used in some detection topologies). The workspace can be created via workspace_desc() from the pooling primitive descriptor.

A user can use the memory format tag dnnl_format_tag_any for the dst memory descriptor when creating a pooling forward propagation primitive. The library then derives the appropriate format from the src memory descriptor. However, the src memory descriptor itself must be fully defined. Similarly, a user can use the memory format tag dnnl_format_tag_any for the diff_src memory descriptor when creating a pooling backward propagation primitive.

Data Type Support

The pooling primitive supports the following combinations of data types:

| Propagation | Source | Destination | Accumulation data type (used for average pooling only) |
|---|---|---|---|
| forward / backward | f32 | f32 | f32 |
| forward / backward | f64 | f64 | f64 |
| forward / backward | bf16 | bf16 | bf16 |
| forward / backward | f16 | f16 | f32 |
| forward | s8 | s8 | s32 |
| forward | u8 | u8 | s32 |
| forward | s32 | s32 | s32 |
| forward inference | s8 | u8 | s32 |
| forward inference | u8 | s8 | s32 |
| forward inference | s8 | f16 | f32 |
| forward inference | u8 | f16 | f32 |
| forward inference | f16 | s8 | f32 |
| forward inference | f16 | u8 | f32 |
| forward inference | s8 | f32 | f32 |
| forward inference | u8 | f32 | f32 |
| forward inference | f32 | s8 | f32 |
| forward inference | f32 | u8 | f32 |
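The accumulation data type matters for average pooling because the sum over a window can exceed the range of the source type. The sketch below averages s8 values with an s32 accumulator. The helper `average_s8` is hypothetical, and the final rounding (round to nearest via `std::nearbyint`) and saturation to the s8 range are assumptions of this sketch rather than documented library behavior.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

// Hypothetical helper: average a window of s8 values using an s32 accumulator.
std::int8_t average_s8(const std::vector<std::int8_t> &window) {
    assert(!window.empty());
    std::int32_t acc = 0; // wide accumulator, cannot overflow for small windows
    for (std::int8_t v : window)
        acc += v;
    const float mean
            = static_cast<float>(acc) / static_cast<float>(window.size());
    // Assumed conversion back to s8: round to nearest, then saturate.
    const float rounded = std::nearbyint(mean);
    return static_cast<std::int8_t>(
            std::min(127.f, std::max(-128.f, rounded)));
}
```

Four values of 127 sum to 508, which does not fit in s8 but is handled without trouble by the s32 accumulator before the division brings the result back into range.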

Data Representation

Source, Destination, and Their Gradients

Like other CNN primitives, the pooling primitive expects data to be an \(N \times C \times W\) tensor for the 1D spatial case, an \(N \times C \times H \times W\) tensor for the 2D spatial case, and an \(N \times C \times D \times H \times W\) tensor for the 3D spatial case.

The pooling primitive is optimized for the following memory formats:

| Spatial | Logical tensor | Data type | Implementations optimized for memory formats |
|---|---|---|---|
| 1D | NCW | f32 |  |
| 1D | NCW | s32, s8, u8 |  |
| 2D | NCHW | f32 | dnnl_nchw ( dnnl_abcd ), dnnl_nhwc ( dnnl_acdb ), optimized^ |
| 2D | NCHW | s32, s8, u8 |  |
| 3D | NCDHW | f32 | dnnl_ncdhw ( dnnl_abcde ), dnnl_ndhwc ( dnnl_acdeb ), optimized^ |
| 3D | NCDHW | s32, s8, u8 | dnnl_ndhwc ( dnnl_acdeb ), optimized^ |

Here optimized^ means the format that comes out of any preceding compute-intensive primitive.
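The plain format tags differ only in the order in which the logical dimensions are laid out in memory. As a small sketch, assuming dense tensors without padding or blocking, the offset of logical element (n, c, h, w) under the dnnl_nchw ( dnnl_abcd ) and dnnl_nhwc ( dnnl_acdb ) orderings could be computed as follows. The function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>

// Offset of logical element (n, c, h, w) in a dense N x C x H x W tensor
// stored channel-first: w varies fastest, then h, then c, then n.
std::size_t offset_nchw(std::size_t n, std::size_t c, std::size_t h,
        std::size_t w, std::size_t C, std::size_t H, std::size_t W) {
    assert(c < C && h < H && w < W);
    return ((n * C + c) * H + h) * W + w;
}

// Same logical element stored channel-last: c varies fastest,
// then w, then h, then n.
std::size_t offset_nhwc(std::size_t n, std::size_t c, std::size_t h,
        std::size_t w, std::size_t C, std::size_t H, std::size_t W) {
    assert(c < C && h < H && w < W);
    return ((n * H + h) * W + w) * C + c;
}
```

In the channel-last ordering all channels of one pixel are adjacent in memory, whereas in the channel-first ordering each channel forms a contiguous plane.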

Post-Ops and Attributes

Implementation Limitations

Refer to Data Types for limitations related to data type support.

CPU

Different data types of source and destination in forward inference are not supported.

GPU

For the f64 data type, dnnl_pooling_max returns -FLT_MAX instead of -DBL_MAX as the output value when the pooling kernel is applied to a completely padded area.

Performance Tips

N/A

Example

Pooling Primitive Example

This C++ API example demonstrates how to create and execute a Pooling primitive in forward training propagation mode.