Visible to Intel only — GUID: GUID-F7D66404-19F0-4FDA-90FD-C0B4146BA3E0

Inner Product

General

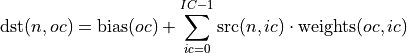

The inner product primitive (sometimes called fully connected) treats each activation in the minibatch as a vector and computes its product with a 2D weights tensor, producing a 2D tensor as output.

Forward

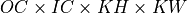

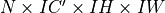

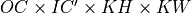

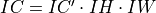

More precisely, let src, weights, bias, and dst be $N \times IC$, $OC \times IC$, $OC$, and $N \times OC$ tensors, respectively (variable names follow the standard Naming Conventions). Then:

$$\mathrm{dst}(n, oc) = \mathrm{bias}(oc) + \sum_{ic=0}^{IC-1} \mathrm{src}(n, ic) \cdot \mathrm{weights}(oc, ic)$$

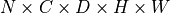

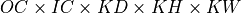
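As a minimal sketch of the formula above, the following plain C++ reference computes the forward pass on 2D tensors. It does not use oneDNN; the function name inner_product_fwd_ref and the row-major layouts (src as nc, weights as oi, dst as nc) are assumptions made for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Naive reference for the forward inner product on 2D tensors:
//   dst(n, oc) = bias(oc) + sum_{ic=0}^{IC-1} src(n, ic) * weights(oc, ic)
// Assumed layouts: src is N x IC, weights is OC x IC, dst is N x OC,
// all row-major; bias has OC elements.
std::vector<float> inner_product_fwd_ref(const std::vector<float>& src,
                                         const std::vector<float>& weights,
                                         const std::vector<float>& bias,
                                         std::size_t N, std::size_t IC,
                                         std::size_t OC) {
    assert(src.size() == N * IC);
    assert(weights.size() == OC * IC);
    assert(bias.size() == OC);
    std::vector<float> dst(N * OC);
    for (std::size_t n = 0; n < N; ++n) {
        for (std::size_t oc = 0; oc < OC; ++oc) {
            float acc = bias[oc];  // start from the bias term
            for (std::size_t ic = 0; ic < IC; ++ic)
                acc += src[n * IC + ic] * weights[oc * IC + ic];
            dst[n * OC + oc] = acc;
        }
    }
    return dst;
}
```

Each output row is the dot product of one source row with every weights row, which is why the operation is often described as a matrix multiplication by the transposed weights.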

In cases where the src and weights tensors have spatial dimensions, they are flattened to 2D. For example, if they are 4D $N \times IC \times IH \times IW$ and $OC \times IC \times KH \times KW$ tensors, then the formula above is applied with $IC$ replaced by $IC \cdot IH \cdot IW$. In such cases, the src and weights tensors must have equal spatial dimensions (e.g. $KH = IH$ and $KW = IW$ for 4D tensors).
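The flattening rule can be checked numerically with a small sketch: computing the 4D inner product directly over (ic, h, w) gives the same result as the 2D formula applied to src viewed as $N \times (IC \cdot IH \cdot IW)$ and weights viewed as $OC \times (IC \cdot IH \cdot IW)$. Both functions are hypothetical reference helpers, not oneDNN API, and they assume plain nchw / oihw layouts, for which the flattened view needs no data movement.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Direct 4D forward inner product (hypothetical reference helper).
// src: N x IC x IH x IW in nchw order; weights: OC x IC x KH x KW in oihw order.
// The kernel must cover the whole input, so KH == IH and KW == IW.
std::vector<float> inner_product_fwd_4d(const std::vector<float>& src,
                                        const std::vector<float>& weights,
                                        const std::vector<float>& bias,
                                        std::size_t N, std::size_t IC,
                                        std::size_t IH, std::size_t IW,
                                        std::size_t OC, std::size_t KH,
                                        std::size_t KW) {
    assert(KH == IH && KW == IW);  // equal spatial dimensions are required
    assert(src.size() == N * IC * IH * IW);
    assert(weights.size() == OC * IC * KH * KW);
    assert(bias.size() == OC);
    std::vector<float> dst(N * OC);
    for (std::size_t n = 0; n < N; ++n)
        for (std::size_t oc = 0; oc < OC; ++oc) {
            float acc = bias[oc];
            for (std::size_t ic = 0; ic < IC; ++ic)
                for (std::size_t h = 0; h < IH; ++h)
                    for (std::size_t w = 0; w < IW; ++w)
                        acc += src[((n * IC + ic) * IH + h) * IW + w] *
                               weights[((oc * IC + ic) * KH + h) * KW + w];
            dst[n * OC + oc] = acc;
        }
    return dst;
}

// The same computation on the flattened 2D views: src as N x K and weights
// as OC x K, where K = IC * IH * IW. In nchw / oihw order the flattened
// index of (ic, h, w) is (ic * IH + h) * IW + w in both tensors.
std::vector<float> inner_product_fwd_flat(const std::vector<float>& src,
                                          const std::vector<float>& weights,
                                          const std::vector<float>& bias,
                                          std::size_t N, std::size_t K,
                                          std::size_t OC) {
    std::vector<float> dst(N * OC);
    for (std::size_t n = 0; n < N; ++n)
        for (std::size_t oc = 0; oc < OC; ++oc) {
            float acc = bias[oc];
            for (std::size_t k = 0; k < K; ++k)
                acc += src[n * K + k] * weights[oc * K + k];
            dst[n * OC + oc] = acc;
        }
    return dst;
}
```

With $N = 1$, $IC = 2$, $IH = 1$, $IW = 2$, and $OC = 2$, both functions see exactly the same products, so they agree element by element.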

Difference Between Forward Training and Forward Inference

There is no difference between the dnnl::prop_kind::forward_training and dnnl::prop_kind::forward_inference propagation kinds.

Backward

The backward propagation computes diff_src based on diff_dst and weights.

The weights update computes diff_weights and diff_bias based on diff_dst and src.

The optimized memory formats of src and weights might be different on forward propagation, backward propagation, and weights update.
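For reference, differentiating the forward formula gives $\mathrm{diff\_src}(n, ic) = \sum_{oc} \mathrm{diff\_dst}(n, oc) \cdot \mathrm{weights}(oc, ic)$, $\mathrm{diff\_weights}(oc, ic) = \sum_{n} \mathrm{diff\_dst}(n, oc) \cdot \mathrm{src}(n, ic)$, and $\mathrm{diff\_bias}(oc) = \sum_{n} \mathrm{diff\_dst}(n, oc)$. The sketch below spells these out as plain C++ loops on 2D row-major tensors; the helper names are hypothetical and this is not how oneDNN implements the computation.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Backward data (hypothetical reference helper):
//   diff_src(n, ic) = sum_{oc} diff_dst(n, oc) * weights(oc, ic)
// Layouts: diff_dst is N x OC, weights is OC x IC, diff_src is N x IC.
std::vector<float> inner_product_bwd_data_ref(const std::vector<float>& diff_dst,
                                              const std::vector<float>& weights,
                                              std::size_t N, std::size_t IC,
                                              std::size_t OC) {
    assert(diff_dst.size() == N * OC && weights.size() == OC * IC);
    std::vector<float> diff_src(N * IC, 0.0f);
    for (std::size_t n = 0; n < N; ++n)
        for (std::size_t ic = 0; ic < IC; ++ic)
            for (std::size_t oc = 0; oc < OC; ++oc)
                diff_src[n * IC + ic] += diff_dst[n * OC + oc] * weights[oc * IC + ic];
    return diff_src;
}

// Weights update (hypothetical reference helper), returns {diff_weights, diff_bias}:
//   diff_weights(oc, ic) = sum_{n} diff_dst(n, oc) * src(n, ic)
//   diff_bias(oc)        = sum_{n} diff_dst(n, oc)
std::pair<std::vector<float>, std::vector<float>>
inner_product_bwd_weights_ref(const std::vector<float>& src,
                              const std::vector<float>& diff_dst,
                              std::size_t N, std::size_t IC, std::size_t OC) {
    assert(src.size() == N * IC && diff_dst.size() == N * OC);
    std::vector<float> diff_weights(OC * IC, 0.0f);
    std::vector<float> diff_bias(OC, 0.0f);
    for (std::size_t n = 0; n < N; ++n)
        for (std::size_t oc = 0; oc < OC; ++oc) {
            const float g = diff_dst[n * OC + oc];
            diff_bias[oc] += g;  // bias gradient reduces over the minibatch
            for (std::size_t ic = 0; ic < IC; ++ic)
                diff_weights[oc * IC + ic] += g * src[n * IC + ic];
        }
    return {diff_weights, diff_bias};
}
```

The backward data pass needs only diff_dst and weights, while the weights update needs only diff_dst and src, which matches the inputs listed above.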

Execution Arguments

When executed, the inputs and outputs should be mapped to an execution argument index as specified by the following table.

| Primitive input/output | Execution argument index |
|---|---|
| src | DNNL_ARG_SRC |
| weights | DNNL_ARG_WEIGHTS |
| bias | DNNL_ARG_BIAS |
| dst | DNNL_ARG_DST |
| diff_src | DNNL_ARG_DIFF_SRC |
| diff_weights | DNNL_ARG_DIFF_WEIGHTS |
| diff_bias | DNNL_ARG_DIFF_BIAS |
| diff_dst | DNNL_ARG_DIFF_DST |
| binary post-op | DNNL_ARG_ATTR_MULTIPLE_POST_OP(binary_post_op_position) \| DNNL_ARG_SRC_1 |
| prelu post-op | DNNL_ARG_ATTR_MULTIPLE_POST_OP(prelu_post_op_position) \| DNNL_ARG_WEIGHTS |

Implementation Details

General Notes

N/A.

Data Types

The inner product primitive supports the following combinations of data types for source, destination, weights, and bias:

| Propagation | Source | Weights | Destination | Bias |
|---|---|---|---|---|
| forward / backward | f32 | f32 | f32 | f32 |
| forward | f16 | f16 | f32, f16, u8, s8 | f16, f32 |
| forward | u8, s8 | s8 | u8, s8, s32, bf16, f32 | u8, s8, s32, bf16, f32 |
| forward | bf16 | bf16 | f32, bf16 | f32, bf16 |
| backward | f32, bf16 | bf16 | bf16 | |
| backward | f32, f16 | f16 | f16 | |
| weights update | bf16 | f32, bf16 | bf16 | f32, bf16 |
| weights update | f16 | f32, f16 | f16 | f32, f16 |
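The forward rows of the table can be read as a lookup: a combination is listed if each tensor's type appears in the same row. The sketch below encodes exactly those four forward rows; the enum dt and the function is_listed_forward_combo are hypothetical and are not oneDNN's dnnl::memory::data_type or any library query. Engine-specific restrictions, such as the CPU limitation described under Implementation Limitations, are not modeled.

```cpp
#include <cassert>
#include <vector>

// Hypothetical data type enum for this sketch; it is NOT dnnl::memory::data_type.
enum class dt { f32, f16, bf16, s32, s8, u8 };

// One forward row of the data type table: the allowed types per tensor.
struct fwd_row {
    std::vector<dt> src, wei, dst, bias;
};

static bool allows(const std::vector<dt>& allowed, dt t) {
    for (dt a : allowed)
        if (a == t) return true;
    return false;
}

// Returns true if (src, weights, dst, bias) matches one of the forward rows.
bool is_listed_forward_combo(dt src, dt wei, dt dst, dt bias) {
    static const std::vector<fwd_row> rows = {
        {{dt::f32}, {dt::f32}, {dt::f32}, {dt::f32}},
        {{dt::f16}, {dt::f16}, {dt::f32, dt::f16, dt::u8, dt::s8}, {dt::f16, dt::f32}},
        {{dt::u8, dt::s8}, {dt::s8},
         {dt::u8, dt::s8, dt::s32, dt::bf16, dt::f32},
         {dt::u8, dt::s8, dt::s32, dt::bf16, dt::f32}},
        {{dt::bf16}, {dt::bf16}, {dt::f32, dt::bf16}, {dt::f32, dt::bf16}},
    };
    for (const fwd_row& r : rows)
        if (allows(r.src, src) && allows(r.wei, wei) && allows(r.dst, dst) &&
            allows(r.bias, bias))
            return true;
    return false;
}
```

For instance, an int8 problem with u8 source, s8 weights, s32 destination, and f32 bias matches the third row, while swapping the source and weights types (s8 source, u8 weights) matches none, because the weights must be s8.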

Data Representation

Like other CNN primitives, the inner product primitive expects the following tensors:

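| Spatial | Source | Destination | Weights |
|---|---|---|---|
| 1D | $N \times C \times W$ | $N \times C$ | $OC \times IC \times KW$ |
| 2D | $N \times C \times H \times W$ | $N \times C$ | $OC \times IC \times KH \times KW$ |
| 3D | $N \times C \times D \times H \times W$ | $N \times C$ | $OC \times IC \times KD \times KH \times KW$ |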
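The shape rules above (matching input channels, equal spatial dimensions, and a destination that is always $N \times OC$) can be collected into a small validation helper. This is a sketch under the assumption that dimensions are given in logical order (N, C, [D,] [H,] W for the source and OC, IC, [KD,] [KH,] KW for the weights); the helper ip_dst_dims and the dims alias are hypothetical, not part of oneDNN.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <vector>

using dims = std::vector<std::int64_t>;

// Hypothetical shape helper: returns the destination dims {N, OC}, or
// std::nullopt if src/weights do not describe a valid inner product.
std::optional<dims> ip_dst_dims(const dims& src, const dims& wei) {
    // From 2D (no spatial dims) up to 5D (3 spatial dims), same rank for both.
    if (src.size() < 2 || src.size() > 5 || wei.size() != src.size())
        return std::nullopt;
    // Input channels must agree: C == IC.
    if (src[1] != wei[1]) return std::nullopt;
    // Spatial dimensions must be equal (e.g. KH == H and KW == W).
    for (std::size_t i = 2; i < src.size(); ++i)
        if (src[i] != wei[i]) return std::nullopt;
    // The destination is always N x OC, whatever the source rank.
    return dims{src[0], wei[0]};
}
```

For example, a $32 \times 64 \times 7 \times 7$ source with $1000 \times 64 \times 7 \times 7$ weights yields a $32 \times 1000$ destination, while $3 \times 3$ weights over the same $7 \times 7$ source are rejected.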

The memory format of the data and weights memory objects is critical for inner product primitive performance. In the oneDNN programming model, the inner product primitive is one of the few primitives that support the placeholder format dnnl::memory::format_tag::any (shortened to any from now on) and can define the data and weights memory formats based on the primitive parameters. When using any, you must first create an inner product primitive descriptor and then query it for the actual data and weights memory formats.

The table below shows the combinations of memory formats the inner product primitive is optimized for. For the destination tensor (which is always $N \times C$) the memory format is always dnnl::memory::format_tag::nc (dnnl::memory::format_tag::ab).

| Source / Destination | Weights | Limitations |
|---|---|---|
| any | any | N/A |
| dnnl_nc, dnnl_nwc, dnnl_nhwc, dnnl_ndhwc | any | N/A |
| dnnl_nc, dnnl_ncw, dnnl_nchw, dnnl_ncdhw | any | N/A |
| dnnl_nc, dnnl_nwc, dnnl_nhwc, dnnl_ndhwc | dnnl_io, dnnl_wio, dnnl_hwio, dnnl_dhwio | N/A |
| dnnl_nc, dnnl_ncw, dnnl_nchw, dnnl_ncdhw | dnnl_oi, dnnl_oiw, dnnl_oihw, dnnl_oidhw | N/A |
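The table pairs channels-last sources (nwc, nhwc, ndhwc) with weights whose input channel is innermost before the output channel (wio, hwio, dhwio), and channels-first sources with oi-style weights. One way to see why such pairings are convenient (an interpretation, not a statement about oneDNN internals): in nhwc and hwio, element (c, h, w) sits at the same flattened index $k = (h \cdot W + w) \cdot C + c$, so the inner product becomes a plain row-major GEMM with no re-indexing. The sketch checks this for the 2D-spatial case; all helper names are hypothetical and bias is omitted.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Offset of logical element (n, c, h, w) in an nhwc buffer.
std::size_t off_nhwc(std::size_t n, std::size_t c, std::size_t h, std::size_t w,
                     std::size_t C, std::size_t H, std::size_t W) {
    return ((n * H + h) * W + w) * C + c;
}

// Offset of logical element (o, i, h, w) in an hwio buffer.
std::size_t off_hwio(std::size_t o, std::size_t i, std::size_t h, std::size_t w,
                     std::size_t OC, std::size_t IC, std::size_t W) {
    return ((h * W + w) * IC + i) * OC + o;
}

// Inner product (no bias) from the logical definition, reading src as nhwc
// and weights as hwio through the offset functions above.
std::vector<float> ip_nhwc_hwio(const std::vector<float>& src, const std::vector<float>& wei,
                                std::size_t N, std::size_t C, std::size_t H,
                                std::size_t W, std::size_t OC) {
    std::vector<float> dst(N * OC, 0.0f);
    for (std::size_t n = 0; n < N; ++n)
        for (std::size_t o = 0; o < OC; ++o)
            for (std::size_t c = 0; c < C; ++c)
                for (std::size_t h = 0; h < H; ++h)
                    for (std::size_t w = 0; w < W; ++w)
                        dst[n * OC + o] += src[off_nhwc(n, c, h, w, C, H, W)] *
                                           wei[off_hwio(o, c, h, w, OC, C, W)];
    return dst;
}

// Same result as a row-major GEMM: dst(N x OC) = src(N x K) * wei(K x OC),
// with K = H * W * C, because both buffers order the reduction as (h, w, c).
std::vector<float> ip_as_gemm(const std::vector<float>& src, const std::vector<float>& wei,
                              std::size_t N, std::size_t K, std::size_t OC) {
    std::vector<float> dst(N * OC, 0.0f);
    for (std::size_t n = 0; n < N; ++n)
        for (std::size_t k = 0; k < K; ++k) {
            const float a = src[n * K + k];
            for (std::size_t o = 0; o < OC; ++o)
                dst[n * OC + o] += a * wei[k * OC + o];
        }
    return dst;
}
```

The same argument applies to nchw with oihw weights, where the shared reduction order is (c, h, w) and the weights act as the transposed right-hand matrix.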

Post-Ops and Attributes

Post-ops and attributes enable you to modify the behavior of the inner product primitive by chaining certain operations after the inner product operation. The following attributes and post-ops are supported by the inner product primitive:

| Propagation | Type | Operation | Description | Restrictions |
|---|---|---|---|---|
| forward | attribute | Scale | Scales the result of inner product by given scale factor(s) | int8 inner products only |
| forward | post-op | Eltwise | Applies an Eltwise operation to the result | |
| forward | post-op | Sum | Adds the operation result to the destination tensor instead of overwriting it | |
| forward | post-op | Binary | Applies a Binary operation to the result | General binary post-op restrictions |
| forward | post-op | PReLU | Applies a PReLU operation to the result | |
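As a sketch of how chained post-ops compose, the following applies a Sum post-op followed by a ReLU Eltwise post-op, in that order, to an already computed inner product result: $\mathrm{dst} = \mathrm{relu}(\mathrm{ip\_result} + s \cdot \mathrm{dst\_prev})$. Only the element-wise arithmetic is modeled, assuming a sum scale $s$ and no zero point; in oneDNN the chain is configured through primitive attributes, which this hypothetical helper apply_sum_then_relu does not use.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Element-wise model of a two-step post-op chain (not oneDNN code):
//   1. Sum:     v   = ip_result + sum_scale * dst_prev
//   2. Eltwise: dst = max(v, 0)   (ReLU)
std::vector<float> apply_sum_then_relu(const std::vector<float>& ip_result,
                                       const std::vector<float>& dst_prev,
                                       float sum_scale) {
    assert(ip_result.size() == dst_prev.size());
    std::vector<float> dst(ip_result.size());
    for (std::size_t i = 0; i < dst.size(); ++i) {
        const float v = ip_result[i] + sum_scale * dst_prev[i];  // Sum post-op
        dst[i] = std::max(v, 0.0f);                              // ReLU post-op
    }
    return dst;
}
```

Reversing the two steps would generally give a different result, because ReLU would then act on the inner product alone before the previous destination values are added.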

For the scales attribute, the following masks are supported by the primitive:

0, which applies one scale value to an entire tensor, and

1, which applies a scale value per output channel for the DNNL_ARG_WEIGHTS argument.

When scales masks are specified, the user must provide the corresponding scales as additional input memory objects with argument DNNL_ARG_ATTR_SCALES | DNNL_ARG_${MEMORY_INDEX} during the execution stage.
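To illustrate what the two masks mean, the sketch below multiplies a hypothetical $N \times OC$ int32 accumulator (the sum of products before any scaling) by weights scales selected with mask 0 or mask 1. Mask 1 sets bit 0, which corresponds to dimension 0 of the weights tensor, that is OC. Only the mask-to-index mapping is modeled; source scales, bias, zero points, and conversion to the destination data type are left out, and the helper name apply_weights_scales is an assumption.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: scales an N x OC int32 accumulator by weights scales.
//   mask 0: wei_scales holds 1 value, used for every element.
//   mask 1: wei_scales holds OC values; element (n, oc) uses wei_scales[oc].
std::vector<float> apply_weights_scales(const std::vector<std::int32_t>& acc,
                                        std::size_t N, std::size_t OC, int mask,
                                        const std::vector<float>& wei_scales) {
    assert(mask == 0 || mask == 1);
    assert(acc.size() == N * OC);
    assert(wei_scales.size() == (mask == 0 ? std::size_t{1} : OC));
    std::vector<float> out(N * OC);
    for (std::size_t n = 0; n < N; ++n)
        for (std::size_t oc = 0; oc < OC; ++oc) {
            const float scale = wei_scales[mask == 0 ? 0 : oc];
            out[n * OC + oc] = scale * static_cast<float>(acc[n * OC + oc]);
        }
    return out;
}
```

With mask 0 a single value is supplied, whereas mask 1 requires one value per output channel, so the scales memory passed at execution time must have OC elements.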

Implementation Limitations

Check Data Types.

The CPU engine does not support the u8 or s8 data type for dst with f16 src and weights.

Performance Tips

Use dnnl::memory::format_tag::any for the source, weights, and destination memory format tags when creating an inner product primitive to allow the library to choose the most appropriate memory format.

Example

Inner Product Primitive Example

This C++ API example demonstrates how to create and execute an Inner Product primitive.

Key optimizations included in this example:

Primitive attributes with fused post-ops;

Creation of optimized memory format from the primitive descriptor.