Intel® oneAPI Data Analytics Library Developer Guide and Reference

LASSO and Elastic Net Computation

Batch Processing

LASSO and Elastic Net algorithms follow the general workflow described in Regression Usage Model.

Training

For a description of common input and output parameters, refer to Regression Usage Model. Both LASSO and Elastic Net algorithms have the following input parameters in addition to the common input parameters:

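| Input ID | Input |
|---|---|
| weights | Optional input. Pointer to the numeric table with weights of samples. The input can be an object of any class derived from NumericTable except for PackedTriangularMatrix, PackedSymmetricMatrix, and CSRNumericTable. By default, all weights are equal to 1. |
| gramMatrix | Optional input. Pointer to the p × p numeric table with the pre-computed Gram Matrix, where p is the number of features. The input can be an object of any class derived from NumericTable except for CSRNumericTable. By default, the table is set to an empty numeric table. It is used only when the number of features is less than the number of observations. |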
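The content expected in the gramMatrix input can be illustrated with a minimal sketch. It assumes the plain product X^T X of an n × p row-major data matrix, with no centering, scaling, or sample weighting; this section does not state which of these the library applies. The DenseMatrix type and the computeGramMatrix function are hypothetical helpers written for this illustration, not part of the library API.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical helper type: row-major dense matrix,
// element (i, j) is stored at data[i * cols + j].
struct DenseMatrix {
    std::size_t rows;
    std::size_t cols;
    std::vector<double> data;

    double at(std::size_t i, std::size_t j) const { return data[i * cols + j]; }
};

// Returns the p x p Gram matrix G = X^T X of an n x p data matrix X.
// Assumption: no centering, scaling, or weighting is applied.
DenseMatrix computeGramMatrix(const DenseMatrix& x) {
    const std::size_t n = x.rows;
    const std::size_t p = x.cols;
    DenseMatrix g{p, p, std::vector<double>(p * p, 0.0)};

    // Accumulate the upper triangle one observation at a time.
    for (std::size_t i = 0; i < n; ++i) {
        for (std::size_t a = 0; a < p; ++a) {
            for (std::size_t b = a; b < p; ++b) {
                g.data[a * p + b] += x.at(i, a) * x.at(i, b);
            }
        }
    }
    // The Gram matrix is symmetric, so mirror the upper triangle.
    for (std::size_t a = 0; a < p; ++a) {
        for (std::size_t b = 0; b < a; ++b) {
            g.data[a * p + b] = g.data[b * p + a];
        }
    }
    return g;
}
```

For the 3 × 2 data matrix with rows (1, 2), (3, 4), and (5, 6), the function returns the 2 × 2 matrix with rows (35, 44) and (44, 56).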

The following tables list the parameters used in the LASSO and Elastic Net batch training algorithms:

LASSO

| Parameter | Default Value | Description |
|---|---|---|
| algorithmFPType | float | The floating-point type that the algorithm uses for intermediate computations. Can be float or double. |
| method | defaultDense | The computation method used by the LASSO regression. The only training method supported so far is the default dense method. |
| interceptFlag | True | A flag that indicates whether or not to compute the intercept term. |
| lassoParameters | A numeric table that contains the default LASSO parameter equal to 0.1. | L1 regularization coefficients. A numeric table that contains either k values (where k is the number of dependent variables) or a single value. This parameter can be an object of any class derived from NumericTable, except for PackedTriangularMatrix, PackedSymmetricMatrix, and CSRNumericTable. |
| optimizationSolver | | Optimization procedure used at the training stage. |
| optResultToCompute | 0 | The 64-bit integer flag that specifies which extra characteristics of the LASSO regression to compute. |
| dataUseInComputation | doNotUse | A flag that indicates a permission to overwrite input data. The default value, doNotUse, restricts modification of input data. |

Elastic Net

| Parameter | Default Value | Description |
|---|---|---|
| algorithmFPType | float | The floating-point type that the algorithm uses for intermediate computations. Can be float or double. |
| method | defaultDense | The computation method used by the Elastic Net regression. The only training method supported so far is the default dense method. |
| interceptFlag | True | A flag that indicates whether or not to compute the intercept term. |
| penaltyL1 | A numeric table | L1 regularization coefficient, stored in a numeric table. This parameter can be an object of any class derived from NumericTable, except for PackedTriangularMatrix, PackedSymmetricMatrix, and CSRNumericTable. |
| penaltyL2 | A numeric table | L2 regularization coefficient, stored in a numeric table. This parameter can be an object of any class derived from NumericTable, except for PackedTriangularMatrix, PackedSymmetricMatrix, and CSRNumericTable. |
| optimizationSolver | | Optimization procedure used at the training stage. |
| optResultToCompute | 0 | The 64-bit integer flag that specifies which extra characteristics of the Elastic Net regression to compute. |
| dataUseInComputation | doNotUse | A flag that indicates a permission to overwrite input data. The default value, doNotUse, restricts modification of input data. |

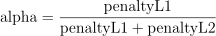

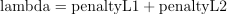

compromise between L1 (lasso penalty) and L2 (ridge-regression penalty) regularization:

control full regularization:

In addition, both LASSO and Elastic Net algorithms have the following optional results:

| Result ID | Result |
|---|---|
| gramMatrix | Pointer to the computed Gram Matrix with size p × p, where p is the number of features. |

Prediction

For a description of the input and output, refer to Regression Usage Model.

At the prediction stage, LASSO and Elastic Net algorithms have the following parameters:

| Parameter | Default Value | Description |
|---|---|---|
| algorithmFPType | float | The floating-point type that the algorithm uses for intermediate computations. Can be float or double. |
| method | defaultDense | Default performance-oriented computation method, the only method supported by the regression-based prediction. |

Examples

LASSO

C++: lasso_reg_dense_batch.cpp

Elastic Net

Performance Considerations

When the training data set has more samples than features, certain coordinates of the gradient and Hessian are computed from the components of the Gram matrix, which improves performance. When the number of features is larger than the number of observations, the cost of each iteration via the Gram matrix depends on the number of features, so the computation is performed via residual update instead [Friedman2010].

To get the best overall performance for LASSO and Elastic Net training, do the following:

- If the number of features is less than the number of samples, use a homogeneous table.
- If the number of features is greater than the number of samples, use SOA layout rather than AOS layout.
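The difference between the two layouts named above can be sketched with plain C++ containers. In an array of structures (AOS), the values of one feature are interleaved with the other features of each observation; in a structure of arrays (SOA), each feature occupies its own contiguous array. One plausible reading of the recommendation, which this section does not confirm, is that computations walking one feature at a time benefit from the contiguous per-feature storage. The types and functions below are hypothetical and do not use the library's numeric table classes.

```cpp
#include <cstddef>
#include <vector>

// AOS: one record per observation, features stored side by side.
struct Observation {
    double f0;
    double f1;
    double f2;
};

// SOA: one contiguous array per feature.
struct FeatureColumns {
    std::vector<double> f0;
    std::vector<double> f1;
    std::vector<double> f2;
};

// Sum of squares of feature f1 in AOS layout:
// consecutive values of f1 are sizeof(Observation) bytes apart.
double sumSquaresF1Aos(const std::vector<Observation>& rows) {
    double sum = 0.0;
    for (const Observation& row : rows) {
        sum += row.f1 * row.f1;
    }
    return sum;
}

// Same quantity in SOA layout:
// consecutive values of f1 are adjacent in memory.
double sumSquaresF1Soa(const FeatureColumns& columns) {
    double sum = 0.0;
    for (double value : columns.f1) {
        sum += value * value;
    }
    return sum;
}
```

Both functions compute the same result; they differ only in the memory access pattern over the feature values.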
