We have an exciting announcement: Intel’s fast-growing cloud service is now called Intel® Tiber™ AI Cloud. It is part of the Intel® Tiber™ Cloud Services portfolio and is focused on making Intel computing and software accessible at scale for AI deployments, developer ecosystem support, and benchmark testing.

Built on the foundation of Intel® Tiber™ Developer Cloud, Intel Tiber AI Cloud reflects a growing emphasis on production-scale AI deployments, with more paying customers and AI partners, while it continues to expand its developer community and open source partnerships.

Explore: Intel Tiber AI Cloud

New Enhancements

In recent months, we have introduced a series of enhancements to Intel Tiber AI Cloud:

- New compute options: Alongside last month’s Intel® Gaudi® 3 announcement, we started building out Intel’s latest AI accelerator platform in Intel Tiber AI Cloud, with general availability expected later this year. In addition, compute instances with the new 128-core Intel® Xeon® 6 CPU and pre-release Dev Kits for the AI PC with the new Intel® Core™ Ultra processors are now available for technical evaluation via the Preview catalog in Intel Tiber AI Cloud.

- Open source model and framework integrations: Intel announced Intel GPU support in the recent PyTorch* 2.4 release. Developers can now evaluate the latest Intel-supported PyTorch releases via notebooks in the Learning catalog.

- Llama 3.2, the latest version in Meta’s Llama model collection, was successfully tested on Intel Gaudi AI accelerators, Intel Xeon processors, and AI PCs with Intel Core Ultra processors, and it is ready for deployment in Intel Tiber AI Cloud.

- Expanded learning resources: New notebooks added to the Learning catalog provide access to Gaudi AI accelerators. Using these notebooks, AI developers and data scientists can execute interactive shell commands on Gaudi accelerators. In addition, the Intel cloud team partnered with DeepLearning.ai and Prediction Guard to offer a popular new LLM course supported by Gaudi 2 computing.

- Partnerships: During the Intel Vision 2024 conference, we announced Intel’s partnership with Seekr, a fast-growing AI company. Seekr is now using one of the new super-compute-scale Gaudi clusters in Intel’s AI cloud, which features more than 1,024 accelerators, to train foundation models and LLMs that help enterprises overcome inaccuracy and bias in their AI applications. Seekr’s trusted AI platform, SeekrFlow, is now available via the Software Catalog in Intel Tiber AI Cloud.

How It Works

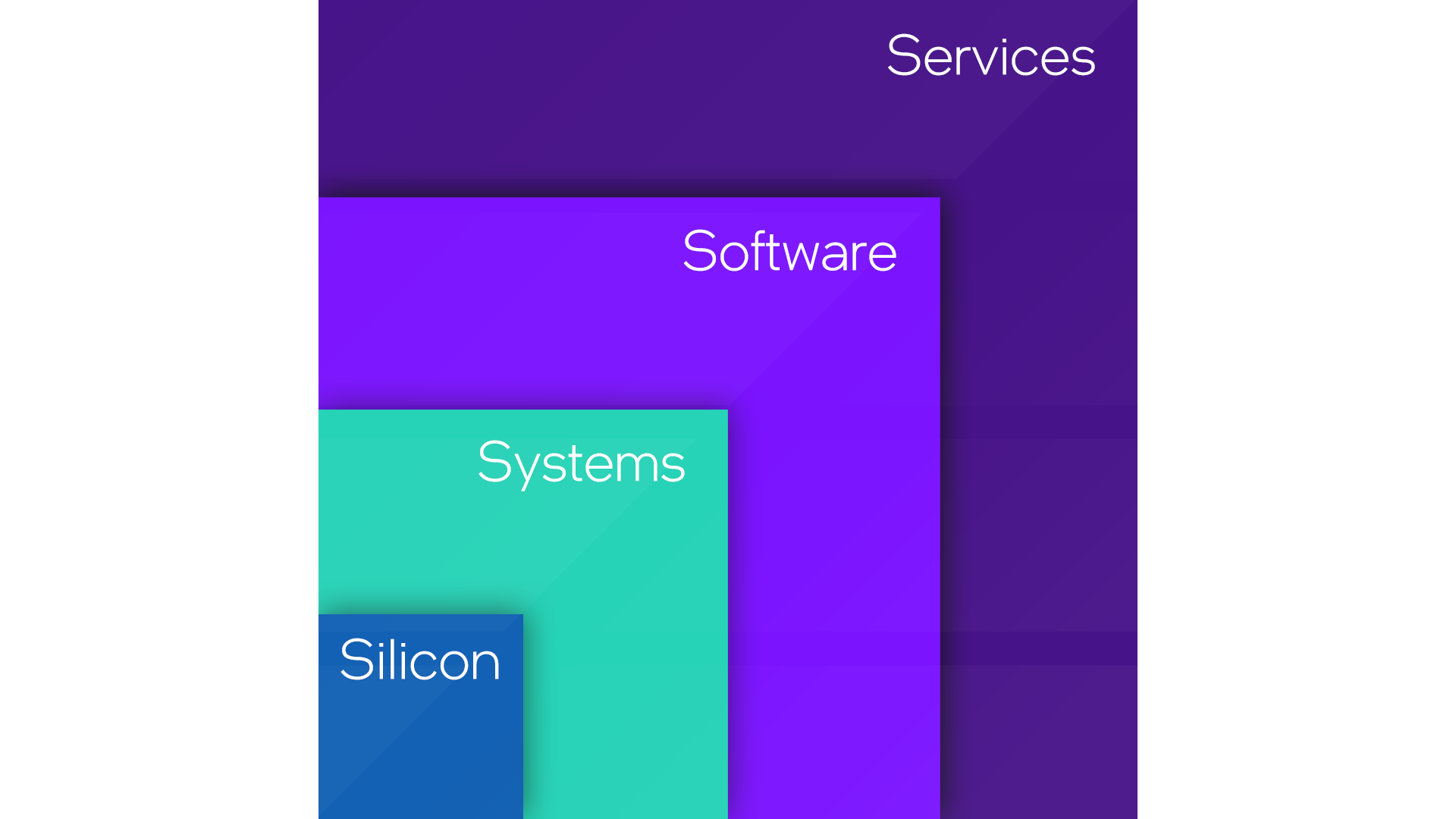

Let’s unpack what’s under the hood and cover the main building blocks of Intel’s AI cloud offering: silicon, systems, software, and services.

Silicon:

- Intel Tiber AI Cloud is first in line for the latest Intel® CPUs, GPUs, and AI accelerators, serving as Customer Zero with direct support channels into Intel engineering.

- As a result, Intel’s AI cloud can offer low-cost early access to the latest Intel hardware and software, some of which may not yet be generally available.

Systems:

- Demands on compute infrastructure are evolving rapidly as AI workloads grow in scale and performance requirements.

- Intel Tiber AI Cloud offers various computing options for deploying AI workloads, including containers, virtual machines, and dedicated systems. In addition, we recently introduced both small-scale and super-compute-scale Intel Gaudi clusters with high-speed Ethernet networking for foundation model training.

Software:

- Intel supports the growing open software ecosystem for AI and actively contributes to open source efforts such as PyTorch and the UXL Foundation.

- Intel dedicates cloud computing capacity to open source projects, so users can access the latest AI frameworks and models in Intel Tiber AI Cloud.

Services:

- Enterprise users and developers consume AI computing services differently, depending on their technical expertise, implementation requirements, and use cases.

- Intel Tiber AI Cloud offers server CLI access, curated interactive access via notebooks, and serverless API access to meet these diverse access requirements.

Why It Matters

Intel Tiber AI Cloud is a key part of Intel’s strategy to level the playing field and remove the barriers to AI adoption for startups, enterprise customers, and developers:

- Cost performance: It offers early access to the latest Intel computing with attractive cost performance for AI deployments.

- Scale: It offers a scale-out computing platform for growing AI deployments, ranging from virtual machines (VMs) and single nodes to large-scale super-computing Gaudi clusters.

- Open source: It offers access to Intel-supported open source frameworks, models, and accelerator software, ensuring portability and avoiding vendor lock-in.

What’s Next

Over the next few months, we will continue building out additional Gaudi 3 and Xeon 6 computing capacity and add new regions to expand our AI deployment footprint.

- Get started with Intel Tiber AI Cloud

- Explore the docs, tutorials, and more