7/24/2024

PyTorch* 2.4 now provides initial support¹ for Intel® Data Center GPU Max Series, which brings Intel GPUs and the SYCL* software stack into the official PyTorch stack to help further accelerate AI workloads.

¹ For GPU support and better performance, we recommend that you install Intel® Extension for PyTorch*.

Benefits

Intel GPU support gives users more GPU choices and a consistent GPU programming paradigm across both front ends and back ends. You can now run and deploy workloads on Intel GPUs with minimal code changes. This implementation generalizes the PyTorch device and runtime abstractions (device, stream, event, generator, allocator, and guard) to accommodate streaming devices. The generalization not only eases the deployment of PyTorch on ubiquitous hardware but also makes it easier to integrate other hardware back ends.
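For Intel GPUs, these generalized runtime objects are exposed under the `torch.xpu` namespace. The following is a minimal sketch, assuming a PyTorch 2.4 build with XPU support: it seeds the device generator, queues a matrix multiply on a side stream, and waits on an event before reading the result. The helper name `xpu_runtime_demo` is hypothetical, and the code falls back to the CPU when no Intel GPU is present so that it still runs elsewhere.

```python
import torch


def xpu_runtime_demo(n: int = 64) -> torch.Tensor:
    """Hypothetical helper: exercise device, generator, stream, and event on an Intel GPU."""
    if hasattr(torch, "xpu") and torch.xpu.is_available():
        device = torch.device("xpu")
        torch.xpu.manual_seed(0)              # seed the Intel GPU random-number generator
        side_stream = torch.xpu.Stream()      # a stream separate from the default one
        done = torch.xpu.Event()
        with torch.xpu.stream(side_stream):   # kernels below are queued on side_stream
            x = torch.randn(n, n, device=device)
            y = x @ x
            done.record(side_stream)          # mark the point right after the matmul
        done.synchronize()                    # block the host until that point is reached
        return y.cpu()
    # CPU fallback so the example still runs on machines without an Intel GPU
    torch.manual_seed(0)
    x = torch.randn(n, n)
    return x @ x
```

Calling `xpu_runtime_demo()` returns a 64 x 64 CPU tensor in either case; only the device that computes it differs.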

Intel GPU users also get a better experience through continuous software support built directly into PyTorch, a unified software distribution, and consistent product release timing.

Overview of Intel GPU Support

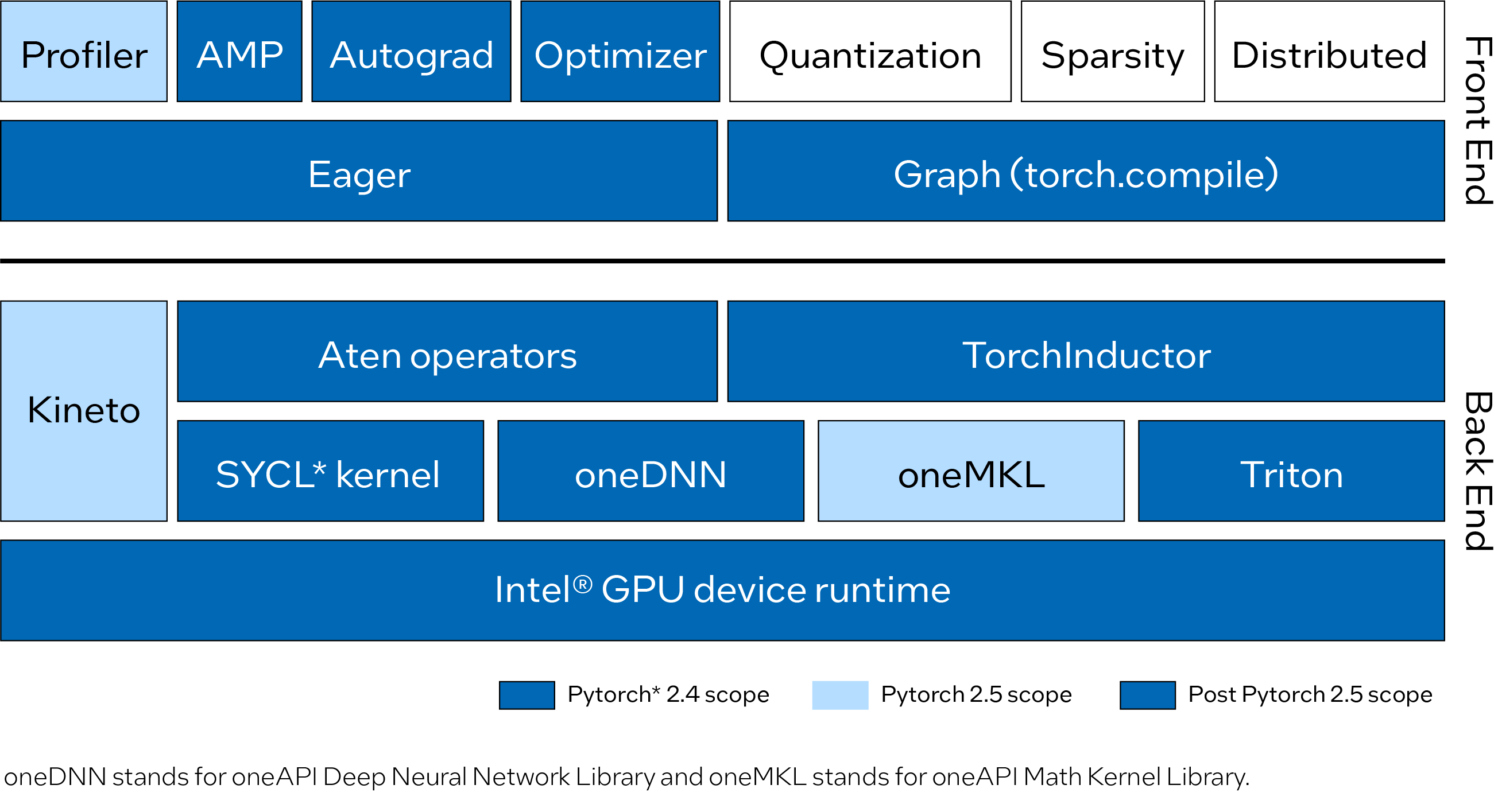

The Intel GPU support upstreamed into PyTorch provides both eager mode and graph mode support in the built-in PyTorch front end. In eager mode, commonly used ATen operators are now implemented in the SYCL programming language. The most performance-critical graphs and operators are highly optimized using the oneAPI Deep Neural Network Library (oneDNN) and the oneAPI Math Kernel Library (oneMKL). In graph mode (torch.compile), an Intel GPU back end is now enabled that implements optimizations for Intel GPUs and integrates Triton.

Essential components of Intel GPU support were added to PyTorch 2.4, including ATen operators, oneDNN, Triton, Intel GPU source build, and Intel GPU tool chain integration. Meanwhile, PyTorch Profiler (based on a Kineto and oneMKL integration) is being actively developed in preparation for the upcoming PyTorch 2.5 release. Figure 1 shows the current and planned front-end and back-end improvements for the Intel GPU support upstreamed into PyTorch.

Figure 1. Architecture roadmap for Intel GPU upstreamed to PyTorch
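The following minimal sketch shows the two execution modes described above, assuming a PyTorch 2.4 build with XPU support: the same small model runs once in eager mode and once through `torch.compile`. The model, the input sizes, and the helper name `eager_vs_compiled` are illustrative choices, not part of the release. On machines without an Intel GPU, the code falls back to the CPU.

```python
import torch
from torch import nn


def eager_vs_compiled(backend: str = "inductor"):
    """Hypothetical helper: run one model in eager mode and in graph mode on the same device."""
    use_xpu = hasattr(torch, "xpu") and torch.xpu.is_available()
    device = torch.device("xpu" if use_xpu else "cpu")
    torch.manual_seed(0)

    model = nn.Sequential(nn.Linear(16, 32), nn.ReLU(), nn.Linear(32, 4)).to(device).eval()
    # Graph mode: Dynamo captures the model; with the default Inductor backend,
    # GPU devices get generated Triton kernels and the CPU gets generated C++.
    compiled = torch.compile(model, backend=backend)

    x = torch.randn(8, 16, device=device)
    with torch.no_grad():
        eager_out = model(x)      # eager mode: ATen operators dispatched one at a time
        graph_out = compiled(x)   # graph mode: captured graph run by the chosen backend
    return eager_out.cpu(), graph_out.cpu()
```

The `backend` parameter defaults to Inductor; passing `backend="eager"` skips code generation, which is a quick way to check that a model traces correctly before comparing performance.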

Features

In addition to providing key training and inference features for Intel Data Center GPU Max Series, the PyTorch 2.4 release on Linux* keeps the same user experience as on other hardware that PyTorch supports. If you migrate code from CUDA*, you can run your existing application code on an Intel GPU with minimal changes, mainly to the device name (from cuda to xpu). For example:

```python
import torch

# CUDA code
tensor = torch.tensor([1.0, 2.0]).to("cuda")

# Code for Intel GPU
tensor = torch.tensor([1.0, 2.0]).to("xpu")
```
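Instead of editing the device string by hand for each platform, a script can also pick whichever back end is present at run time. This is a minimal sketch; `pick_device` is a hypothetical helper, and its preference order (Intel GPU, then CUDA, then CPU) is an assumption you can change.

```python
import torch


def pick_device() -> torch.device:
    """Hypothetical helper: prefer an Intel GPU (xpu), then CUDA, then fall back to CPU."""
    if hasattr(torch, "xpu") and torch.xpu.is_available():
        return torch.device("xpu")
    if torch.cuda.is_available():
        return torch.device("cuda")
    return torch.device("cpu")


device = pick_device()
tensor = torch.tensor([1.0, 2.0]).to(device)
```

The rest of the application then refers only to `device`, so the same script runs unchanged on each platform.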

PyTorch 2.4 features supported on Intel GPUs include:

- Training and inference workflows.

- Both torch.compile and basic eager-mode functionality are supported, and the Dynamo Hugging Face* benchmark runs fully in both eager and compile modes.

- Data types such as FP32, BF16, and FP16, plus automatic mixed precision (AMP).

- Runs on Linux and Intel Data Center GPU Max Series.
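The training and AMP features listed above combine in an ordinary training loop. The following is a sketch under stated assumptions: a toy regression model on synthetic data (hypothetical, for illustration only), BF16 autocast, and a CPU fallback when `torch.xpu.is_available()` returns `False`. With BF16, no gradient scaler is needed because BF16 has the same exponent range as FP32.

```python
import torch
from torch import nn


def train_steps(steps: int = 5, lr: float = 0.01) -> list[float]:
    """Hypothetical helper: run a few SGD steps under BF16 autocast and return the losses."""
    use_xpu = hasattr(torch, "xpu") and torch.xpu.is_available()
    device = torch.device("xpu" if use_xpu else "cpu")
    torch.manual_seed(0)

    model = nn.Sequential(nn.Linear(16, 32), nn.ReLU(), nn.Linear(32, 1)).to(device)
    optimizer = torch.optim.SGD(model.parameters(), lr=lr)
    loss_fn = nn.MSELoss()

    # Synthetic data: the target is the sum of the input features.
    x = torch.randn(64, 16, device=device)
    y = x.sum(dim=1, keepdim=True)

    model.train()
    losses = []
    for _ in range(steps):
        optimizer.zero_grad()
        # Autocast runs eligible ops (such as the Linear layers) in BF16.
        with torch.autocast(device_type=device.type, dtype=torch.bfloat16):
            pred = model(x)
        loss = loss_fn(pred.float(), y)  # compute the loss in FP32
        loss.backward()
        optimizer.step()
        losses.append(loss.item())
    return losses
```

For inference, the same `torch.autocast` context can wrap the forward pass inside `torch.no_grad()`.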

Get Started

- Try Intel Data Center GPU Max Series through Intel® Tiber™ Developer Cloud.

- Get a tour of the environment setup, source build, and examples.

- To learn how to create a free Standard account, see Get Started. Then do the following:

  - Sign in to the cloud console.

  - From the Training section, open the PyTorch 2.4 on Intel GPUs notebook.

  - Ensure that the PyTorch 2.4 kernel is selected for the notebook.
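Once the notebook kernel is running, a quick check can confirm that PyTorch sees the Intel GPU. This is a sketch; `describe_xpu` is a hypothetical helper name, and the device names it reports depend on the hardware.

```python
import torch


def describe_xpu() -> dict:
    """Hypothetical helper: report the PyTorch version and any visible Intel GPUs."""
    info = {"torch_version": torch.__version__, "xpu_available": False, "devices": []}
    if hasattr(torch, "xpu") and torch.xpu.is_available():
        info["xpu_available"] = True
        info["devices"] = [
            torch.xpu.get_device_name(i) for i in range(torch.xpu.device_count())
        ]
    return info


print(describe_xpu())
```

If `xpu_available` is `False` inside the notebook, the selected kernel is most likely not the PyTorch 2.4 kernel.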

Summary

Intel GPU support in PyTorch 2.4 is an initial (prototype) release that brings the first Intel GPU, the Intel Data Center GPU Max Series, into the PyTorch ecosystem for AI workload acceleration.

We are continuously improving the functionality and performance of Intel GPU support, with the goal of reaching Beta quality in the PyTorch 2.5 release. As product development continues, Intel Client GPUs will be added to the list of supported GPUs for AI PC use cases. We are also exploring more features for PyTorch 2.5, such as:

- Eager Mode: Implement more ATen operators and fully run the Dynamo TorchBench and TIMM benchmarks in eager mode.

- Torch.compile: Fully run the Dynamo TorchBench and TIMM benchmarks in compile mode with competitive performance.

- Profiler and Utilities: Enable torch.profiler to support Intel GPUs.

- PyPI wheels distribution.

- Windows and Intel Client GPU Series support.

We invite the community to evaluate these new contributions to Intel GPU support in PyTorch.

Acknowledgments

We want to thank the PyTorch open source community for their technical discussions and insights: Nikita Shulga, Jason Ansel, Andrey Talman, Alban Desmaison, and Bin Bao.

We also thank collaborators from PyTorch for their professional support and guidance.