Visible to Intel only — GUID: GUID-52F5ED9F-7713-476E-A703-C4C505AA4ACB

DynamicQuantize

General

The DynamicQuantize operation converts an f32 tensor to a quantized (s8 or u8) tensor. It supports both per-tensor and per-channel asymmetric linear quantization. The target quantized data type is specified by the data type of the dst logical tensor. The rounding mode is defined by the library implementation.

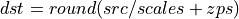

For per-tensor quantization

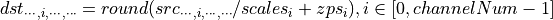

For per-channel quantization, taking channel axis = 1 as an example:
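The two quantization modes can be illustrated with a small NumPy reference sketch. It assumes the formulas take the usual asymmetric linear form, dst = round(src / scales + zps), with scales and zps broadcast along the channel axis in the per-channel case. The function name `dynamic_quantize_ref` and its parameter names are hypothetical. Round-half-to-even rounding and saturation to the dst range are assumptions as well, since the rounding mode is implementation defined.

```python
import numpy as np

def dynamic_quantize_ref(src, scales, zps=None, qtype="per_tensor",
                         axis=1, dst_dtype=np.int8):
    """Reference sketch of dst = round(src / scales + zps), not the library code."""
    src = np.asarray(src, dtype=np.float32)
    scales = np.asarray(scales, dtype=np.float32).reshape(-1)
    # zps is an optional input; assume zero points of 0 when it is absent.
    if zps is None:
        zps = np.zeros_like(scales, dtype=np.int32)
    else:
        zps = np.asarray(zps, dtype=np.int32).reshape(-1)

    if qtype == "per_channel":
        # Reshape to [1, ..., C, ..., 1] so values broadcast along `axis`.
        shape = [1] * src.ndim
        shape[axis] = src.shape[axis]
        scales = scales.reshape(shape)
        zps = zps.reshape(shape)
    elif qtype != "per_tensor":
        raise ValueError(f"unsupported qtype: {qtype}")

    # Assumption: round half to even (np.rint), then saturate to the dst range.
    q = np.rint(src / scales + zps)
    info = np.iinfo(dst_dtype)
    return np.clip(q, info.min, info.max).astype(dst_dtype)

src = np.array([[1.0, -2.0], [3.0, 4.0]], dtype=np.float32)
per_tensor = dynamic_quantize_ref(src, [0.5], [10], dst_dtype=np.uint8)
per_channel = dynamic_quantize_ref(src, [0.5, 2.0], [0, 1],
                                   qtype="per_channel", axis=1)
```

In the per-tensor call a single scale and zero point apply to every element. In the per-channel call each column of `src` uses its own pair.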

Operation attributes

| Attribute Name | Description | Value Type | Supported Values | Required or Optional |
|---|---|---|---|---|
| qtype | Specifies which quantization type is used. | string | per_tensor (default), per_channel | Optional |
| axis | Specifies the dimension on which per-channel quantization is applied. | s64 | An s64 value in the range [-r, r-1], where r = rank(src); 1 by default. A negative value counts dimensions backwards from the end. | Optional |
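The negative-index rule for the per-channel dimension can be sketched as follows. This is a minimal illustration of the range [-r, r-1] described above. The helper name `normalize_axis` is hypothetical and not part of the library.

```python
def normalize_axis(axis: int, rank: int) -> int:
    """Map an axis in [-rank, rank-1] to its non-negative equivalent."""
    if not -rank <= axis <= rank - 1:
        raise ValueError(f"axis {axis} is out of range for rank {rank}")
    # A negative axis counts dimensions backwards from the end.
    return axis + rank if axis < 0 else axis

# For a rank-4 src, axis -1 refers to the last dimension (index 3).
last_dim = normalize_axis(-1, 4)
default_dim = normalize_axis(1, 4)
```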

Execution arguments

When constructing an operation, the inputs and outputs must be provided in the index order shown below.

Inputs

| Index | Argument Name | Required or Optional |
|---|---|---|
| 0 | src | Required |
| 1 | scales | Required |
| 2 | zps | Optional |

Outputs

| Index | Argument Name | Required or Optional |
|---|---|---|
| 0 | dst | Required |

Supported data types

The DynamicQuantize operation supports the following data type combinations.

| Src | Scales | Zps | Dst |
|---|---|---|---|
| f32 | f32 | s8, u8, s32 | s8 |
| f32 | f32 | s8, u8, s32 | u8 |
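As a quick illustration of the combinations listed above, the check below encodes them in plain Python. Representing data types as strings and the helper name `is_supported_combination` are assumptions made for this sketch, not library API. The sketch also treats zps as optional, matching its status in the input list.

```python
SUPPORTED_ZPS = {"s8", "u8", "s32"}
SUPPORTED_DST = {"s8", "u8"}

def is_supported_combination(src, scales, dst, zps=None):
    """Return True if the data types match a supported combination."""
    # src and scales must both be f32.
    if src != "f32" or scales != "f32":
        return False
    # zps is optional; when given, it must be s8, u8, or s32.
    if zps is not None and zps not in SUPPORTED_ZPS:
        return False
    # dst selects the target quantized type.
    return dst in SUPPORTED_DST

ok = is_supported_combination("f32", "f32", "u8", zps="s32")
bad = is_supported_combination("f32", "f32", "s32", zps="s8")
```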