Build and Run an Intel® oneAPI DL Framework Developer Toolkit Sample Using the Command Line

An internet connection is required to download the samples for oneAPI toolkits. If you are using an offline system, download the samples from a system that is internet connected and transfer the sample files to your offline system. If you are using an IDE for development, you will not be able to use the oneAPI CLI Samples Browser while you are offline. Instead, download the samples and extract them to a directory. Then open the sample with your IDE. The samples can be downloaded from here:

Intel® oneAPI DL Framework Toolkit Code Samples

After you have downloaded the samples, follow the instructions in the README.md file.

Command line development can be done in a terminal window or through Visual Studio Code*. For details on how to use VS Code locally, see Basic Usage of Visual Studio Code with oneAPI on Linux*. To use VS Code remotely, see Remote Visual Studio Code Development with oneAPI on Linux*.

Download Samples using the oneAPI CLI Samples Browser

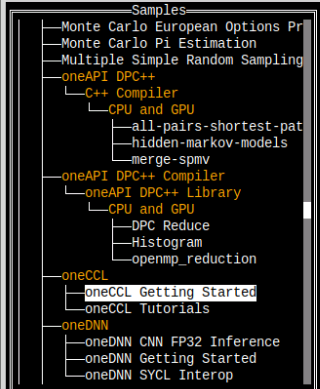

Use the oneAPI CLI Samples Browser to browse the collection of online oneAPI samples. As you browse the oneAPI samples, you can copy them to your local disk as buildable sample projects. Most oneAPI sample projects are built using Make or CMake, so the build instructions are included as part of the sample in a README file. The oneAPI CLI utility is a single-file, stand-alone executable that has no dependencies on dynamic runtime libraries. You can find the samples browser in the <install-dir>/dev-utilities/latest/bin folder on your development host system.

An internet connection is required to download the samples for oneAPI toolkits. For information on how to use this toolkit offline, see Developing with Offline Systems in the Troubleshooting section.

- Open a terminal window.

- If you did not complete the steps in Option 2: One time set up for setvars.sh in the Configure Your System section, set system variables by sourcing setvars:

For root or sudo installations:

. <install_dir>/setvars.sh

For local user installations:

. ~/intel/oneapi/setvars.sh

- If you customized the installation folder, setvars.sh is in your custom folder.

NOTE:

The setvars.sh script can also be managed using a configuration file. For more details, see Using a Configuration File to Manage Setvars.sh.

- In the same terminal window, run the application (it should be in your PATH):

oneapi-cli

The oneAPI CLI menu appears.

- Move the arrow key down to select Create a project, then press Enter.

- Select the language for your sample. For your first project, select cpp, then press Enter. The toolkit samples list appears.

- Select the sample you wish to use. For your first sample, select the CCL Getting Started Sample. After you successfully build and run the CCL Getting Started Sample, you can download more samples. Descriptions of each sample are in the Samples Table.

After you select a sample, press Enter.

- Enter an absolute or a relative directory path to create your project. Provide a directory and project name. The Project Name is the name of the sample you chose in the previous step.

Press Tab to select Create, then press Enter.

The directory path is printed below the Create button.
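The environment setup in the steps above can be checked before launching the samples browser. The following is a minimal sketch, not part of the toolkit: `find_setvars` is a hypothetical helper name, and the two folders it searches are assumptions taken from this guide (/opt/intel/oneapi is the example system-wide path, ~/intel/oneapi is the local-user path). It only reports where setvars.sh is; you still need to source the script in your own terminal session.

```shell
#!/bin/sh
# find_setvars is a hypothetical helper, not part of the toolkit.
# It prints the path of the first setvars.sh found in the given
# installation folders and returns non-zero if none contains one.
find_setvars() {
    for dir in "$@"; do
        if [ -f "$dir/setvars.sh" ]; then
            printf '%s\n' "$dir/setvars.sh"
            return 0
        fi
    done
    return 1
}

# Search the system-wide location first, then the per-user location.
# Append your custom folder if you changed the installation path.
if setvars_path=$(find_setvars /opt/intel/oneapi "$HOME/intel/oneapi"); then
    echo "Found $setvars_path"
    echo "Load it in this shell with: . $setvars_path"
else
    echo "setvars.sh not found in the default locations" >&2
fi
```

After sourcing the reported file, `command -v oneapi-cli` should print a path if the samples browser is in your PATH.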

Samples can be built and run using SYCL*, C, or C++ on a CPU and GPU. If you are using only C or C++, follow the instructions to run on CPU only.

| Sample Name | Description |
| --- | --- |
| oneCCL_Getting_Started for CPU and GPU | This C++ API example demonstrates the basics of the oneCCL programming model by invoking an allreduce operation. |
| oneDNN_Getting_Started for CPU and GPU | This C++ API example demonstrates the basics of the oneDNN programming model by using a ReLU operation. |
| oneDNN_CNN_FP32_Inference for CPU and GPU | This C++ API example demonstrates building and running a simple CNN FP32 inference against different oneDNN pre-built binaries. |
| oneDNN_SYCL_InterOp for CPU and GPU | This C++ API example demonstrates the oneDNN SYCL extensions API programming model by using a custom SYCL kernel and a ReLU operation. |

CCL Getting Started Sample for CPU and GPU

The commands below will allow you to build your first oneCCL project on a CPU or a GPU. When using these commands, replace <install_dir> with your installation path (example: /opt/intel/oneapi).

Build a Sample Project Using the Intel® oneAPI DPC++/C++ Compiler

- Using a clean console environment without exporting any default environment variables, source setvars.sh:

source <install_dir>/setvars.sh --ccl-configuration=cpu_gpu_dpcpp

- Navigate to where the sample is located (i.e., DLDevKit-code-samples):

cd <project_dir>/oneCCL_Getting_Started

- Create the build directory and navigate to it:

mkdir build
cd build

- Build the program with CMake. This will also copy the source file sycl_allreduce_cpp_test.cpp from <install_dir>/ccl/latest/examples/sycl to the build/src/sycl folder:

cmake .. -DCMAKE_C_COMPILER=clang -DCMAKE_CXX_COMPILER=dpcpp
make sycl_allreduce_cpp_test

NOTE: If you receive any error messages after running the commands above, refer to Configure Your System to ensure your environment is set up properly.

- Run the program. Replace ${NUMBER_OF_PROCESSES} with the appropriate integer and select gpu or cpu:

mpirun -n ${NUMBER_OF_PROCESSES} ./out/sycl/sycl_allreduce_cpp_test {gpu|cpu}

Example:

mpirun -n 2 ./out/sycl/sycl_allreduce_cpp_test gpu

Result Validation on CPU

Provided device type cpu
Running on Intel(R) Core(TM) i7-7567U CPU @ 3.50GHz
PASSED

Result Validation on GPU

Provided device type gpu
Running on Intel(R) Gen9
Provided device type gpu
Running on Intel(R) Gen9
PASSED
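The build steps for this sample can be collected into a small script. The sketch below is illustrative only: `run` and `build_ccl_sample` are hypothetical helper names, `DRY_RUN` is a hypothetical variable, and `./my_project` is an example path. The commands themselves are the ones listed in the steps above. By default the script only prints the commands; it assumes setvars.sh has already been sourced in the same shell before you run it with `DRY_RUN=0`.

```shell
#!/bin/sh
# Hypothetical helpers for illustration; the names are not part of the toolkit.

# run: print the command when DRY_RUN is 1 (the default), otherwise execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# build_ccl_sample PROJECT_DIR: configure and build sycl_allreduce_cpp_test.
# The && chain stops at the first failing step.
build_ccl_sample() {
    run cd "$1/oneCCL_Getting_Started" &&
    run mkdir build &&
    run cd build &&
    run cmake .. -DCMAKE_C_COMPILER=clang -DCMAKE_CXX_COMPILER=dpcpp &&
    run make sycl_allreduce_cpp_test
}

# Dry run: list the steps for a project created in ./my_project (example path).
build_ccl_sample ./my_project
```

With `DRY_RUN=0` the function changes the working directory of the calling shell to the build folder, so the `mpirun` command from the run step can be issued directly afterwards.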

Run the oneAPI CLI Samples Browser to download another sample.

oneDNN Getting Started Sample for CPU and GPU

Using the GNU C++ compiler, this sample demonstrates basic oneDNN operations on an Intel CPU and uses GNU OpenMP for CPU parallelization.

- Using a clean console environment without exporting any default environment variables, source setvars.sh:

source /opt/intel/oneapi/setvars.sh --dnnl-configuration=cpu_gomp

- Navigate to where the project is located (i.e., DLDevKit-code-samples):

cd <project_dir>/oneDNN_Getting_Started

- Create the cpu_comp directory and navigate to it:

mkdir cpu_comp
cd cpu_comp

- Build the program with CMake:

cmake .. -DCMAKE_C_COMPILER=gcc -DCMAKE_CXX_COMPILER=g++
make getting-started-cpp

NOTE: If any errors appear after building with CMake, change to root privileges and try again.

- Enable oneDNN Verbose Log:

export DNNL_VERBOSE=1

- Run the program on a CPU:

./out/getting-started-cpp

- Result Validation on a CPU:

dnnl_verbose,info,DNNL v1.0.0 (commit 560f60fe6272528955a56ae9bffec1a16c1b3204)
dnnl_verbose,info,Detected ISA is Intel AVX2
dnnl_verbose,exec,cpu,eltwise,jit:avx2,forward_inference,data_f32::blocked:acdb:f0 diff_undef::undef::f0,alg:eltwise_relu:0:0,1x3x13x13,968.354
Example passes

Run the oneAPI CLI Samples Browser to download another sample.

oneDNN CNN FP32 Inference Sample for CPU and GPU

The cnn-inference-f32-cpp sample is included in the toolkit installation.

By using the Intel® oneAPI DPC++/C++ Compiler, this sample will support CNN FP32 on an Intel® CPU and an Intel® GPU. To build with CMake and the Intel® oneAPI DPC++/C++ Compiler:

- Using a clean console environment without exporting any default environment variables, source setvars.sh:

source /opt/intel/oneapi/setvars.sh --dnnl-configuration=cpu_dpcpp_gpu_dpcpp

- Navigate to where the sample is located (i.e., DLDevKit-code-samples):

cd <sample_dir>/oneDNN_CNN_INFERENCE_FP32

- Create the dpcpp directory and navigate to it:

mkdir dpcpp
cd dpcpp

- Build the program with CMake:

cmake .. -DCMAKE_C_COMPILER=clang -DCMAKE_CXX_COMPILER=dpcpp
make cnn-inference-f32-cpp

NOTE: If any errors appear after building with CMake, change to root privileges and try again. If it still does not work, refer to Configure Your System to ensure you have completed all set up steps.

- Enable oneDNN Verbose Log:

export DNNL_VERBOSE=1

- Run the program on the following:

On a CPU:

./out/cnn-inference-f32-cpp cpu

On a GPU (if available):

./out/cnn-inference-f32-cpp gpu

Result Validation

The output shows how long it takes to run this code on CPU and GPU. For example, here is a run time of 33 seconds on CPU.

On CPU:

dnnl_verbose,info,DNNL v1.0.0 (commit 560f60fe6272528955a56ae9bffec1a16c1b3204)
dnnl_verbose,info,Detected ISA is Intel AVX2
...
/DNNL VERBOSE LOGS/
...
dnnl_verbose,exec,cpu,inner_product,gemm:jit,forward_inference,src_f32::blocked:abcd:f0 wei_f32::blocked:abcd:f0 bia_f32::blocked:a:f0 dst_f32::blocked:ab:f0,,mb1ic9216oc4096,5.50391
dnnl_verbose,exec,cpu,inner_product,gemm:jit,forward_inference,src_f32::blocked:ab:f0 wei_f32::blocked:ab:f0 bia_f32::blocked:a:f0 dst_f32::blocked:ab:f0,,mb1ic4096oc4096,2.58618
dnnl_verbose,exec,cpu,inner_product,gemm:jit,forward_inference,src_f32::blocked:ab:f0 wei_f32::blocked:ab:f0 bia_f32::blocked:a:f0 dst_f32::blocked:ab:f0,,mb1ic4096oc1000,0.667969
dnnl_verbose,exec,cpu,reorder,jit:uni,undef,src_f32::blocked:ab:f0 dst_f32::blocked:ab:f0,num:1,1x1000,0.0368652
Use time 33.22

On GPU:

dnnl_verbose,info,DNNL v1.0.0 (commit 560f60fe6272528955a56ae9bffec1a16c1b3204)
dnnl_verbose,info,Detected ISA is Intel AVX2
...
/DNNL VERBOSE LOGS/
...
dnnl_verbose,exec,gpu,reorder,ocl:simple:any,undef,src_f32::blocked:aBcd16b:f0 dst_f32::blocked:abcd:f0,num:1,1x256x6x6
dnnl_verbose,exec,gpu,inner_product,ocl:gemm,forward_inference,src_f32::blocked:abcd:f0 wei_f32::blocked:abcd:f0 bia_f32::blocked:a:f0 dst_f32::blocked:ab:f0,,mb1ic9216oc4096
dnnl_verbose,exec,gpu,inner_product,ocl:gemm,forward_inference,src_f32::blocked:ab:f0 wei_f32::blocked:ab:f0 bia_f32::blocked:a:f0 dst_f32::blocked:ab:f0,,mb1ic4096oc4096
dnnl_verbose,exec,gpu,inner_product,ocl:gemm,forward_inference,src_f32::blocked:ab:f0 wei_f32::blocked:ab:f0 bia_f32::blocked:a:f0 dst_f32::blocked:ab:f0,,mb1ic4096oc1000
dnnl_verbose,exec,gpu,reorder,ocl:simple:any,undef,src_f32::blocked:ab:f0 dst_f32::blocked:ab:f0,num:1,1x1000
Use time 106.29
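Verbose logs like the ones in this section can be summarized per primitive with standard tools. The following is a rough sketch, not an Intel utility: `summarize_verbose` is a hypothetical helper name, and it assumes that the fourth comma-separated field of an exec line is the primitive kind and that the last field, when numeric, is the execution time in milliseconds, as in the CPU listing. Lines whose last field is not a number, such as the GPU lines shown in this guide, are skipped.

```shell
#!/bin/sh
# summarize_verbose is a hypothetical helper: it reads DNNL_VERBOSE output on
# stdin and prints the summed time per primitive kind (field 4).
# Assumption: the last comma-separated field of an exec line is the execution
# time in milliseconds; lines without a numeric last field are skipped.
summarize_verbose() {
    awk -F, '
        $1 == "dnnl_verbose" && $2 == "exec" && $NF ~ /^[0-9]+(\.[0-9]+)?$/ {
            total[$4] += $NF
        }
        END {
            for (kind in total) printf "%s %.3f\n", kind, total[kind]
        }
    ' | sort
}

# Demonstration with shortened lines in the same layout as the CPU listing.
printf '%s\n' \
    'dnnl_verbose,exec,cpu,inner_product,gemm:jit,forward_inference,src,,mb1ic9216oc4096,5.50391' \
    'dnnl_verbose,exec,cpu,inner_product,gemm:jit,forward_inference,src,,mb1ic4096oc4096,2.58618' \
    'dnnl_verbose,exec,cpu,reorder,jit:uni,undef,src,num:1,1x1000,0.0368652' |
    summarize_verbose
```

In practice the function would be fed from the sample itself, for example `./out/cnn-inference-f32-cpp cpu | summarize_verbose`, with `DNNL_VERBOSE=1` exported as described in the steps.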

Run the oneAPI CLI Samples Browser to download another sample.

oneDNN SYCL Interop Sample for CPU and GPU

This oneDNN SYCL interop sample is implemented in C++ and SYCL and runs on CPU and GPU.

Using DPC++ Compiler

- Using a clean console environment without exporting any default environment variables, source setvars.sh:

source /opt/intel/oneapi/setvars.sh --dnnl-configuration=cpu_dpcpp_gpu_dpcpp

- Navigate to where the sample is located (i.e., DLDevKit-code-samples):

cd <sample_dir>/oneDNN_SYCL_InterOp

- Create the dpcpp directory and navigate to it:

mkdir dpcpp
cd dpcpp

- Build the program with CMake:

cmake .. -DCMAKE_C_COMPILER=clang -DCMAKE_CXX_COMPILER=dpcpp
make sycl-interop-cpp

- Enable oneDNN Verbose Log:

export DNNL_VERBOSE=1

- Run the program on the following:

On a CPU

./out/sycl-interop-cpp cpu

On a GPU

./out/sycl-interop-cpp gpu

Result Validation

CPU Results

dnnl_verbose,info,DNNL v1.0.0 (commit 560f60fe6272528955a56ae9bffec1a16c1b3204)
dnnl_verbose,info,Detected ISA is Intel AVX2
dnnl_verbose,exec,cpu,eltwise,jit:avx2,forward_training,data_f32::blocked:abcd:f0 diff_undef::undef::f0,alg:eltwise_relu:0:0,2x3x4x5,958.552
Example passes

GPU Results

dnnl_verbose,info,DNNL v1.0.0 (commit 560f60fe6272528955a56ae9bffec1a16c1b3204)
dnnl_verbose,info,Detected ISA is Intel AVX2
dnnl_verbose,exec,gpu,eltwise,ocl:ref:any,forward_training,data_f32::blocked:abcd:f0 diff_undef::undef::f0,alg:eltwise_relu:0:0,2x3x4x5
Example passes
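Result validation can also be done mechanically instead of by reading the log. The sketch below assumes that a successful run prints one of the two pass markers that appear in the listings of this guide ("PASSED" for the oneCCL sample, "Example passes" for the oneDNN samples); `sample_passed` is a hypothetical helper name, not a toolkit command.

```shell
#!/bin/sh
# sample_passed is a hypothetical helper: it reads program output on stdin
# and succeeds only if a pass marker from the listings above is present.
sample_passed() {
    grep -q -e 'PASSED' -e 'Example passes'
}

# Self-contained demonstration using lines copied from the GPU listing.
if printf '%s\n' 'dnnl_verbose,info,Detected ISA is Intel AVX2' 'Example passes' | sample_passed; then
    echo "sample passed"
else
    echo "sample did not report success"
fi
```

A typical use would be `./out/sycl-interop-cpp cpu | sample_passed && echo ok`. Note that the pipeline reports the status of the check, not the exit code of the sample itself.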

Run the oneAPI CLI Samples Browser to download another sample.

oneCCL Getting Started Sample for CPU Only

The commands below will allow you to build your first oneCCL project on a CPU. When using these commands, replace <install_dir> with your installation path (example: /opt/intel/oneapi).

Build a Sample Project Using the Intel® oneAPI DPC++/C++ Compiler

- Using a clean console environment without exporting any default environment variables, source setvars.sh:

source /opt/intel/oneapi/setvars.sh --ccl-configuration=cpu_icc

- Navigate to where the sample is located (i.e., DLDevKit-code-samples):

cd <project_dir>/oneCCL_Getting_Started

- Create the build directory and navigate to it:

mkdir build
cd build

- Build the program with CMake:

cmake .. -DCMAKE_C_COMPILER=gcc -DCMAKE_CXX_COMPILER=g++
make

NOTE: If you receive any error messages after running the commands above, refer to Configure Your System to ensure your environment is set up properly.

- Run the program. Replace ${NUMBER_OF_PROCESSES} with the appropriate integer and select gpu or cpu:

mpirun -n ${NUMBER_OF_PROCESSES} ./out/cpu_allreduce_cpp_test {gpu|cpu}

Example:

mpirun -n 2 ./out/cpu_allreduce_cpp_test gpu

Result Validation on CPU

Provided device type: cpu
Running on Intel(R) Core(TM) i7-7567U CPU @ 3.50GHz
Example passes

Run the oneAPI CLI Samples Browser to download another sample.