NIC Pinning

Use this feature to control how NICs are assigned to MPI ranks and threads. It applies to machines with multiple NICs per node.

To enable NIC pinning, set I_MPI_MULTIRAIL=true. This enables the use of multiple NICs on the machine and, in turn, NIC pinning. The NIC pinning information is printed in the Intel(R) MPI debug output when I_MPI_DEBUG=3 is set.

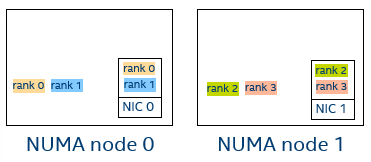
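When jobs are launched from a script, both variables can be set in the child environment. The following is a minimal Python sketch, assuming Intel MPI's mpirun is on PATH. The helper name build_launch and the application path ./my_app are hypothetical, and the sketch uses the value 1 for I_MPI_MULTIRAIL, the form used later in this section.

```python
import os


def build_launch(app, nranks, debug_level=3):
    """Return the mpirun command and environment for a multi-rail run.

    build_launch is a hypothetical helper; only I_MPI_MULTIRAIL and
    I_MPI_DEBUG come from the Intel MPI documentation.
    """
    env = dict(os.environ)
    env["I_MPI_MULTIRAIL"] = "1"           # use multiple NICs, which enables NIC pinning
    env["I_MPI_DEBUG"] = str(debug_level)  # level 3 prints the NIC pinning table
    cmd = ["mpirun", "-n", str(nranks), app]
    return cmd, env


cmd, env = build_launch("./my_app", 4)  # ./my_app is a placeholder application
print(cmd)  # ['mpirun', '-n', '4', './my_app']
```

Passing the result to subprocess.run(cmd, env=env, check=True) would then start the job with pinning enabled.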

Default Settings

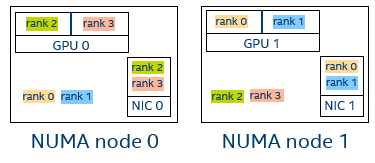

By default, when multi-rail is enabled, the available NICs are distributed among MPI ranks and threads as evenly as the hardware topology allows. The NIC closest to the pinned CPU and, when enabled, the GPU is preferred. This selects the most effective NIC, because it has the fewest PCIe hops from the CPU or GPU.
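The even distribution can be illustrated with a simplified model, assuming that ranks (and their threads) are pinned in order across the NUMA nodes and that each NIC is attached to one NUMA node. Under those assumptions, giving each contiguous block of slots the same NIC reproduces the three example outputs that follow. This is only an illustration, not Intel MPI's actual algorithm, which works from the real PCIe topology and GPU locality; block_assign is a hypothetical name.

```python
def block_assign(num_slots, num_nics):
    """Map slot i (a rank, or one thread of a rank) to a NIC index.

    Hypothetical, simplified model: slots are numbered in NUMA-node order
    and NIC k is local to the k-th NUMA node, so each contiguous block of
    slots gets its nearest NIC. Block sizes differ by at most one.
    """
    if num_nics < 1:
        raise ValueError("need at least one NIC")
    return [i * num_nics // num_slots for i in range(num_slots)]


print(block_assign(4, 2))  # [0, 0, 1, 1]  four ranks
print(block_assign(3, 2))  # [0, 0, 1]     three ranks
```

With two ranks and two threads per rank there are four slots, so the model again yields [0, 0, 1, 1], matching the order of the threaded example.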

Examples

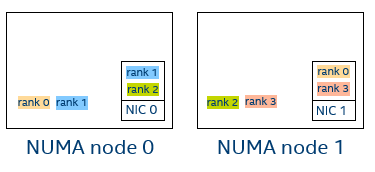

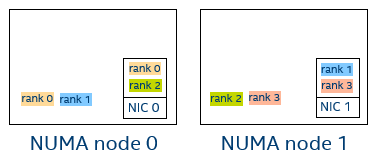

The following examples represent a machine configuration with two NUMA nodes and two NICs.

Debug output with I_MPI_DEBUG=3 (four ranks, one thread per rank):

```
[0] MPI startup(): Number of NICs: 2
[0] MPI startup(): ===== NIC pinning on host1 =====
[0] MPI startup(): Rank Thread ID Pin nic NIC ID
[0] MPI startup(): 0 0 nic0 0
[0] MPI startup(): 1 0 nic0 0
[0] MPI startup(): 2 0 nic1 1
[0] MPI startup(): 3 0 nic1 1
```

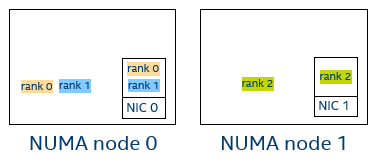

Debug output with I_MPI_DEBUG=3 (three ranks, one thread per rank):

```
[0] MPI startup(): Number of NICs: 2
[0] MPI startup(): ===== NIC pinning on host1 =====
[0] MPI startup(): Rank Thread ID Pin nic NIC ID
[0] MPI startup(): 0 0 nic0 0
[0] MPI startup(): 1 0 nic0 0
[0] MPI startup(): 2 0 nic1 1
```

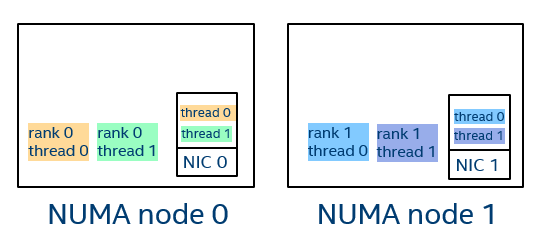

Debug output with I_MPI_DEBUG=3 (two ranks, two threads per rank):

```
[0] MPI startup(): Number of NICs: 2
[0] MPI startup(): ===== NIC pinning on host1 =====
[0] MPI startup(): Rank Thread ID Pin nic NIC ID
[0] MPI startup(): 0 0 nic0 0
[0] MPI startup(): 0 1 nic0 0
[0] MPI startup(): 1 0 nic1 1
[0] MPI startup(): 1 1 nic1 1
```
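To check the pinning from a script, the table can be parsed out of the job's output. The following is a minimal sketch, assuming the row layout shown in the examples (rank, optional thread ID, NIC name, NIC ID) and a single host. The debug format may differ between Intel MPI versions, and parse_nic_pinning is a hypothetical helper.

```python
import re

# One data row of the NIC pinning table, with or without the Thread ID
# column, e.g. "[0] MPI startup(): 0 1 nic0 0" or "[0] MPI startup(): 0 nic1 1".
ROW = re.compile(r"MPI startup\(\):\s+(\d+)(?:\s+(\d+))?\s+(\S+)\s+(\d+)\s*$")


def parse_nic_pinning(text):
    """Return {(rank, thread): (nic_name, nic_id)} from I_MPI_DEBUG output.

    Hypothetical helper. Only rows after a "NIC pinning on" banner are read;
    any other banner containing "=====" ends the table (an assumption about
    the surrounding output). Rows without a Thread ID get thread 0.
    Assumes one host: rows from several hosts would overwrite each other.
    """
    pinning = {}
    in_table = False
    for line in text.splitlines():
        if "=====" in line:
            in_table = "NIC pinning on" in line
            continue
        m = ROW.search(line) if in_table else None
        if m:
            rank, thread, name, nic_id = m.groups()
            key = (int(rank), int(thread) if thread is not None else 0)
            pinning[key] = (name, int(nic_id))
    return pinning
```

Header rows such as "Rank Thread ID Pin nic NIC ID" do not start with a number, so the pattern skips them.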

I_MPI_OFI_NIC_AFFINITY

Control the selection strategy used in NIC affinity when Intel(R) GPUs are used.

Syntax

I_MPI_OFI_NIC_AFFINITY=<strategy>

Arguments

| Value | Description |
|---|---|
| <strategy> | Specify the NIC-selection strategy. |
| cpu | Select the NIC by proximity to the CPU first and proximity to the GPU second. |
| gpu | Select the NIC by proximity to the GPU first and proximity to the CPU second. |

Description

Set this environment variable to control the selection strategy for the NIC affinity of ranks and threads.

In most cases, the default NIC-selection logic performs best. Use this environment variable only to override the default NIC-selection strategy.

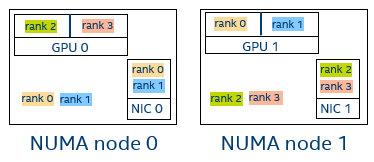
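The difference between the two strategies can be pictured as a change in sort priority. The following is a minimal sketch, assuming hypothetical hop counts from a slot's pinned CPU and GPU to each NIC and reading "first ... second" as a lexicographic order. It models only the per-slot preference, not the balancing across ranks described under Default Settings; select_nic and PRIORITY are hypothetical names.

```python
# Position of each criterion in a (cpu_hops, gpu_hops) pair, in priority order.
# PRIORITY is a hypothetical name used only for this illustration.
PRIORITY = {"cpu": (0, 1), "gpu": (1, 0)}


def select_nic(distances, strategy):
    """Return the NIC ID preferred by `strategy` for one rank or thread.

    distances maps NIC ID -> (cpu_hops, gpu_hops): hypothetical hop counts
    from the slot's pinned CPU and GPU to that NIC. The primary criterion
    decides, the secondary one breaks ties, then the lower NIC ID wins.
    """
    if strategy not in PRIORITY:
        raise ValueError(f"unknown strategy: {strategy!r}")
    first, second = PRIORITY[strategy]
    return min(
        distances,
        key=lambda nic: (distances[nic][first], distances[nic][second], nic),
    )


# A slot whose CPU sits next to NIC 0 but whose GPU sits next to NIC 1.
hops = {0: (1, 3), 1: (3, 1)}
print(select_nic(hops, "cpu"), select_nic(hops, "gpu"))  # 0 1
```

For a slot like this, the two strategies pick different NICs, which is the kind of difference visible between the two example outputs that follow.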

Examples

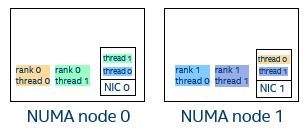

The following examples represent a machine configuration with two NUMA nodes and two NICs.

Debug output with I_MPI_DEBUG=3:

```
[0] MPI startup(): Number of NICs: 2
[0] MPI startup(): ===== NIC pinning on host1 =====
[0] MPI startup(): Rank Thread ID Pin nic NIC ID
[0] MPI startup(): 0 0 nic0 0
[0] MPI startup(): 1 0 nic0 0
[0] MPI startup(): 2 0 nic1 1
[0] MPI startup(): 3 0 nic1 1
```

Debug output with I_MPI_DEBUG=3:

```
[0] MPI startup(): Number of NICs: 2
[0] MPI startup(): ===== NIC pinning on host1 =====
[0] MPI startup(): Rank Thread ID Pin nic NIC ID
[0] MPI startup(): 0 0 nic1 1
[0] MPI startup(): 1 0 nic1 1
[0] MPI startup(): 2 0 nic0 0
[0] MPI startup(): 3 0 nic0 0
```

I_MPI_OFI_NIC_LIST

Override the default NIC selection with an explicit list of NICs.

Syntax

I_MPI_OFI_NIC_LIST=<niclist>

Arguments

| Value | Description |
|---|---|
| <niclist> | A comma-separated list of NIC IDs and/or NIC ID ranges. |
| <l>-<m> | Range of NICs with IDs from l to m. |
| <k>,<l>-<m> | The NIC with ID k and the NICs with IDs l to m. |
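The list syntax above can be expanded into a flat sequence of NIC IDs. The following is a minimal sketch, assuming ranges include both endpoints ("from l to m") and keep the order in which they are written. It rejects descending ranges such as 3-1, a choice made for this illustration only; parse_nic_list is a hypothetical name.

```python
def parse_nic_list(niclist):
    """Expand "<k>,<l>-<m>" syntax into a flat list of NIC IDs, in order.

    Hypothetical helper: ranges are treated as inclusive, and descending
    ranges raise ValueError (an assumption, not documented behavior).
    """
    ids = []
    for item in niclist.split(","):
        item = item.strip()
        if "-" in item:
            low, high = (int(part) for part in item.split("-", 1))
            if low > high:
                raise ValueError(f"descending range: {item}")
            ids.extend(range(low, high + 1))
        else:
            ids.append(int(item))
    return ids


print(parse_nic_list("0,2-3"))  # [0, 2, 3]
```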

Description

Set this environment variable to explicitly control the NIC selection.

Define a list of NIC IDs that maps local ranks to NICs. The list should contain at least as many entries as there are local ranks. When multithreading is enabled, it should contain at least as many entries as the number of local ranks × the number of threads per rank. Each value should be between 0 and the ID of the last NIC in the node. The process or thread with index i is pinned to the i-th NIC in the list.
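The rules above can be sketched as a length check, a range check, and an index lookup. This sketch rests on two assumptions. First, it assumes that the threads of one rank occupy consecutive list positions (slot index = rank × threads per rank + thread), which matches the row order of the threaded pinning tables in this section. Second, it assumes that logical NIC IDs run from 0 to the number of NICs minus 1. map_slots is a hypothetical name.

```python
def map_slots(nic_ids, num_ranks, threads_per_rank, num_nics):
    """Return {(rank, thread): NIC ID} for a parsed NIC list.

    Hypothetical helper. Assumes slot index = rank * threads_per_rank + thread
    and that valid logical NIC IDs are 0 .. num_nics - 1.
    """
    needed = num_ranks * threads_per_rank
    if len(nic_ids) < needed:
        raise ValueError(f"list has {len(nic_ids)} entries, need {needed}")
    for nic in nic_ids:
        if not 0 <= nic < num_nics:
            raise ValueError(f"NIC ID {nic} outside 0..{num_nics - 1}")
    return {
        (rank, thread): nic_ids[rank * threads_per_rank + thread]
        for rank in range(num_ranks)
        for thread in range(threads_per_rank)
    }


print(map_slots([1, 0, 0, 1], num_ranks=2, threads_per_rank=2, num_nics=2))
# {(0, 0): 1, (0, 1): 0, (1, 0): 0, (1, 1): 1}
```

Under this ordering, a list of 1,0,0,1 for two ranks with two threads each would produce the assignment shown in the last example of this section; the list actually used for that example is not stated.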

The NIC ID is not the absolute NIC number but the logical NIC index that Intel(R) MPI assigns to the NIC. You can view the logical indices of the available NICs in the NIC pinning output with I_MPI_DEBUG=3.

For example, if a node has three NICs registered on the machine as cxi2, cxi1, and cxi0, in that order, they are assigned the logical IDs 0 (cxi2), 1 (cxi1), and 2 (cxi0). Setting the environment variable to 0,2,1 for a 3-process run results in Rank 0 using cxi2, Rank 1 using cxi0, and Rank 2 using cxi1.
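The example can be checked with a few lines of Python, assuming, as the text states, that logical IDs follow the registration order of the NICs. resolve_nics is a hypothetical helper.

```python
def resolve_nics(registered, nic_list):
    """Map each rank to a NIC name (hypothetical helper).

    registered lists NIC names in the order they are registered on the
    machine, so a NIC's logical ID is its position in that list. Rank i
    uses the NIC whose logical ID is the i-th entry of nic_list.
    """
    return {rank: registered[nic_id] for rank, nic_id in enumerate(nic_list)}


# The three-NIC example from the text: cxi2 -> 0, cxi1 -> 1, cxi0 -> 2.
mapping = resolve_nics(["cxi2", "cxi1", "cxi0"], [0, 2, 1])
print(mapping)  # {0: 'cxi2', 1: 'cxi0', 2: 'cxi1'}
```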

This environment variable is only relevant when you enable the multi-rail capability with I_MPI_MULTIRAIL=1.

In most cases, the default NIC-selection logic performs best. Use this environment variable only to override the default NIC-selection list.

Examples

Debug output with I_MPI_DEBUG=3:

```
[0] MPI startup(): Number of NICs: 2
[0] MPI startup(): ===== NIC pinning on host1 =====
[0] MPI startup(): Rank Pin nic NIC ID
[0] MPI startup(): 0 nic1 1
[0] MPI startup(): 1 nic0 0
[0] MPI startup(): 2 nic0 0
[0] MPI startup(): 3 nic1 1
```

Debug output with I_MPI_DEBUG=3:

```
[0] MPI startup(): Number of NICs: 2
[0] MPI startup(): ===== NIC pinning on host1 =====
[0] MPI startup(): Rank Pin nic NIC ID
[0] MPI startup(): 0 nic0 0
[0] MPI startup(): 1 nic1 1
[0] MPI startup(): 2 nic0 0
[0] MPI startup(): 3 nic1 1
```

Debug output with I_MPI_DEBUG=3:

```
[0] MPI startup(): Number of NICs: 2
[0] MPI startup(): ===== NIC pinning on host1 =====
[0] MPI startup(): Rank Thread ID Pin nic NIC ID
[0] MPI startup(): 0 0 nic1 1
[0] MPI startup(): 0 1 nic0 0
[0] MPI startup(): 1 0 nic0 0
[0] MPI startup(): 1 1 nic1 1
```