Improve MPI Performance by Hiding Latency

Fabio Baruffa, PhD, senior software applications engineer, Intel Corporation

Scaling MPI applications on large HPC systems requires efficient communication patterns. Whenever possible, the application should overlap communication and computation to hide the communication latency. Previous articles discussed how to use the nonblocking collective operations provided by Intel® MPI Library to speed up communication and collective I/O (see Hiding Communication Latency Using MPI-3 Non-Blocking Collectives). These improvements rely on asynchronous progress thread support, which allows the Intel MPI Library to perform communication and computation in parallel. This article focuses on nonblocking, point-to-point communication (mainly the MPI_Isend and MPI_Irecv functions) and shows how an efficient ghost cell exchange mechanism for a domain decomposition code can hide communication latency in MPI applications.

Benchmark the Point-to-Point Overlap

To test the ability of Intel MPI Library to overlap communication and computation, we implemented a simple ping-pong benchmark in which the sleep function simulates computation and the communication uses the nonblocking send (MPI_Isend) and receive (MPI_Irecv). This is similar to the approach used by Wittmann (and others) in Asynchronous MPI for the Masses.

Set up the benchmark so that the first task initiates the communication with MPI_Isend, sleeps for time Tcpu to simulate computation, and then posts an MPI_Wait, while the second task posts an MPI_Irecv. This exchange is repeated to collect enough statistical data (in our case, 100 iterations).
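The structure of one benchmark iteration can be imitated without an MPI installation. The following is a minimal sketch, not the benchmark used for the measurements in this article: a Python helper thread stands in for the asynchronous progress thread, time.sleep stands in for both the computation and the message transfer, and the names timed_iteration and mean_time are hypothetical.

```python
import threading
import time


def timed_iteration(t_cpu, t_comm, overlap):
    """Return the wall time of one 'send, compute, wait' iteration.

    time.sleep(t_cpu) plays the role of the computation and
    time.sleep(t_comm) the role of the message transfer (an assumption
    of this toy model; no real MPI call is made).
    """
    start = time.perf_counter()
    if overlap:
        # The helper thread imitates an asynchronous progress thread.
        transfer = threading.Thread(target=time.sleep, args=(t_comm,))
        transfer.start()      # analogous to MPI_Isend returning immediately
        time.sleep(t_cpu)     # simulated computation
        transfer.join()       # analogous to MPI_Wait
    else:
        # Modelled as no progress until the wait: transfer follows compute.
        time.sleep(t_cpu)
        time.sleep(t_comm)
    return time.perf_counter() - start


def mean_time(t_cpu, t_comm, overlap, iterations=5):
    """Average the iteration time over several repetitions."""
    samples = [timed_iteration(t_cpu, t_comm, overlap)
               for _ in range(iterations)]
    return sum(samples) / len(samples)


if __name__ == "__main__":
    print("no overlap:", round(mean_time(0.05, 0.05, overlap=False), 3))
    print("overlap:   ", round(mean_time(0.05, 0.05, overlap=True), 3))
```

With equal simulated compute and transfer times, the overlapped variant should take roughly half as long as the serialized one, although exact timings depend on the machine.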

Measure the total time Tt of the benchmark and the time of the compute part Tcpu. Without asynchronous progress, the total time is Tt = Tcpu + Tc, where Tc is the communication time. When asynchronous progress is enabled, the total time becomes Tt = max(Tcpu, Tc), showing an overlap between computation and communication.
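These two relations can be written as a small timing model. The sketch below is illustrative only. The function names are hypothetical, and the overlap metric (the time that was hidden divided by the smaller of Tcpu and Tc) is an assumption made here, not a definition taken from Intel MPI Library.

```python
def total_time(t_cpu, t_comm, async_progress):
    """Idealized total time Tt of one benchmark iteration."""
    if async_progress:
        return max(t_cpu, t_comm)   # communication overlaps computation
    return t_cpu + t_comm           # communication follows computation


def overlap_fraction(t_total, t_cpu, t_comm):
    """Share of the hideable time that was actually hidden (0.0 to 1.0).

    Assumed metric: at most min(t_cpu, t_comm) can be hidden, and the
    hidden time is the gap between the serialized and measured totals.
    """
    hideable = min(t_cpu, t_comm)
    if hideable == 0:
        return 0.0
    hidden = t_cpu + t_comm - t_total
    return max(0.0, min(1.0, hidden / hideable))


if __name__ == "__main__":
    for progress in (False, True):
        tt = total_time(2.0, 1.0, progress)
        print(progress, tt, overlap_fraction(tt, 2.0, 1.0))
```

For Tcpu = 2 and Tc = 1, the model gives Tt = 3 without asynchronous progress and Tt = 2 with it, which is an overlap of 100% under this metric.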

With the release_mt version of Intel MPI Library, enable the asynchronous progress thread feature by setting the environment variable I_MPI_ASYNC_PROGRESS=1 (export I_MPI_ASYNC_PROGRESS=1).

For more details, see Intel MPI Library Developer Guide for Linux* and Intel MPI Library Developer Reference for Linux.

By default, one progress thread is generated per MPI task.

Figure 1 shows the results of our ping-pong benchmark. Different colors correspond to different message sizes for the Isend/Irecv communication, from 16 MB (blue lines) to 64 MB (green lines). Smaller messages might be sent without blocking, even with blocking calls, because of the eager protocol for MPI communication. The two lines with the same color correspond to the cases with and without asynchronous progress enabled (triangle and circle markers, respectively). The effectiveness of overlapping communication and computation is clearly visible in the behavior of the 16 MB async, 32 MB async, and 64 MB async lines. In the horizontal portions, communication dominates the total time and the compute time is invisible in the timings. For larger compute times (represented by the x-axis), the computation dominates the total time: the communication is hidden, Tt = Tcpu, and the overlap of computation and communication reaches 100%.

Figure 1. Overlap latency versus the working time for different message sizes. This experiment uses two nodes with one MPI process per node.

Ghost Cell Exchange in Domain Decomposition

To better understand the performance benefit of the asynchronous progress threads, let's look at the behavior of a typical domain decomposition application that uses a ghost cell exchange mechanism. Figure 2 shows an example of two-dimensional domain partitioning into four blocks, each assigned to a different MPI process (denoted by different colors). The white cells with colored dots are the cells that each process computes independently. The colored cells at the borders of each subdomain are the ghost cells that must be exchanged with neighboring MPI processes.

Figure 2. Example of two-dimensional domain partitioning
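To make the partitioning concrete, the following sketch maps an MPI rank to its position in a process grid and lists the ranks it would exchange ghost cells with. It assumes a row-major rank layout and a non-periodic domain; the function names rank_to_coords and neighbors are hypothetical and are not taken from the benchmark code.

```python
def rank_to_coords(rank, px, py):
    """Map a rank to (row, column) in a px-by-py process grid.

    Row-major ordering is an assumption of this sketch.
    """
    return divmod(rank, py)


def neighbors(rank, px, py):
    """Return the neighbouring rank in each direction, or None at the edge."""
    row, col = rank_to_coords(rank, px, py)
    candidates = {
        "north": (row - 1, col),
        "south": (row + 1, col),
        "west": (row, col - 1),
        "east": (row, col + 1),
    }
    result = {}
    for name, (r, c) in candidates.items():
        inside = 0 <= r < px and 0 <= c < py
        # Outside the process grid there is no partner (non-periodic domain).
        result[name] = r * py + c if inside else None
    return result


if __name__ == "__main__":
    # Four blocks arranged as a 2 x 2 grid, as in Figure 2.
    for rank in range(4):
        print(rank, neighbors(rank, 2, 2))
```

In the 2 x 2 case each process has exactly two neighbors, so each posts two sends and two receives per halo exchange.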

The benchmark application solves the diffusive heat equation in three dimensions, using 2D domain decomposition with a stencil of one element in each direction. The general update scheme can be summarized as follows:

- Copy data of the ghost cells to send buffers

- Halo exchange with Isend/Irecv calls

- Compute (part 1): Update the inner field of the domain

- MPI_Waitall

- Copy data from receive buffers to the ghost cells

- Compute (part 2): Update the halo cells

- Repeat N times

The computation exhibits strong scaling with the number of MPI processes used to run the simulation. The copy step is needed to fill the buffers used for the data exchange. In terms of simulation time, the overhead of copying is negligible. The halo exchange initiates the communication of the ghost cells, which are not used during the computation of the inner field. Therefore, the inner field can be updated asynchronously with respect to the halo exchange. The outer boundary of the domain is ignored for simplicity.
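The split into an inner update and a halo update can be checked on a small example. The sketch below is a deliberately simplified one-dimensional analogue with two subdomains, not the three-dimensional benchmark: the message exchange is replaced by plain variable assignments, and ALPHA, update, step_reference, and step_split are hypothetical names and values chosen for illustration.

```python
ALPHA = 0.1  # diffusion number (hypothetical value, small enough to be stable)


def update(left, centre, right):
    """Explicit three-point diffusion update of one cell."""
    return centre + ALPHA * (left - 2.0 * centre + right)


def step_reference(u):
    """Update a whole 1D field at once; the two outer cells stay fixed."""
    new = list(u)
    for i in range(1, len(u) - 1):
        new[i] = update(u[i - 1], u[i], u[i + 1])
    return new


def step_split(a, b):
    """Same update on two neighbouring subdomains a | b (owned cells only)."""
    # Step 1: copy the border cells to send buffers.
    send_a, send_b = a[-1], b[0]
    # Step 2: the Isend/Irecv calls would be posted here.
    # Step 3, compute (part 1): cells whose stencil needs no ghost value.
    new_a, new_b = list(a), list(b)
    for i in range(1, len(a) - 1):
        new_a[i] = update(a[i - 1], a[i], a[i + 1])
    for i in range(1, len(b) - 1):
        new_b[i] = update(b[i - 1], b[i], b[i + 1])
    # Steps 4 and 5: after the wait, copy receive buffers to ghost cells.
    ghost_a, ghost_b = send_b, send_a
    # Step 6, compute (part 2): the border cells that read a ghost value.
    new_a[-1] = update(a[-2], a[-1], ghost_a)
    new_b[0] = update(ghost_b, b[0], b[1])
    return new_a, new_b


if __name__ == "__main__":
    field = [float(i * i) for i in range(8)]
    a, b = field[:4], field[4:]
    for _ in range(3):
        field = step_reference(field)
        a, b = step_split(a, b)
    print(a + b == field)
```

Because part 1 never reads a ghost value, it can run while the exchange is in flight, and the split scheme still reproduces the single-domain update.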

Strong Scaling Benchmark

On a multi-node system, we measured how the number of iterations per second changes with increasing resources. Each node is a two-socket, Intel® Xeon® Gold 6148 processor system. Figure 3 shows the results of strong scaling, where the problem size is fixed to a 64 x 64 x 64 grid and the number of nodes increases. The difference between the blue and orange lines shows the performance improvement if we overlap communication and computation using the asynchronous progress thread implementation of Intel MPI Library. The improvement is significant, up to 1.9x for the eight-node case. This is confirmed by plotting the computation and communication times (Figure 4). The blue lines correspond to the standard MPI, while the orange lines correspond to the asynchronous progress thread implementation. The compute and the communication time are represented in dashed and solid lines, respectively. As the number of nodes increases, the benefit isn’t as high because the computation per MPI process becomes smaller and the communication overhead dominates the application.

Figure 3. The total number of iterations computed per second versus the number of nodes of the system (higher is better). Grid size: 64 x 64 x 64. Two MPI processes per node.

Figure 4. A breakdown of the computation and communication times. Lower is better. Grid size: 64 x 64 x 64. Two MPI processes per node.

Increase the Number of MPI Processes per Node

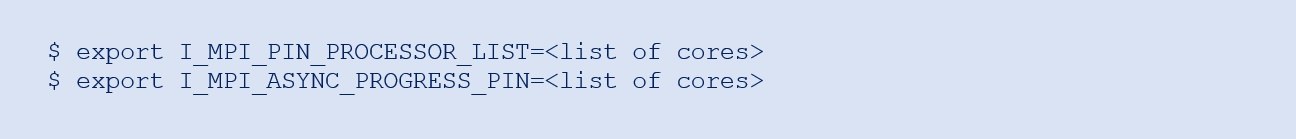

For higher numbers of MPI processes per node, we increase the grid size to 128 x 128 x 128 so that there is enough computation to overlap with the communication of the ghost cells. Figures 5 and 6 show the number of iterations per second versus the number of nodes for 10 and 20 MPI processes per node, respectively. For larger numbers of MPI processes, we need to balance the available resources. Intel MPI Library lets us pin the MPI processes and the asynchronous progress threads to specific CPU cores using the I_MPI_PIN_PROCESSOR_LIST and I_MPI_ASYNC_PROGRESS_PIN environment variables (the values we used are listed in the captions of Figures 5 and 6).

Using these environment variables to pin each asynchronous progress thread close to its corresponding MPI process gives the best performance. We observed high speedups in both cases, up to 2.2x for the 16-node case with 10 MPI processes per node.
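The pin lists quoted in the captions of Figures 5 and 6 follow a regular pattern: each progress thread sits on an even-numbered core and its MPI process on the next core. The sketch below generates such lists under that assumption for a 40-core node (2 x 20 cores, as in the test configuration). The helper name pin_settings is hypothetical, and whether adjacent core IDs are physically close depends on how a given system numbers its cores.

```python
def pin_settings(procs_per_node, cores_per_node=40):
    """Build comma-separated pin lists for processes and progress threads.

    Assumed layout: cores are split into equal strides; the progress
    thread takes the first core of each stride and the MPI process the
    one right after it.
    """
    if procs_per_node < 1:
        raise ValueError("need at least one MPI process per node")
    stride = cores_per_node // procs_per_node
    if stride < 2:
        raise ValueError("need at least two cores per MPI process")
    progress_cores = [k * stride for k in range(procs_per_node)]
    process_cores = [core + 1 for core in progress_cores]
    return {
        "I_MPI_PIN_PROCESSOR_LIST": ",".join(map(str, process_cores)),
        "I_MPI_ASYNC_PROGRESS_PIN": ",".join(map(str, progress_cores)),
    }


if __name__ == "__main__":
    for name, value in pin_settings(10).items():
        print(f"export {name}={value}")
```

For 10 processes per node this prints the two export lines given in the caption of Figure 5.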

Figure 5. Total number of iterations computed per second versus the number of nodes of the system. Higher is better. Grid size: 128 x 128 x 128. Ten MPI processes per node. We set export I_MPI_PIN_PROCESSOR_LIST=1,5,9,13,17,21,25,29,33,37 and export I_MPI_ASYNC_PROGRESS_PIN=0,4,8,12,16,20,24,28,32,36 to balance resources and pin the asynchronous thread close to the corresponding MPI process.

Figure 6. Total number of iterations computed per second versus the number of nodes of the system. Higher is better. Grid size: 128 x 128 x 128. Twenty MPI processes per node. We set export I_MPI_PIN_PROCESSOR_LIST=1,3,5,7,9,11,13,15,17,19,21,23,25,27,29,31,33,35,37,39 and export I_MPI_ASYNC_PROGRESS_PIN=0,2,4,6,8,10,12,14,16,18,20,22,24,26,28,30,32,34,36,38 to balance resources and pin the asynchronous thread close to the corresponding MPI process.

Weak Scaling Benchmark

For the last performance test, we performed a weak scaling investigation. Figure 7 reports the performance speedup from overlapping communication with computation as we increase the grid size by a factor of two along with the number of nodes, up to 128. The computation size and the number of MPI processes per node (four) are kept constant. The overall benefit is significant, up to a 32% performance improvement for the largest test.

Figure 7. Performance speedups for the weak scaling benchmark. Each bar shows the speedup using the asynchronous progress thread. Higher is better.

Conclusion

This investigation focused on overlapping communication and computation for nonblocking point-to-point MPI functions using the asynchronous progress thread control that Intel MPI Library provides. We showed the performance benefit of this implementation for a typical domain decomposition code that uses the ghost cell exchange mechanism. Applications with similar communication patterns based on the nonblocking Isend/Irecv scheme could observe similar speedups and scaling behavior.

References

Configuration: Testing by Intel as of February 14, 2020. Node configuration: 2x Intel Xeon Gold 6148 processor at 2.40 GHz, 20 cores per CPU, 96 GB per node (nodes connected by the Intel® Omni-Path Fabric), Intel MPI Library 2019 update 4, release_mt, asynchronous progress control enabled via export I_MPI_ASYNC_PROGRESS=1, export I_MPI_ASYNC_PROGRESS_THREADS=1.

Intel’s compilers may or may not optimize to the same degree for non-Intel microprocessors for optimizations that are not unique to Intel microprocessors. These optimizations include SSE2, SSE3, and SSSE3 instruction sets and other optimizations. Intel does not guarantee the availability, functionality, or effectiveness of any optimization on microprocessors not manufactured by Intel. Microprocessor-dependent optimizations in this product are intended for use with Intel microprocessors. Certain optimizations not specific to Intel microarchitecture are reserved for Intel microprocessors. Please refer to the applicable product User and Reference Guides for more information regarding the specific instruction sets covered by this notice. Notice revision #20110804.
