6/10/2024

oneMKL Overview

Many computing workloads in science, finance, enterprise, and communications rely on advanced math libraries to efficiently handle linear algebra (BLAS, LAPACK, SPARSE), vector math, Fourier transforms, random number generation, and even solvers for linear equations or analysis. oneAPI Math Kernel Library (oneMKL) is a complete and comprehensive package of math functions and solvers, based on the oneAPI Specification for oneMKL. This specification defines the SYCL/DPC++ interfaces for performance math library functions.

- Intel implementation of the specification is named oneAPI Math Kernel Library (oneMKL). It is provided as a free binary-only distribution, and is included in the Intel® oneAPI Base Toolkit. oneMKL is highly optimized for Intel CPU and Intel GPU hardware.

- The oneAPI Math Kernel Library (oneMKL) Interfaces Project is an open-source implementation of the specification. The project goal is to demonstrate how the SYCL/DPC++ interfaces documented in the oneMKL specification can be implemented for any math library and work for any target hardware. This opens it up to being used on a wide variety of computational devices: CPUs, GPUs, FPGAs, and other accelerators.

NOTE: While the open-source implementation may not yet be the full implementation of the specification, the goal is to build it out over time. We encourage the community to contribute to this project and help to extend support to multiple hardware targets and other math libraries. For more information, refer to oneMKL Interfaces - Readme, Frequently Asked Questions.

Both implementations allow oneMKL to share in the vision of oneAPI and provide freedom of choice for targeted hardware platform configurations, using a common codebase for different accelerated compute platforms through source code portability and performance portability.

oneMKL Software Architecture

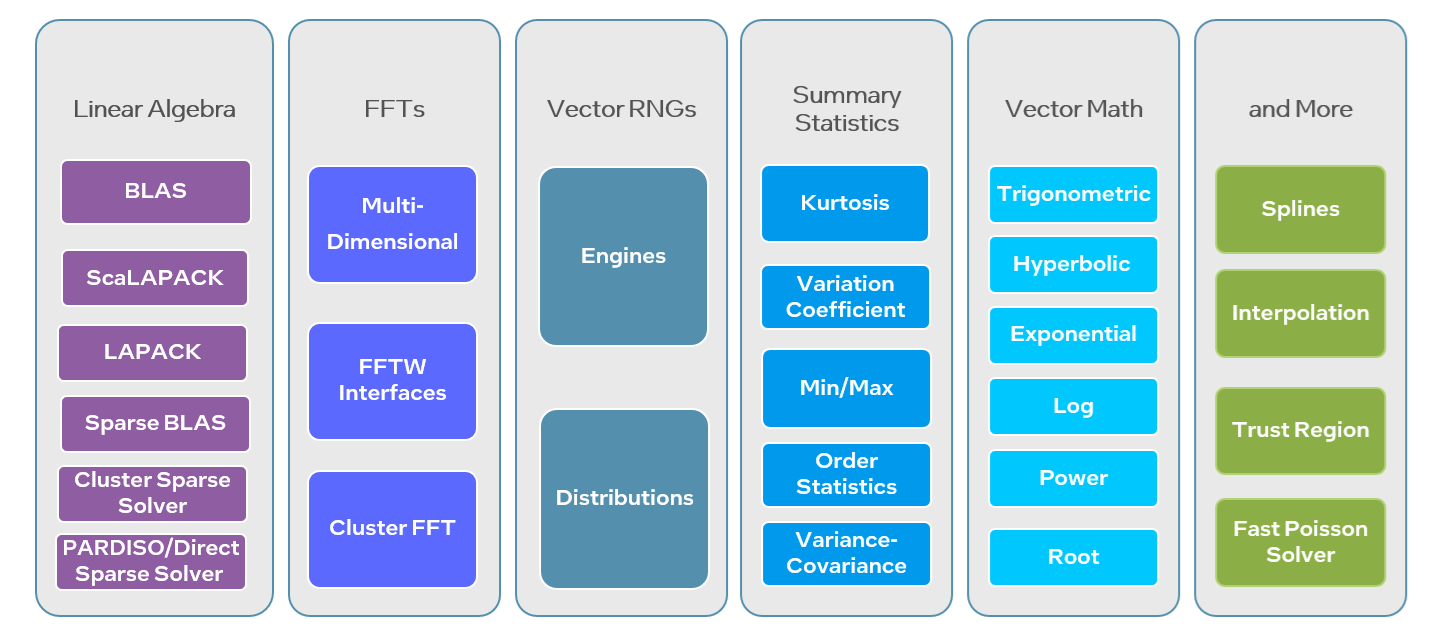

The functionality included in the oneAPI specification is subdivided into the following domains:

- Dense Linear Algebra (BLAS, LAPACK)

- Sparse Linear Algebra (SPARSE)

- Discrete Fourier Transforms (FT)

- Random Number Generators (RNG)

- Vector Math

Supported domains for oneMKL include Linear Algebra, including BLAS and LAPACK operations, Sparse Linear Algebra Functions (SPARSE), Fast Fourier Transforms (FFT), Random Number Generator Functions (RNG), Data Fitting, Vector Math, Summary Statistics.

Supported domains for oneMKL Interfaces currently include: BLAS, LAPACK, RNG, DFT, SPARSE_BLAS.

Figure 1. Intel® oneAPI Math Kernel Library Function Domain

How It Works

oneMKL GPU Offload Model

The General Purpose GPU (GPGPU) compute model consists of a host connected to one or more compute devices. Each compute device consists of many GPU Compute Engines (CE), also known as Execution Units (EU) or Xe Vector Engines (XVE). A host program and a set of kernels execute within the context set by the host. The host interacts with these kernels through a command queue.

Figure 2. GPU Execution Model Overview

When a kernel-enqueue command submits a kernel for execution, the command defines an N-dimensional index space. A kernel-instance consists of the kernel, the argument values associated with the kernel, and the parameters that define the index space. When a compute device executes a kernel-instance, the kernel function executes for each point in the defined index space or N-dimensional range.

Synchronization can also occur at the command level, where the synchronization can happen between commands in host command-queues. In this mode, one command can depend on execution points in another command or multiple commands.

Other types of synchronization based on memory-order constraints inside a program include Atomics and Fences. These synchronization types control how a memory operation of any particular work-item is made visible to another, which offers micro-level synchronization points in the data-parallel compute model.

oneMKL directly takes advantage of this basic execution model, which is implemented as part of Intel® Graphics Compute Runtime for oneAPI Level Zero and OpenCL™ Driver.

oneMKL SYCL API Basics

Although oneMKL supports automatic GPU offload dispatch with OpenMP pragmas, we are going to focus on its support for SYCL queues.

SYCL is a royalty-free, cross-platform abstraction layer that enables code for heterogeneous and offload processors to be written using ISO C++ or newer. It provides APIs and abstractions to find devices (e.g. CPUs, GPUs, FPGAs) on which code can be executed and to manage data resources and code execution on those devices.

Being open-source and part of the oneAPI spec, the oneMKL SYCL API provides the perfect vehicle for migrating CUDA proprietary library function APIs to an open standard.

oneMKL uses C++ namespaces to organize routines by mathematical domain. All oneMKL objects and routines are contained within the oneapi::mkl base namespace. The individual oneMKL domains use a secondary namespace layer as follows:

|

namespace |

oneMKL domain or content |

|---|---|

|

oneapi::mkl |

oneMKL base namespace, contains general oneMKL data types, objects, exceptions, and routines. |

|

oneapi::mkl::blas |

Dense linear algebra routines from BLAS and BLAS-like extensions. The oneapi::mkl::blas namespace should contain two namespaces, column_major and row_major, to support both matrix layouts. |

|

oneapi::mkl::lapack |

Dense linear algebra routines from LAPACK and LAPACK-like extensions. |

|

oneapi::mkl::sparse |

Sparse linear algebra routines from Sparse BLAS and Sparse Solvers. |

|

oneapi::mkl::dft |

Discrete and fast Fourier transformations. |

|

oneapi::mkl::rng |

Random number generator routines. |

|

oneapi::mkl::vm |

Vector mathematics routines, e.g., trigonometric, exponential functions acting on elements of a vector. |

oneMKL class-based APIs, such as those in the RNG and DFT domains, require a sycl::queue as an argument to the constructor or another setup routine. The execution requirements for computational routines from the previous section also apply to computational class methods.

To assign a target GPU device and control device usage, a sycl::device instance can be assigned. Such a device instance can then be partitioned into subdevices, if supported by the underlying device architecture.

The oneMKL SYCL API is designed to allow asynchronous execution of computational routines; this facilitates concurrent usage of multiple devices in the system. Each computational routine enqueues work to be performed on the selected device and may (but is not required to) return before execution completes.

sycl::buffer objects automatically manage synchronization between kernel launches linked by a data dependency (either read-after-write, write-after-write, or write-after-read). oneMKL routines are not required to perform any additional synchronization of sycl::buffer arguments.

When Unified Shared Memory (USM) pointers are used as input to, or output from, a oneMKL routine, it becomes the calling application’s responsibility to manage possible asynchronicity. To help the calling application, all oneMKL routines with at least one USM pointer argument also take an optional reference to a list of input events, of type std::vector<sycl::event>, and have a return value of type sycl::event representing computation completion:

sycl::event mkl::domain::routine(..., std::vector<sycl::event> &in_events = {});

All oneMKL functions are host thread safe.

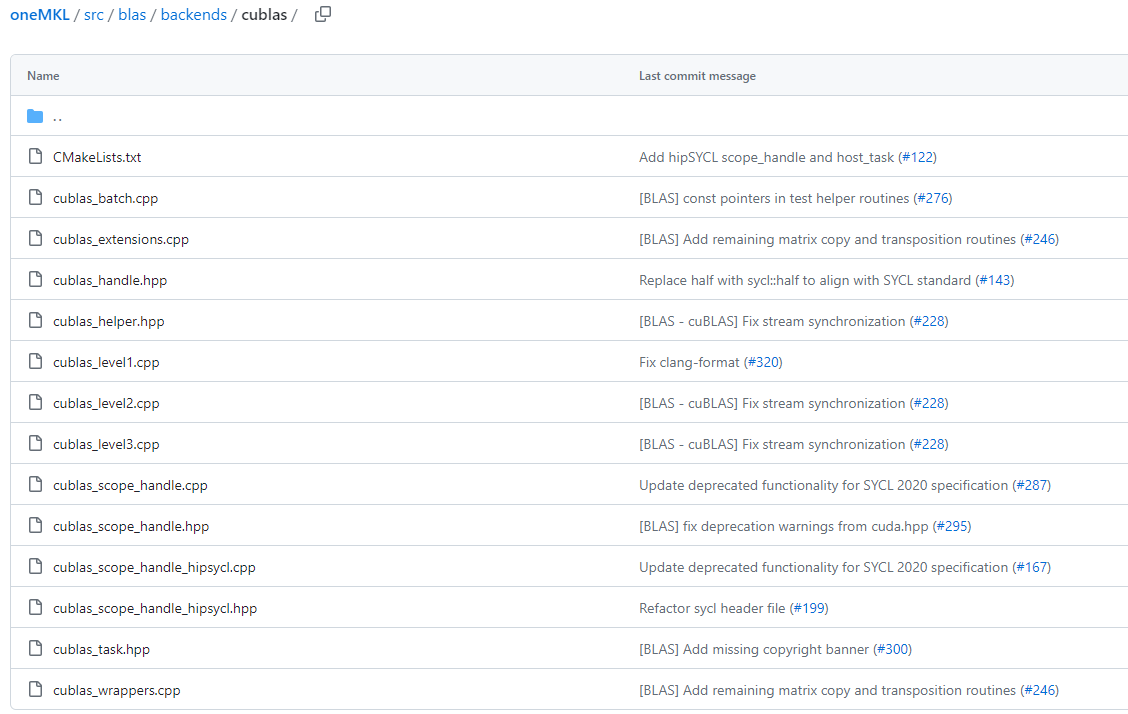

oneMKL SYCL API Source Code

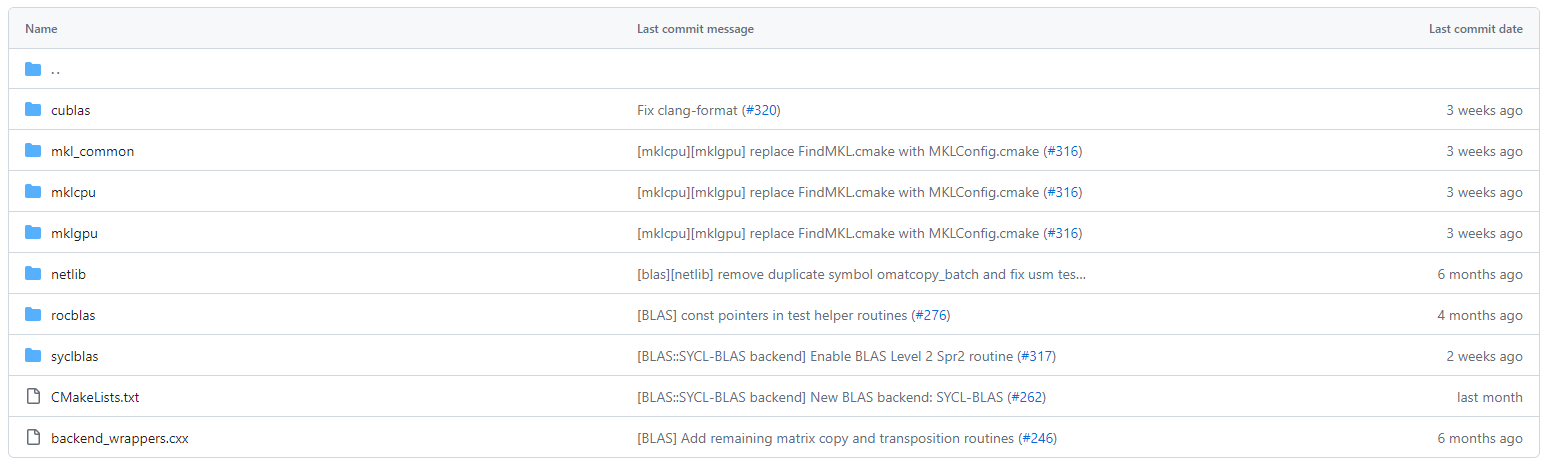

The oneMKL SYCL API source code is available on the oneAPI GitHub repository. It is under continuous active development. Backend mapping and wrappers for equivalent CUDA, AMD ROCm* and SYCL implementation for the domains BLAS, DFT, LAPACK, and RND are provided as well:

Figure 3. Available oneMKL BLAS Compatibility Backends

This open, backend architecture is what enables the oneMKL SYCL API to be applicable to a wide range of offload devices, including (but not limited to) Intel CPUs, GPUs, and accelerators.

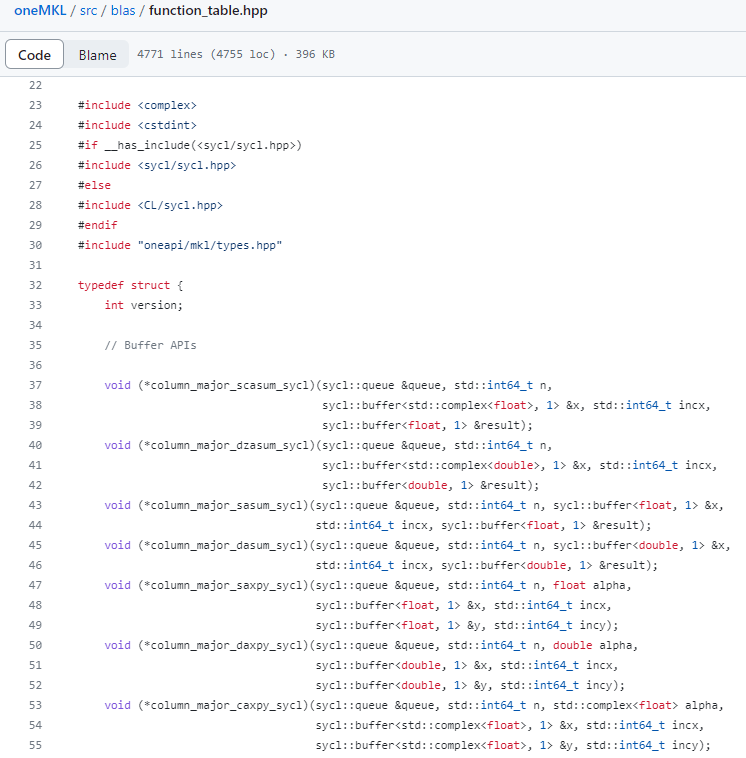

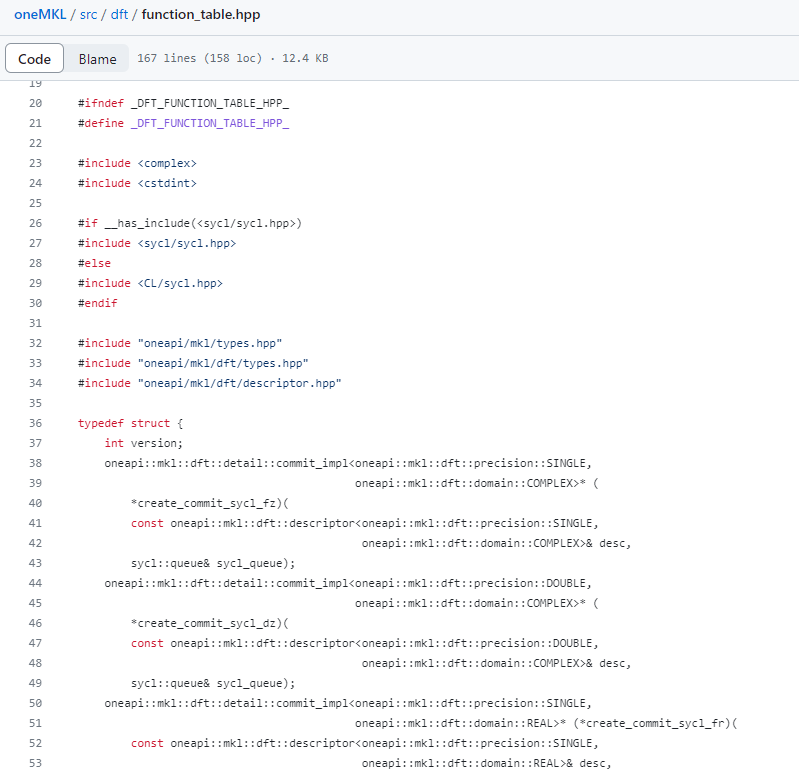

For the complete function table in each domain you can reference the respective function_table.hpp header file:

Figure 4. oneMKL BLAS Function Table Header File

Figure 5. oneMKL FFT Function Table Header File

Support of 3rd Party GPUs

This open, backend architecture is what enables the oneMKL SYCL API to be applicable to a wide range of offload devices, including non-Intel hardware like NVIDIA or AMD GPUs.

If your program targets non-Intel GPUs, you should install the appropriate GPU drivers or plug-ins to compile your program to run on AMD* or NVIDIA* GPUs:

-

- To use an AMD GPU, use the oneAPI for AMD GPUs plugin.

- To use an NVIDIA GPU, use the oneAPI for NVIDIA GPUs plugin.

CUDA Compatibility

Library Wrappers

To get a detailed perspective on how oneMKL functionality maps to a specific backend, you can simply go to the specific backend directory of interest and inspect the respective {backend}_wrappers.cpp file.

Figure 6. oneMKL - cuBLAS Compatibility Backend File Listing

In the cuBLAS* example in Figure 6, the file in question is cublas_wrappers.cpp.

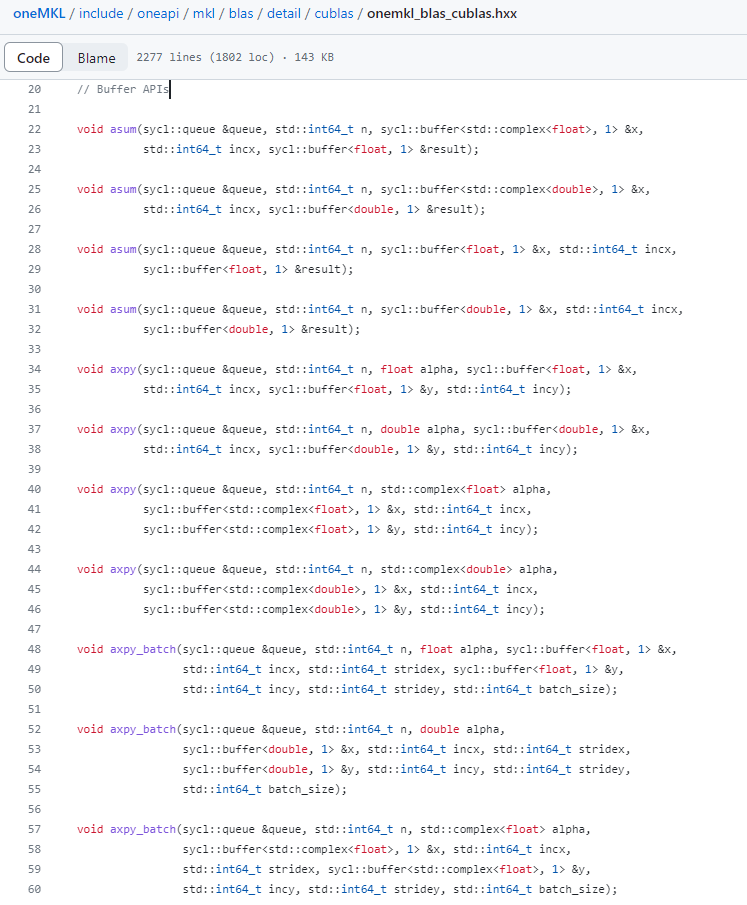

The actual mapping implementation that you will find in a corresponding header includes files called onemkl_{domain}_cu{domain}.hpp and onemkl_{domain}_cu{domain}.hxx.

As before, we are using cuBLAS as the reference for our repository source screenshot in Figure 7. That said, the very same applies for cuFFT*, cuSolver* / LAPACK, and cuRAND*.

Figure 7. oneMKLBLAS - cuBLAS Compatibility Function Headers

See also: Random Number Generation with cuRAND* and oneMKL

Supported CUDA Functions

You can get the list of supported CUDA functions after you install the CUDA-to-SYCL migration tool as explained in the next section and run the following command:

dpct --query-api-mapping=<cuda_function>

CUDA-to-SYCL Migration

CUDA code migration to SYCL helps you to simplify heterogeneous computing for math functions that support CUDA-compatible backends and proprietary NVIDIA hardware while simultaneously freeing your code to run on multi-vendor hardware.

This section describes CUDA->SYCL migration tools, process and options, focusing on math library migration.

Get the Software

Intel and oneAPI provide streamlined tools for CUDA-to-SYCL migration.

- If you plan to use Intel-branded software implementation and target Intel hardware, consider deploying the latest Intel® oneAPI Base Toolkit to get all necessary Intel components at once, including:

- DPC++ Compatibility tool (migration tool)

- Intel® oneAPI DPC++/C++ Compiler

- Intel® oneMKL library

- Intel® VTune™ Profiler (recommended for post-migration code optimization)

- If you plan to use open-source software and target, for example, Nvidia or AMD GPUs, consider deploying the corresponding tools:

- SYCLomatic migration tool available from the GitHub repository; follow the build steps described in its Readme.

- oneMKL Interfaces available from the GitHub repository; follow the build instructions described in the Get Started document.

- A compiler that supports the DPC++/SYCL -specific extensions used in code. Required to compile the output SYCL code. To select a proper compiler for your application, follow the guidelines in oneMKL Specification.

- If your program targets AMD or NVIDIA GPUs, install the appropriate plug-ins to compile your program:

- To use an AMD* GPU, install the oneAPI for AMD GPUs plugin.

- To use an NVIDIA* GPU, install the oneAPI for NVIDIA GPUs plugin

Practice with Code Samples

The CUDA-to-SYCL migration training offers a series of learning samples to master the SYCL migration process, starting with the most basic CUDA examples and then going to more complex CUDA projects using different features and libraries.

The Migrate from CUDA to C++ with SYCL portal also presents a collection of technical information regarding the use of available automated migration tools, including many guided CUDA-to-SYCL code examples. Most interesting for a deeper understanding of migrating CUDA library code to oneMKL are the following:

- Migrating the MonteCarloMultiGPU from CUDA* to SYCL* (Source Code) that provides a guided example for cuRAND to oneMKL and SYCL migration.

- Guided cuBLAS Examples for SYCL Migration that provides a similarly detailed reference for migration of linear algebra and GEMM routines.

- CUDA-to-SYCL Migration Training Jupyter Notebook GitHub.

cuBLAS Migration Code Sample

Let us have a closer look at the migration of the cuBLAS Library - APIs Examples in the NVIDIA/CUDA Library GitHub repository.

The samples source code (SYCL) is migrated from CUDA for offloading computations to a GPU/CPU. The sample demonstrates how to:

- migrate code to SYCL

- optimize the migration steps

- improve processing time

The source files for each of the cuBLAS samples show the usage of oneMKL cuBLAS routines. All are basic programs containing the usage of a single function.

This sample contains two sets of sources in the following folders:

|

Folder Name |

Description |

|---|---|

|

01_sycl_dpct_output |

Contains output of Intel DPC++ Compatibility Tool used to migrate SYCL-compliant code from CUDA code. |

|

02_sycl_dpct_migrated |

Contains SYCL to CUDA migrated code generated by using the Intel DPC++ Compatibility Tool with the manual changes implemented to make the code fully functional. |

The functions are classified into three levels of difficulty. There are 52 samples:

- Level 1 samples (14 samples)

- Level 2 samples (23)

- Level 3 samples (15)

Build the cuBLAS Migration Sample

Note: If you have not already done so, set up your CLI environment by sourcing the setvars script in the root of your oneAPI installation.

Linux*:

• For system wide installations: . /opt/intel/oneapi/setvars.sh

• For private installations: . ~/intel/oneapi/setvars.sh

• For non-POSIX shells, like csh, use the following command:

bash -c 'source <install-dir>/setvars.sh ; exec csh'

For more information on configuring environment variables, see Use the setvars Script with Linux* or macOS*.

- On your Linux* shell, change to the sample directory.

- Build the samples.

By default, this command sequence builds the version of the source code in the 02_sycl_dpct_migrated folder.$ mkdir build $ cd build $ cmake .. $ make - Run the cuBLAS Migration sample.

Run the programs on a CPU or GPU. Each sample uses a default device, which in most cases is a GPU.

• Run the samples in the 02_sycl_dpct_migrated folder.

• $ make run_amax - Example Output:

[ 0%] Building CXX object 02_sycl_dpct_migrated/Level-1/CMakeFiles/amax.dir/amax.cpp.o

[100%] Linking CXX executable amax

[100%] Built target amax

A

1.00 2.00 3.00 4.00

=====

result

4

Understand Code Transformation

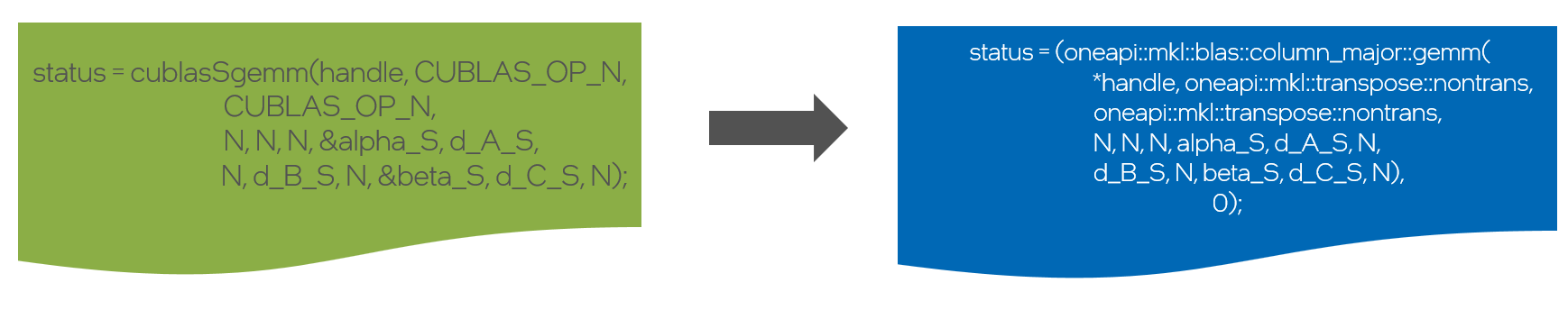

Upon completion, this example will give you a good representation of the flow of code migration for cuBLAS function calls to oneMKL.

You can have a close look at the resulting migrated code for all the included examples at this source location (02_sycl_dpct_migrated).

A typical conversion will look similar to the example for SGEMM in Figure 8:

Figure 8. cuBLAS to oneMKL call conversion for GEMM

The next sections explain how to perform CUDA-to-SYCL migration using the SYCLomatic open-source migration tool. If you plan to work with Intel-branded solutions, follow the instructions on the CUDA to SYCL Migration Portal.

Prepare for Migration

Detailed instructions on preparing your CUDA project for migration are provided in the SYCLomatic Developer Guide. You should take the following steps:

- Prepare Your Cuda Project

- Configure the Migration Tool

- Record Compilation Commands

- Set Up Revision Control

- Run Analysis Mode

Migrate Your Code

Follow the guidelines provided in the SYCLomatic Developer Guide.

Review the Migrated Code

Follow the guidelines provided in the SYCLomatic Developer Guide to review and modify the migrated code, as necessary.

Build and Validate the New SYCL Project

After you have completed any manual migration steps, build your converted code and validate your new code base, as described in the SYCLomatic Developer Guide.

IMPORTANT! If your program targets AMD* or NVIDIA GPUs, you should install the appropriate Codeplay* plugin for the target GPU before compiling. See Compile for AMD or NVIDIA GPU for more information.

Optimize Your Code

Optimize your migrated code, as recommended in the SYCLomatic Developer Guide.

- For detailed information about optimizing your code for AMD GPUs, refer to the Codeplay AMD GPU Performance Guide.

- For detailed information about optimizing your code for NVIDIA GPUS, refer to the Codeplay NVIDIA GPU Performance Guide.

Additional Resources

- oneAPI Math Kernel Library (oneMKL) Interfaces

- oneMKL - Data Parallel C++ Developer Reference

- Migrating the MonteCarloMultiGPU from CUDA* to SYCL* (Source Code)

- Guided cuBLAS Examples for SYCL Migration

- Migrate from CUDA* to C++ with SYCL*

- Intel® DPC++ Compatibility Tool

- SYCLomatic Overview

Ready to Migrate your Math Library Calls?

oneMKL is available standalone or as part of the Intel® oneAPI Base Toolkit. In addition to these download locations, they are also available through partner repositories. Detailed documentation is also available online.