Accelerate pandas Code Using Intel® Distribution of Modin*

By reusing your existing pandas code, you can scale data analytics to larger datasets without crashing your computer. This demo uses Intel® Distribution of Modin*, a key component of AI Tools, along with real-world examples to make striking performance comparisons.

Highlights

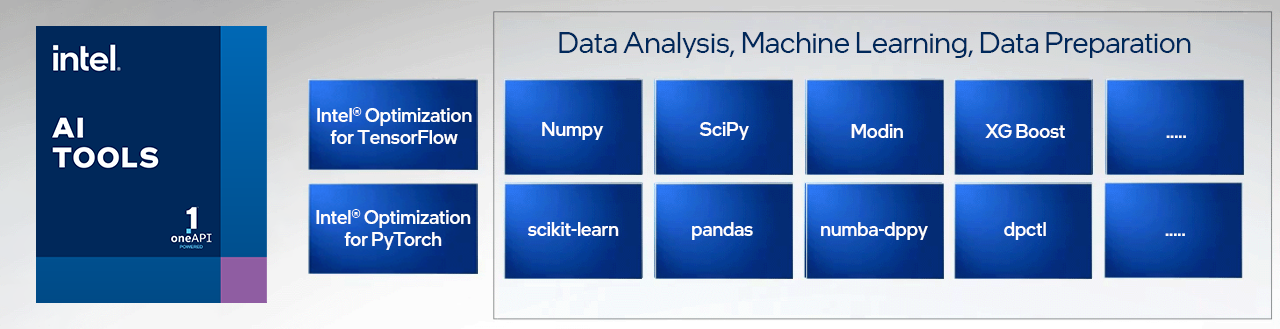

| 00:41.90 | Tools, resources, and utilities that are part of AI Tools include optimized deep learning frameworks and Python* packages for data analysis, machine learning, and data preparation. |

| 01:04.28 |

Intel Distribution of Modin supports all pandas APIs and works seamlessly with the Python ecosystem. When you call Modin instead of pandas, your code looks exactly the same.

|

| 01:33.92 |

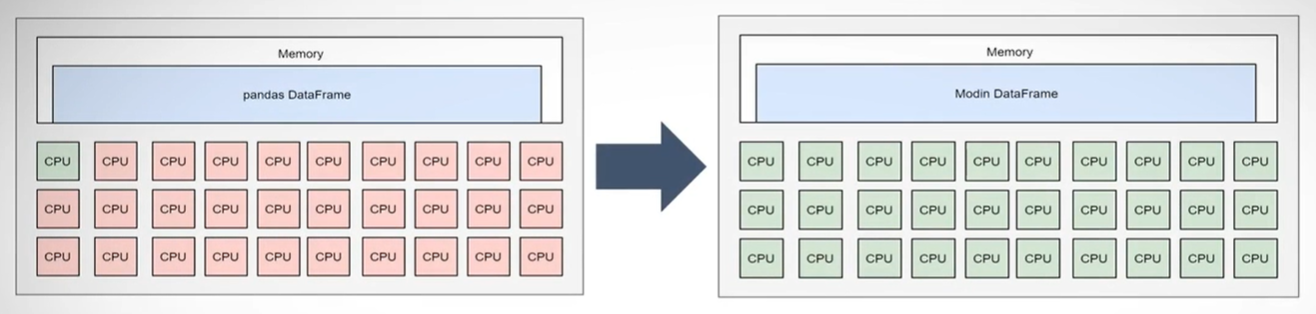

Compare core processing of pandas and Intel Distribution of Modin: If pandas runs on one core of the CPU, Modin can use all the cores out of the box without tuning. No supercomputer needed. The performance increase happens because of the underlying oneAPI optimized libraries and other optimized APIs.

|

| [02:37.60] | The initial performance demo is run on an Intel® Xeon processor. Performance is compared by generating a syntheic array, about 1.5 GB, and is saved as a file. Reading the file with pandas takes 10 seconds; with Modin 2.5 seconds—so it's 5x faster using Modin. |

| [03:07.81] |

The second performance demo uses applymap to perform a Lambda function on the elements of the array. With pandas this takes 24 seconds; with Modin, this takes .04 seconds—a speedup of 60x. The last example concantenates the same data frame and the same array four times using pandas and Modin. This time, Modin is 50x faster. |
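
The three timed steps in the demo (read a file, apply an element-wise lambda, concatenate four copies) can be approximated with a small harness. This is a minimal sketch, not the code used in the video: the CSV file format, the array shape, the `x + 1` lambda, and the helper names `timed` and `run_benchmark` are all assumptions made for illustration, and the default sizes are far smaller than the 1.5 GB array described above.

```python
import os
import tempfile
import time

import numpy as np
import pandas  # baseline library; pass modin.pandas to run_benchmark to compare


def timed(fn, *args, **kwargs):
    """Call fn and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, time.perf_counter() - start


def run_benchmark(pd=pandas, rows=10_000, cols=8, seed=0):
    """Time read_csv, an element-wise lambda, and a 4-way concat using `pd`.

    `pd` is any module exposing the pandas API (pandas or modin.pandas).
    Sizes are illustrative assumptions, not the demo's 1.5 GB dataset.
    """
    rng = np.random.default_rng(seed)
    array = rng.integers(0, 100, size=(rows, cols))
    timings = {}
    with tempfile.TemporaryDirectory() as tmp:
        # Assumption: the demo's "file" is treated as a headerless CSV here.
        path = os.path.join(tmp, "synthetic.csv")
        np.savetxt(path, array, delimiter=",", fmt="%d")

        df, timings["read_csv"] = timed(pd.read_csv, path, header=None)

        # DataFrame.applymap was renamed to DataFrame.map in pandas 2.1;
        # use whichever name this version provides.
        elementwise = df.map if hasattr(df, "map") else df.applymap
        mapped, timings["applymap"] = timed(elementwise, lambda x: x + 1)

        # Concatenate the same DataFrame four times, as in the last example.
        combined, timings["concat"] = timed(pd.concat, [df] * 4)

        shapes = (df.shape, mapped.shape, combined.shape)
    return timings, shapes
```

Calling `run_benchmark(pandas)` and then `run_benchmark(modin.pandas)` (after `import modin.pandas`) gives two timing dictionaries to compare. Modin may execute some operations asynchronously depending on the engine, so wall-clock numbers from a simple harness like this are only indicative, and speedups on small inputs will not resemble those reported for the 1.5 GB case.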

Featured Software

Intel Distribution of Modin

Scale your pandas workflows to distributed DataFrame processing by changing only a single line of code.
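
The single changed line is the import: `import pandas as pd` becomes `import modin.pandas as pd`. The sketch below wraps that choice in a hypothetical helper, `get_pandas_api`, so the same code still runs when Modin is not installed. The helper and `column_means` are illustrative names, not part of Modin or pandas.

```python
def get_pandas_api(use_modin=True):
    """Return the module to bind to the name `pd`.

    With Modin, the only change to existing code is the import line:
        import pandas as pd   ->   import modin.pandas as pd
    This helper (an illustrative assumption) falls back to pandas
    when Modin is unavailable or not requested.
    """
    if use_modin:
        try:
            import modin.pandas as pd
            return pd
        except ImportError:
            pass  # Modin is not installed; fall back to plain pandas
    import pandas as pd
    return pd


def column_means(pd, data):
    """Ordinary pandas-style code; it does not depend on which module `pd` is."""
    df = pd.DataFrame(data)
    return {name: float(value) for name, value in df.mean().items()}
```

For example, `column_means(get_pandas_api(), {"a": [1, 2, 3]})` runs the same DataFrame code on Modin when it is installed and on pandas otherwise.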

You May Also Like

Learn More about AI Software Optimized by Intel

Intel Distribution of Modin Documentation

Article: Data Science at Scale with Intel Distribution of Modin

Visit Intel Distribution of Modin on GitHub*

Product and Performance Information

Performance varies by use, configuration and other factors. Learn more at www.Intel.com/PerformanceIndex.