Runtime Instructions

The following instructions explain how to set up the node, install the model infrastructure, and run the model.

Accessing the Intel Gaudi Node

To access an Intel Gaudi node in Intel Tiber AI Cloud, open the Intel Tiber AI Cloud console, go to the hardware instances, select the Intel Gaudi 2 platform for deep learning, and follow the steps to start and connect to the node.

The console provides an ssh command to log in to the node. It is advisable to add local port forwarding to this command so that you can open a Jupyter Notebook running on the node in your local browser. For example, add -L 8888:localhost:8888 to the command: ssh -L 8888:localhost:8888 ..
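As a sketch, the port forwarding can be wrapped in a small shell function so that every login to the node also tunnels the notebook port. The function name gaudi_ssh and the JUPYTER_PORT and DRY_RUN variables are hypothetical, introduced only for this example; the remaining arguments are whatever the console's ssh command contains.

```shell
#!/usr/bin/env bash
# Sketch: wrap the ssh command copied from the console so it always forwards the Jupyter port.
# gaudi_ssh, JUPYTER_PORT and DRY_RUN are hypothetical names introduced for this example.

gaudi_ssh() {
  local port="${JUPYTER_PORT:-8888}"
  # -L local_port:localhost:remote_port tunnels local traffic to the same port on the node.
  local cmd=(ssh -L "${port}:localhost:${port}" "$@")
  if [[ "${DRY_RUN:-0}" == "1" ]]; then
    # Print the command instead of connecting.
    printf '%s\n' "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

For example, DRY_RUN=1 gaudi_ssh user@host prints ssh -L 8888:localhost:8888 user@host without connecting; user@host stands in for the address given by the console.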

Docker* Setup

Now that you have access to the node, run the following docker run command. It downloads the Intel Gaudi PyTorch Docker image (Intel Gaudi software 1.18.0 with PyTorch 2.4.0) if it is not already present and starts a container from it in the background:

docker run -itd --name Gaudi_Docker --runtime=habana -e HABANA_VISIBLE_DEVICES=all -e OMPI_MCA_btl_vader_single_copy_mechanism=none --cap-add=sys_nice --net=host --ipc=host vault.habana.ai/gaudi-docker/1.18.0/ubuntu22.04/habanalabs/pytorch-installer-2.4.0:latest
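To make the flags easier to read, the same command can be written with its arguments in a bash array, one commented flag per line. This is a sketch: the comments are our own short reading of each flag rather than official documentation, and GAUDI_VERSION, PT_VERSION, GAUDI_DOCKER_ARGS and run_gaudi_container are hypothetical names that simply rebuild the same image tag and arguments.

```shell
#!/usr/bin/env bash
# Sketch: the docker run command above, with one commented flag per line.
# GAUDI_VERSION and PT_VERSION are assumptions that rebuild the same image tag.
GAUDI_VERSION="${GAUDI_VERSION:-1.18.0}"
PT_VERSION="${PT_VERSION:-2.4.0}"
IMAGE="vault.habana.ai/gaudi-docker/${GAUDI_VERSION}/ubuntu22.04/habanalabs/pytorch-installer-${PT_VERSION}:latest"

GAUDI_DOCKER_ARGS=(
  -itd                              # interactive TTY, detached: the container keeps running in the background
  --name Gaudi_Docker               # the name used later by "docker exec"
  --runtime=habana                  # Habana container runtime, which exposes the Gaudi devices
  -e HABANA_VISIBLE_DEVICES=all     # make every Gaudi card on the node visible in the container
  -e OMPI_MCA_btl_vader_single_copy_mechanism=none  # turn off Open MPI's shared-memory single-copy path, which can fail in containers
  --cap-add=sys_nice                # allow changes to process priority and CPU affinity
  --net=host                        # share the node's network stack (no port mapping needed)
  --ipc=host                        # share the node's IPC namespace, including shared memory
)

run_gaudi_container() {
  docker run "${GAUDI_DOCKER_ARGS[@]}" "$IMAGE"
}
```

With --net=host, a Jupyter server started inside the container on port 8888 listens on the node's own port 8888, which is the port the ssh forwarding described earlier tunnels to.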

Then enter the running container and open a shell inside it with the following command:

docker exec -it Gaudi_Docker bash
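Because the container was started in detached mode, it keeps running after you exit this shell, but it is stopped if the node restarts. The following sketch starts the container again when needed before opening a shell in it; the function name enter_gaudi_container and the CONTAINER variable are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: re-enter the Gaudi container, starting it first if it has stopped
# (for example after the node restarts). CONTAINER defaults to the name used above.
CONTAINER="${CONTAINER:-Gaudi_Docker}"

enter_gaudi_container() {
  local running
  # Prints "true" or "false"; the command fails if the container does not exist.
  if ! running="$(docker inspect -f '{{.State.Running}}' "$CONTAINER" 2>/dev/null)"; then
    echo "Container $CONTAINER not found; create it with the docker run command first." >&2
    return 1
  fi
  if [[ "$running" != "true" ]]; then
    # docker start restarts a stopped container with the options it was created with.
    docker start "$CONTAINER" >/dev/null
  fi
  # Open an interactive shell inside the running container.
  docker exec -it "$CONTAINER" bash
}
```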

Model Setup

Now that we’re inside the Docker container, we can install the remaining libraries and model repositories:

Start in the home directory and install Habana's fork of the DeepSpeed* library, pinned to 1.18.0 to match the Docker image release. DeepSpeed is used to reduce memory consumption on Intel Gaudi when running large language models.

cd ~

pip install git+https://github.com/HabanaAI/DeepSpeed.git@1.18.0
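Before moving on, it can help to confirm that the package actually imports inside the container. A minimal sketch, assuming python3 is the interpreter pip installed into (set PYTHON otherwise); check_module is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: confirm that a Python package imports inside the container and report its version.
# PYTHON defaults to python3 (an assumption); set it to the interpreter pip installed into.
PYTHON="${PYTHON:-python3}"

check_module() {
  local module="$1"
  local version
  # Import the module and print its __version__ attribute, or "unknown" if it has none.
  if ! version="$("$PYTHON" -c "import ${module} as m; print(getattr(m, '__version__', 'unknown'))" 2>/dev/null)"; then
    echo "${module} failed to import" >&2
    return 1
  fi
  # Keep only the last line, in case the import logs messages to stdout.
  version=${version##*$'\n'}
  echo "${module} ${version}"
}
```

Run check_module deepspeed after the install; the same helper can be pointed at packages installed later, for example check_module optimum.habana, which prints "unknown" if the package defines no __version__.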

Now install the Hugging Face Optimum for Intel Gaudi library (optimum-habana) and clone its GitHub examples at the same version. We’re selecting the latest validated release of optimum-habana, 1.14.1:

pip install optimum-habana==1.14.1

git clone -b v1.14.1 https://github.com/huggingface/optimum-habana
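Because the examples are cloned separately from the pip package, it is worth checking that both come from the same release. A minimal sketch, assuming the repository was cloned into ~/optimum-habana as above (set REPO_DIR otherwise); check_optimum_habana_version is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: check that the installed optimum-habana package and the cloned examples
# come from the same release. REPO_DIR is an assumption (the clone made above).
REPO_DIR="${REPO_DIR:-$HOME/optimum-habana}"

check_optimum_habana_version() {
  local installed tag
  # "pip show" prints metadata lines such as "Version: 1.14.1"; awk extracts the value.
  installed="$(pip show optimum-habana 2>/dev/null | awk '/^Version:/ {print $2}')"
  # After "git clone -b <tag>", HEAD sits exactly on that tag, so this prints e.g. "v1.14.1".
  tag="$(git -C "$REPO_DIR" describe --tags --exact-match 2>/dev/null)"
  if [[ -z "$installed" || -z "$tag" ]]; then
    echo "Could not read the installed version or the repository tag." >&2
    return 1
  fi
  # Tags in this repository carry a "v" prefix, as in the clone command above.
  if [[ "v${installed}" == "$tag" ]]; then
    echo "OK: optimum-habana ${installed} matches ${tag}"
  else
    echo "Mismatch: optimum-habana ${installed} installed, examples at ${tag}" >&2
    return 1
  fi
}
```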

Then, change to the text-generation example directory and install the remaining requirements for running the model:

cd ~/optimum-habana/examples/text-generation

pip install -r requirements.txt

pip install -r requirements_lm_eval.txt

How to Access and Use the Llama 2 Model

Use of the pre-trained model is subject to compliance with third-party licenses, including the “Llama 2 Community License Agreement” (LLAMAV2). For guidance on the intended use of the Llama 2 model, what will be considered misuse and out-of-scope uses, who the intended users are, and additional terms, please review and read the instructions. Users bear sole liability and responsibility to follow and comply with any third-party licenses, and Habana Labs disclaims and will bear no liability with respect to users’ use or compliance with third-party licenses.

To run gated models such as Llama-2-70b-hf, you need to:

- Have a Hugging Face account and agree to the terms of use of the model in its model card on the Hugging Face Hub

- Create a read token and request access to the Llama 2 model from meta-llama

- Log in to your account using the Hugging Face CLI:

huggingface-cli login --token <your_hugging_face_token_here>

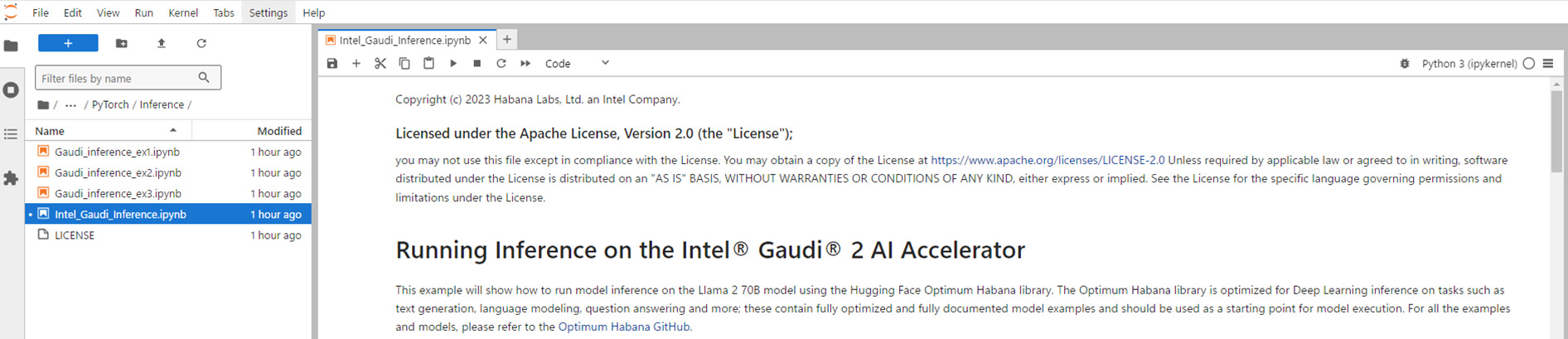
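Typing the token directly on the command line leaves it in your shell history. As an alternative sketch, the hypothetical hf_login function below reads the token from the HF_TOKEN environment variable or prompts for it without echoing, logs in, and then runs huggingface-cli whoami to show which account is active. The token is still passed to the CLI as an argument, and whoami does not confirm that access to the gated Llama 2 repository has been granted.

```shell
#!/usr/bin/env bash
# Sketch: log in to Hugging Face without typing the token on the command line,
# then show which account is active. hf_login is a hypothetical helper name.
hf_login() {
  local token="${HF_TOKEN:-}"
  if [[ -z "$token" ]]; then
    # -s: do not echo the typed token; -r: keep backslashes literal.
    read -rsp "Hugging Face token: " token
    echo
  fi
  if [[ -z "$token" ]]; then
    echo "No token provided." >&2
    return 1
  fi
  huggingface-cli login --token "$token" || return 1
  # whoami prints the account that the stored token belongs to.
  huggingface-cli whoami
}
```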

If you want to run inference from the associated Jupyter Notebook, see the running and fine-tuning addendum section for how to set up the notebook; you can then run these steps directly in the Jupyter interface.