If your model triggers graph recompilations, the Handling Dynamic Shapes guide describes possible ways to eliminate the resulting latency.

The Habana bridge compiles an Intel® Gaudi® software graph and launches the resulting recipe asynchronously. It caches recipes to avoid recompiling the same graph, both at the eager op level and at the JIT graph level. During training, graph compilation is therefore required only for the initial iteration; afterward, the same compiled recipe is rerun every iteration (with new inputs) unless the operations being run change.
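The following toy Python model illustrates when a cached recipe can be reused and when it cannot. It is a sketch under the assumption that a recipe is looked up by the graph plus the shapes of its inputs; `RecipeCache` and `fake_compile` are hypothetical names, and this is not how the Habana bridge is actually implemented.

```python
from typing import Callable, Dict, Hashable, Tuple

Shapes = Tuple[Tuple[int, ...], ...]


class RecipeCache:
    """Hypothetical cache mapping (graph id, input shapes) to a compiled recipe.

    Toy model only: the real Habana bridge cache is not implemented like this.
    """

    def __init__(self, compile_fn: Callable[[str, Shapes], object]) -> None:
        self._compile_fn = compile_fn
        self._recipes: Dict[Hashable, object] = {}
        self.compilations = 0

    def get(self, graph_id: str, input_shapes: Shapes) -> object:
        key = (graph_id, input_shapes)
        if key not in self._recipes:
            # Cache miss: compile once, then reuse the recipe on later calls.
            self._recipes[key] = self._compile_fn(graph_id, input_shapes)
            self.compilations += 1
        return self._recipes[key]


def fake_compile(graph_id: str, input_shapes: Shapes) -> str:
    # Stand-in for an expensive graph compilation.
    return f"recipe<{graph_id}, {input_shapes}>"


cache = RecipeCache(fake_compile)
# Ten iterations with identical input shapes: only the first one compiles.
for _ in range(10):
    cache.get("train_step", ((128, 1, 28, 28),))
print(cache.compilations)  # 1
```

In this model, any change in input shape produces a cache miss, which is the situation the next paragraph describes.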

In some cases, Intel Gaudi software must recompile the graph. This is mostly due to dynamically shaped inputs, such as varying sentence lengths in language models or differing image resolutions in image models. Frequent graph recompilations can increase training time.
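As a concrete example of dynamic shapes, the sketch below groups made-up sentence lengths into batches that are padded only to their own longest sentence. The data and the `batch_shapes` helper are hypothetical. If recipes are cached per input shape (an assumption for this illustration), every distinct shape it prints would need its own compilation.

```python
from typing import List, Tuple


def batch_shapes(lengths: List[int], batch_size: int) -> List[Tuple[int, int]]:
    """Return the (batch, seq_len) shape of each batch when a hypothetical
    loader pads every batch only to the longest sentence it contains."""
    shapes = []
    for start in range(0, len(lengths), batch_size):
        chunk = lengths[start:start + batch_size]
        shapes.append((len(chunk), max(chunk)))
    return shapes


# Made-up sentence lengths (in tokens) for ten samples.
lengths = [12, 7, 30, 30, 5, 18, 18, 9, 25, 11]
shapes = batch_shapes(lengths, batch_size=2)
print(shapes)            # [(2, 12), (2, 30), (2, 18), (2, 18), (2, 25)]
print(len(set(shapes)))  # 4
```

Five batches yield four distinct shapes here; with real datasets the number of distinct shapes, and therefore of potential recompilations, can be much larger.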

Following is the step-by-step process for detecting frequent graph recompilations on the Intel Gaudi software platform.

Start Docker*

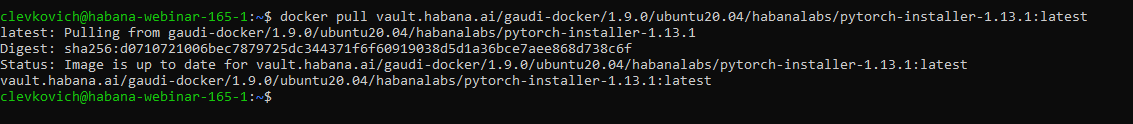

Pull the PyTorch* container. The commands below use release 1.9.0; make sure to use the latest available release where possible.

docker pull vault.habana.ai/gaudi-docker/1.9.0/ubuntu20.04/habanalabs/pytorch-installer-1.13.1:latest

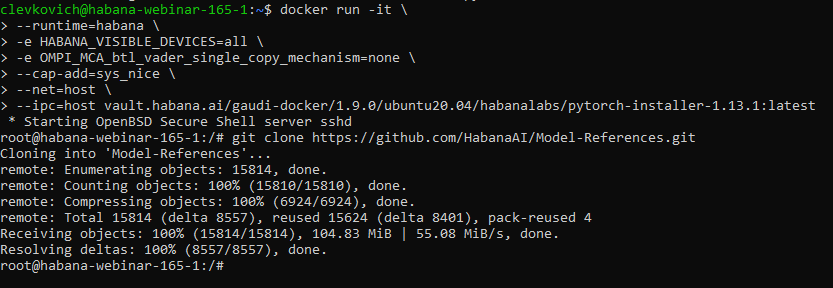

docker run -it \
--runtime=habana \
-e HABANA_VISIBLE_DEVICES=all \
-e OMPI_MCA_btl_vader_single_copy_mechanism=none \
--cap-add=sys_nice \
--net=host \
--ipc=host vault.habana.ai/gaudi-docker/1.9.0/ubuntu20.04/habanalabs/pytorch-installer-1.13.1:latest

Prepare the Model

This tutorial uses an MNIST example model.

Clone the Model References repository inside the container that you just started:

git clone https://github.com/HabanaAI/Model-References.git

Move to the subdirectory containing the hello_world example:

cd Model-References/PyTorch/examples/computer_vision/hello_world/

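Update PYTHONPATH to include the Model-References repository (use the full path to your clone, because the current directory is now inside the repository), and set PYTHON to the Python 3.8 executable: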

export PYTHONPATH=$PYTHONPATH:Model-References

export PYTHON=/usr/bin/python3.8

Training on a Single Intel® Gaudi® Processor

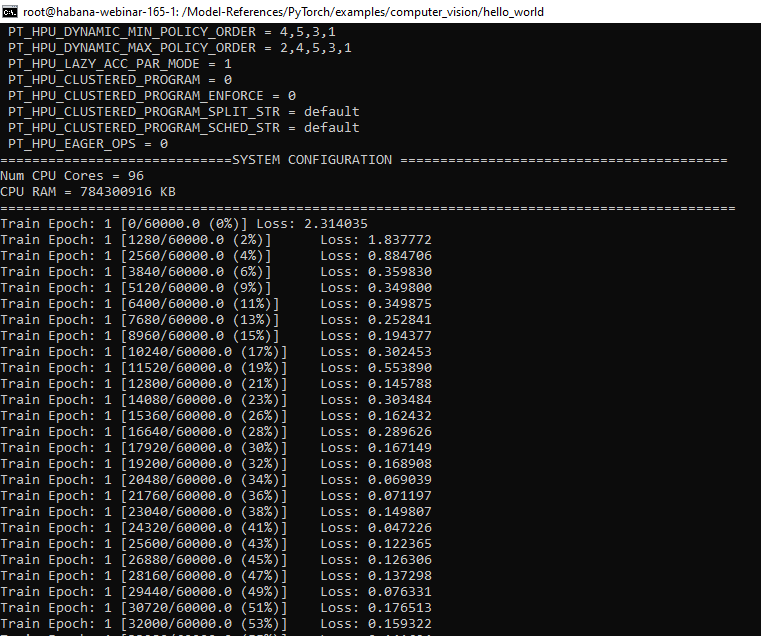

Run training on a single Intel® Gaudi® processor in BF16 with Habana mixed precision enabled:

$PYTHON mnist.py --batch-size=128 --epochs=1 --lr=1.0 \
--gamma=0.7 --hpu --hmp \
--hmp-bf16=ops_bf16_mnist.txt \
--hmp-fp32=ops_fp32_mnist.txt \
--use_lazy_mode

Detect Recompilations

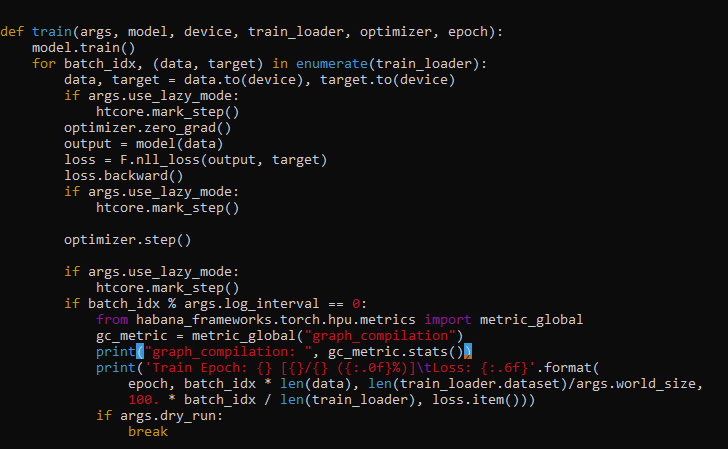

In this step, you add artificial dynamicity to the model by changing the batch size at every iteration, and use the metric APIs to detect the resulting frequent recompilations.

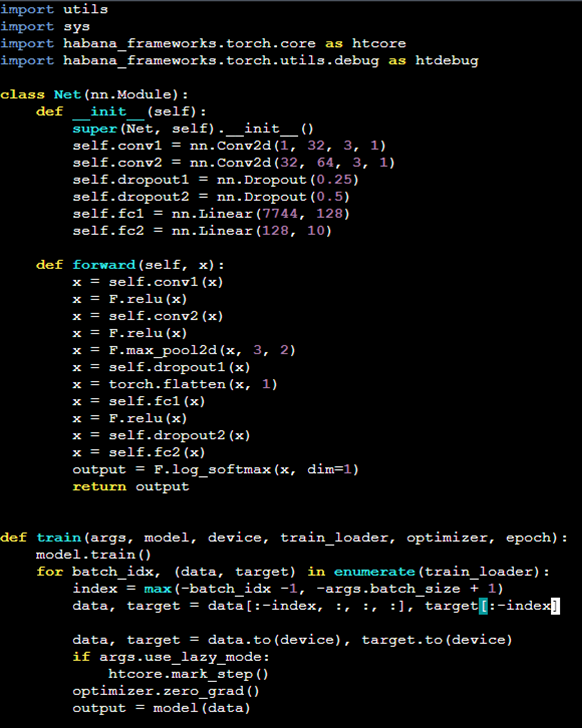

Edit mnist.py and add the following lines at line 61:

from habana_frameworks.torch.hpu.metrics import metric_global
# Read the global graph-compilation metric and print its statistics
gc_metric = metric_global("graph_compilation")
print("graph_compilation: ", gc_metric.stats())
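To see how often compilations happen rather than only their running total, you could sample the metric once per training step and look at the difference. The sketch below assumes that `gc_metric.stats()` returns `[name, value]` pairs that include a `TotalNumber` entry; that format, the `RecompilationMonitor` class, and the "more than half of recent steps" rule are assumptions for illustration, not part of the Intel Gaudi API. A fake stats function stands in for the real metric so the sketch runs without an HPU.

```python
from typing import Callable, Dict, List, Sequence


def stats_to_dict(stats: Sequence[Sequence]) -> Dict[str, float]:
    """Convert [name, value] pairs into a dict.

    Assumption: stats() returns pairs such as ['TotalNumber', 2].
    """
    return {name: value for name, value in stats}


class RecompilationMonitor:
    """Hypothetical helper that records how many new graph compilations
    happened between consecutive calls to step()."""

    def __init__(self, read_stats: Callable[[], Sequence[Sequence]]) -> None:
        self._read_stats = read_stats
        self._last_total = stats_to_dict(read_stats()).get("TotalNumber", 0)
        self.history: List[int] = []

    def step(self) -> int:
        total = stats_to_dict(self._read_stats()).get("TotalNumber", 0)
        new_compilations = total - self._last_total
        self._last_total = total
        self.history.append(new_compilations)
        return new_compilations

    def is_frequent(self, window: int = 10) -> bool:
        """True if more than half of the last `window` steps compiled something."""
        recent = self.history[-window:]
        return bool(recent) and sum(n > 0 for n in recent) > len(recent) / 2


# Stand-in for gc_metric.stats() so the sketch runs without an HPU.
_totals = iter([1, 2, 2, 3, 4])


def fake_stats():
    return [["TotalNumber", next(_totals)], ["TotalTime", 0], ["AvgTime", 0.0]]


monitor = RecompilationMonitor(fake_stats)
print([monitor.step() for _ in range(4)])  # [1, 0, 1, 1]
print(monitor.is_frequent(window=4))       # True
```

In mnist.py you would pass `gc_metric.stats` instead of `fake_stats` and call `monitor.step()` once per batch; after the first few iterations of a static-shape model, `step()` would be expected to return 0.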

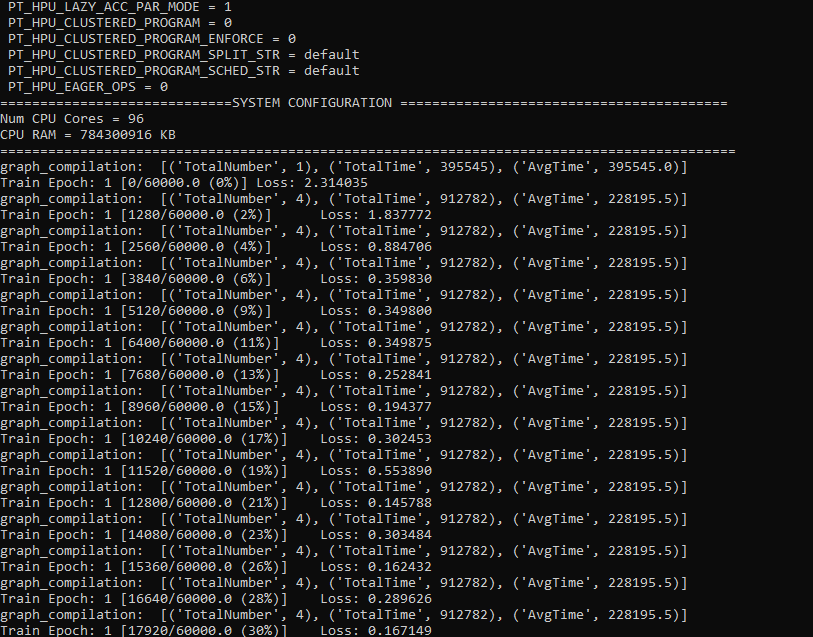

Now run the training code again. The printed statistics show that a few graph compilations occur.

$PYTHON mnist.py --batch-size=128 --epochs=1 --lr=1.0 \
--gamma=0.7 --hpu --hmp \
--hmp-bf16=ops_bf16_mnist.txt \
--hmp-fp32=ops_fp32_mnist.txt \
--use_lazy_mode

Next, add artificial dynamicity by inserting the following code at line 46:

# Keep only batch_idx + 1 samples (at most batch_size - 1), so the batch size changes every step
index = max(-batch_idx - 1, -args.batch_size + 1)
data, target = data[:-index, :, :, :], target[:-index]
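To see what these two lines do to the batch size, the following pure-Python sketch replays the slicing arithmetic without a model or an HPU. It assumes the standard 60,000-image MNIST training set and a data loader that keeps the final partial batch; `NUM_TRAIN` and `sliced_batch_size` are illustrative names, not part of mnist.py.

```python
import math

NUM_TRAIN = 60_000  # assumption: size of the standard MNIST training split
BATCH_SIZE = 128    # matches --batch-size=128


def sliced_batch_size(batch_idx: int, batch_size: int, loaded: int) -> int:
    """Number of samples left after the slicing added at line 46 (simulated)."""
    index = max(-batch_idx - 1, -batch_size + 1)
    # index is negative, so -index is a positive slice stop, as in data[:-index]
    return len(range(loaded)[:-index])


num_batches = math.ceil(NUM_TRAIN / BATCH_SIZE)
sizes = []
for batch_idx in range(num_batches):
    # Every batch is full except possibly the last one.
    loaded = min(BATCH_SIZE, NUM_TRAIN - batch_idx * BATCH_SIZE)
    sizes.append(sliced_batch_size(batch_idx, BATCH_SIZE, loaded))

print(sizes[:5], sizes[125:128], sizes[-1])  # [1, 2, 3, 4, 5] [126, 127, 127] 96
print("distinct batch sizes:", len(set(sizes)))  # distinct batch sizes: 127
```

Under these assumptions, each of the first 127 steps sees a new batch size before it settles at 127, so new input shapes appear mainly early in the epoch. The metric counts graph compilations rather than shapes, so the number it reports may differ from this count.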

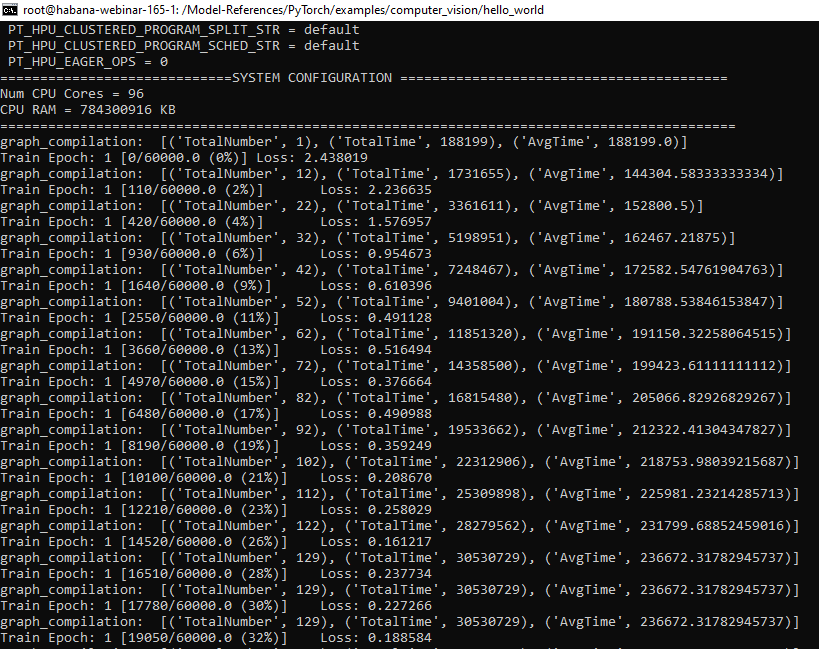

Run the training code again. This time, graph compilation occurs much more frequently.

$PYTHON mnist.py --batch-size=128 --epochs=1 --lr=1.0 \
--gamma=0.7 --hpu --hmp \
--hmp-bf16=ops_bf16_mnist.txt \
--hmp-fp32=ops_fp32_mnist.txt \
--use_lazy_mode

As long as the batch size keeps changing, new recompilations keep occurring, and training may be noticeably slower.

What’s Next?

Feel free to use the same technique to check the frequency of graph recompilations in your code. If needed, look for possible resolutions using the Handling Dynamic Shapes guide.