This article provides an overview of the application interface, philosophy, purpose, and vision of Level Zero. We look at the basic architecture of Level Zero and the low-level control it provides over compute unit resources. Level Zero can be used with language extensions like OpenMP* and SYCL*, and we briefly highlight how its interaction with the SYCL C++ abstraction layer is exposed to the application developer.

Level Zero is designed as a low-level API to configure and manage access to any number of offload devices. In doing so, it also provides the abstraction layer that enables C++ standard-compliant heterogeneous computation without interfering with the program flow. This makes code portable across different runtime environments. Being aware of the Level Zero API backend allows you to go beyond the abstraction of the SYCL or OpenMP language extension, thus increasing your level of control.

The Level Zero interface is part of the oneAPI Specification. It complements the API-based and direct programming models of oneAPI with bare-metal access to CPUs, GPUs, and accelerators. Intel’s reference implementation targeting Intel® GPUs as part of the Intel® oneAPI Base Toolkit and its usage with the Intel® oneAPI DPC++/C++ Compiler are also well documented. After reading this article, you should have the resources at your fingertips to dive deeper and get started using a Level Zero runtime or contemplating your own runtime.

Unlock Heterogeneous Computing

Intel’s first implementation of Level Zero targets Intel GPUs. However, the vision and potential of Level Zero go far beyond that. It can provide a tailored abstraction for specific device requirements, and it can be adapted to support broader sets of language features such as function pointers, memory, and I/O. The API is designed to work across a variety of compute devices, including CPUs, GPUs, Field Programmable Gate Arrays (FPGAs), and other accelerator architectures.

Level Zero can coexist with other low-level APIs such as OpenCL* and Vulkan*. However, it is intended to evolve independently to permit the high-level oneAPI and SYCL developer experience to stay hardware agnostic and as architecture independent and flexible as possible. It also provides explicit controls that higher-level runtime APIs and libraries taking advantage of SYCL may desire. Level Zero is fully open-source, with its specification, source repository, and Intel GPU compute runtime implementation easily accessible on GitHub.

In short, Level Zero unlocks the vision of heterogeneous compute and offers the flexible open backend to make true choice of offload compute a reality. It provides the ability to explicitly control system-level interfaces through capabilities like:

- Device discovery

- Memory allocation

- Peer-to-peer communication

- Interprocess sharing

- Kernel submission

- Asynchronous execution and scheduling

- Synchronization primitives

- Metrics reporting

- System management

Let’s start with the key concepts of Level Zero.

Level Zero Basics

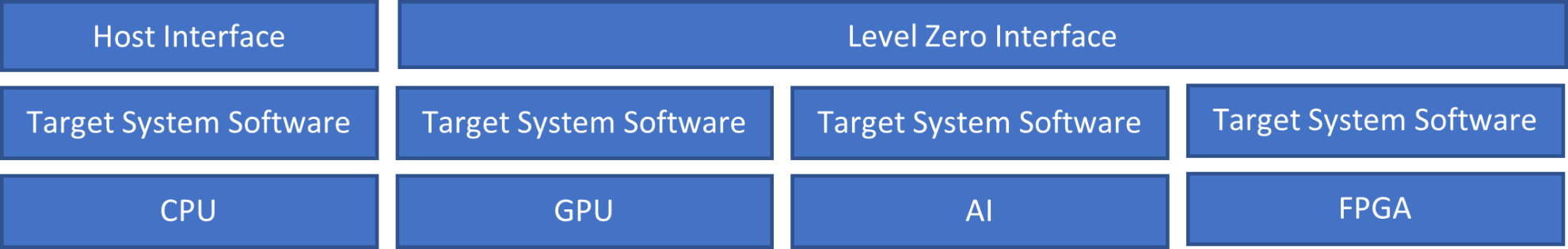

Level Zero sits below the application layer. It can be adopted as an abstraction interface between a C++ application and target device properties (Figure 1). The targets can include CPUs as well as other compute devices. This lets the developer interact with shared device resources and dispatch workloads to a specific device supported by a Level Zero driver. The driver adds each supported device to the list of available devices, and any SYCL queue can be mapped to that device and submit work to it. If we do not need to access specific device properties, or resources shared between multiple Level Zero devices, those devices behave exactly like any other device using a different SYCL backend API.

Figure 1. Level Zero interface in the context of the oneAPI backend architecture.

The real strength of Level Zero lies in its low-level control and support for device-specific memory sharing or synchronization context objects. This not only adds more transparency for a device, but it also gives the Level Zero API additional configurability for heterogeneous offload target devices.

The sequential flow of Level Zero device detection and selection is as follows.

Level Zero Loader

Accessing an offload device or accelerator starts with the Level Zero Loader. It discovers Level Zero drivers for devices in the system. The loader project also contains the Level Zero headers and libraries that allow us to build and interact with Level Zero implementations. Driver initialization and discovery are illustrated in the following code example:

```cpp
#include <level_zero/ze_api.h>
#include <vector>

// The statements below belong inside a function body.

// Initialize the driver
zeInit(0);

// Discover all the driver instances (first call returns the count)
uint32_t driverCount = 0;
zeDriverGet(&driverCount, nullptr);
std::vector<ze_driver_handle_t> allDrivers(driverCount);
zeDriverGet(&driverCount, allDrivers.data());

// Find a driver instance with a GPU device
ze_driver_handle_t hDriver = nullptr;
ze_device_handle_t hDevice = nullptr;
for (uint32_t i = 0; i < driverCount; ++i) {
    uint32_t deviceCount = 0;
    zeDeviceGet(allDrivers[i], &deviceCount, nullptr);
    std::vector<ze_device_handle_t> allDevices(deviceCount);
    zeDeviceGet(allDrivers[i], &deviceCount, allDevices.data());

    for (uint32_t d = 0; d < deviceCount; ++d) {
        ze_device_properties_t device_properties {};
        device_properties.stype = ZE_STRUCTURE_TYPE_DEVICE_PROPERTIES;
        zeDeviceGetProperties(allDevices[d], &device_properties);

        if (ZE_DEVICE_TYPE_GPU == device_properties.type) {
            hDriver = allDrivers[i];
            hDevice = allDevices[d];
            break;
        }
    }

    if (nullptr != hDriver) {
        break;
    }
}

if (nullptr == hDevice)
    return; // no GPU devices found
```
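The discovery code calls `zeDriverGet` and `zeDeviceGet` twice each: once to learn how many handles exist, and once more to fill a buffer of that size. The sketch below isolates that count-then-fill idiom against a stand-in function so that it compiles without the Level Zero loader. `Handle`, `mock_device_get`, `enumerate_handles`, and the fixed device table are hypothetical names introduced for illustration only; they are not part of Level Zero.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for an opaque handle type such as ze_device_handle_t.
using Handle = int;

// Hypothetical stand-in for a count-then-fill query such as zeDeviceGet.
// If *count is zero (or out is null), *count receives the number of handles.
// Otherwise at most *count handles are written, and *count is lowered to the
// number actually available if the caller asked for too many.
inline void mock_device_get(uint32_t* count, Handle* out) {
    static const Handle table[] = {101, 102, 103};  // pretend devices
    const uint32_t available = 3;
    assert(count != nullptr);
    if (*count == 0 || out == nullptr) {
        *count = available;
        return;
    }
    if (*count > available) *count = available;
    for (uint32_t i = 0; i < *count; ++i) out[i] = table[i];
}

// Wraps both calls so that callers never size the buffer by hand.
inline std::vector<Handle> enumerate_handles() {
    uint32_t count = 0;
    mock_device_get(&count, nullptr);         // first call: query the count
    std::vector<Handle> handles(count);
    mock_device_get(&count, handles.data());  // second call: fill the buffer
    handles.resize(count);                    // in case fewer were returned
    return handles;
}
```

Wrapping the two calls in one helper keeps the buffer size and the reported count from drifting apart, which is the main hazard of writing the pattern out by hand at every call site.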

This is followed by the creation of a context object, which manages memory, command queues, modules, synchronization objects, and similar resources. Contexts become especially important when managing system resources that could be shared by multiple devices. A simple example that creates two contexts, each with its own host memory allocation, is given below:

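```cpp
// Describe and create two independent contexts on the same driver
ze_context_desc_t ctxtDesc = {ZE_STRUCTURE_TYPE_CONTEXT_DESC, nullptr, 0};
ze_context_handle_t hContextA = nullptr, hContextB = nullptr;
zeContextCreate(hDriver, &ctxtDesc, &hContextA);
zeContextCreate(hDriver, &ctxtDesc, &hContextB);

// Allocate host memory in each context (arguments: size in bytes, alignment)
ze_host_mem_alloc_desc_t desc = {ZE_STRUCTURE_TYPE_HOST_MEM_ALLOC_DESC, nullptr, 0};
void *ptrA = nullptr, *ptrB = nullptr;
zeMemAllocHost(hContextA, &desc, 80, 0, &ptrA);
zeMemAllocHost(hContextB, &desc, 88, 0, &ptrB);
```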

Scheduling Model

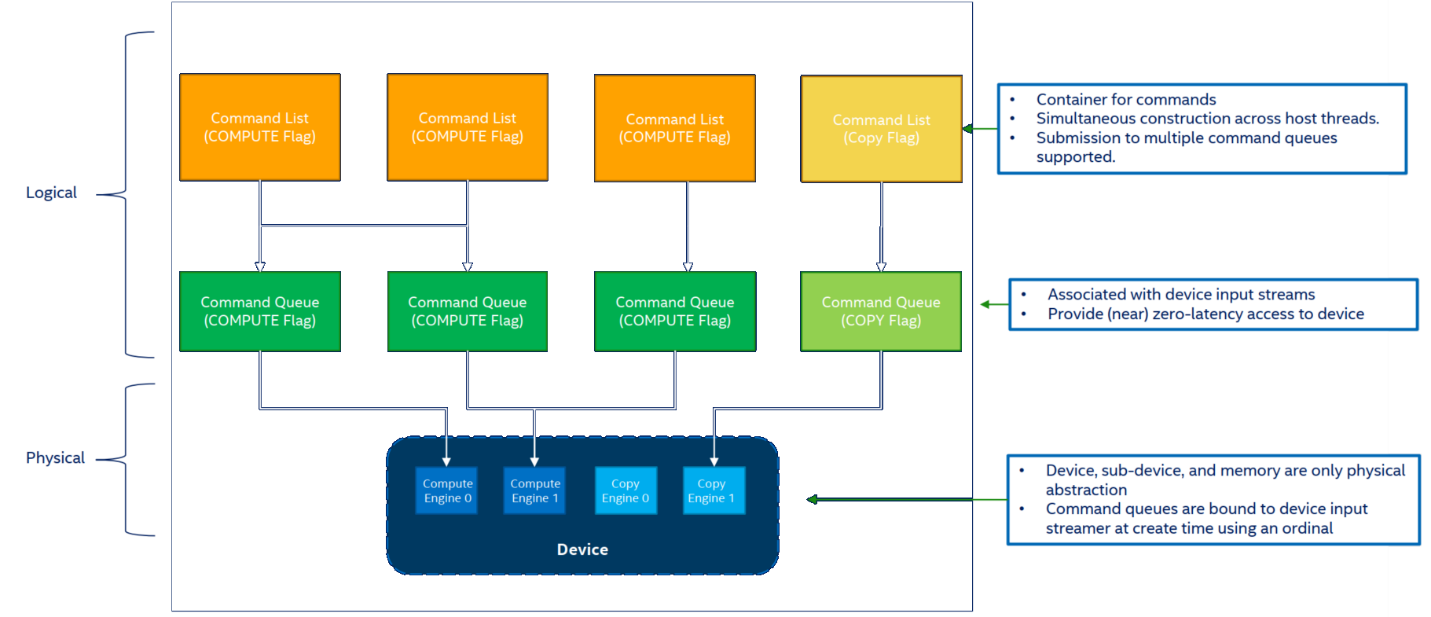

Any commands will be scheduled and dispatched to Level Zero devices as depicted in the scheduling diagram in Figure 2.

Figure 2. Level Zero scheduling model

Commands are appended to command lists that represent a sequence of commands to be executed on the offload compute unit or accelerator. A command list can be recycled by resetting the list, without needing to create it again. It can be reused by submitting the same sequence of commands several times, without needing to reappend commands.

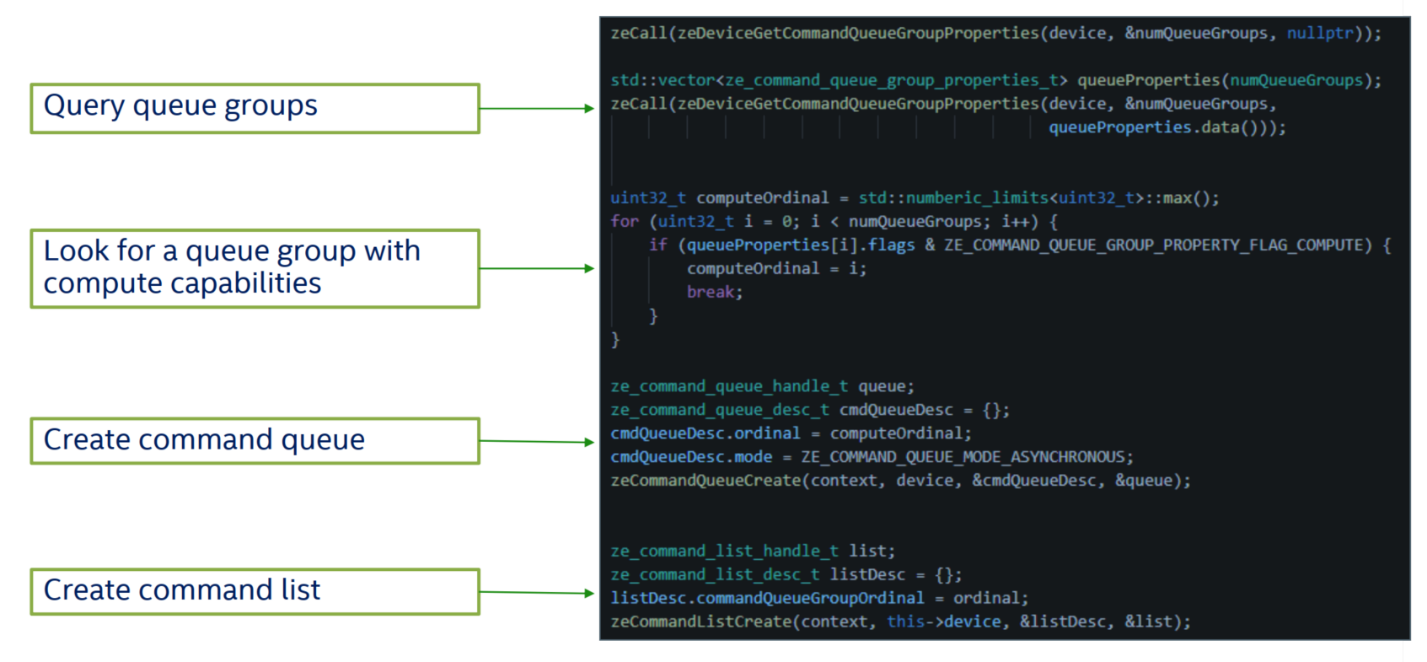

Command lists are then submitted to a command queue for execution. A queue is a logical object associated with a physical input stream in the device. It can be configured as synchronous or asynchronous, and queues can be organized in queue groups. This scheduling model translates into the source code flow shown in Figure 3.

Figure 3. Level Zero scheduling execution flow
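To make the lifecycle concrete (append, close, submit, optionally submit again, then reset), here is a small host-only model in plain C++. It is a sketch of the scheduling idea only and does not call Level Zero: `ToyCommandList` and `ToyQueue` are hypothetical classes, and the queue is assumed to be synchronous. In the real API the corresponding steps are the `zeCommandListAppend*` functions, `zeCommandListClose`, `zeCommandQueueExecuteCommandLists`, and `zeCommandListReset`.

```cpp
#include <cassert>
#include <functional>
#include <stdexcept>
#include <utility>
#include <vector>

// Hypothetical model of a command list: an ordered, replayable recording.
class ToyCommandList {
public:
    // Record a command; only allowed while the list is still open.
    void append(std::function<void()> cmd) {
        assert(cmd && "command must be callable");
        if (closed_) throw std::logic_error("append on a closed list");
        commands_.push_back(std::move(cmd));
    }
    // Finish recording so that the list can be submitted.
    void close() { closed_ = true; }
    // Recycle the object: drop the recording and reopen the list.
    void reset() { commands_.clear(); closed_ = false; }

    bool closed() const { return closed_; }
    const std::vector<std::function<void()>>& commands() const { return commands_; }

private:
    std::vector<std::function<void()>> commands_;
    bool closed_ = false;
};

// Hypothetical model of a synchronous command queue.
class ToyQueue {
public:
    // Run every command of a closed list in the order it was appended.
    void execute(const ToyCommandList& list) {
        if (!list.closed()) throw std::logic_error("list must be closed first");
        for (const auto& cmd : list.commands()) cmd();
    }
};
```

Because the recording survives execution, the same closed list can be passed to `execute` several times without appending anything again, while `reset` returns the object to an empty, open state for a new sequence.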

Immediate Command Lists

Command list handling can also be optimized to help manage latency. High priority tasks requiring guaranteed response times can be handled via low-latency immediate command lists. This is a special type of command list dedicated to very low-latency submission usage models.

The command list and its implicit command queue are created using a command queue descriptor. Commands appended to an immediate command list are submitted to the device for execution immediately, and an append call may execute synchronously, blocking until the command is complete. An immediate command list does not need to be closed or reset after completion. Calling close or reset on one is still permitted, however, and behaves as expected. The following pseudo-code demonstrates a basic sequence for creation and usage of immediate command lists:

```cpp
// Create an immediate command list
ze_command_queue_desc_t commandQueueDesc = {
    ZE_STRUCTURE_TYPE_COMMAND_QUEUE_DESC,
    nullptr,
    computeQueueGroupOrdinal,
    0, // index
    0, // flags
    ZE_COMMAND_QUEUE_MODE_DEFAULT,
    ZE_COMMAND_QUEUE_PRIORITY_NORMAL
};
ze_command_list_handle_t hCommandList;
zeCommandListCreateImmediate(hContext, hDevice, &commandQueueDesc, &hCommandList);

// Immediately submit a kernel to the device
zeCommandListAppendLaunchKernel(hCommandList, hKernel, &launchArgs, nullptr, 0, nullptr);
...
```

Imagine the wealth of closed-feedback-loop use cases where immediate action may be required upon determining an industrial system error condition. The concept of immediate command lists introduces GPU offload compute to use cases that require guaranteed response times.

Application Developer Benefits

Now that we’ve covered the key design principles of Level Zero, let’s have a look at how application developers can interact with it from their SYCL-based applications.

Device Selection

Any device with a Level Zero driver implementation can be initialized and used by the application developer. Intel’s Level Zero implementation is available on GitHub. It can be used as a reference for other devices that want to take advantage of oneAPI cross-architecture heterogeneous compute support.

To select a specific offload device, set the SYCL_DEVICE_FILTER environment variable, for example SYCL_DEVICE_FILTER=level_zero:gpu. The setting affects all the device query functions and device selectors. Newer releases of the DPC++ compiler deprecate this variable in favor of ONEAPI_DEVICE_SELECTOR. To check which devices are available for use with SYCL on a running system, use the sycl-ls command, e.g.:

```
$ sycl-ls
[opencl:acc:0] Intel(R) FPGA Emulation Platform for OpenCL(TM), Intel(R) FPGA Emulation Device 1.2 [2022.13.3.0.16_160000]
[opencl:cpu:1] Intel(R) OpenCL, 11th Gen Intel(R) Core(TM) i7-1185G7 @ 3.00GHz 3.0 [2022.13.3.0.16_160000]
[opencl:gpu:2] Intel(R) OpenCL HD Graphics, Intel(R) Iris(R) Xe Graphics 3.0 [31.0.101.3358]
[ext_oneapi_level_zero:gpu:0] Intel(R) Level-Zero, Intel(R) Iris(R) Xe Graphics 1.3 [1.3.23828]
[host:host:0] SYCL host platform, SYCL host device 1.2 [1.2]
```
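Each sycl-ls line starts with a bracketed tag of the form backend:device type:index. As an illustration of how such tags can be read and filtered, the sketch below parses the tag and keeps the entries that match a given backend and device type. It is a simplified helper written for this article, not the parser used by the SYCL runtime, and `DeviceTag`, `parse_tag`, and `select_devices` are hypothetical names.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>
#include <vector>

// One parsed "[backend:type:index]" tag from a sycl-ls output line.
struct DeviceTag {
    std::string backend;
    std::string type;
    int index;
};

// Parse the leading "[backend:type:index]" tag of a line.
// Returns false if the line does not start with a well-formed tag.
inline bool parse_tag(const std::string& line, DeviceTag& tag_out) {
    if (line.empty() || line[0] != '[') return false;
    const std::string::size_type end = line.find(']');
    if (end == std::string::npos) return false;
    const std::string tag = line.substr(1, end - 1);
    const std::string::size_type c1 = tag.find(':');
    if (c1 == std::string::npos) return false;
    const std::string::size_type c2 = tag.find(':', c1 + 1);
    if (c2 == std::string::npos) return false;
    try {
        tag_out.index = std::stoi(tag.substr(c2 + 1));
    } catch (const std::logic_error&) {
        return false;  // the index field was not a valid number
    }
    tag_out.backend = tag.substr(0, c1);
    tag_out.type = tag.substr(c1 + 1, c2 - c1 - 1);
    return true;
}

// Keep only the entries whose backend and device type both match.
inline std::vector<DeviceTag> select_devices(const std::vector<std::string>& lines,
                                             const std::string& backend,
                                             const std::string& type) {
    std::vector<DeviceTag> out;
    for (const std::string& line : lines) {
        DeviceTag tag;
        if (parse_tag(line, tag) && tag.backend == backend && tag.type == type)
            out.push_back(tag);
    }
    return out;
}
```

Applied to the listing above with the backend `ext_oneapi_level_zero` and the type `gpu`, the helper keeps a single entry with index 0, which is the Level Zero view of the Iris Xe GPU.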

We can think about device properties as follows:

- Driver objects represent a collection of physical devices in the system. More than one driver may be available. For example, one driver may support accelerators from one vendor, and another driver may support an accelerator from a different vendor.

- Context objects represent device or system resources. Their primary purpose is the creation and management of resources that may be used by multiple devices.

- Device objects represent a physical device in the system. A device handle is used during creation and management of resources that are specific to a device.

The device discovery API is used to enumerate the devices in the system. The zeDeviceGet() function queries the number of Level Zero devices supported by a driver and obtains the device objects, which are read-only global constructs. Every device has a 16-byte universally unique identifier (UUID) assigned to it.

If we want to take advantage of the additional capabilities of Level Zero, we first want to enable interoperability between SYCL-enabled C++ code and the Level Zero API. If we intend to directly interact with Level Zero device-specific context objects from within a C++ application, the following header files need to be included in our source code in the order shown:

```cpp
#include "level_zero/ze_api.h"
#include "sycl/backend/level_zero.hpp"
```

The Level Zero backend is added to the sycl::backend enumeration with:

```cpp
enum class backend {
    // ...
    ext_oneapi_level_zero,
    // ...
};
```

This way, you can use the sycl::get_native API from the SYCL namespace to request the Level Zero data structures underlying SYCL objects:

```cpp
template <backend BackendName, class SyclObjectT>
auto get_native(const SyclObjectT &Obj)
    -> backend_return_t<BackendName, SyclObjectT>;
```
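The declaration is compact, so it may help to see how a trait such as `backend_return_t` can pick the return type at compile time. The following is a self-contained model in a `toy` namespace, written so that it builds without the SYCL or Level Zero headers. Everything in that namespace (`ze_device`, `ze_device_handle`, `device`, and the trait itself) is a hypothetical stand-in, and the real SYCL implementation may be structured differently.

```cpp
#include <cassert>
#include <type_traits>

namespace toy {

enum class backend { opencl, ext_oneapi_level_zero };

// Hypothetical native handle type standing in for ze_device_handle_t.
struct ze_device { int id; };
using ze_device_handle = ze_device*;

// Hypothetical SYCL-like object that wraps a native handle.
class device {
public:
    explicit device(ze_device_handle h) : native_(h) {}
    ze_device_handle native() const { return native_; }
private:
    ze_device_handle native_;
};

// The primary template is left undefined, so an unsupported
// (backend, object) pair fails at compile time, not at run time.
template <backend B, class SyclObjectT> struct backend_return;

// Specialization: a device seen through the Level Zero backend.
template <> struct backend_return<backend::ext_oneapi_level_zero, device> {
    using type = ze_device_handle;
};

template <backend B, class SyclObjectT>
using backend_return_t = typename backend_return<B, SyclObjectT>::type;

// Same shape as the get_native declaration: the backend is a template
// argument, and the trait selects the matching native return type.
template <backend B, class SyclObjectT>
auto get_native(const SyclObjectT& obj) -> backend_return_t<B, SyclObjectT> {
    return obj.native();
}

}  // namespace toy
```

A call such as `toy::get_native<toy::backend::ext_oneapi_level_zero>(dev)` deduces the object type from its argument, so only the backend has to be spelled out, which mirrors how `sycl::get_native` is typically invoked.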

Please consult the Intel® oneAPI Level Zero Backend Specification for more details. A given SYCL queue will attach to an available device on the system:

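```cpp
try {
    vector<device> sub_devices = ...;
    for (auto &d : sub_devices) {
        // Each queue is in its own context, no data sharing across them.
        auto q = queue(d);
        q.submit([&](handler& cgh) {...});
    }
} catch (const sycl::exception &e) {
    // Report or handle errors raised while creating queues or submitting work.
}
```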

The device is then used and has an execution queue assigned to it like any other SYCL device. Where it all gets interesting is when we want to access device-specific resources, or resources that are shared between host and offload execution devices. Let’s take unified shared memory (USM) as an example for accessing device-specific resources. The outline below reflects how Intel implemented USM, but identical functionality could be implemented for other Level Zero libraries.

Unified Shared Memory

Memory is visible to the upper-level software stack as unified memory with a single virtual address space covering both CPU and GPU. Linear, unformatted memory allocations are represented as pointers in the host application. A pointer on the host has the same size as a pointer on the device. There are three ways to allocate memory using the SYCL namespace:

- sycl::malloc_device

  - Allocation can only be accessed by the specified device, but not by the host or other devices in the context.
  - The data always stays on the device and is the fastest available for kernel execution.
  - Explicit copy is needed for transferring data to the host or other devices in the context.

- sycl::malloc_host

  - Allocation can be accessed by the host and any other device in the context.
  - The data always stays on the host and is accessed via Peripheral Component Interconnect (PCI) from the devices.
  - No explicit copy is needed for synchronizing the data with the host or devices.

- sycl::malloc_shared

  - Allocation can only be accessed by the host and the specified device.
  - The data can migrate (operated by the Level Zero driver) between the host and the device for faster access.
  - No explicit copy is necessary for synchronizing between the host and the device, but it is needed for other devices in the context.
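The access rules in this list can be condensed into a small lookup, which is sometimes easier to scan than prose. The sketch below encodes only what the list states and calls no SYCL or Level Zero function. `UsmKind`, `Accessor`, `can_access_directly`, and `needs_explicit_copy` are hypothetical names chosen for this illustration.

```cpp
#include <cassert>

// Which allocation function produced the memory.
enum class UsmKind {
    Device,  // sycl::malloc_device
    Host,    // sycl::malloc_host
    Shared   // sycl::malloc_shared
};

// Who is trying to dereference the pointer.
enum class Accessor {
    Host,                  // code running on the host
    SpecifiedDevice,       // the device named at allocation time
    OtherDeviceInContext   // any other device in the same context
};

// True if the accessor can use the allocation without an explicit copy,
// according to the three rule sets listed above.
inline bool can_access_directly(UsmKind kind, Accessor who) {
    switch (kind) {
    case UsmKind::Device:
        return who == Accessor::SpecifiedDevice;       // device only
    case UsmKind::Host:
        return true;                                   // host and all devices
    case UsmKind::Shared:
        return who != Accessor::OtherDeviceInContext;  // host + one device
    }
    return false;
}

// The complementary question: must data be copied explicitly first?
inline bool needs_explicit_copy(UsmKind kind, Accessor who) {
    return !can_access_directly(kind, who);
}
```

Read this way, the trade-off is visible at a glance: device allocations are the most restrictive, host allocations are the most widely accessible, and shared allocations sit in between.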

The equivalent lower-level Level Zero calls invoked this way are zeMemAllocDevice, zeMemAllocHost, and zeMemAllocShared, respectively. If you’d like to delve a bit deeper into the implementation behind this, please check out the Level Zero Core API Specification.

USM is only one example of the advanced device awareness that Level Zero provides. More details can be found in the Level Zero Core Programming Guide.

Summary and Next Steps

Level Zero provides a rich set of interfaces to schedule work and manage memory on compute units and accelerators. It provides all the services for loading and executing programs, allocating memory, and managing heterogeneous workloads. It does so with an open interface that can be customized to your specific hardware configuration, while maintaining the abstraction and flexibility required for workloads to run on less specialized setups.

Objects defined in Level Zero, such as command queues and command lists, allow for low-level control of the underlying hardware. With these and available optimization techniques, high-level programming languages and applications may execute workloads with close-to-metal latencies for higher performance.

In conjunction with SYCL, it can be seamlessly used and accessed using C++. Furthermore, we are currently leveraging our experiences with Python*, Julia*, and Java* to provide better language runtime support in Level Zero across languages. We would like to invite the open source community and the industry as a whole to contribute to Level Zero to make it an even more versatile and powerful interface for multi-architectural choice.

Additional Resources

- Intel® Graphics Compute Runtime for oneAPI Level Zero and OpenCL Driver

- Intel oneAPI DPC++/C++ Compiler Developer Guide and Reference: Intel oneAPI Level Zero

- oneAPI Level Zero Headers, Loader, and Validation Layer

The Parallel Universe Magazine

Intel’s quarterly magazine helps you take your software development into the future with the latest tools, tips, and training to expand your expertise.