Introduction

Intel® QuickAssist Technology (Intel® QAT) delivers high-performance capabilities for commonly used encryption/decryption and compression/decompression operations. With the advent of the Intel® Xeon® processor Scalable family, some servers now ship with Intel QAT onboard as part of the system’s Platform Controller Hub. It’s a great time for data center managers to learn how to optimize their operations with the power of Intel QAT.

Like any other attached device, Intel QAT should be used carefully to obtain optimal performance, especially in light of non-uniform memory access (NUMA) concerns on multisocket servers. When using an Intel QAT device, it is best to be aware of where it sits in the system topology so you can intelligently direct workloads into and out of the Intel QAT device channels.

In this article, we show how to discover your system’s NUMA topology, find your Intel QAT device identifiers, and configure your Intel QAT drivers to ensure that you are using the system as efficiently as possible.

Why Is NUMA Awareness Important?

In general, NUMA awareness is important whenever you are using a server that supports two or more physical processor sockets. Each processor socket has its own connections for accessing the system’s main memory and device buses. In a NUMA-based architecture, some physical memory and device buses are attached to, and accessed through, specific processor sockets. Take, for example, a two-socket server in which there are processors numbered 0 and 1. When accessing the memory that is directly attached to processor 0, processor 1 will have to cross an inter-processor bus. This access is “non-uniform” in the sense that processor 0 will access this memory faster than processor 1 does, because processor 1 has the longer access path. Under some (but not all) conditions, accessing memory or devices across NUMA boundaries will result in decreased performance.

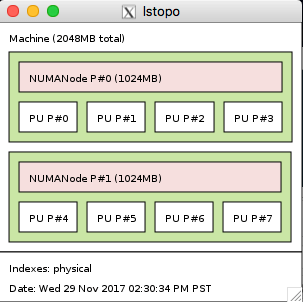

Figure 1. A simple, abstracted two-socket NUMA layout.

It is outside the scope of this article to discuss specific NUMA scenarios, architectures, and solutions, or the details of how memory and I/O buses are accessed in these scenarios. We will instead use a current-model, two-socket Intel® architecture server as our example as we discuss Intel QAT utilization. The general ideas described here can be extrapolated to other scenarios such as four- and eight-socket servers.

For more general information about NUMA, read NUMA: An Overview.

Discovering NUMA Topology

Note that in Figure 1, the processor sockets are labeled as NUMA nodes. Usually this is a direct mapping of node to socket, but it doesn’t have to work this way. When determining the NUMA layout, read the hardware documentation carefully to understand the exact definition and boundaries of a NUMA node.

A commonly available package for determining these layouts is the hwloc package, more formally known as the Portable Hardware Locality project. The project site includes complete instructions for obtaining and installing hwloc for your OS.

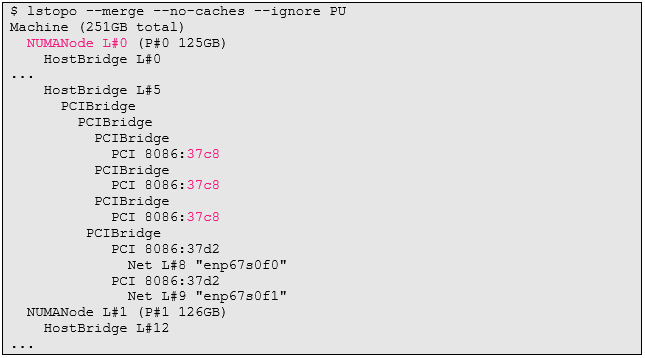

Once installed, you can use the lstopo command to determine your system’s topology. The tool can output in graphical format (see Figure 2 for an example) or in plain text.

Figure 2. Graphical output from the lstopo command.

The output shows that the system queried has two NUMA nodes. Here is the full command that was used to produce this output:

This directs the output to remove processing units (processor core details) and processor caches. It also removes elements that do not affect the NUMA hierarchy, such as the processor cores themselves. This information is interesting and useful for other queries, but is of little value in assessing Intel QAT location, at least for now.

Locating Intel QuickAssist Technology (Intel® QAT) Within the NUMA Topology

Locating Intel QAT devices can be a challenge, even with the help of lstopo. We must know which device identifier to look for. To do so, we'll use the lspci command, which is generally in the pciutils package. Most Linux* distributions include it by default.

Here is a command that can help locate the Intel QAT coprocessor devices:

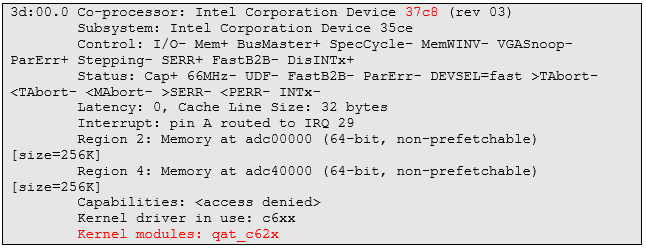

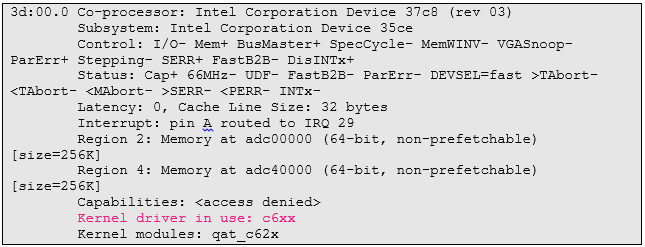

This command generates quite a bit of output. To find your Intel QAT devices, type /qat to search for the driver, and then page up or down to view entries. You should find entries that look like this one:

We've highlighted two items in red in the output. The one at the bottom is the one we found by searching the output, indicating that this device is controlled by the Intel QAT kernel driver. The second is the device identifier 37c8. You can see that this identifier is in the lstopo output shown in Figure 2. You can also see it in the text output, shown here:

We've elided some of the output (where “...” is shown) to make things visually clearer. Either this output’s indentation or the graphical output in Figure 2 shows that all three Intel QAT devices represented on this particular system fall into NUMA node 0.
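As a complement to lspci and lstopo, the NUMA node of a PCI device can also be read from Linux sysfs. The following is a minimal Python sketch rather than part of the procedure above. It assumes the standard sysfs attributes device and numa_node under /sys/bus/pci/devices; the function name pci_devices_by_id is our own.

```python
from pathlib import Path


def pci_devices_by_id(device_id: str, root: str = "/sys/bus/pci/devices") -> dict[str, int]:
    """Return {PCI address: NUMA node} for every device whose device ID matches.

    device_id is the hexadecimal device ID as printed by lspci, for
    example "37c8". A node value of -1 means the kernel has no NUMA
    information for that device.
    """
    wanted = int(device_id, 16)
    found: dict[str, int] = {}
    for dev in sorted(Path(root).iterdir()):
        try:
            # sysfs stores the ID as text such as "0x37c8"
            if int((dev / "device").read_text().strip(), 16) != wanted:
                continue
            found[dev.name] = int((dev / "numa_node").read_text().strip())
        except (OSError, ValueError):
            continue  # skip entries that lack the expected attributes
    return found
```

On a system like the one in this article, calling pci_devices_by_id("37c8") would be expected to list each Intel QAT device address together with node 0.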

Locating SR-IOV Devices

If your Intel QAT kernel driver was installed with support for hosting Single-Root I/O Virtualization (SR-IOV), you will see many more devices present (see Figure 3).

Figure 3. SR-IOV devices.

The graphical output has helpfully collapsed the SR-IOV virtual functions (VFs) into arrays of 16 devices per physical function (PF) device. In text format, each device identifier will be shown in the lstopo (and lspci) output individually.

Note that since VFs are derived from their host PFs, they reside on the same NUMA node as those PFs. Thus, in our example, all 48 VFs (16 for each of the three PFs) are in NUMA node 0.
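If you want to confirm the VF placement on your own system, one option is to follow the virtfnN symbolic links that Linux creates in a PF's sysfs directory when SR-IOV is enabled. The sketch below is illustrative only; the function names are hypothetical, and it assumes the usual sysfs layout under /sys/bus/pci/devices.

```python
from pathlib import Path


def vf_numa_nodes(pf_address: str, root: str = "/sys/bus/pci/devices") -> dict[str, int]:
    """Return {VF PCI address: NUMA node} for the VFs of one physical function."""
    pf_dir = Path(root) / pf_address
    nodes: dict[str, int] = {}
    for link in sorted(pf_dir.glob("virtfn*")):
        # Each virtfnN entry is a symlink to the VF's own device directory.
        vf_dir = link.resolve()
        nodes[vf_dir.name] = int((vf_dir / "numa_node").read_text().strip())
    return nodes


def vfs_share_pf_node(pf_address: str, root: str = "/sys/bus/pci/devices") -> bool:
    """True when every VF reports the same NUMA node as its PF.

    A PF without any VFs trivially returns True.
    """
    pf_node = int((Path(root) / pf_address / "numa_node").read_text().strip())
    return all(node == pf_node for node in vf_numa_nodes(pf_address, root).values())
```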

Using Intel® QAT with NUMA Awareness

The good news is that it is quite likely that your Intel QAT driver installation has already set up the Intel QAT devices correctly for the NUMA topology of your system. To check that it is correct and to fine-tune it, however, you must understand the topology, where Intel QAT exists within it, and how to ensure Intel QAT uses processor cores that are within the same NUMA node. We now know how to do the first two of those activities; let's examine the last.

When we worked with lstopo previously, we used the --ignore PU flag so that the output would not be cluttered with extra information. Now we want to see the layout of processor cores, so let’s try it without the flag:

Figure 4. Four cores per NUMA node.

In this case, we actually cheated a little. Here's the command that generated that output:

We asked lstopo to simulate a two-node NUMA topology with four cores per node. We did this to keep the output readable: the machine we've been running our tests on has 48 processor cores on board, which would create very long output in text form or very wide output in graphical form.

The important part is seeing the processor numbering. Here it is evident that processors 0‒3 are installed to NUMA node 0 and processors 4‒7 are installed to NUMA node 1. Now we are ready to configure our Intel QAT drivers appropriately.
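The same processor-to-node numbering can be collected programmatically. The sketch below is one possible approach, assuming the Linux sysfs files /sys/devices/system/node/nodeN/cpulist, which hold range lists such as 0-3; the helper names are our own and are not part of hwloc.

```python
from pathlib import Path


def parse_cpulist(text: str) -> list[int]:
    """Expand a kernel CPU list such as "0-3,8-11" into individual CPU numbers."""
    cpus: list[int] = []
    for part in text.strip().split(","):
        if not part:
            continue  # an empty list (a node without CPUs) yields no entries
        if "-" in part:
            first, last = part.split("-")
            cpus.extend(range(int(first), int(last) + 1))
        else:
            cpus.append(int(part))
    return cpus


def numa_node_cpus(root: str = "/sys/devices/system/node") -> dict[int, list[int]]:
    """Map each NUMA node number to the CPUs the kernel reports for it."""
    nodes: dict[int, list[int]] = {}
    for cpulist in Path(root).glob("node[0-9]*/cpulist"):
        node = int(cpulist.parent.name[len("node"):])
        nodes[node] = parse_cpulist(cpulist.read_text())
    return nodes
```

For the simulated topology in Figure 4, the result would correspond to node 0 holding processors 0 through 3 and node 1 holding processors 4 through 7.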

Let's revisit the output from lspci above; this time we'll highlight a different value:

The kernel driver reported to be in use for our current Intel QAT installation is c6xx. The configuration files for the devices in use can be found in /etc with this prefix:

Note that if you have SR-IOV enabled, you will also see the VF device configuration files in /etc, but it is unnecessary to configure them since they follow the configuration of their parent host PF. Their affinity selections will be centered on the (likely) single- and dual-core virtual machines (VMs) that use them.

Now we can examine the processor affinity selections within the configuration files. Here's an easy way to get that information:

The core affinity settings specify the core numbers to use for various Intel QAT functions. As mentioned at the beginning of this section, it is likely that the installation of your Intel QAT drivers has already configured this, but you should check to make sure. Note that in the above output, affinity values are recorded for processors 0‒22. In the system that we've been using for examples, processors 0‒23 are on NUMA node 0 and are therefore co-resident with all the Intel QAT devices. This machine is configured without cross-NUMA-node problems.
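The check described above can also be automated. The following sketch flags any affinity entry that points at a core outside a given NUMA node. It is written under an assumption: that the configuration files use lines of the form Cy0CoreAffinity = 3, with key names ending in CoreAffinity and a single core number as the value. Verify this against your own files before relying on it; the function name is hypothetical.

```python
import re

# Assumed line format: "<Name>CoreAffinity = <core number>".
# Commented-out lines (starting with '#') are not matched.
_AFFINITY = re.compile(r"^\s*(\w*CoreAffinity)\s*=\s*(\d+)", re.MULTILINE)


def cross_node_affinities(conf_text: str, node_cpus: set[int]) -> list[tuple[str, int]]:
    """Return (key, core) pairs whose core is not one of node_cpus."""
    return [
        (key, int(core))
        for key, core in _AFFINITY.findall(conf_text)
        if int(core) not in node_cpus
    ]
```

For the example system, you would pass the text of one device configuration file together with set(range(24)), since processors 0‒23 are on NUMA node 0. An empty result means no affinity entry crosses the node boundary.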

If we did find core affinity set to cross the NUMA node boundary, we would want to edit these files to specify core numbers within the same node as the device under consideration. After doing that, we would reset the drivers with the following command:

A note about virtual machines and VFs

In an SR-IOV configuration, it is wise to also pin your physical CPU usage to cores that are in the same NUMA node as your Intel QAT devices. You can do this in various ways, depending on your hypervisor of choice. See your hypervisor's documentation to determine how to best allocate NUMA resources within it. For example, QEMU*/KVM* allows usage of the -numa flag to specify a particular NUMA node for a VM to run within.

Running applications

Finally, for applications that utilize Intel QAT, it may be optimal to ensure that they too operate within the same NUMA node boundaries. This is easily accomplished by launching them in a NUMA-aware fashion with the numactl command. It is available in the numactl package on most Linux distributions. The easiest way to ensure usage of local resources is as follows:

This tells the system to launch command with arguments (that is, a normal application launch) and ensure that both memory and CPU utilization are isolated to NUMA node 0. If your targeted Intel QAT devices are on node 0, you might try launching this way to ensure optimal NUMA usage.
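If you launch applications from a script, the same binding can be expressed by wrapping the command line with numactl. The sketch below is a minimal illustration, not the only way to do this. It uses numactl's --membind and --cpunodebind options, and the helper names numactl_argv and run_on_node are our own.

```python
import subprocess


def numactl_argv(node: int, command: list[str]) -> list[str]:
    """Build an argv that runs command with memory and CPUs bound to one NUMA node."""
    # "--" keeps the application's own options from being read as numactl options.
    return ["numactl", f"--membind={node}", f"--cpunodebind={node}", "--", *command]


def run_on_node(node: int, command: list[str]) -> int:
    """Launch command under numactl and return its exit status."""
    return subprocess.run(numactl_argv(node, command), check=False).returncode
```

For example, run_on_node(0, ["myapp", "--flag"]) would start a hypothetical myapp with both its memory allocation and its CPU placement restricted to NUMA node 0.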

Summary

We examined how to discover your system's NUMA topology, locate Intel QAT devices within that topology, and adjust the configuration of the Intel QAT drivers and application launch to best take advantage of the system.

In general, a well-installed system with proper Intel QAT drivers will likely not need much adjustment. However, if Intel QAT is configured for cross-node operation, performance may suffer.

About the Author

Jim Chamings is a senior software engineer at Intel Corporation. He works for the Intel Developer Relations Division, in the Data Center Scale Engineering team, specializing in Cloud and SDN/NFV. You can reach him at jim.chamings@intel.com.