The Intel oneAPI Centre of Excellence at the Hawking Centre for Theoretical Cosmology (CTC) (Department of Applied Mathematics and Theoretical Physics, University of Cambridge) uses SYCL-based GPU accelerator offload and Intel® oneAPI Toolkits to increase the speed and resolution of large-scale cosmological simulations of gravity, pushing the performance of its open-source numerical relativity code, GRTeclyn*, to the next level.

Asking Fundamental Questions

- How did the Universe begin?

- What is the nature of gravity?

- What happens when two black holes collide?

These are some of the fundamental questions the Hawking Centre for Theoretical Cosmology (CTC) aims to answer about the nature of our Universe. The CTC has enjoyed a long collaboration with Intel, starting with Professor Stephen Hawking and his text-to-voice transcription program and continuing with the Intel Parallel Computing Center, the precursor to the oneAPI Centers of Excellence. With GRTeclyn, we actively leveraged Intel’s open, standards-based, and open-source ecosystem of oneAPI software developer solutions. The oneAPI specification at its center is a cornerstone of the Unified Acceleration (UXL) Foundation’s effort to build a unified, multi-architecture, multi-vendor software ecosystem for all accelerators.

Combining this with the Intel® Max Series product family of CPU and GPU devices for data center, high-performance computing, and AI workloads, we have made substantial advances in our understanding of gravity.

Understanding Gravitational Fields

When physicists want to know how objects behave in a strong gravitational field, we solve a set of coupled partial differential equations called Einstein’s equations. These equations describe the relationship between the geometry of spacetime and the distribution of matter within it. In the case of complicated geometries, such as the merger of two black holes, we must rely on resource-intensive numerical simulations to find solutions and understand how they evolve over time.

When solving a physical system using finite volume elements, we aim to discretize the problem such that each grid point represents a location in space and time for a quantity we want to track; this might be ocean currents for meteorologists or wind flow around a turbine. While this is still true for General Relativity, we must carefully consider how the spatial and temporal slicing is achieved within the simulation because, as we know from Einstein’s famous thought experiments (and the movie Interstellar!), not every observer measures the passage of time in the same way. Also, as spacetime is stretched in the presence of a massive body, distances are not the same for everybody either.

As a result of these ambiguities, there are different ways of writing Einstein’s equations; some of these are more numerically stable (well posed) than others. It took about 20 years of research before a stable formalism could be found! Today, these simulations are still incredibly computationally expensive.
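For readers who want to see the equations behind this discussion, they are usually written in the compact tensor form below. The notation is the standard textbook one, not something taken from GRTeclyn itself, and the article does not say which specific formulation the code evolves. Here $G_{\mu\nu}$ is the Einstein tensor describing the curvature of spacetime, $T_{\mu\nu}$ is the stress-energy tensor describing the matter content, and $G_N$ is Newton's constant:

$$
G_{\mu\nu} = \frac{8\pi G_N}{c^4}\, T_{\mu\nu}
$$

Numerical codes typically split spacetime into spatial slices labelled by a time coordinate $t$ (the "3+1" decomposition). In units where $c = 1$, the line element then takes the form

$$
ds^2 = -\alpha^2\, dt^2 + \gamma_{ij}\,\bigl(dx^i + \beta^i\, dt\bigr)\bigl(dx^j + \beta^j\, dt\bigr)
$$

where $\gamma_{ij}$ is the metric on each spatial slice, the lapse $\alpha$ sets how much proper time elapses between slices, and the shift $\beta^i$ sets how spatial coordinates move from one slice to the next. The freedom to choose $\alpha$ and $\beta^i$ is the slicing ambiguity described above.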

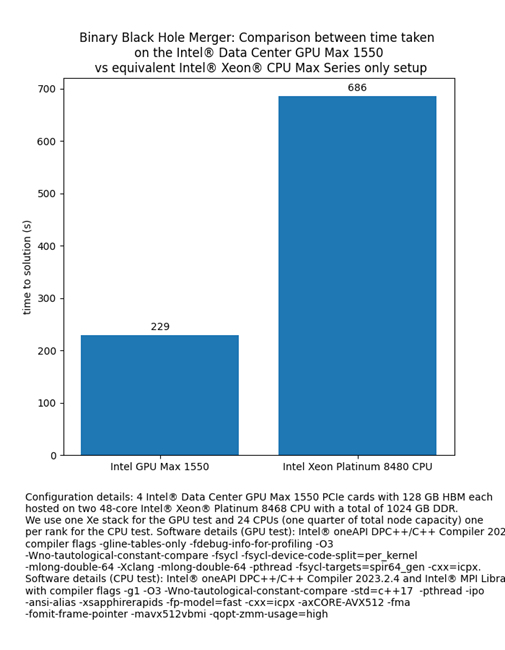

This is where Intel’s expertise in advanced accelerated computing using GPUs comes into play. Using the Intel® Data Center GPU Max 1550, we have run our simulations at approximately 3x the speed of an equivalent CPU simulation. Beyond simply enabling us to run low-resolution simulations more quickly, these developments will also allow us to explore larger and higher-resolution simulations with more detailed physics.

Our open-source numerical relativity code, GRTeclyn, is based on AMReX* [1]. AMReX is an adaptive mesh refinement (AMR) library optimized for exascale computations, developed as an Exascale Computing Project (ECP) and Linux Foundation project. It has a flexible, performance-portable backend that allows it to run on many different GPU architectures. For Intel GPUs, the AMReX backend is based on the SYCL* 2020 standard and compiled using the Intel® oneAPI DPC++/C++ Compiler. Our initial results on a single node are very promising: in the figure below, we show the speed-up obtained on 1 stack of the Intel® Data Center GPU Max Series (1 MPI rank to 1 GPU) vs. one socket of a 4th Gen Intel® Xeon® Scalable Processor node (24 MPI ranks). Our tests were performed on Dawn, the Intel® Data Center GPU Max Series accelerated supercomputer hosted by the University of Cambridge and currently ranked at #65 on the TOP500 (Nov 2024).

Fig. 1: Binary Black Hole Merger Computation Time Comparison, GPU Offload versus CPU Only.

Our calculations are particularly suitable for GPU offloading because the regions in the simulation volume can be broken down into a nested hierarchy of meshes, with each grid point representing a position in space and time that must satisfy Einstein’s equations. Apart from a boundary layer of cells (the so-called ‘ghost region’) that must be exchanged with another MPI process at the end of each timestep, each box is self-contained and can be pushed onto the GPU for parallel processing. The relatively large device memory of the Intel Data Center GPU Max Series makes the process even more efficient.
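The idea of self-contained boxes plus a ghost region can be illustrated with a deliberately small sketch. It is not GRTeclyn or AMReX code: it assumes a single-level, one-dimensional periodic grid, a one-cell-wide ghost layer, and a simple second-difference stencil, and all function names are hypothetical. It shows that once each box has copied its neighbours' edge cells, the stencil can be applied to every box independently and still reproduce the result computed on the whole grid.

```python
def second_difference_global(u):
    """Apply the stencil u[i-1] - 2*u[i] + u[i+1] on a periodic 1D grid."""
    n = len(u)
    return [u[(i - 1) % n] - 2 * u[i] + u[(i + 1) % n] for i in range(n)]


def split_into_boxes(u, box_size):
    """Cut the grid into contiguous boxes of `box_size` cells."""
    return [u[i:i + box_size] for i in range(0, len(u), box_size)]


def fill_ghosts(boxes):
    """Pad each box with one ghost cell per side, copied from its neighbours.

    In a distributed run this copy would be an MPI exchange between ranks;
    here it is a plain list lookup with periodic wrap-around.
    """
    n = len(boxes)
    padded = []
    for b, box in enumerate(boxes):
        left_ghost = boxes[(b - 1) % n][-1]
        right_ghost = boxes[(b + 1) % n][0]
        padded.append([left_ghost] + box + [right_ghost])
    return padded


def second_difference_box(padded_box):
    """Apply the stencil to the interior cells of one padded box."""
    return [
        padded_box[i - 1] - 2 * padded_box[i] + padded_box[i + 1]
        for i in range(1, len(padded_box) - 1)
    ]


# Sixteen cells split into four boxes of four cells each.
u = [float(i * i % 7) for i in range(16)]
boxes = split_into_boxes(u, 4)
padded = fill_ghosts(boxes)

# Each box is processed on its own; this loop is what could run in parallel.
per_box = [second_difference_box(p) for p in padded]
result = [value for box in per_box for value in box]

print(result == second_difference_global(u))
```

After the ghost fill, no box needs to look at another box's data, which is what makes each one a natural unit of work for an accelerator.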

Visualizing the Early Universe

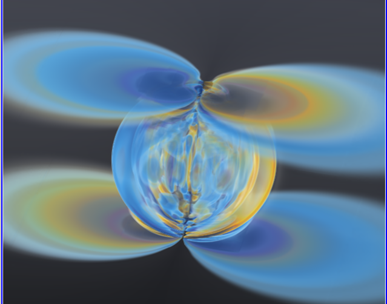

We have also enjoyed close collaboration with Intel’s Visualization and Rendering team, leveraging Intel® OSPRay and ParaView*. Amelia Drew and Paul Shellard of the Department of Applied Mathematics and Theoretical Physics (DAMTP) at the University of Cambridge and Carson Brownlee (formerly Intel) were awarded the DiRAC 2024 Research Image of the Year (particle physics division) for their depiction of the axion radiation emitted by cosmic strings.

These cosmic strings are leftover ‘defects’ from a time when the early Universe underwent an extremely fast period of expansion known as inflation. Detecting the unique radiation signature of cosmic strings would be an important clue as to how the Universe began.

Fig. 2: Dramatic burst of radiation from two colliding kinks on a cosmic string.

Credit: Amelia Drew (DAMTP), Paul Shellard (DAMTP) and Carson Brownlee (Intel).

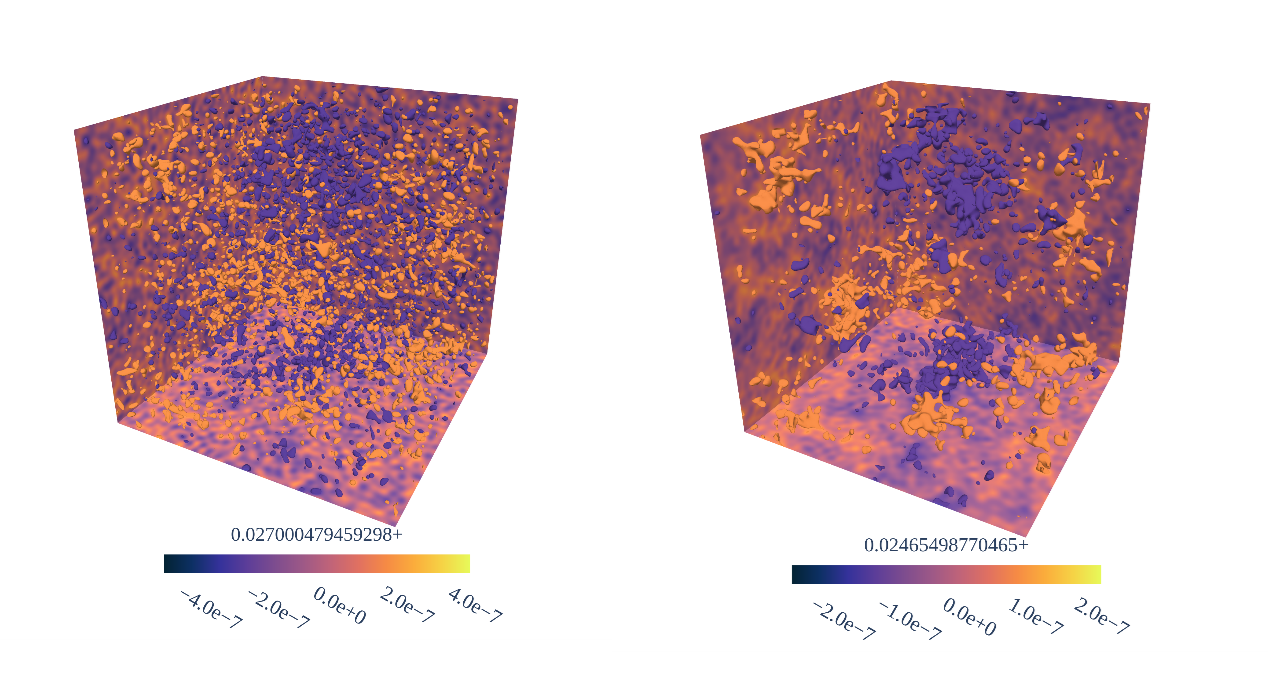

Visualizations can help us to more easily understand the time evolution of physical processes captured by the simulations. With GRTeclyn, scientists Yoann Launay (DAMTP), Gerasimos Rigopoulos (Newcastle University), and Paul Shellard (DAMTP) were able to evolve the density field during inflation for the first time from stochastic initial conditions and within a fully General Relativistic approach. Figure 3 shows the change in the inflaton field from an early quantum state on the left to the smoother conditions on the right.

Fig. 3: Evolution of quantum density fluctuations in stochastic inflation using GRTeclyn.

Credit: Yoann Launay (DAMTP), Gerasimos Rigopoulos (Newcastle University) and Paul Shellard (DAMTP).

Monitoring Simulation Progress

Additionally, to aid in our exascale goals, we have been working with Intel engineers to develop in-situ visualization techniques for GRTeclyn. In-situ visualization allows us to output the state of the simulation while it is still running to create images for later analysis without having to store fine-grained snapshots. This will be crucial for running on exascale systems, whose I/O capabilities simply haven’t kept up with their computational power, so saving outputs at every timestep to explore fine-grained temporal changes or to create movies for education and public outreach is not feasible. It will also reduce the strain on data storage and on the computational systems required for data analysis. For example, a low-resolution binary black hole merger simulation might contain nine AMR levels, each with 27 variables per grid point. While this is only 500 MB in storage, capturing several orbits as well as the aftermath in the so-called final ‘ring-down’ phase requires around 2000 timesteps. Creating a smooth movie of the process might require one output every 1-2 timesteps. Furthermore, post-processing the outputs means reading all the grids back into memory, which might only be possible on the cluster used to generate the simulation in the first place!
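To put rough numbers on the example above, the short calculation below estimates the total output volume. It assumes that the 500 MB figure is the size of one full snapshot and that every output is such a snapshot; the article does not state this explicitly, so treat the result as an order-of-magnitude illustration. The function name is hypothetical.

```python
def total_output_tb(snapshot_mb, timesteps, output_every):
    """Total storage in decimal terabytes when one snapshot of size
    `snapshot_mb` is written every `output_every` timesteps."""
    n_outputs = timesteps // output_every
    return snapshot_mb * n_outputs / 1_000_000  # 1 TB = 10^6 MB


# Figures quoted in the text: ~500 MB, ~2000 timesteps, output every 1-2 steps.
# The per-snapshot interpretation of 500 MB is an assumption.
for every in (1, 2):
    size_tb = total_output_tb(500, 2000, every)
    print(f"one output every {every} timestep(s): {size_tb:.1f} TB")
```

Under these assumptions, even a low-resolution run produces between roughly 0.5 TB and 1 TB of snapshots for a single movie, which is the kind of volume in-situ visualization is meant to avoid writing to disk.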

Our strategy for in-situ visualization is to use ParaView* Catalyst and the Conduit* Blueprint data structure to take the meshes from GRTeclyn and convert them into the standard VTK data format, which ParaView can understand. ParaView Catalyst provides a clean, convenient API to ParaView such that users do not have to interact directly with cumbersome VTK functions, while Conduit Blueprint has been designed to handle scientific mesh data and so is very straightforward to use. ParaView Catalyst is initialized at the start of the simulation with a Python control script that specifies the type of visualization to be performed; options include writing a snapshot of the current state of the simulation or passing control to ParaView Live co-processing, which allows the user to stop the simulation within ParaView and inspect its content. When a user has marked a timestep for output, GRTeclyn will communicate its data structures to ParaView Catalyst by passing a Conduit Blueprint node. ParaView Catalyst will then convert the Conduit Blueprint node to VTK data format for ParaView. The tricky part is coordinating all of this in parallel while eliminating as many computational overheads as possible, as each MPI rank will hold a different portion of the nested mesh structure.
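To give a feel for what such a node contains, the sketch below builds the description of a single uniform box as a plain nested Python dictionary. This is an illustration only: it does not call the Conduit or Catalyst libraries, it ignores the AMR nesting and MPI decomposition that GRTeclyn has to handle, and the helper names and the field name `chi` are hypothetical. The key paths follow the Conduit Blueprint mesh conventions for a uniform mesh and the `catalyst/state` and `catalyst/channels` layout that ParaView Catalyst expects, as far as we understand them.

```python
def uniform_box_blueprint(origin, spacing, cells, field_name, values):
    """Describe one uniform 3D box with a cell-centred field, laid out
    in the style of a Conduit Blueprint mesh (as a plain dict)."""
    nx, ny, nz = cells
    if len(values) != nx * ny * nz:
        raise ValueError("expected one value per cell")
    return {
        "coordsets": {
            "coords": {
                "type": "uniform",
                # dims count grid points, i.e. one more than the cell count
                "dims": {"i": nx + 1, "j": ny + 1, "k": nz + 1},
                "origin": {"x": origin[0], "y": origin[1], "z": origin[2]},
                "spacing": {"dx": spacing[0], "dy": spacing[1], "dz": spacing[2]},
            }
        },
        "topologies": {"mesh": {"type": "uniform", "coordset": "coords"}},
        "fields": {
            field_name: {
                "association": "element",  # values live on cells
                "topology": "mesh",
                "values": list(values),
            }
        },
    }


def catalyst_execute_params(timestep, time, mesh, channel="grid"):
    """Wrap a mesh description with the simulation state for one output step."""
    return {
        "catalyst": {
            "state": {"timestep": timestep, "time": time},
            "channels": {channel: {"type": "mesh", "data": mesh}},
        }
    }


# A 2 x 2 x 2 box with a constant, hypothetical field called "chi".
mesh = uniform_box_blueprint(
    origin=(0.0, 0.0, 0.0),
    spacing=(0.5, 0.5, 0.5),
    cells=(2, 2, 2),
    field_name="chi",
    values=[1.0] * 8,
)
params = catalyst_execute_params(timestep=10, time=0.25, mesh=mesh)
print(sorted(params["catalyst"]["channels"]["grid"]["data"].keys()))
```

In a real integration the same hierarchy would be filled into a Conduit node, ideally pointing at the simulation's existing arrays rather than copying them, and handed to Catalyst at each marked timestep.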

Meshes can also be offloaded to the Intel Data Center GPU Max 1550 using the SYCL backend of VTK-m to accelerate the process.

Fig. 4: Integrating ParaView Catalyst with GRTeclyn for In-Situ Visualization.

More Insights to Come

Looking ahead, 2025 will be a busy year for the CTC as we shift towards machine learning and AI applications. Using the oneAPI-accelerated GRTeclyn to generate templates, we hope to train gravitational wave analysis pipelines to identify exotic new forms of matter. We will continue our lecture series on SYCL and performance portability as part of our Master’s program in Data Intensive Science to train the next generation of oneAPI software engineers.

Join the Open Accelerated Computing Revolution

Accelerated heterogeneous computing is finding its way into every facet of technology development. By embracing openness, you can access the active ecosystem of software developers. The oneAPI specification element source code is available on GitHub.

Let us drive the future of accelerated computing together; become a UXL Foundation member today.

Get Started with oneAPI

If you find the possibilities for accelerated compute discussed here intriguing, check out the latest Intel® oneAPI DPC++/C++ Compiler, either stand-alone or as part of the Intel® oneAPI Base Toolkit, the Intel® oneAPI HPC Toolkit, or the toolkit selector essentials packages.

Additional Resources

- Hawking Centre for Theoretical Cosmology at the University of Cambridge

- GRTeclyn Open-Source Numerical Relativity Code

- AMReX Block-Structured AMR Software Framework and Applications

References

- [1] Zhang, W., Myers, A., Gott, K., Almgren, A., and Bell, J., AMReX: Block-structured adaptive mesh refinement for multiphysics applications, The International Journal of High Performance Computing Applications. 2021;35(6):508-526.

- [2] Drew, A., Kinowski, T., and Shellard, E. P. S., Axion String Source Modelling, Phys. Rev. D. 2024;110:043513.