As companies place increasing importance on achieving sustainability goals, more-adaptive on-node systems can help optimize available resources to decrease system power use and cooling costs. By combining TuneD, a system tuning service for Linux*, with Kubernetes* Power Manager, you can take advantage of power-management features already built into the CPU to design more intelligent systems.

This talk was first presented at KubeCon + CloudNativeCon Europe 2023. Check out the full 33-minute session or download the slides.

Power Optimizations at the Operating System (OS) Level

At the node level, there are two complementary ways to optimize power: TuneD performance profiles, which tune the operating system, and the CPU power controls that the processor exposes to the Linux kernel.

TuneD Performance Profiles

In a Red Hat OpenShift* environment, TuneD provides a framework for configuring hardware power optimizations through performance profiles. A profile applies a specific set of tuning settings to a node so that the operating system performs better for a given type of workload. TuneD ships out-of-the-box profiles for common use cases, such as throughput-performance for high-throughput workloads and network-latency for low-latency network workloads. You can also create custom profiles for special workloads.
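A custom TuneD profile is a directory that contains a `tuned.conf` file in INI format. The minimal Python sketch below renders one that inherits from the stock `throughput-performance` profile and overrides the CPU governor. The helper name `render_tuned_profile` is hypothetical. The `include`, `governor`, and `force_latency` keys come from TuneD's profile format as we understand it, so check the TuneD documentation for your version.

```python
import configparser
import io
from typing import Optional


# Hypothetical helper for illustration; not part of TuneD itself.
def render_tuned_profile(summary: str, parent: str, governor: str,
                         force_latency_us: Optional[int] = None) -> str:
    """Render a custom TuneD profile (the contents of tuned.conf) as INI text."""
    cfg = configparser.ConfigParser()
    # [main] include= inherits every setting from an existing TuneD profile.
    cfg["main"] = {"summary": summary, "include": parent}
    # [cpu] overrides the CPU plugin: the frequency governor and, optionally,
    # a PM QoS latency limit in microseconds (force_latency).
    cpu = {"governor": governor}
    if force_latency_us is not None:
        cpu["force_latency"] = str(force_latency_us)
    cfg["cpu"] = cpu
    buf = io.StringIO()
    # TuneD profiles are conventionally written as key=value without spaces.
    cfg.write(buf, space_around_delimiters=False)
    return buf.getvalue()
```

On a standalone RHEL host, you could save the output as /etc/tuned/<name>/tuned.conf and activate it with tuned-adm profile <name>. In OpenShift, the same profile text is normally embedded in a Tuned custom resource that the Node Tuning Operator rolls out, rather than written to each node by hand.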

CPU Power Controls: P-states and C-states

Another way to optimize power is to control how the CPU runs. Each CPU supports a certain range of frequencies; higher frequencies deliver faster processing but consume more power. By default, the CPU and the kernel manage this automatically. You can also tune the behavior to your workload’s needs through two controls: P-states and C-states.

P-states (performance states) set the frequency and voltage at which an active core runs. In Linux, CPU frequency scaling governors drive P-state selection. A governor can let the frequency follow the load, or it can lock each core at the same level throughout a workload. You can choose a governor that saves power, one that maximizes performance, or one that balances both.

C-states (idle states) control how deeply a core sleeps when it has no work. A core limited to the shallowest C-states is always ready to perform, but it keeps drawing power even when idle. Allowing deeper C-states lets more parts of the processor power down, which saves energy at the cost of a longer wake-up latency. For workloads that can tolerate higher latency, you can save power by allowing deeper C-states.
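The latency trade-off can be made concrete with the standard Linux cpuidle interface. Each idle state appears under /sys/devices/system/cpu/cpuN/cpuidle/stateK/ with a `name` file and a `latency` file, where `latency` is the exit latency in microseconds. The Python sketch below reads those states and picks the deepest one whose exit latency fits a workload's latency budget. This roughly mirrors how a latency limit constrains the kernel's idle-state choice. The function names are hypothetical, and the example latencies in any test data are invented.

```python
from pathlib import Path
from typing import List, Optional, Tuple


# Hypothetical helpers for illustration; the sysfs layout is the standard Linux one.
def read_idle_states(cpuidle_dir: Path) -> List[Tuple[str, int]]:
    """Return (name, exit_latency_us) for each idle state, shallowest first.

    cpuidle_dir is normally /sys/devices/system/cpu/cpu0/cpuidle.
    """
    dirs = sorted(Path(cpuidle_dir).glob("state*"),
                  key=lambda p: int(p.name[len("state"):]))
    return [((d / "name").read_text().strip(), int((d / "latency").read_text()))
            for d in dirs]


def deepest_allowed(states: List[Tuple[str, int]],
                    max_latency_us: int) -> Optional[str]:
    """Pick the deepest idle state whose exit latency fits the latency budget."""
    chosen = None
    for name, latency in states:  # ordered shallowest to deepest
        if latency <= max_latency_us:
            chosen = name
    return chosen
```

A larger latency budget admits deeper states and therefore saves more power. A budget of zero leaves only the shallowest state.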

Soon, an upcoming control called uncore frequency will let you set the frequency of everything on the CPU that’s not in a core, such as the memory controller and cache coherency logic. Uncore frequency control is not currently supported but is expected in the next Kubernetes Power Manager release.
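The underlying Linux interface gives a sense of what this control does. On kernels with the intel_uncore_frequency driver, each package/die gets a sysfs directory, such as /sys/devices/system/cpu/intel_uncore_frequency/package_00_die_00. That directory holds `min_freq_khz` and `max_freq_khz` files, plus `initial_*` limits. The hypothetical helper below is not part of Kubernetes Power Manager. It clamps a requested range to those limits and writes it.

```python
from pathlib import Path
from typing import Tuple


# Hypothetical helper for illustration; not Kubernetes Power Manager code.
def set_uncore_range(die_dir: Path, min_khz: int, max_khz: int) -> Tuple[int, int]:
    """Clamp and apply an uncore frequency range (kHz) for one package/die.

    Writing to the real sysfs directory requires root.
    """
    if min_khz > max_khz:
        raise ValueError("min_khz must not exceed max_khz")
    d = Path(die_dir)
    floor = int((d / "initial_min_freq_khz").read_text())
    ceiling = int((d / "initial_max_freq_khz").read_text())
    # Keep the request inside the limits the driver reported when it loaded.
    lo = min(max(min_khz, floor), ceiling)
    hi = min(max(max_khz, floor), ceiling)
    current_max = int((d / "max_freq_khz").read_text())
    # Order the writes so min never exceeds max, even between the two writes.
    if lo > current_max:
        writes = [("max_freq_khz", hi), ("min_freq_khz", lo)]
    else:
        writes = [("min_freq_khz", lo), ("max_freq_khz", hi)]
    for fname, value in writes:
        (d / fname).write_text(str(value))
    return lo, hi
```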

Power Optimizations at the Kubernetes Level

How does Kubernetes factor into the power savings picture? Once you’ve defined your performance profile and the nodes it applies to, the Kubernetes Node Tuning Operator (NTO) extracts the tuning rules and applies them to the lower-level components on each node. NTO configures the kubelet, whose CPU manager, topology manager, and memory manager control how CPUs and memory are assigned to workloads. It also passes the tuning rules to TuneD, which applies them on every selected node and sets the P-state and C-state behavior the profile calls for.

Once you configure the performance profile, the Kubernetes Node Tuning Operator (NTO) shares the tuning rules with the kubelet and TuneD to be applied across the node.
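The Python sketch below builds a PerformanceProfile manifest as a dictionary and prints it as JSON, which `oc apply -f` accepts. The field names follow the performance.openshift.io/v2 API as we understand it. The profile name, CPU ranges, and node-role label are illustrative assumptions. The workload hints shown are the combination OpenShift documents for per-pod power management, so verify them against the documentation for your release.

```python
import json
from typing import Dict


# Hypothetical helper for illustration; field names assume the v2 API.
def performance_profile(name: str, reserved: str, isolated: str,
                        node_selector: Dict[str, str]) -> dict:
    """Build a PerformanceProfile manifest that enables per-pod power management."""
    return {
        "apiVersion": "performance.openshift.io/v2",
        "kind": "PerformanceProfile",
        "metadata": {"name": name},
        "spec": {
            # CPUs kept for housekeeping work vs. CPUs for pinned workloads.
            "cpu": {"reserved": reserved, "isolated": isolated},
            # Only nodes carrying this label receive the tuning.
            "nodeSelector": node_selector,
            # Hints that NTO turns into kubelet and TuneD settings; this
            # combination lets individual pods opt back into full performance.
            "workloadHints": {
                "realTime": True,
                "highPowerConsumption": False,
                "perPodPowerManagement": True,
            },
        },
    }


manifest = performance_profile("mixed-workloads", "0-1", "2-15",
                               {"node-role.kubernetes.io/worker-cnf": ""})
print(json.dumps(manifest, indent=2))
```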

NTO can control several CPU settings to enable energy optimizations. These include disabling CPU cores, selecting specific CPU governors per core or per group of cores, and granularly controlling configurations per pod for mixed workloads.
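NTO and TuneD set governors for you. Underneath, per-core governor selection comes down to the kernel's cpufreq sysfs files: each CPU has `scaling_available_governors` and `scaling_governor` under /sys/devices/system/cpu/cpuN/cpufreq/. The sketch below uses hypothetical helper names and is not NTO's implementation. It expands a cpuset list such as "0-3,8" and sets a governor on just those cores.

```python
from pathlib import Path
from typing import List


# Hypothetical helpers for illustration; not NTO or TuneD code.
def parse_cpuset(spec: str) -> List[int]:
    """Expand a Linux cpuset list such as "0-3,8" into [0, 1, 2, 3, 8]."""
    cpus = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            lo, hi = part.split("-", 1)
            cpus.update(range(int(lo), int(hi) + 1))
        else:
            cpus.add(int(part))
    return sorted(cpus)


def set_governor(cpu_root: Path, cpuset: str, governor: str) -> None:
    """Set the cpufreq scaling governor on every CPU in cpuset.

    cpu_root is normally /sys/devices/system/cpu; writing there needs root.
    """
    for cpu in parse_cpuset(cpuset):
        cpufreq = Path(cpu_root) / f"cpu{cpu}" / "cpufreq"
        available = (cpufreq / "scaling_available_governors").read_text().split()
        if governor not in available:
            raise ValueError(f"cpu{cpu}: {governor!r} not in {available}")
        (cpufreq / "scaling_governor").write_text(governor)
```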

Using NTO to Disable CPUs

Let’s say you overprovisioned your hardware for future growth, and some CPUs are currently unused. Through a configuration change in the performance profile, you can disable those cores to save power until you need them. Note that turning them back on requires a node reboot. CPU deactivation is expected to become hot pluggable soon, but for now this optimization may not suit every type of deployment.
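As a sketch of that configuration change, the Python below computes the spare CPUs and formats them as a cpuset list for the profile's CPU section. It assumes the PerformanceProfile `spec.cpu` block accepts an `offlined` field alongside `reserved` and `isolated`, which we understand recent OpenShift releases support; confirm this for your version. The helper names are hypothetical.

```python
from typing import Iterable


# Hypothetical helpers for illustration; assumes spec.cpu supports "offlined".
def to_cpuset(cpus: Iterable[int]) -> str:
    """Format CPU IDs as a compact cpuset list, e.g. [0, 1, 2, 5] -> "0-2,5"."""
    ids = sorted(set(cpus))
    parts = []
    i = 0
    while i < len(ids):
        start = ids[i]
        # Extend the run while the next ID is consecutive.
        while i + 1 < len(ids) and ids[i + 1] == ids[i] + 1:
            i += 1
        parts.append(str(start) if ids[i] == start else f"{start}-{ids[i]}")
        i += 1
    return ",".join(parts)


def cpu_spec(total_cpus: int, reserved: Iterable[int],
             isolated: Iterable[int]) -> dict:
    """Build a spec.cpu block that offlines every CPU not reserved or isolated."""
    reserved, isolated = set(reserved), set(isolated)
    if reserved & isolated:
        raise ValueError("reserved and isolated CPUs overlap")
    spare = set(range(total_cpus)) - reserved - isolated
    spec = {"reserved": to_cpuset(reserved), "isolated": to_cpuset(isolated)}
    if spare:
        spec["offlined"] = to_cpuset(spare)
    return spec
```

For example, on a 16-CPU node using CPUs 0 through 11, cpu_spec(16, [0, 1], range(2, 12)) marks CPUs 12-15 as offlined.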

Using NTO for Mixed Workloads

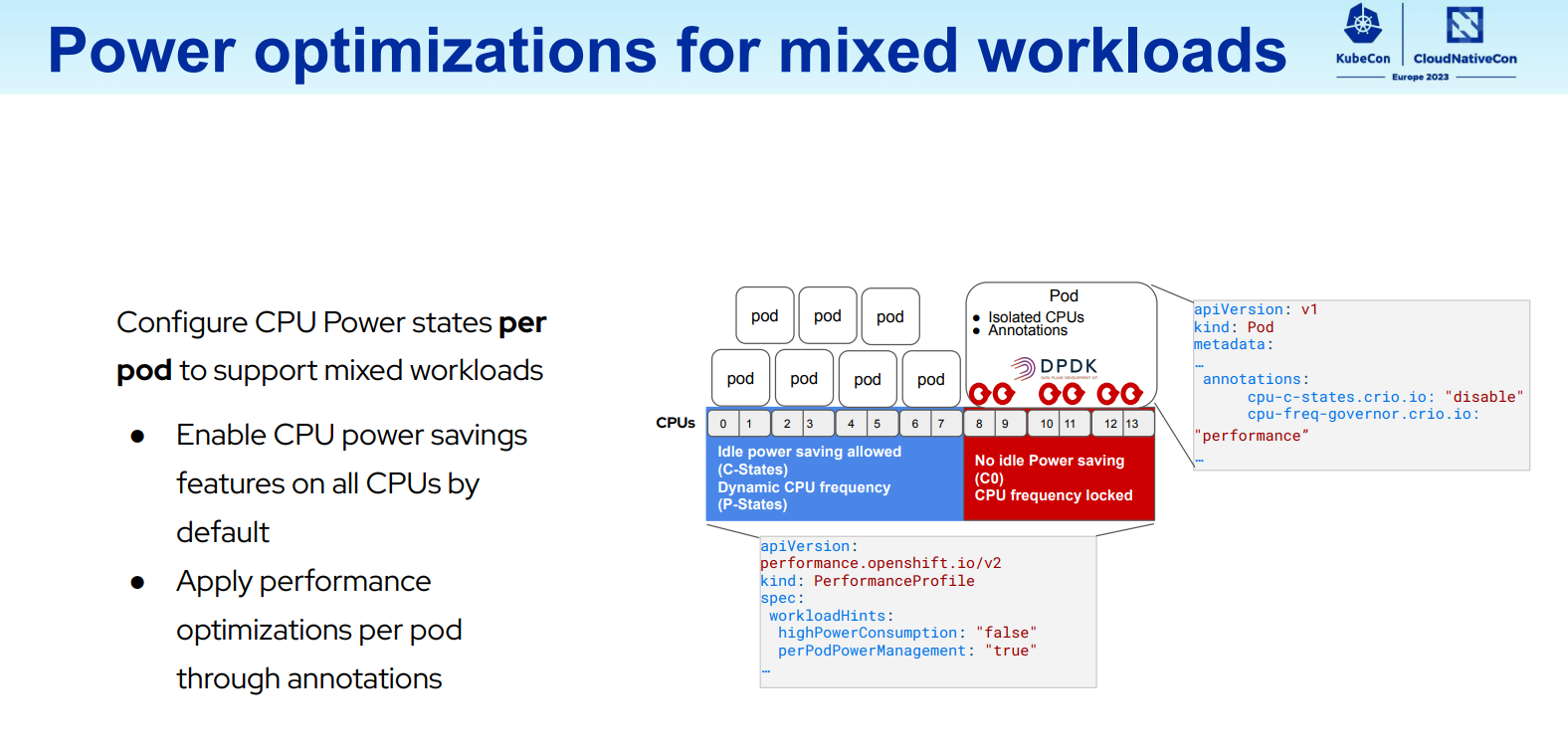

These power optimizations are especially valuable for telecom customers who run latency-sensitive workloads, such as the data plane, alongside workloads that aren’t, such as the control plane. Through pod annotations, you can adjust CPU power states per pod to support these mixed workloads. This allows you to enable power savings for workloads that aren’t latency-sensitive while prioritizing performance for low-latency workloads.

Telecom companies with mixed workloads can use annotations to apply different power settings per pod.
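The following Python sketch builds two pod manifests for such a mixed node. The latency-sensitive pod opts out of power saving through annotations. It assumes the `cpu-c-states.crio.io` and `cpu-freq-governor.crio.io` annotation keys and the `performance-<profile name>` runtime class that OpenShift documents for per-pod power management; verify these for your release. The pod names, images, and profile name are placeholders.

```python
import json


# Hypothetical helper for illustration; annotation keys assume OpenShift docs.
def pod_manifest(name: str, image: str, profile_name: str,
                 latency_sensitive: bool, cpus: int = 2) -> dict:
    """Build a Guaranteed-QoS pod; latency-sensitive pods opt out of power saving."""
    # Equal integer CPU requests and limits give the pod Guaranteed QoS, so the
    # kubelet's CPU manager (static policy) can pin it to exclusive cores.
    resources = {"cpu": str(cpus), "memory": "1Gi"}
    pod = {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "annotations": {}},
        "spec": {"containers": [{
            "name": name,
            "image": image,
            "resources": {"requests": dict(resources), "limits": dict(resources)},
        }]},
    }
    if latency_sensitive:
        # Keep this pod's cores out of C-states and on the performance governor.
        pod["metadata"]["annotations"].update({
            "cpu-c-states.crio.io": "disable",
            "cpu-freq-governor.crio.io": "performance",
        })
        # The runtime class created for the performance profile lets CRI-O
        # act on the annotations above.
        pod["spec"]["runtimeClassName"] = f"performance-{profile_name}"
    return pod


data_plane = pod_manifest("data-plane", "registry.example.com/dp:latest",
                          "mixed-workloads", latency_sensitive=True)
control_plane = pod_manifest("control-plane", "registry.example.com/cp:latest",
                             "mixed-workloads", latency_sensitive=False, cpus=1)
print(json.dumps([data_plane, control_plane], indent=2))
```

The control plane pod carries no annotations, so its cores keep the node's power-saving defaults.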

Kubernetes Power Manager: Controlling Power Use at the Application Level

Kubernetes Power Manager offers similar power savings benefits at the application level. Today, Kubernetes allocates CPU resources based on availability, not on platform-specific features such as processor capabilities or power utilization. Kubernetes Power Manager bridges this gap between applications and the platform, giving you more control over CPU assignments. For instance, you can assign higher CPU frequencies to high-priority applications and lower frequencies to other applications. Power Manager benefits include the ability to:

- Reduce operational workloads

- Lower power consumption by controlling the frequencies of the shared pool cores

- Control uncore frequency as needed

- Choose specific governors

- Adjust various sleep states
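Power Manager expresses these controls through custom resources. The Python sketch below builds a high-frequency and a low-frequency PowerProfile. It also builds the container resource block a pod would use to request cores tuned by a given profile. The `power.intel.com/v1` group, the spec fields (`name`, `min`, `max`, `epp`), the `intel-power` namespace, and the `power.intel.com/<profile>` extended resource are assumptions based on the project's README examples as we recall them. Field names have changed across releases, so check the documentation for your version. The frequency values are placeholders.

```python
import json


# Hypothetical helpers for illustration; CR field names are assumptions.
def power_profile(name: str, min_mhz: int, max_mhz: int, epp: str,
                  namespace: str = "intel-power") -> dict:
    """Build a PowerProfile custom resource with a frequency range in MHz."""
    if min_mhz > max_mhz:
        raise ValueError("min_mhz must not exceed max_mhz")
    return {
        "apiVersion": "power.intel.com/v1",
        "kind": "PowerProfile",
        "metadata": {"name": name, "namespace": namespace},
        # Frequency bounds plus an energy-performance preference (EPP) hint.
        "spec": {"name": name, "min": min_mhz, "max": max_mhz, "epp": epp},
    }


def container_resources(cpus: int, profile: str) -> dict:
    """Request cpus exclusive cores that Power Manager should tune with profile."""
    req = {"cpu": str(cpus), "memory": "256Mi",
           f"power.intel.com/{profile}": str(cpus)}
    return {"requests": dict(req), "limits": dict(req)}


high = power_profile("performance", 3300, 3700, "performance")
low = power_profile("power", 1000, 1500, "power")
print(json.dumps([high, low, container_resources(2, "performance")], indent=2))
```

A high-priority application requests the performance profile's resource, while other applications request the low-frequency profile or stay in the shared pool.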

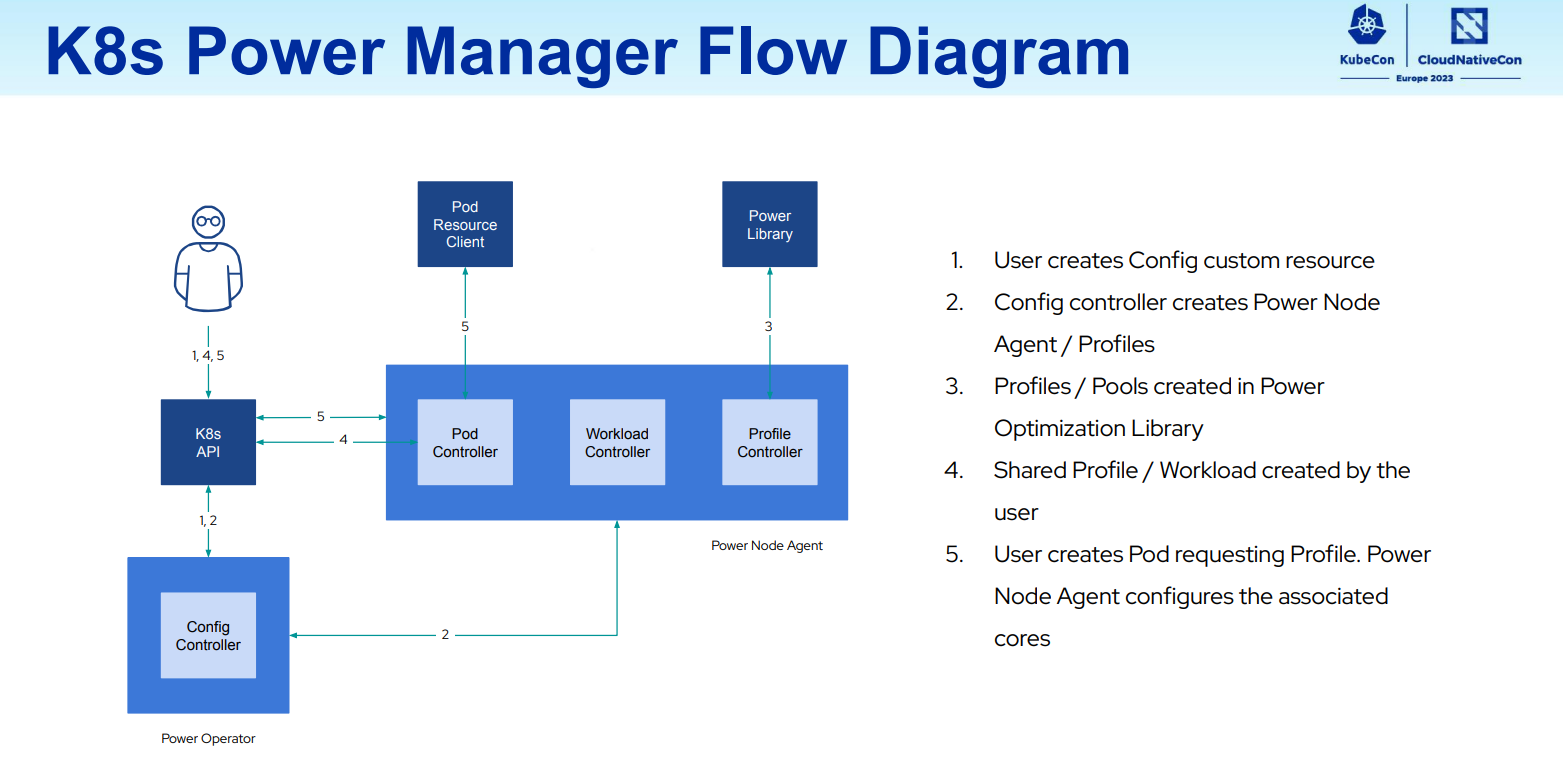

The Power Manager is structured as a Kubernetes operator. To configure a system, you start by creating performance profiles, either through a configuration file or as custom resources written in YAML. The configuration controller then deploys the profiles across the node so you can start applying them to workloads.

Create performance profiles as a configuration file or in YAML, and the Kubernetes Power Manager shares them with controllers across the node to apply to workloads.
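At its core, the operator pattern is a reconcile loop. The simplified Python model below is not the Power Manager's actual implementation. It compares the desired profiles with what a node has applied and returns the actions needed to converge. Running it again with no changes does nothing. The names `Profile`, `Node`, and `reconcile` are hypothetical, and plain dictionary updates stand in for real hardware writes.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


# Hypothetical model for illustration; not Power Manager code.
@dataclass(frozen=True)
class Profile:
    name: str
    min_mhz: int
    max_mhz: int


@dataclass
class Node:
    """What a node agent has actually applied on its host."""
    applied: Dict[str, Profile] = field(default_factory=dict)


def reconcile(desired: Dict[str, Profile], node: Node) -> List[Tuple[str, str]]:
    """Drive the node toward the desired profiles; return the actions taken.

    Level-based, like a Kubernetes controller: it compares full desired state
    with observed state each time, so a repeat run with no changes is a no-op.
    """
    actions = []
    for name, profile in sorted(desired.items()):
        if node.applied.get(name) != profile:
            node.applied[name] = profile  # stand-in for writing CPU settings
            actions.append(("apply", name))
    for name in sorted(set(node.applied) - set(desired)):
        del node.applied[name]  # stand-in for restoring default settings
        actions.append(("remove", name))
    return actions
```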

Similar to TuneD, Power Manager provides a performance-based profile, a power-saving profile, and a profile that balances both. The software also lets you optimize power by controlling C-states and P-states, setting optimization parameters for high- and low-latency periods of the day, and controlling the frequencies of shared pool cores.
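Switching settings by time of day comes down to a schedule lookup. The Python sketch below chooses which profile should be active at a given time from a daily schedule. The function name, profile names, and switch times are hypothetical. The sketch does not reproduce the format of Power Manager's own scheduling resources.

```python
from datetime import time
from typing import List, Tuple


# Hypothetical helper for illustration; not Power Manager's schedule format.
def active_profile(schedule: List[Tuple[time, str]], now: time) -> str:
    """Return the profile in effect at now for a repeating daily schedule.

    Each (start, profile) entry stays in effect until the next entry's start;
    the last entry of the day wraps around past midnight.
    """
    if not schedule:
        raise ValueError("schedule must not be empty")
    ordered = sorted(schedule)
    current = ordered[-1][1]  # before the first start, yesterday's last entry applies
    for start, profile in ordered:
        if start <= now:
            current = profile
    return current


# Busy daytime hours get full performance; nights favor power saving.
schedule = [(time(8, 0), "performance"), (time(20, 0), "power-saving")]
```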

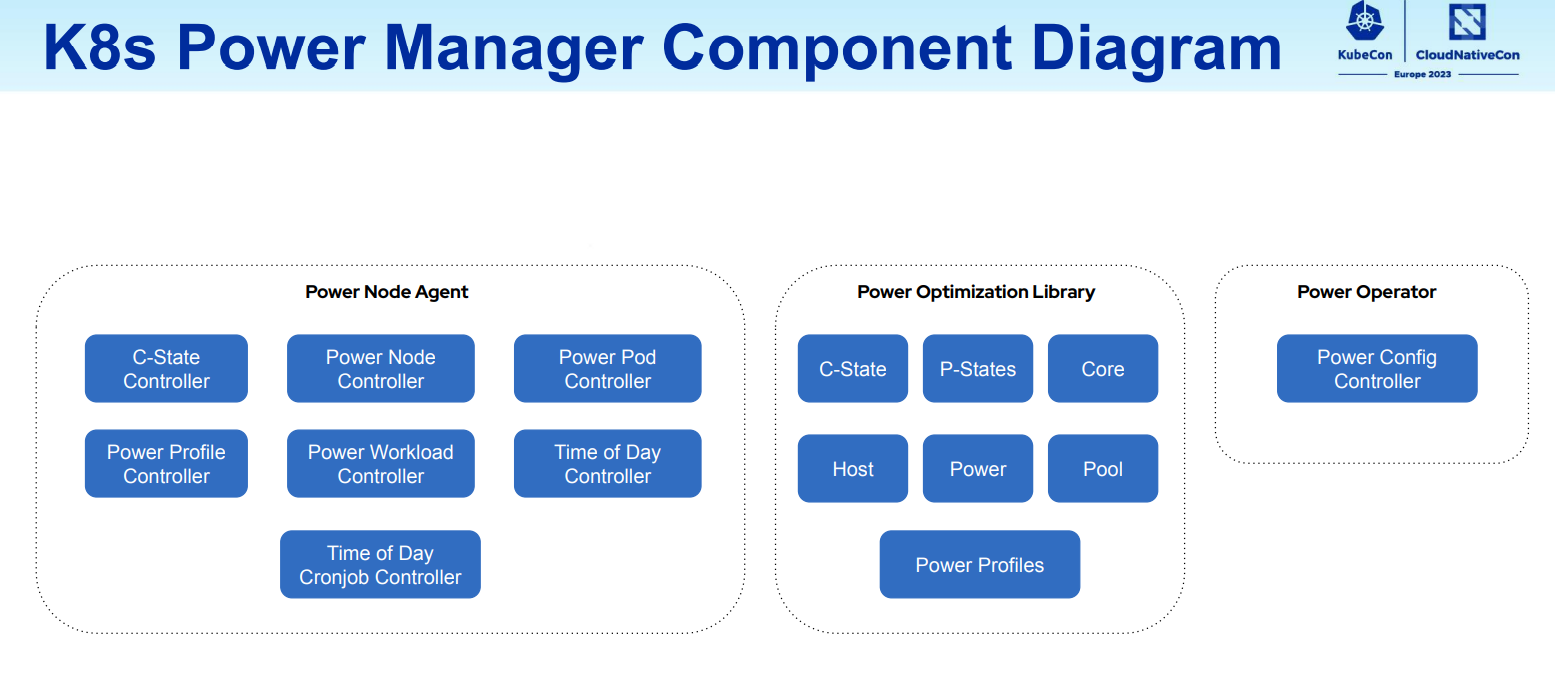

Kubernetes Power Manager consists of a power node agent, a power optimization library, and a power operator.

Demo: Deploying an OpenShift Workload Using Power Manager and TuneD

This talk includes a quick demo of how to deploy a workload using the Power Manager and TuneD on the OpenShift platform. Follow along by watching the KubeCon presentation, which includes a four-step overview and a line-by-line walk-through.

About the Presenters

Rimma Iontel is a Chief Architect in Red Hat's Telecommunications, Entertainment and Media (TME) Technology, Strategy and Execution office. Prior to joining Red Hat, she spent 14 years at Verizon working on next-generation networks initiatives and transitioning to software-defined cloud infrastructure.

Dr. Atanas Atanasov is a cloud native senior software engineer who has been at Intel for six years.