This article is a general overview of the ways that web browsers can rasterize website information into actual pixels you can see. When a web browser downloads a page, it parses the source code and creates the DOM. Then it needs to figure out what images/text/frames to show where. This information is represented internally as layer trees. You can think of the layer trees as simplified DOM trees, as they contain only the visible elements of the page and can group some of the DOM elements together into a single layer.

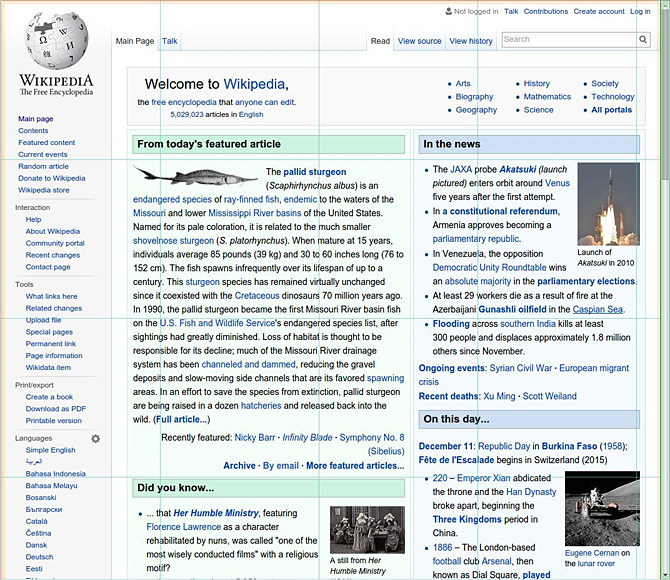

Imagine that you already have the layer trees and you want to show them on the screen. By now, each of the layers already contains information about how to paint it, so you just need to call the correct painting instructions in the correct order. However, you would have to do this for the whole page each time the user scrolls or an animation plays, which is clearly inefficient. Therefore, we divide the page into tiles that make up a grid of squares sized around 256x256 pixels.[1] You can see the tiles in the following picture, with the tile boundaries visualized using the Chromium* dev tools.[2]

Rasterizing a single tile is easier than rasterizing the whole page, since we can ignore paint commands that would not be visible on that tile. If the user clicks on part of the page or an animation plays, only the affected tiles have to be rasterized again. On simple web pages, the tiles are usually rasterized only once, but more interactive sites or sites with animations can cause some tiles to be rasterized again every frame.
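To make the idea of "affected tiles" concrete, here is a minimal sketch that maps a changed rectangle to the tiles it touches. It assumes a fixed grid of 256x256 square tiles anchored at the page origin; the function name `affected_tiles` is hypothetical and this is not how Chromium's tiling code is written.

```python
from typing import List, Tuple

TILE_SIZE = 256  # assumed square tile edge in pixels (see the simplification in footnote 1)


def affected_tiles(x: int, y: int, width: int, height: int,
                   tile_size: int = TILE_SIZE) -> List[Tuple[int, int]]:
    """Return (column, row) indices of every tile a changed rectangle touches."""
    if width <= 0 or height <= 0:
        return []
    first_col = x // tile_size
    first_row = y // tile_size
    # The last pixel covered by the rectangle is at x + width - 1, y + height - 1.
    last_col = (x + width - 1) // tile_size
    last_row = (y + height - 1) // tile_size
    return [(col, row)
            for row in range(first_row, last_row + 1)
            for col in range(first_col, last_col + 1)]


# A 100x100 change straddling the corner of four tiles touches all four.
print(affected_tiles(200, 200, 100, 100))
```

Only the tiles returned here would need to be rasterized again; every other tile can be reused as is.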

There are two main ways to rasterize a tile—the old way is to do it on the CPU and send it to the GPU as a texture; the newer way is to rasterize it using the GPU with OpenGL. Each method has its own advantages and disadvantages, and each method is best suited for certain kinds of web pages. GPU rasterization will not replace software (CPU) rasterization anytime soon, as I explain later.

Software (CPU) rasterization

Chromium uses the Skia library for rasterization, which eventually uses the scanline algorithm to create a bitmap. Normally, to send the result to the GPU to be drawn on the screen, we could just upload it by calling glTexImage2D(), but Chromium’s security model makes it a bit more complicated.
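For readers unfamiliar with scanline rasterization, the following is a minimal textbook sketch of an even-odd scanline polygon fill. It is not Skia's implementation (which also handles anti-aliasing, curves, and much more); the function name and the 0/1 bitmap representation are assumptions made for illustration.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def scanline_fill(polygon: List[Point], width: int, height: int) -> List[List[int]]:
    """Fill a polygon into a width x height bitmap of 0/1 values, row by row."""
    bitmap = [[0] * width for _ in range(height)]
    n = len(polygon)
    for y in range(height):
        sample_y = y + 0.5  # sample each row at the pixel centers
        crossings = []
        for i in range(n):
            (x0, y0), (x1, y1) = polygon[i], polygon[(i + 1) % n]
            # Half-open test: an edge counts only if its endpoints lie on
            # opposite sides of the scanline, so shared vertices count once.
            if (y0 <= sample_y) != (y1 <= sample_y):
                t = (sample_y - y0) / (y1 - y0)
                crossings.append(x0 + t * (x1 - x0))
        crossings.sort()
        # Even-odd rule: the spans between crossing pairs are inside the shape.
        for left, right in zip(crossings[0::2], crossings[1::2]):
            start = max(0, math.ceil(left - 0.5))
            end = min(width, math.ceil(right - 0.5))
            for x in range(start, end):
                bitmap[y][x] = 1
    return bitmap


for row in scanline_fill([(0, 0), (4, 0), (0, 4)], 4, 4):
    print(row)
```

Each row is processed left to right from a sorted list of edge crossings, which is the sequential structure that matters later when comparing this approach with the GPU.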

Since the rendering process is sandboxed and doesn’t have direct access to the GPU, Chromium uses a separate GPU process whose main purpose is to act as a proxy between the rendering process and the GPU, accepting OpenGL commands and passing them to the graphics drivers. Thus, we have to put our rasterized result into shared memory and send a message to the Chromium GPU process to call glTexImage2D() on it.

This has an obvious disadvantage: the upload has to be done each time the tile changes, meaning a lot of data has to be transferred and the GPU process can be kept very busy. Simple web pages are not going to be overly affected by this, but interactive web pages that use a lot of animations or JavaScript effects, for example, must be repainted almost every frame (up to 60 times per second). This is especially a problem on mobile devices, since the screens are smaller and designers often hide elements until the user requests them, which creates many transition effects.
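A rough back-of-the-envelope calculation shows how quickly the upload cost described above adds up. The tile size and frame rate come from the text; the 4 bytes per pixel (32-bit RGBA) and the count of 20 changed tiles per frame are assumptions chosen only for illustration.

```python
TILE_EDGE_PX = 256       # tile edge from the text
BYTES_PER_PIXEL = 4      # assumption: 32-bit RGBA bitmaps
FRAMES_PER_SECOND = 60   # upper bound mentioned in the text


def upload_bytes_per_second(tiles_changed_per_frame: int) -> int:
    """Bytes copied to the GPU per second if every changed tile is re-uploaded."""
    tile_bytes = TILE_EDGE_PX * TILE_EDGE_PX * BYTES_PER_PIXEL
    return tile_bytes * tiles_changed_per_frame * FRAMES_PER_SECOND


rate = upload_bytes_per_second(20)  # assumed: 20 tiles change every frame
print(rate / (1024 * 1024), "MiB/s")  # 300.0 MiB/s
```

Under these assumptions a single tile is 256 KiB, and a heavily animated page would push hundreds of mebibytes per second through the GPU process.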

Software rasterization with zero copy

Zero-copy texture upload is an optimization developed by my colleagues Dongseong Hwang and Tiago Vignatti. It tries to minimize the inefficient process of uploading the texture to the GPU for each tile change. The rasterization is done the same way as before, but instead of uploading textures manually with glTexImage2D() for each tile change, we tell the GPU to memory-map the location of the textures in the main memory, which lets the GPU read them directly. This way, the Chromium GPU process only has to do the initial memory-map setup and can stay idle afterwards. This improves performance and saves a lot of battery life on mobile devices.
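The difference between copying a tile and sharing it can be illustrated with plain Python buffers. This is only an analogy, not GPU code: `bytes(tile)` stands in for an explicit upload, and `memoryview(tile)` stands in for a consumer that has mapped the same memory. The RGBA tile size is an assumption.

```python
tile = bytearray(256 * 256 * 4)   # CPU-rasterized tile (RGBA assumed), all zeros

uploaded_copy = bytes(tile)       # copy path: a snapshot that must be redone on every change
mapped_view = memoryview(tile)    # shared path: a window onto the same memory, set up once

tile[0] = 255                     # the rasterizer repaints one byte of the tile

print(uploaded_copy[0])           # 0: the copy is stale until it is uploaded again
print(mapped_view[0])             # 255: the shared view sees the change immediately
```

With the shared view there is nothing to redo after each repaint, which mirrors why the GPU process can stay idle after the initial setup.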

GPU rasterization

With GPU rasterization, part of the workload is moved from the CPU to the GPU. All polygons have to be rendered using OpenGL primitives (triangles and lines). This is also executed by Skia, via its GPU backend called Skia Ganesh. The result is never saved in the main memory and therefore doesn’t have to be copied anywhere, because it all happens on the GPU. While this slightly reduces the main memory usage, it uses more GPU memory.

The main problem with GPU rasterization is fonts and other small, complicated shapes. OpenGL doesn’t have any native text rendering primitives, so you have to use an existing library (or painfully implement your own) that can rasterize them using one of several methods, for example by representing the characters with triangles or by using precomputed textures. It’s not trivial to make the fonts look good while also having an efficient algorithm. Try to imagine how many triangles you’d need to draw a wall of tiny Chinese text.

Why don’t we just copy the rasterization algorithm we used before and paste it into a GPU shader? The scanline algorithm used on the CPU is very linear and depends on the previous results, which makes it difficult to parallelize. While the GPU could technically run it, doing so would completely remove the advantage of using a GPU in the first place, since GPUs are very good at running thousands of independent parallel tasks, but very bad at running a single one.

Instead of rendering the text with triangles, we could precompute the individual characters and put them into a texture, essentially creating a font atlas as shown in the picture below. This texture then gets uploaded to the GPU, and pieces of it are mapped onto squares consisting of two triangles. Yet this still wouldn’t solve our problem with Chinese text, since it has different characters for almost everything. The texture would end up being huge, which removes the advantage we get from using the GPU and otherwise not having to transfer a lot of data. What Chromium* does is to create a new font atlas for each web page, although this has its own disadvantages—the rasterization has to be done again if the user zooms in, otherwise the fonts would seem blurred.
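The font atlas idea can be sketched as a simple grid layout that assigns each distinct character a rectangle of texture coordinates. This is a simplified illustration, not Chromium's or Skia's atlas code: it assumes fixed-size square cells, and `build_atlas_layout`, `cell_px`, and `atlas_px` are hypothetical names.

```python
from typing import Dict, Tuple

Rect = Tuple[float, float, float, float]  # (u0, v0, u1, v1) in normalized texture coordinates


def build_atlas_layout(chars: str, cell_px: int, atlas_px: int) -> Dict[str, Rect]:
    """Assign each distinct character one fixed-size cell in a square atlas."""
    cells_per_row = atlas_px // cell_px
    capacity = cells_per_row * cells_per_row
    unique = list(dict.fromkeys(chars))  # drop duplicates, keep first-seen order
    if len(unique) > capacity:
        raise ValueError(f"{len(unique)} glyphs do not fit into {capacity} cells")
    step = cell_px / atlas_px
    layout = {}
    for index, ch in enumerate(unique):
        row, col = divmod(index, cells_per_row)
        u0, v0 = col * step, row * step
        layout[ch] = (u0, v0, u0 + step, v0 + step)
    return layout


# Each rectangle is what a two-triangle quad would sample when drawing that character.
print(build_atlas_layout("abca", cell_px=32, atlas_px=64))
```

A Latin page needs only a few dozen cells, while a page using thousands of distinct Chinese characters quickly exceeds the capacity of a small atlas, which is the size problem described above.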

Conclusion

While it would be great to make use of the GPU to accelerate rendering of all web pages, it’s just not suitable for some tasks. The plan to solve this issue in Chromium is to use software (CPU) rendering with the zero copy optimization for pages where that method is faster, but to use GPU rendering otherwise. We can determine which method should be used by some heuristic. For example, we can use the CPU if the site has a lot of text (especially Chinese or Arabic), and use the GPU on pages with many animations and transition effects. For the cases where it makes sense to use it, GPU accelerated rendering allows for more seamless animations and better performance.
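As a purely illustrative sketch of such a heuristic, the function below picks a rasterization path from two page statistics. Everything here is hypothetical: the `PageStats` fields, the threshold values, and the rule for pages that are both text-heavy and animated are assumptions, not Chromium's actual logic.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    text_area_fraction: float   # hypothetical: share of the visible area covered by text, 0.0 to 1.0
    animated_layer_count: int   # hypothetical: number of layers currently animating


def choose_rasterizer(stats: PageStats,
                      text_threshold: float = 0.5,
                      animation_threshold: int = 3) -> str:
    """Pick a rasterization path; thresholds are made-up illustrative values."""
    text_heavy = stats.text_area_fraction >= text_threshold
    animation_heavy = stats.animated_layer_count >= animation_threshold
    # Assumed rule: text-heavy pages with few animations stay on the CPU
    # (with zero copy); everything else goes to the GPU.
    if text_heavy and not animation_heavy:
        return "cpu_zero_copy"
    return "gpu"


print(choose_rasterizer(PageStats(text_area_fraction=0.8, animated_layer_count=0)))
print(choose_rasterizer(PageStats(text_area_fraction=0.1, animated_layer_count=5)))
```

A real heuristic would need measured thresholds and more inputs, such as the script of the text, but the overall shape of the decision is the same.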

1 This is a simplification. The tiles don’t have to be squares and can sometimes be overlapping or even moving around during some animations. Tiles are usually independent of how the layer trees are structured, but sometimes a layer can get its own tile, for example when an animation is moving it around the screen.

* Other names and brands may be claimed as the property of others.

2 To see this visualization for any page, open Chromium dev tools (Ctrl+Shift+I), show the console (press Esc), look at the tabs on the bottom and open “Rendering”. From there, check “Show composited layer borders”.