Introduction

The IEI TANK* AIoT Developer Kit is a ruggedized embedded computer system for performing deep learning inference at the edge. This computer platform supports multiple devices for heterogeneous workflows, including CPU, GPU and FPGA.

This paper provides introductory information, links and resources for operating an IEI TANK with the Intel® Distribution of OpenVINO™ toolkit for Linux* with FPGA support. The steps for running an inference engine API sample in Python* targeting the FPGA are also described below.

Hardware and Software Components

The IEI New Product Launch video provides an overview of the Mustang-F100-A10 acceleration card, along with information on how the card is installed in an IEI TANK. Figure 1 shows the IEI TANK AIoT Developer Kit with a Mustang-F100-A10 acceleration card installed and operational.

Figure 1. Installed Mustang-F100-A10 acceleration card.

The hardware and software components of the system shown in Figure 1 are listed below.

Computer

- IEI TANK* AIoT Developer Kit:

  - Model TANK-870 AI-i5 / 8GB / 2A-R10

  - i5-6500TE, 2.3GHz, Quad Core

  - 8GB RAM

  - 1TB HDD

Software

- Intel® Distribution of OpenVINO™ toolkit. The 2018 R4 Release supports Intel® Vision Accelerator Design with Intel® Arria® 10 FPGA (preview), a PCIe add-in card that boosts performance while providing low power consumption and low latency. See the Free Download page for the Intel® Distribution of OpenVINO™ toolkit with FPGA support.

- Operating System: Ubuntu* 16.04 LTS

Note: The IEI TANK AIoT Developer Kit specified above ships with Ubuntu 16.04 LTS, the Intel® Distribution of OpenVINO™ toolkit, Intel® Media SDK, Intel® System Studio and Arduino Create* pre-installed.

FPGA

- Intel® Vision Accelerator Design with Intel® Arria® 10 FPGA:

  - Model Mustang-F100-A10

  - Intel® Arria® 10 FPGA GX1150

  - Low profile, two slot, compact size

  - PCIe Gen3 x8

Software Installation

The online document “Install the Intel® Distribution of OpenVINO™ toolkit for Linux with FPGA Support” provides detailed steps for installing and configuring the required software components. This comprehensive procedure demonstrates how to:

- Install the core components and external software dependencies

- Configure the Model Optimizer

- Set up the Intel® Arria® 10 FPGA

- Program an FPGA bitstream

- Verify the installation by running a C++ classification sample with the -d option to target the FPGA.

Note: All of these steps must be completed before attempting to run the Python classification sample presented in the next section.

Run a Python* Classification Sample Targeting FPGA

The steps required to run the Python classification sample included in the Intel® Distribution of OpenVINO™ toolkit are shown below. The workflow mirrors the section titled “Run a Sample Application” in the installation guide, except that the classification sample used here is written in Python.

- Open a terminal and enter the following command to go to the Python samples directory:

- Run a Python classification sample application targeting only the CPU, and use the -ni parameter to increase the number of iterations to 100:
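As a rough sketch of the two steps above, the command line can also be assembled from Python. Only the -ni and -d options come from the text. The script name classification_sample.py and the -m and -i options (model and input image) are assumptions about the sample shipped with the toolkit, and every path is a hypothetical placeholder to be replaced with the one from your installation.

```python
import shlex

# Hypothetical placeholders: substitute the paths from your own installation.
SAMPLES_DIR = "<openvino_install_dir>/python_samples"
MODEL_XML = "<path_to_model>/model.xml"
INPUT_IMAGE = "<path_to_image>/image.png"


def build_command(model_xml, image, iterations=100, device=None,
                  script="classification_sample.py"):
    """Return the argument list for one run of the classification sample.

    The script name and the -m / -i options are assumptions; -ni and -d
    are the options named in the text.
    """
    cmd = ["python3", script, "-m", model_xml, "-i", image]
    if device is not None:
        # Omitting -d leaves the sample on its default device.
        cmd += ["-d", device]
    cmd += ["-ni", str(iterations)]
    return cmd


def to_shell(cmd):
    """Quote each argument so the line can be pasted into a terminal."""
    return " ".join(shlex.quote(arg) for arg in cmd)


cpu_cmd = build_command(MODEL_XML, INPUT_IMAGE, iterations=100)
print("cd", shlex.quote(SAMPLES_DIR))
print(to_shell(cpu_cmd))
```

Passing device="HETERO:FPGA,CPU" to build_command adds the -d option, and the rest of the command line stays the same.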

The output of this program, with the -ni 100 parameter included, is shown in Figure 2.

Figure 2. Classification results using CPU only.

- Next, run the command again using the -d option to target the FPGA:

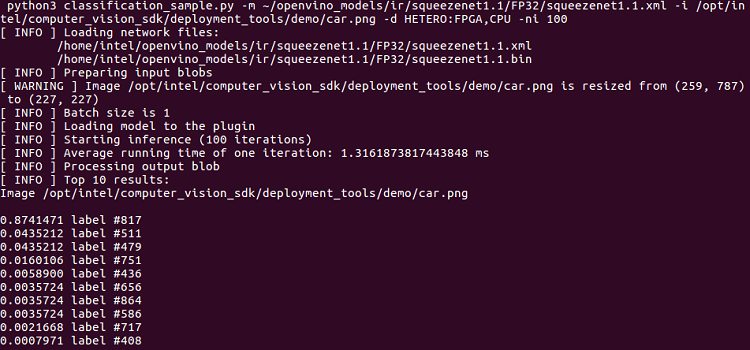

The output of this program, with the -d HETERO:FPGA,CPU -ni 100 parameters included, is shown in Figure 3.

Figure 3. Classification results using FPGA.
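The device string HETERO:FPGA,CPU names the heterogeneous plugin followed by a device list in priority order: work is offered to the FPGA first and falls back to the CPU when the FPGA cannot handle it. The sketch below illustrates that idea in plain Python. It is not the toolkit's implementation, and the function names and per-device layer sets are hypothetical values chosen only for illustration.

```python
def parse_device(device):
    """Split a device string into (plugin, prioritized device list).

    "HETERO:FPGA,CPU" -> ("HETERO", ["FPGA", "CPU"])
    "CPU"             -> ("CPU", ["CPU"])
    """
    plugin, sep, rest = device.partition(":")
    if not sep:
        return plugin, [plugin]
    devices = [d.strip() for d in rest.split(",") if d.strip()]
    if not devices:
        raise ValueError("no devices listed after ':' in %r" % device)
    return plugin, devices


def assign_layers(layers, priorities, supported):
    """Place each layer on the first device in priority order that supports it."""
    placement = {}
    for layer in layers:
        for dev in priorities:
            if layer in supported.get(dev, set()):
                placement[layer] = dev
                break
        else:
            raise ValueError("no listed device supports layer %r" % layer)
    return placement


# Hypothetical capability sets, for illustration only.
SUPPORTED = {
    "FPGA": {"Convolution", "ReLU", "Pooling"},
    "CPU": {"Convolution", "ReLU", "Pooling", "SoftMax"},
}

plugin, priorities = parse_device("HETERO:FPGA,CPU")
placement = assign_layers(["Convolution", "ReLU", "Pooling", "SoftMax"],
                          priorities, SUPPORTED)
for layer, dev in placement.items():
    print(layer, "->", dev)
```

In this toy example the first three layers land on the FPGA and SoftMax falls back to the CPU, which is the behavior the priority list is meant to express.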

Summary

For more information about the IEI TANK* AIoT Developer Kit and the Intel® Distribution of OpenVINO™ toolkit for Linux with FPGA support, see the resources listed below.

Product information

- IEI TANK AIoT Developer Kit

- Get Started with the IEI TANK AIoT Developer Kit

- Mustang-F100-A10 - Intel® Vision Accelerator Design with Intel® Arria® 10 FPGA

- Intel® Distribution of OpenVINO™ toolkit

Articles and tutorials

- OpenVINO™ Toolkit and FPGAs

- Install the Intel® Distribution of OpenVINO™ toolkit for Linux with FPGA Support

- Kits To Accelerate Your Computer Vision Deployments

- Use an Inference Engine API in Python* to Deploy the Intel® Distribution of OpenVINO™ Toolkit