Story at a Glance

- New features in PyTorch 1.13 and the Intel® Extension for PyTorch* 1.13.0-cpu, when used together, can help AI developers enhance model performance and accuracy and monitor application performance.

- AI developers can monitor application performance with Intel® VTune™ Profiler to verify that applications perform as designed and to identify issues that need to be fixed.

- Using BF16 and the channels-last format in TorchScript can improve inference performance for computer vision models.

- Lastly, once the extension is imported, a single call to ipex.optimize applies its CPU optimizations, such as automatic channels-last conversion, to a model.

New Features in PyTorch 1.13

Performance Analysis: Intel® VTune™ Profiler Instrumentation and Tracing Technology (ITT) APIs Integration

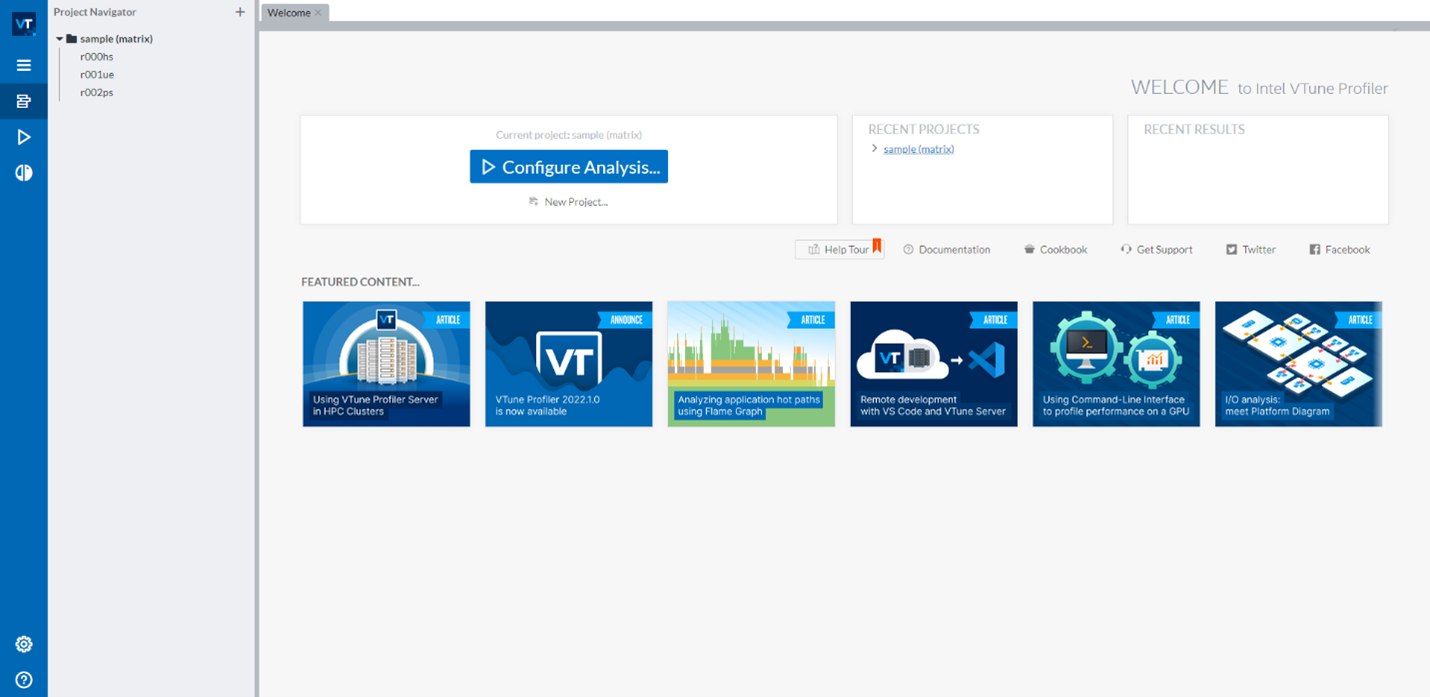

Intel VTune Profiler is a performance analysis tool for serial and multithreaded applications. It provides a rich set of metrics that help users understand how an application executes on Intel® platforms and identify performance bottlenecks (Figure 1).

Figure 1. Intel VTune Profiler interface to configure and monitor application performance

The Instrumentation and Tracing Technology API (ITT API) provided by VTune Profiler enables a target application to generate and control the collection of trace data during its execution.

The advantage of this integration is that the time span of individual PyTorch operators, as well as custom regions, can be labeled on the VTune Profiler GUI. When something abnormal shows up, these labels make it easy to locate the operators that behaved unexpectedly.

PyTorch users can visualize the op-level timeline of a PyTorch script's execution when they need to analyze per-op performance with low-level performance metrics on Intel® platforms. ITT tracing can be enabled directly in PyTorch code, and PyTorch models can be annotated with custom ITT regions.
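A minimal sketch of ITT annotation, assuming a PyTorch build with ITT support: `torch.autograd.profiler.emit_itt()` labels each PyTorch operator, and `torch.profiler.itt.range_push` / `range_pop` mark custom regions, here one per hypothetical inference step. The toy model and input are illustrative, and the `is_available()` guard is an addition so the script also runs on builds without ITT. The labels only appear on the timeline when the script is launched under a VTune Profiler collection.

```python
import torch
import torch.nn as nn

# Hypothetical toy model and input, used only to have some operators to trace
model = nn.Sequential(nn.Linear(64, 32), nn.ReLU(), nn.Linear(32, 10)).eval()
data = torch.randn(8, 64)

# Guard (an addition) so the sketch also runs on PyTorch builds without ITT support
use_itt = torch.profiler.itt.is_available()

# emit_itt() labels every PyTorch operator as an ITT range; VTune Profiler
# shows these labels on its timeline when it collects the run
with torch.no_grad(), torch.autograd.profiler.emit_itt(enabled=use_itt):
    for step in range(3):
        if use_itt:
            torch.profiler.itt.range_push(f"step_{step}")  # open a custom region
        out = model(data)
        if use_itt:
            torch.profiler.itt.range_pop()  # close the custom region
```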

Learn more about performance monitoring with our tutorial.

BF16 and Channels-Last Formats Support in TorchScript

Adding channels-last and BF16 support boosts TorchScript graph-mode inference performance on x86 CPUs. PyTorch users can benefit from the channels-last optimization on the most popular x86 CPUs, and from BF16 on both 3rd and 4th Gen Intel® Xeon® Scalable processors. Computer vision models using these two formats showed a promising performance boost on 3rd Gen Intel Xeon Scalable processors.

The performance benefit is available through the existing TorchScript, channels-last, and BF16 autocast APIs, which can be combined in a single script.
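The sketch below combines the three APIs in a pattern commonly used for BF16 TorchScript inference on CPU: convert the model and input to channels last, trace under `torch.cpu.amp.autocast`, then freeze the traced module. The small CNN and input shape are hypothetical stand-ins for a real vision model. BF16 code runs on any x86 CPU, but the performance gains described above are tied to the Intel Xeon Scalable processors named there.

```python
import torch
import torch.nn as nn

# Hypothetical small CNN standing in for a computer vision model
model = nn.Sequential(
    nn.Conv2d(3, 16, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
    nn.Linear(16, 10),
).eval()
data = torch.randn(4, 3, 64, 64)

# Channels last: convert both the model weights and the input
model = model.to(memory_format=torch.channels_last)
data = data.to(memory_format=torch.channels_last)

with torch.no_grad():
    # Trace under BF16 autocast so eligible ops (e.g. conv, linear) run in BF16;
    # disabling the autocast weight cache is recommended when tracing
    with torch.cpu.amp.autocast(cache_enabled=False):
        traced = torch.jit.trace(model, data)
    # Freeze to inline weights and enable graph-level optimizations
    traced = torch.jit.freeze(traced)
    out = traced(data)
```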

Control Verbose Messages During Runtime

Intel® oneAPI Math Kernel Library (oneMKL) and Intel® oneAPI Deep Neural Network Library (oneDNN) are used to accelerate performance on Intel platforms. Verbose messages emitted by these libraries at runtime help diagnose operator execution issues. However, in some cases the number of messages becomes unwieldy. To limit them, PyTorch 1.13 adds the ability to enable verbose messaging for only part of the execution. Code snippets are available for mkldnn_verbose.py and mkl_verbose.py.
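A sketch of scoped verbose output using the `torch.backends.mkldnn.verbose` and `torch.backends.mkl.verbose` context managers: only oneDNN or oneMKL calls made inside each `with` block are logged, so the warm-up call and surrounding code stay silent. The toy convolution and matrix multiply are hypothetical workloads; whether they actually dispatch to oneDNN or oneMKL depends on the build and the input shapes. The fallback to `VERBOSE_OFF` is an addition for builds compiled without those libraries.

```python
import torch
import torch.nn as nn

# Hypothetical workload: a tiny convolution model and its input
model = nn.Sequential(nn.Conv2d(3, 8, kernel_size=3), nn.ReLU()).eval()
data = torch.randn(1, 3, 32, 32)

# Use VERBOSE_OFF (a no-op scope) on builds without oneDNN or oneMKL
dnn_level = (torch.backends.mkldnn.VERBOSE_ON
             if torch.backends.mkldnn.is_available()
             else torch.backends.mkldnn.VERBOSE_OFF)
mkl_level = (torch.backends.mkl.VERBOSE_ON
             if torch.backends.mkl.is_available()
             else torch.backends.mkl.VERBOSE_OFF)

with torch.no_grad():
    model(data)  # warm-up call, outside any verbose scope
    # Only oneDNN primitives executed inside this scope print verbose messages
    with torch.backends.mkldnn.verbose(dnn_level):
        conv_out = model(data)
    # Only oneMKL calls made inside this scope print verbose messages
    with torch.backends.mkl.verbose(mkl_level):
        mm_out = torch.randn(64, 64) @ torch.randn(64, 64)
```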

New Features in the Intel® Extension for PyTorch* 1.13.0-cpu

The features below require PyTorch 1.13 together with the Intel Extension for PyTorch 1.13.0-cpu. Through ipex.optimize, users can take advantage of the following three features to help improve performance and accuracy on CPUs. In Python, the extension is imported as intel_extension_for_pytorch, conventionally under the alias ipex.

1. Automatic channels-last format conversion: ipex.optimize now applies channels-last conversion to PyTorch modules automatically by default, for both training and inference. Users don’t have to explicitly convert inputs and weights for computer vision models.

2. Code-free optimization (experimental): When a PyTorch program is started with the Intel Extension for PyTorch launcher using the new --auto-ipex option, ipex.optimize is applied to its PyTorch modules automatically, with no code changes.

3. Graph-capture mode of ipex.optimize (experimental): A new boolean flag, graph_mode (off by default), has been added to ipex.optimize. When turned on, it converts the eager-mode PyTorch module into one or more graphs to benefit from graph optimization. Under the hood, it combines TorchScript tracing and TorchDynamo to capture as large a graph scope as possible. Currently, it supports only FP32 and BF16 inference; INT8 inference and training support are under development.
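A minimal sketch of features 1 and 3, assuming the Intel Extension for PyTorch is installed: import it as `ipex`, call `ipex.optimize` on an eval-mode model without converting anything to channels last by hand, and opt into graph capture with `graph_mode=True` (FP32 inference here). The toy CNN, the input shape, and the `ImportError` fallback to stock PyTorch are illustrative additions, not part of the extension's API. Feature 2 (the `--auto-ipex` launcher option) needs no code changes and is not shown.

```python
import torch
import torch.nn as nn

try:
    # Requires an extension build that matches the installed PyTorch version
    import intel_extension_for_pytorch as ipex
except ImportError:
    ipex = None  # illustrative fallback: run on stock PyTorch instead


def make_model() -> nn.Module:
    # Hypothetical small CNN in eval mode, standing in for a vision model
    return nn.Sequential(
        nn.Conv2d(3, 16, kernel_size=3, padding=1),
        nn.ReLU(),
        nn.AdaptiveAvgPool2d(1),
        nn.Flatten(),
        nn.Linear(16, 10),
    ).eval()


# Plain NCHW input: no manual channels-last conversion of inputs or weights
data = torch.randn(2, 3, 32, 32)

eager_model = make_model()
graph_model = make_model()
if ipex is not None:
    # Default optimization; channels-last conversion is applied automatically
    eager_model = ipex.optimize(eager_model)
    # Experimental graph-capture mode, enabled explicitly with graph_mode=True
    graph_model = ipex.optimize(graph_model, graph_mode=True)

with torch.no_grad():
    eager_out = eager_model(data)
    graph_out = graph_model(data)
```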

For further details on additional features and code samples, read the release notes for the Intel Extension for PyTorch 1.13.0-cpu.

Improving Application Performance

Used together, the new features in PyTorch 1.13 and the Intel Extension for PyTorch 1.13.0-cpu can help AI developers improve model performance and accuracy while monitoring how their applications perform.

We encourage you to check out Intel’s other AI Tools and Framework optimizations and learn about the unified, open, standards-based oneAPI programming model that forms the foundation of Intel’s AI Software Portfolio.
