Today, we released the full set of 2025.1 updates to Intel’s software developer tools:

- AI Tools and Frameworks

- Intel® Distribution for Python*

- Intel® oneAPI Base Toolkit

- Intel® oneAPI HPC Toolkit

In this set of releases, we emphasize increased productivity and performance for AI on CPU, GPU, and NPU. We strengthened support for Visual AI inference and doubled down on productivity for highly parallel compute and on code quality assurance for all software developers. Whether you are targeting a cloud-connected edge inference device, an exascale supercomputer, or anything in between, the compiler and library updates in this release can make your work easier and more productive.

Below, we discuss how these improvements benefit your software development experience.

You can find a brief news update listing the key feature improvements at this link:

→ Faster AI, Real-Time Graphics, & Smarter HPC: Intel Developer Tools 2025.1

Let us start by looking at GenAI and Visual AI use cases.

Embrace Open Source AI Frameworks

We approach AI by looking at the most vibrant industry-standard tools and frameworks and contributing our optimization know-how and experience. This approach benefits you by allowing you to use your existing development workflows and AI frameworks.

This lets you streamline your workflows and migrate them to take advantage of Intel hardware without being locked into a particular hardware platform or abandoning your legacy codebase.

Optimizations for Intel hardware are being added to TensorFlow* and PyTorch* and even the JAX* Python library. The JAX Python library has been integrated into the AI Tools, allowing it to benefit from Intel® Distribution for Python optimizations to accelerate numerical and scientific computing and optimization tasks in machine learning models.

Latest Optimizations for PyTorch

The latest additions and optimizations available with the Intel® Extension for PyTorch and upstreamed to PyTorch 2.6 highlight this philosophy: your AI solutions of choice should target Intel CPUs and GPUs seamlessly.

We integrated the Intel® Extension for DeepSpeed* into the PyTorch release. We also added the same support for SYCL kernels on Intel GPU devices to DeepSpeed, the open-source optimization library from Microsoft Research, where it has already been upstreamed to the stock distribution, and we applied additional optimizations to a wide range of large language models (LLMs).

Since last year, you have been able to run accelerator offload code on SYCL*-enabled devices with a simple change of the tensor's device name:

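```python
import torch

# CUDA* code: move the tensor to a CUDA device
tensor = torch.tensor([1.0, 2.0]).to("cuda")

# SYCL code: the same call targets an Intel GPU through the "xpu" device
tensor = torch.tensor([1.0, 2.0]).to("xpu")
```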


Use the data types best suited for your AI use case, such as FP32, BF16, and FP16, along with automatic mixed precision (AMP). GenAI LLMs are now optimized for INT4, and transformer engines support FP8. For example, using the reduced-precision FP16 data type together with the CPU-native Intel® Advanced Matrix Extensions (Intel® AMX) accelerators on CPUs such as the Intel® Xeon® 6 processor with P-cores substantially accelerates inference in both TorchInductor and eager modes. Optimizations have also been expanded for Intel® GPUs, including the full family of Intel® Arc™ Graphics (e.g., Intel® Core™ Ultra processors (Series 2), Intel® Arc™ A-Series graphics, and Intel® Arc™ B-Series graphics). On Windows, installing the Intel GPU software stack has been simplified with one-click installation of torch-xpu target support and binary releases of torch core.

FlexAttention to Speed Up Inference

Another interesting addition is x86 CPU support for FlexAttention in the TorchInductor CPP backend, which accelerates inference for models with customized attention mechanisms. Attention mechanisms assign weights that let a model selectively focus on the most relevant parts of an input sequence. However, there are many attention variants, and models frequently need highly customized combinations of them to achieve the desired result. It is therefore unsurprising that developers and data analysts often see inference performance drop simply because their attention implementation is not well-tuned. This is where PyTorch’s FlexAttention comes in. It provides pre-optimized attention variants that can be reused with only a few lines of PyTorch code. The desired combination of attention mechanisms can then be fused and optimized into a single FlexAttention kernel using torch.compile.
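
PyTorch's FlexAttention expresses each attention variant as a small `score_mod` function that modifies the raw attention scores before the softmax. The minimal pure-Python sketch below illustrates that idea for a single attention head, assuming a simplified `score_mod(score, q_idx, kv_idx)` signature (PyTorch's real `score_mod` also receives batch and head indices). It is a conceptual reference only, not the fused kernel that `torch.compile` generates, and the helpers `causal`, `relative_bias`, and `attention` are hypothetical names chosen for illustration.

```python
import math
from typing import Callable, List

Matrix = List[List[float]]
# Simplified, hypothetical signature: (score, q_idx, kv_idx) -> modified score.
ScoreMod = Callable[[float, int, int], float]


def identity(score: float, q_idx: int, kv_idx: int) -> float:
    """Plain softmax attention: leave the score unchanged."""
    return score


def causal(score: float, q_idx: int, kv_idx: int) -> float:
    """Causal mask: a query may not attend to later positions."""
    return score if kv_idx <= q_idx else float("-inf")


def relative_bias(score: float, q_idx: int, kv_idx: int) -> float:
    """Relative position bias: penalize keys far from the query position."""
    return score - 0.1 * abs(q_idx - kv_idx)


def causal_with_bias(score: float, q_idx: int, kv_idx: int) -> float:
    """Variants compose by plain function composition."""
    return relative_bias(causal(score, q_idx, kv_idx), q_idx, kv_idx)


def attention(q: Matrix, k: Matrix, v: Matrix, score_mod: ScoreMod = identity) -> Matrix:
    """Single-head scaled dot-product attention with a pluggable score_mod."""
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for i, q_row in enumerate(q):
        # Scaled dot-product scores, each passed through score_mod.
        scores = [
            score_mod(scale * sum(a * b for a, b in zip(q_row, k_row)), i, j)
            for j, k_row in enumerate(k)
        ]
        # Numerically stable softmax: subtract the row maximum first.
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        # Weighted sum of the value rows.
        out.append([
            sum(w * v_row[c] for w, v_row in zip(weights, v)) / total
            for c in range(len(v[0]))
        ])
    return out


if __name__ == "__main__":
    q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    v = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    for name, mod in [("full", identity), ("causal", causal), ("causal+bias", causal_with_bias)]:
        print(name, attention(q, q, v, mod))
```

Because each variant is just a function over scores and positions, new combinations need no new attention kernel in this sketch; in PyTorch, the corresponding entry point is `flex_attention` from `torch.nn.attention.flex_attention`, which `torch.compile` can fuse into one kernel.
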
Compiling the combined variants this way enables holistic optimizations across all the attention variants in use, achieving performance comparable to hand-written code.

→ Check out the blog “Unlocking the Latest Features in PyTorch 2.6 for Intel® Platforms”

Built on oneDNN and Python

Intel’s optimizations for PyTorch also benefit from the latest work put into the Intel® Distribution for Python and the Intel® oneAPI Deep Neural Network Library (oneDNN). The Intel Distribution for Python consists of three foundational packages:

It is now

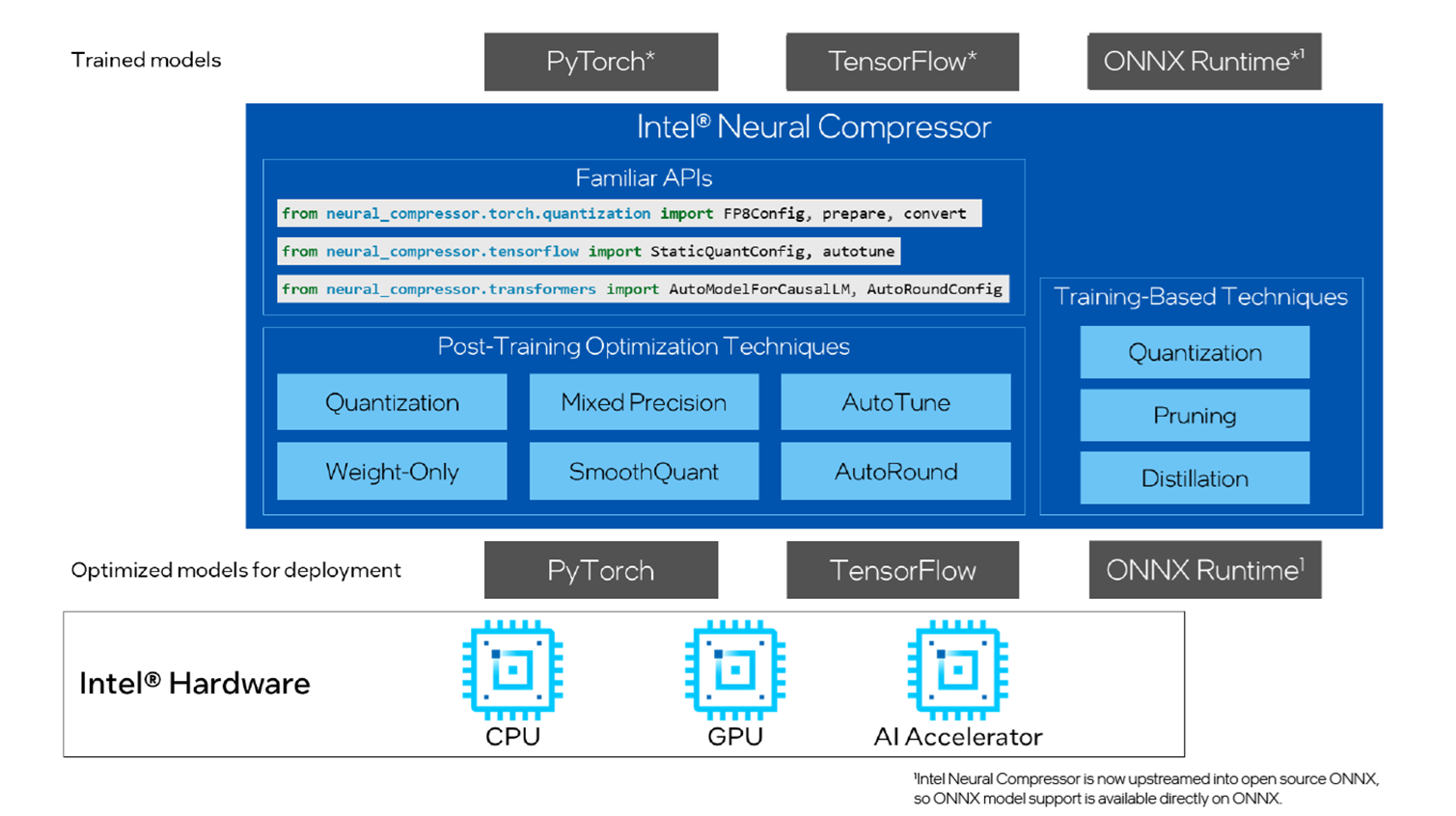

making it even easier to use existing Python implementations unchanged when targeting Intel hardware.

A lot of work went into oneDNN as well. The focus here is on additional optimizations and performance tuning for the latest Intel® Xeon® processors and a wider range of Intel® Arc™ Graphics. Developers can leverage enhanced matrix multiplication and convolution performance with optimizations for the latest Xeon processors, including Intel® Xeon® 6 processors with the Intel AMX instruction set, accelerating data center AI workloads. AI inference on client systems also sees improved oneDNN performance, especially on Intel® Core™ Ultra processor (Series 2) AI PCs with Intel Arc Graphics and on Intel Arc B-Series discrete graphics. oneDNN optimizes AI model performance by focusing on Gated Multi-Layer Perceptron (Gated MLP) and Scaled Dot-Product Attention (SDPA) with an implicit causal mask. Support for INT8- or INT4-compressed keys and values through the Graph API improves both inference speed and efficiency.

Model Compression Speeds Up Inference

Intel® Neural Compressor is designed to improve inference efficiency. It performs model optimization to reduce model size and increase the speed of deep learning inference for deployment on CPUs, GPUs, or Intel® Gaudi® AI accelerators. It is an open-source Python* library that automates popular model optimization techniques, such as quantization, pruning, and knowledge distillation, across multiple deep learning frameworks. It supports state-of-the-art low-bit LLM quantization with INT8, FP8, INT4, FP4, and NF4 (4-bit NormalFloat) data types, as well as sparsity exploitation. Its knowledge distillation, which uses a larger model to improve the accuracy of a smaller model for deployment, makes it well suited for preparing inference that scales from edge to cloud.
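
To make the quantization idea concrete, here is a minimal pure-Python sketch of symmetric, per-tensor INT8 quantization: every weight is divided by one shared scale factor and rounded into the signed 8-bit range. It only illustrates the underlying arithmetic under that assumption; it is not Intel Neural Compressor's API, which automates these steps across frameworks and supports the other data types listed above. The function names `quantize_int8` and `dequantize_int8` are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization of floats onto the INT8 range [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One scale for the whole tensor; guard against an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Map INT8 codes back to approximate float values."""
    return [x * scale for x in q]


if __name__ == "__main__":
    weights = [0.42, -1.27, 0.003, 0.9, -0.35]
    q, scale = quantize_int8(weights)
    restored = dequantize_int8(q, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print("int8 codes:", q)
    print("scale:", scale)
    # Rounding error per element is at most half a quantization step.
    print("max abs error:", max_err, "<=", scale / 2)
```

Storing one byte per weight instead of four (FP32) is where the roughly 4x size reduction of INT8 comes from, at the cost of a rounding error bounded by half the scale; INT4 halves the storage again.
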

Figure 1: Intel® Neural Compressor Overview

Accelerate Visual AI and Rendering

In the new release, the Intel® oneAPI DPC++/C++ Compiler and Intel® DPC++ Compatibility Tool deliver enhanced SYCL interoperability with Vulkan* and DirectX* 12. This enables sharing image texture data directly on the GPU, eliminating extra image copies between CPU and GPU. The result is seamless performance in image processing and advanced rendering applications and higher content-creation productivity. Additionally, we propose a SYCL 2020 bindless image extension to enhance image management capabilities, particularly for dynamic image arrays and for accessing images through handles instead of accessors. These improvements aim to simplify the development of games, graphics, visual AI, and visually appealing dynamic content with SYCL-enabled GPU acceleration. The resulting productivity boost will benefit GenAI, visual AI, and complex image data analytics.

Profile and Tune AI Inference

For edge inference, understanding potential performance bottlenecks and tuning workloads that run on an Intel® Core™ Ultra processor AI PC are key. This processor type has three compute engines: the CPU, the GPU, and the Neural Processing Unit (NPU). The Intel® VTune™ Profiler assists with visual AI optimization by identifying performance bottlenecks in AI workloads that call DirectML or WinML APIs and by pointing out the most time-consuming code sections and critical code paths, including for Python 3.12. For AI PCs, this is combined with platform and microarchitecture awareness that includes the NPU. NPUs are well suited for sustained, low-power AI workloads that run over extended periods. For example, webcam tasks such as background blur and image segmentation deliver better overall performance when executed on an NPU. To maximize the performance of your AI application, you need to ensure maximum utilization of the NPU's compute and memory resources.
Using the Intel VTune Profiler, you can identify bottlenecks in the code running on the NPU. You can

→ Check out the tutorial “Accelerate NPU-Bound AI PC Applications using Intel® VTune™ Profiler on Intel® Core™ Ultra Processor” to read up on how to accelerate AI PC inference workloads.

Figure 2: Intel VTune Profiler NPU Exploration Thread View

→ For a more general tutorial on using the Intel VTune Profiler for performance analysis and tuning of GenAI large language models, check out the article “Enhance the Performance of LLMs using Intel® VTune™ Profiler for High-Performance GenAI.”

High-Performance Data Analytics

Performance scaling, however, is not just about edge inference and image processing in environments limited by form factor or power consumption. It is also about extending the same software development principles and tools to use cases that require capabilities only distributed compute configurations and supercomputers can provide.

Scaling for Distributed Compute

The Intel® oneAPI Collective Communications Library (oneCCL) helps optimize and scale inference and training for very large datasets. Imagine applying AI to large-scale worldwide environmental data surveys, large demographic population studies, epidemiology, or financial risk assessments. Applying the principles of high-performance distributed computing to AI addresses both the extra compute demand and the sheer volume of model data that needs to be accessed. oneCCL thus enables you to train complex models, or models on large datasets, more quickly on distributed multi-node configurations. It is built on the widely used MPI and libfabric interfaces, supporting a variety of interconnects such as Cornelis Networks*, InfiniBand*, and Ethernet. This reliance on common standards makes it easy, by design, to integrate into new and existing deep learning frameworks. With 2025.1, we

Large-dataset AI is where traditional high-performance computing and AI intersect. More and more distributed large-dataset visualization and simulation workloads rely on GPU offload kernels for training, inference, numerical solutions of nonlinear or differential equations, and Monte Carlo simulation. This is where the Intel® MPI Library and the newly released Intel® SHMEM Library come into play. With Intel SHMEM, you can improve the efficiency of communication and remote memory access (RMA) in distributed environments. You can target multi-node accelerator devices and hosts with OpenSHMEM 1.5-compliant features, including point-to-point RMA, strided RMA operations from OpenSHMEM 1.6, atomic memory operations (AMOs), signaling, memory ordering, teams, collectives, and synchronization. Simplify distributed multi-node SYCL* device access with Intel SHMEM SYCL queue-ordered RMA and SYCL host USM access through a symmetric heap API.

→ Check out code samples and the detailed Intel® SHMEM Library documentation.

To support all this, MPI-standard-based distributed message passing has seen a broad set of improvements as well:

→ Learn how the Intel® oneAPI HPC Toolkit and our AI Tools and Frameworks help you fully leverage the power of the Intel® Xeon® 6 processor with E-cores and P-cores.

Reliability, Maintainability, Productivity

The ever-evolving complexity of the problems and algorithms that software applications address creates a need not only for standardized multi-architecture compute kernel offload using language constructs like SYCL*, OpenCL*, or OpenMP*, but also for the ability to write and maintain code, and ensure the reliability of the generated code, more efficiently. This is what this cycle of LLVM-based compiler technology improvements targeted. Support for Ccache* with the Intel® oneAPI DPC++/C++ Compiler accelerates software development by reducing the time spent recompiling code after minor changes. By caching and reusing previous compilation results, it gives developers faster iterations and more efficient workflows, so they can focus on writing high-quality code rather than waiting for builds. On the command line, simply put a call to the ccache utility in front of the compiler invocation:

In a CMake environment, use the -DCMAKE_CXX_COMPILER_LAUNCHER=ccache option.
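
The caching principle described above can be sketched in a few lines: derive a key from everything that affects the compiler output (compiler identity, flags, and source text) and reuse the stored object whenever the key matches. The pure-Python sketch below simulates this with a hypothetical `fake_compile` function standing in for a real compiler and a hypothetical `CompileCache` class. It illustrates the idea only and is not how Ccache is implemented internally; Ccache, for example, also takes included headers and preprocessor output into account.

```python
import hashlib
from typing import Callable, Dict, List


class CompileCache:
    """Toy content-addressed cache: identical inputs reuse the previous result."""

    def __init__(self, compile_fn: Callable[[str, List[str], str], bytes]):
        self.compile_fn = compile_fn
        self.store: Dict[str, bytes] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def key(compiler: str, flags: List[str], source: str) -> str:
        # Hash everything that can change the generated object code.
        h = hashlib.sha256()
        for part in [compiler, *flags, source]:
            h.update(part.encode("utf-8"))
            h.update(b"\0")  # separator so ("ab", "c") and ("a", "bc") differ
        return h.hexdigest()

    def compile(self, compiler: str, flags: List[str], source: str) -> bytes:
        k = self.key(compiler, flags, source)
        if k in self.store:
            self.hits += 1
        else:
            self.misses += 1
            self.store[k] = self.compile_fn(compiler, flags, source)
        return self.store[k]


def fake_compile(compiler: str, flags: List[str], source: str) -> bytes:
    """Hypothetical stand-in for an expensive compiler invocation."""
    return f"{compiler} {' '.join(flags)}: {len(source)} bytes".encode()


if __name__ == "__main__":
    cache = CompileCache(fake_compile)
    src = "int main() { return 0; }"
    cache.compile("icpx", ["-O2"], src)  # miss: compiles
    cache.compile("icpx", ["-O2"], src)  # hit: reused
    cache.compile("icpx", ["-O3"], src)  # miss: flags changed
    print(f"hits={cache.hits} misses={cache.misses}")
```

Changing a flag or a single character of the source produces a new key, so in this sketch only genuinely different inputs trigger a rebuild.
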

Another way to improve productivity and speed up product release is to reduce debugging and validation delays before launch. This can be achieved by catching potential issues early, during the original code design and implementation. Sanitizers help identify and pinpoint undesirable or undefined behavior in your code. They are enabled with a compiler option that instruments your program, adding runtime safety checks to the binary. Using sanitizers can catch issues early in the development process, saving time and reducing the likelihood of costly errors in production code. The Intel® Compilers support a rich set of sanitizers for code targeting CPU and GPU:

New with the Intel® oneAPI DPC++/C++ Compiler 2025.1 release are

The same level of Address Sanitizer support for OpenMP has also been extended to the Intel® Fortran Compiler.

→ To activate the Numerical Stability Sanitizer for C/C++ code, use the flag -fsanitize=numerical.
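
The Numerical Stability Sanitizer works by shadowing floating-point values with higher-precision copies and reporting when the two diverge. The pure-Python sketch below mimics that idea under a simplifying assumption: it uses an exact `fractions.Fraction` as the shadow instead of a wider floating-point type. The `Shadowed` class, the `check` helper, and the tolerance value are hypothetical and not part of the sanitizer. The example reproduces a classic catastrophic cancellation.

```python
from fractions import Fraction


class Shadowed:
    """A float paired with an exact shadow value (conceptual stand-in for NSan)."""

    def __init__(self, value, shadow=None):
        self.value = float(value)
        # Fraction(float) is exact, so the shadow starts out identical.
        self.shadow = Fraction(self.value) if shadow is None else shadow

    def __add__(self, other):
        return Shadowed(self.value + other.value, self.shadow + other.shadow)

    def __sub__(self, other):
        return Shadowed(self.value - other.value, self.shadow - other.shadow)

    def __mul__(self, other):
        return Shadowed(self.value * other.value, self.shadow * other.shadow)

    def relative_error(self) -> float:
        """Relative difference between the float result and its exact shadow."""
        if self.shadow == 0:
            return 0.0 if self.value == 0 else float("inf")
        return float(abs(Fraction(self.value) - self.shadow) / abs(self.shadow))


def check(x: Shadowed, label: str, tolerance: float = 1e-9) -> bool:
    """Report a value whose float result has drifted from its shadow."""
    err = x.relative_error()
    if err > tolerance:
        print(f"{label}: float={x.value!r}, shadow={float(x.shadow)!r}, rel. error={err:.3g}")
        return False
    return True


if __name__ == "__main__":
    big, one = Shadowed(1e16), Shadowed(1.0)
    # 1e16 + 1 is not representable in double precision, so the 1 is lost.
    check((big + one) - big, "(1e16 + 1) - 1e16")  # reported
    check(Shadowed(0.5) + Shadowed(0.25), "0.5 + 0.25")  # exact, not reported
```

The float computation silently returns 0.0 while the shadow holds the true result of 1, which is exactly the kind of divergence the sanitizer is meant to surface in compiled code.
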

→ To activate the Memory Sanitizer for device code, use the flag -Xarch_device -fsanitize=memory and set the runtime variable UR_ENABLE_LAYERS=UR_LAYER_MSAN. To identify memory access problems in host code, use the flag -Xarch_host -fsanitize=address.

→ A detailed discussion of all the sanitizer features can be found here: Find Bugs Quickly Using Sanitizers with Intel® Compilers.
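
MemorySanitizer tracks whether memory has been initialized and reports when uninitialized values are used. As a conceptual illustration only, the pure-Python sketch below models that bookkeeping with a parallel array of "initialized" flags. The `ShadowBuffer` class, `UninitializedReadError`, and `sum_first` are hypothetical names. The real sanitizer instruments compiled code, propagates the shadow state through copies, and reports only when an uninitialized value is actually used (for example, in a branch); this sketch simplifies that to reporting on every read.

```python
from typing import List


class UninitializedReadError(RuntimeError):
    """Raised when code reads an element that was never written."""


class ShadowBuffer:
    """Fixed-size buffer with one shadow flag per element (simplified MSan model)."""

    def __init__(self, size: int):
        self.data: List[int] = [0] * size              # arbitrary backing contents
        self.initialized: List[bool] = [False] * size  # shadow state

    def store(self, index: int, value: int) -> None:
        self.data[index] = value
        self.initialized[index] = True

    def load(self, index: int) -> int:
        if not self.initialized[index]:
            raise UninitializedReadError(f"read of uninitialized element {index}")
        return self.data[index]


def sum_first(buf: ShadowBuffer, n: int) -> int:
    """Sum the first n elements; a buggy caller may pass an n that is too large."""
    return sum(buf.load(i) for i in range(n))


if __name__ == "__main__":
    buf = ShadowBuffer(4)
    for i in range(3):  # bug: only 3 of the 4 elements are written
        buf.store(i, i + 1)
    print(sum_first(buf, 3))  # 1 + 2 + 3 = 6
    try:
        sum_first(buf, 4)
    except UninitializedReadError as err:
        print("sanitizer-style report:", err)
```

Without the shadow flags, the fourth read would quietly return whatever the buffer happened to contain; the flags turn that silent bug into an immediate, located report.
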

The Latest Open Standards Support

Experience parallel offload efficiency with the latest OpenMP and SYCL features. Enjoy more coding flexibility with brand-new Fortran 2023 features for argument handling and list variables:

→ Try the new software development tool versions now for free in a hosted Jupyter notebook on Intel® Tiber™ AI Cloud and start exploring the possibilities.

Get Started with Enhanced Software Development Productivity

The 2025.1 releases of all our tools are available for download here:

Looking for smaller download packages?

Streamline your software setup with our toolkit selector to install full kits or new, right-sized sub-bundles containing only the components needed for specific use cases! Save time, reduce hassle, and get the perfect tools for your project with Intel® C++ Essentials, Intel® Fortran Essentials, and Intel® Deep Learning Essentials.

Try It Out with Ready-to-Use Jupyter Notebooks

Take the new software development tools for a free test drive in a hosted Jupyter notebook on Intel® Tiber™ AI Cloud.
