Authors: Yolanda Chen, Kaining Yuan, Intel

Co-Authors: Yi Zhang, Daoming Qiu, and Lei Shi, Intel

Abstract

The World Wide Web was invented for interlinked documents, and over the years it has evolved into an application platform that can be accessed via a web browser. Progressive Web Applications (PWAs) are built on a set of modern web standards that enhance web applications with modern application features, such as being installed as a desktop or home-screen icon, working with a poor network connection or even completely offline, and sending push notifications to end users. In this paper, we first introduce how we measure the performance of one vital real-world PWA, Office Online, and make the metrics reproducible and reliable. Then we apply Profile Guided Optimization (PGO) with the Office Online workload in the Chrome* build process and compare the performance gain with that from benchmark profiles (Speedometer2 and others). We find that while the overall performance gain of the Office Online profile is 1.6% better than that of the Speedometer2 benchmark profile, about 90% of subcases benefit from PGO with both profiles, about 10% are unaffected, and 13% gain less with the Office Online profile than with the benchmark profile. We identify the causes of the unaffected and degraded cases and propose a more effective way of selecting profiles when applying PGO to improve real-life performance.

Introduction

Microsoft* Office is the most popular productivity software application suite in the world. It also ships with Office Online, the web version of each application in the suite, which provides an online experience that closely matches its native counterpart. Some of the Microsoft Office Online applications are already PWAs and have gained a great number of users.

Measuring the performance of a PWA or vanilla web application is different from measuring the performance of a web site. Web applications such as the Microsoft Office Online applications are often designed as Single Page Applications (SPAs): there is no page navigation in the entire life cycle of the SPA, resources are loaded and unloaded dynamically, which leads to massive changes within the same web page, and there is heavy JavaScript* computation demand. Traditional performance metrics for web pages no longer work for such web applications. For example, LightHouse* [1] is a tool built for measuring web page performance and PWA readiness; it can report several page-loading performance metrics and a checklist of PWA readiness, but it doesn’t measure task completion performance inside a web page. There are existing workloads, e.g., WebXPRT [2], which can measure task completion performance inside a web page, but they only perform designed tasks instead of real-world tasks. Therefore, it is necessary for us to design a new Microsoft Office Online PWA workload, as a real-world productivity web application workload, to help us understand representative usage scenarios and find performance bottlenecks.

PGO is a widely used compiler optimization technique that uses profiling to improve application runtime performance. It collects profile data from a sample run of the program across a representative input set. The caveat, however, is that the sample of data fed to the program during the profiling stage must be statistically representative of the typical usage scenarios; otherwise, profile-guided feedback has the potential to harm the overall performance of the final build instead of improving it [3]. Applications normally collect profile data from benchmarks, which improves benchmark performance dramatically without guaranteeing any benefit to real-life performance.

Contributions: This paper makes the following contributions.

- Developed the world’s first Office Online workload: We develop a benchmarking infrastructure and a local cache server to run real-life user scenarios over all four Office Online products: Outlook, Word, Excel and PowerPoint. We design metrics to measure user experience, load time and user task response time.

- Tackled PGO for a multi-process, multi-threaded application: We identify ways to collect precise profiles when running Office Online over multiple browser tabs with 100+ browser processes, and to discard profiles that are not critical to performance.

- Performance analysis of the Office Online profile and benchmark profiles: Using the Office Online workload and the collected profiles, we conduct a performance analysis of PGO over 52 subcases, comparing the performance gain between benchmark, Office Online and merged profiles. Even though the Speedometer 2.0 profile shows a 1.6% regression compared with the Office Online profile, this benchmark profile still contributes 80% of the PGO gain. We also find that 10% of the cases are not affected by either benchmark or Office Online PGO. We identify the root cause and provide guidance on selecting samples for PGO to improve real-world PWA performance.

Design of Office Online Workload

There are industry benchmarks that measure the performance of native Office products, e.g., PCMark [4] and SYSmark [5]. They measure various user operations and experiences across different kinds of PC software, of which Office is one part. The Office scenarios are mainly about opening and closing an Office document and a limited set of simple operations such as copy and paste.

Our design goal for the Office Online workload is to cover more than those benchmarks do. It should capture typical real-life user manipulations of Office Online as workload scenarios and report performance through metrics that are reliable and repeatable. The design includes scenario and metric definitions, the network cache server design and the automation execution infrastructure design.

Scenarios and Metrics Definition

The workload scenarios cover four Office Online products: Outlook, Word, Excel and PowerPoint. For each product, only typical scenarios are implemented as subcases. The scenarios are sets of user manipulations of Office Online products with clear prerequisites and teardown operations. They include but are not limited to:

- Outlook: loading the mail list; creating, editing, forwarding, sending and deleting a mail; editing mail content; creating and editing a calendar item; creating and editing a contact item, etc.

- Word: copying text from Excel, the web or another Word document; creating and editing text and rich content; opening large content; page scrolling, etc.

- Excel: creating and editing tables; opening large content; editing pivot charts; sheet scrolling, etc.

- PowerPoint: opening and editing slides; opening all formatted pictures; opening large slides, etc.

We also take popular web workloads such as Speedometer [6], JetStream [7] and WebXPRT [2] as references for scoring calculation and test scenario definition.

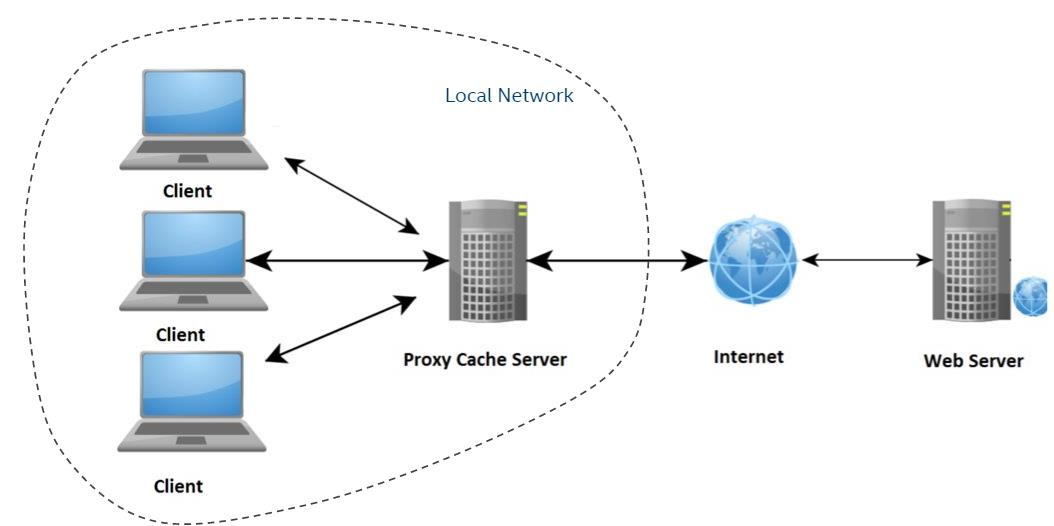

Network Cache Server

One of the biggest challenges for real-life workload measurement is that network conditions are not stable, which leads to severe performance variance. To eliminate the network impact on performance measurement, we introduce a network cache server, which records the request and response packets exchanged between the clients and the Microsoft Office Online server and caches them on a proxy cache server.

Figure 1. Cache server design

Testing clients send the same requests to the proxy cache server, and this server returns the corresponding response packets as if they came from the Microsoft Office Online server. In this way the measurement procedure bypasses the Office Online server and simulates exactly the same server environment inside the local network, keeping network latency to a minimum.

Automation Execution Infrastructure

Test scenarios are implemented as test scripts written in JavaScript. Each test scenario is represented by a test case, defined by a JavaScript function. A typical test case performs the following steps:

- Make sure test environment is ready

- Prepare test data

- Perform user action via automation provided by web browsers

- Measure the task completion time

- Cleanup test data
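The five steps above can be sketched as a small harness. This is only an illustrative sketch written in Python for brevity (the actual test scripts are JavaScript functions driven by browser automation); the `Scenario` type, its field names and `run_scenario` are hypothetical and do not come from the workload itself.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class Scenario:
    """Hypothetical container for the callbacks of one test case."""
    name: str
    check_environment: Callable[[], bool]  # step 1: is the environment ready?
    prepare: Callable[[], None]            # step 2: prepare test data
    user_action: Callable[[], None]        # step 3: automated user action
    cleanup: Callable[[], None]            # step 5: clean up test data


def run_scenario(scenario: Scenario) -> float:
    """Run one scenario and return the task completion time in milliseconds."""
    if not scenario.check_environment():
        raise RuntimeError(f"environment not ready for {scenario.name}")
    scenario.prepare()
    try:
        # Step 4: only the user action is inside the timed (scoring) region.
        start = time.perf_counter()
        scenario.user_action()
        elapsed_ms = (time.perf_counter() - start) * 1000.0
    finally:
        # Cleanup runs even if the user action fails.
        scenario.cleanup()
    return elapsed_ms
```

Keeping preparation and cleanup outside the timed region mirrors the idea that only the user task itself contributes to the score.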

Test cases can be run individually or collectively as the workload. Each test case can be run in 3 modes:

- live-mode: bypass the proxy cache server and connect to the live cloud server.

- record-mode: connect to the live cloud server and at the same time load the proxy cache server with the web traffic.

- replay-mode: use the web traffic loaded in the proxy cache server and bypass the live cloud server. This reduces run-to-run variance to a minimum.

We run the whole collection of test cases in record-mode to load the proxy cache server, and then run the whole collection again in replay-mode to test performance with the least run-to-run variance.
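The record-then-replay flow can be illustrated with a minimal in-memory sketch. It is not the actual proxy cache server: the `ProxyCache` class, the request tuple layout and the hashing of the request body into the cache key are all assumptions made for illustration, and live mode is modelled here as a simple pass-through rather than a true bypass.

```python
import hashlib
from typing import Callable, Dict, Tuple

# Hypothetical request shape: (HTTP method, URL, request body).
Request = Tuple[str, str, bytes]


def request_key(request: Request) -> str:
    """Build a cache key so that identical requests map to the same entry."""
    method, url, body = request
    return f"{method} {url} {hashlib.sha256(body).hexdigest()}"


class ProxyCache:
    """Toy model of the three run modes: live, record and replay."""

    def __init__(self, live_server: Callable[[Request], bytes]) -> None:
        self._live_server = live_server
        self._store: Dict[str, bytes] = {}

    def handle(self, request: Request, mode: str) -> bytes:
        if mode == "live":
            # No caching at all: every request reaches the live server.
            return self._live_server(request)
        if mode == "record":
            # Forward to the live server and remember the response.
            response = self._live_server(request)
            self._store[request_key(request)] = response
            return response
        if mode == "replay":
            # Serve from the cache only; raises KeyError if never recorded.
            return self._store[request_key(request)]
        raise ValueError(f"unknown mode: {mode}")
```

In replay mode the live server is never contacted, which is what removes network conditions from the measurement.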

Result Reliability

The score is calculated only from the operations that users care about. The preparation and resource cleanup phases of a scenario are excluded from the scoring region. With the help of the network cache server, network latency can be ignored. Even under such an ideal testing environment, we still observed result variance. The reason is that the Chrome browser is a complicated software stack that runs dynamic code in web applications; garbage collection (GC) and timers are triggered on demand, which is not predictable. This affects the performance of a single workload run.

To reduce this impact, each measurement is taken 7 times by default; the maximum and minimum results are discarded, and the result is calculated as the geometric mean of the remaining 5 measurements.
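The aggregation rule described above can be sketched as follows. The function name `trimmed_geomean` is hypothetical; the rule itself (drop the single largest and smallest sample, then take the geometric mean of the rest) is the one stated in the text.

```python
from statistics import geometric_mean
from typing import Sequence


def trimmed_geomean(samples: Sequence[float]) -> float:
    """Drop the min and max sample, then return the geometric mean of the rest."""
    if len(samples) < 3:
        raise ValueError("need at least 3 samples to drop both min and max")
    ordered = sorted(samples)
    return geometric_mean(ordered[1:-1])
```

With the default of 7 measurements, this leaves 5 values for the geometric mean, so a single outlier in either direction (for example, a run disturbed by GC) does not enter the result.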

Run-to-run variance: the standard deviation of running the whole workload is typically 0.5% of the total time of all tested tasks, while the standard deviation of an individual test case is typically 5% of the task completion time of that test case. The standard deviation may differ across hardware platforms, e.g., it varies from 0.3% to 1.4% depending on various conditions: the nature of the hardware platform, the cooling conditions, manufacturing flaws, etc.

Enable PGO on Office Online

Google selected the Speedometer 2.0 benchmark to apply PGO to the Chrome browser. According to their analysis, the Speedometer benchmark approximates many sites due to its inclusion of real web frameworks including React, Angular, Ember, and jQuery. They investigated snapshots of popular web pages such as Reddit*, Twitter*, Facebook*, and Wikipedia, but did not include Office Online or PWAs [8].

Because hardware-based PGO, e.g., AutoFDO [9], is not yet implemented for the Windows* platform, we only evaluate instrumented PGO performance in this paper.

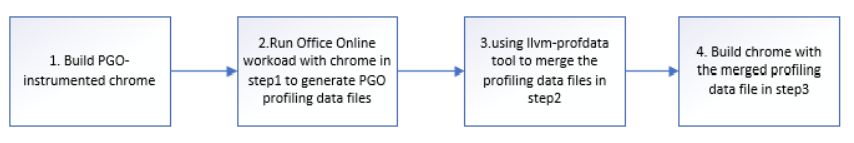

To enable PGO on the Office Online workload, we use the following steps:

Figure 2. Steps of enabling PGO on Office Online workload

Step 1: Build Chrome v89.0.4386.0 with args “chrome_pgo_phase=1”

Step 3: LLVM version v11.1.0-rc2

Step 4: Build Chrome v89.0.4386.0 with args “chrome_pgo_phase=2” and the specified pgo_data_path
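As a rough illustration of how these steps might be scripted, the sketch below only assembles command lines; it does not run them. The GN arguments `chrome_pgo_phase` and `pgo_data_path` come from the steps above. Everything else is an assumption: the output directory names, the file names, and the use of `llvm-profdata merge` to combine the `.profraw` files into a single profile before the phase-2 build.

```python
from typing import List, Sequence


def gn_gen_command(out_dir: str, pgo_phase: int, pgo_data_path: str = "") -> List[str]:
    """Assemble a `gn gen` command line for one PGO phase (not executed here)."""
    gn_args = [f"chrome_pgo_phase={pgo_phase}"]
    if pgo_phase == 2:
        if not pgo_data_path:
            raise ValueError("phase 2 needs the merged profile path")
        gn_args.append(f'pgo_data_path="{pgo_data_path}"')
    return ["gn", "gen", out_dir, "--args=" + " ".join(gn_args)]


def merge_command(profraw_files: Sequence[str], output: str) -> List[str]:
    """Assemble an `llvm-profdata merge` command (assumed merge step)."""
    return ["llvm-profdata", "merge", "-o", output, *profraw_files]


if __name__ == "__main__":
    # Hypothetical directory and file names, for illustration only.
    print(gn_gen_command("out/pgo_instrumented", 1))
    print(merge_command(["chrome-1.profraw", "child_pool-0.profraw"], "office.profdata"))
    print(gn_gen_command("out/pgo_optimized", 2, "office.profdata"))
```

Between the instrumented build and the merge, the workload has to be run against the instrumented browser so that the `.profraw` files exist.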

Due to the complexity of the Office Online workload, we encountered several issues in collecting and merging profiles, and resolved them as described below.

Issue 1: How should we deal with the PGO profiling data files of different processes, for example, the browser process?

The Office Online workload has more than 50 test cases. It launches a Chrome browser to run most of the test cases, and each test case opens and closes a new tab, so under the hood Chrome spawns more than 100 render processes. There are also several cold-start test cases, which create and close a new Chrome browser several times in each run. As a result, the profiling data files include more than 100 chrome-xx.profraw data files from browser processes and 4 child_pool-[0-3].profraw data files from subprocesses (including render processes). The issue is whether to merge those chrome-xx.profraw files into the final profiling data file.

Although the scoring part of each workload test case runs in the render process, it also depends on the browser process (for example, for network communication), so including all the profiling data is better for the workload performance.

Issue 2: How should we deal with potential conflicts arising from multiple render processes writing to the same profiling data file?

A test case in the Office Online workload normally has several iterations (one warm-up iteration and several scoring iterations). In each iteration, the test case normally opens and closes a new tab, so a new render process is created and destroyed. Over the whole workload run, more than 100 render processes are created dynamically. All those processes write their profiling data to four data files: child_pool-[0-3].profraw.

The issue is whether extra handling is needed to resolve conflicts when those render processes write to only 4 data files. First, the test cases run serially: they run one by one, and the next case starts only after the previous one has finished, so there is no conflict between the render processes of different cases. Second, within a test iteration, normally fewer than 4 render processes are running, so the probability of a conflict is quite low. Third (and most importantly), Chrome’s PGO approach automatically merges the profiling data when multiple processes try to write to the same profiling file [10].
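To make the third point concrete, the toy model below shows why many short-lived processes can share a small pool of files when counters are merged additively instead of overwritten. It is a simplified illustration, not Chrome’s or LLVM’s implementation: the mapping of a process to a pool slot by `process_id % POOL_SIZE` and the dictionary standing in for the files on disk are assumptions.

```python
from collections import Counter
from typing import Dict, Mapping

POOL_SIZE = 4  # matches the four child_pool-[0-3].profraw files


def pool_file_for(process_id: int) -> str:
    """Pick a pool file for a process (hypothetical slot assignment)."""
    return f"child_pool-{process_id % POOL_SIZE}.profraw"


def write_profile(pool: Dict[str, Counter], process_id: int,
                  counters: Mapping[str, int]) -> None:
    """Merge one process's execution counters into its pool file.

    Counts are added to whatever the file already holds, so data from an
    earlier process that used the same slot is preserved.
    """
    pool.setdefault(pool_file_for(process_id), Counter()).update(counters)
```

For example, if process 1 records 3 calls of some function and process 5 later records 2 calls of it in the same slot, the slot ends up holding 5 calls rather than only the last writer’s 2.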

Issue 3: How should we differentiate the profiling data in the non-scoring and scoring regions of the workload?

When running the Office Online workload, each test case has some preparation steps and some finalization steps, for example, logging into the Outlook account. Those steps are not inside the scoring region, but they are necessary and generate profiling data. They occupy ~2/3 of the total workload running time.

The issue is whether to separate out the profiling data from the non-scoring regions. There are too many non-scoring regions, and the current Chrome PGO approach doesn’t provide scoring-region support, so using the profiling data from the scoring region only would be too complicated. Another reason is that those preparation steps are common web operations, and improving their performance is also meaningful.

We also tried increasing the number of iterations of the test cases so that the scoring region occupies the major part of the workload running time, but the final performance impact was similar.

Issue 4: How should we handle the unaffected and degraded test cases, whose scores are not noticeably increased by PGO?

We found that the scores of some test cases increase by a trivial percentage (less than 1%) or even decrease slightly after applying PGO. The underlying cause might be that the test case is not sensitive to PGO or that the final profiling data is inaccurate for the test case.

The issue is whether to include the profiling data files of those cases in the final profiling data.

We select only the positive cases (whose scores increase by at least 5% after applying PGO) and compare performance, for example by rebuilding Chrome with final profiling data taken only from the positively impacted cases and comparing the performance impact.
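The selection of positive cases amounts to a simple threshold filter, sketched below. The function name and the dictionary layout are hypothetical; the 5% threshold is the one stated above. In the usage example, the gains for “Scroll-Word” and “Reply-mail” are the values reported later in this paper, while “Hypothetical-case” and its value are made up for illustration.

```python
from typing import Dict, List


def select_positive_cases(gain_pct_by_case: Dict[str, float],
                          threshold_pct: float = 5.0) -> List[str]:
    """Return the names of cases whose PGO gain is at least the threshold."""
    return sorted(
        name for name, gain in gain_pct_by_case.items() if gain >= threshold_pct
    )


if __name__ == "__main__":
    gains = {
        "Scroll-Word": 0.19,        # reported in this paper
        "Reply-mail": 16.09,        # reported in this paper
        "Hypothetical-case": -0.4,  # illustrative value only
    }
    print(select_positive_cases(gains))
```

Only the profiles collected from the selected cases would then be merged into the final profiling data.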

Performance Results and Analysis

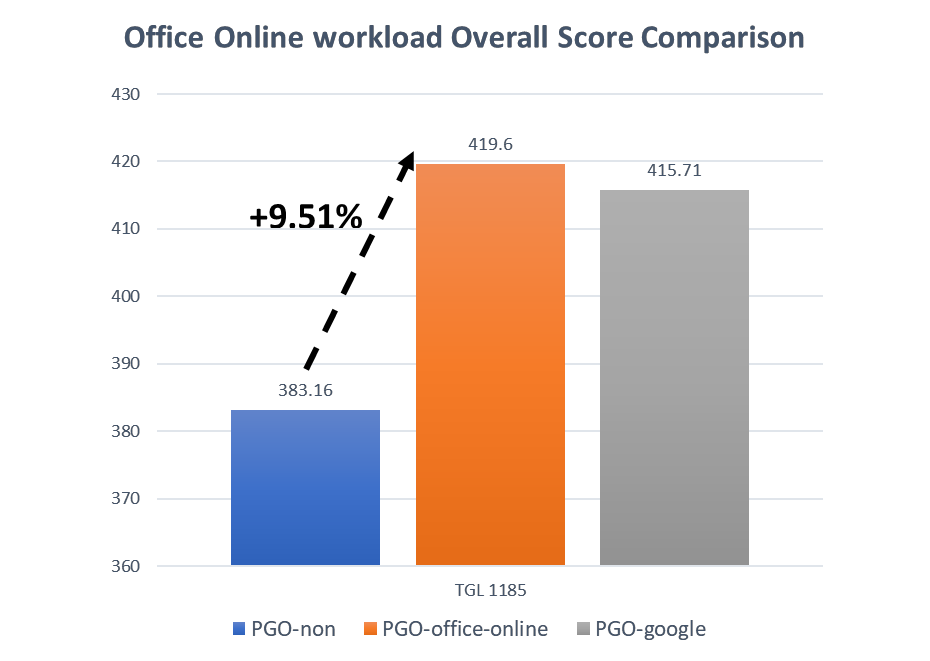

We collected Office Online workload performance scores on three builds of Chrome: built with PGO profile data from the Office Online workload (abbreviated as “pgo-office-online”); built with PGO disabled (abbreviated as “pgo-non”); and built with Google’s default PGO profile data (abbreviated as “pgo-google”).

The hardware and system information is as follows:

| Category | Intel |
|---|---|
| System Manufacturer | MSI |
| CPU | TGL i7-1185G7 @ 3 GHz |
| GPU | Intel® Iris® Xe Graphics |
| GPU Driver Version | 27.20.100.8935 |
| Memory | 16 GB |
| Screen Resolution | 1920 x 1080 |
| Power Governor | Balanced |
| OS | Windows® 10 Home 64-bit |
| Browser | Chrome 89.0.4386.0 |

Overall Scores Comparison

We ran each build for 7 rounds. The results are calculated by removing the maximum and minimum scores and then averaging the remaining 5 scores. As Figure 3 shows, “pgo-office-online” achieves a 9.51% performance improvement over “pgo-non”, while “pgo-google” achieves 8.49% (“pgo-office-online” is 0.93% better than “pgo-google”).

Figure 3. Office Online workload Overall Score Comparison

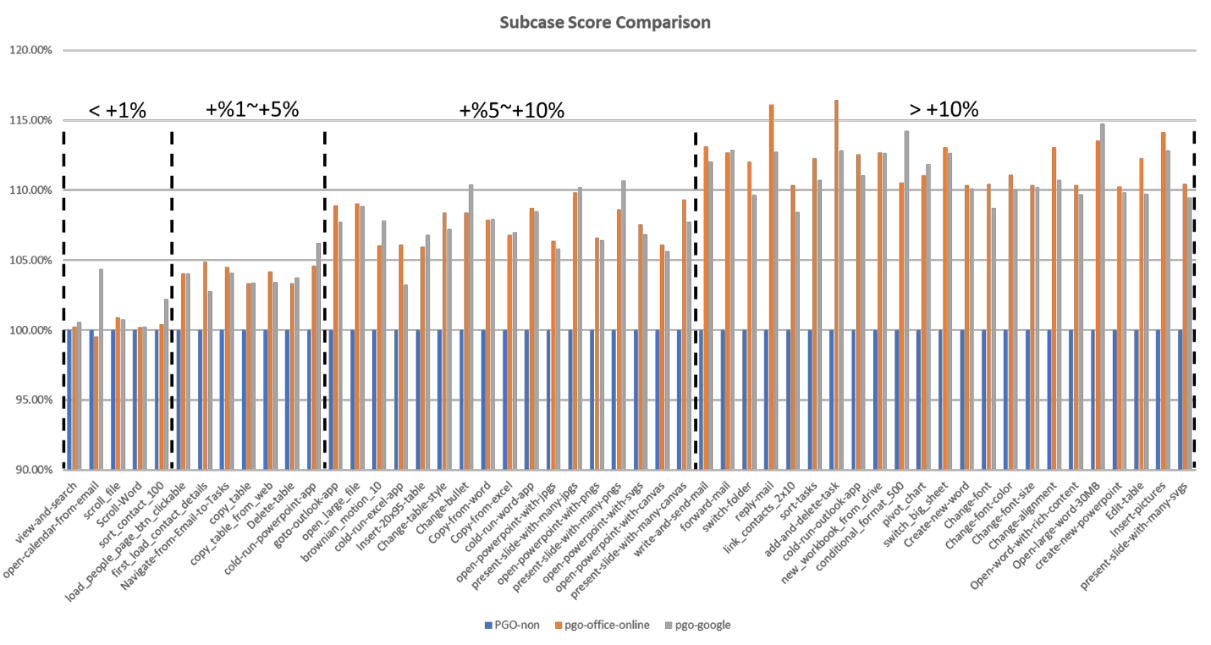

Sub-cases Score Comparison

The overall score of “pgo-office-online” is 9.51% better than that of “pgo-non”, but the sub-cases do not all improve equally. The improvement of each sub-case ranges from less than 1% to more than 10%. To get a clear picture of the performance improvement of each sub-case, we divided the cases into four regions:

- region 1: “pgo-office-online” improves performance by < 1% over “pgo-non”

- region 2: “pgo-office-online” improves performance by 1% ~ 5% over “pgo-non”

- region 3: “pgo-office-online” improves performance by 5% ~ 10% over “pgo-non”

- region 4: “pgo-office-online” improves performance by > 10% over “pgo-non”

As Figure 4 shows, region 1 has 5 cases, region 2 has 7 cases, region 3 has 17 cases and region 4 has 23 cases.
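The four regions can be expressed as a small classification function. This is a sketch under one assumption: the text does not say which region a value exactly on a boundary belongs to, so the boundary handling below (1% and 5% go to the higher region, exactly 10% stays in region 3) is a choice made for illustration. The per-region counts are the ones reported above.

```python
from typing import Dict


def region_of(improvement_pct: float) -> int:
    """Map a sub-case improvement (in percent) to region 1..4."""
    if improvement_pct < 1.0:
        return 1
    if improvement_pct < 5.0:
        return 2
    if improvement_pct <= 10.0:
        return 3
    return 4


def region_shares(counts: Dict[int, int]) -> Dict[int, float]:
    """Convert per-region case counts into percentage shares of all cases."""
    total = sum(counts.values())
    return {region: 100.0 * n / total for region, n in counts.items()}


# Counts reported above: 5 + 7 + 17 + 23 = 52 sub-cases in total.
REPORTED_COUNTS = {1: 5, 2: 7, 3: 17, 4: 23}
```

With the reported counts, region 1 accounts for 5 of 52 sub-cases, which is roughly the 10% of cases described as unaffected, and regions 3 and 4 together cover 40 of 52 sub-cases.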

Figure 4. Sub-case Score Comparison

Investigation with Sub-cases “Scroll-Word” & “Reply-mail”

As Figure 4 shows, some cases gain very little from PGO while others improve by more than 10%. We investigated further to clarify why PGO has little effect on some cases but yields an obvious improvement in others. We picked the sub-case “Scroll-Word”, which improves by only 0.19% with PGO, and the sub-case “Reply-mail”, which improves by 16.09% with PGO.

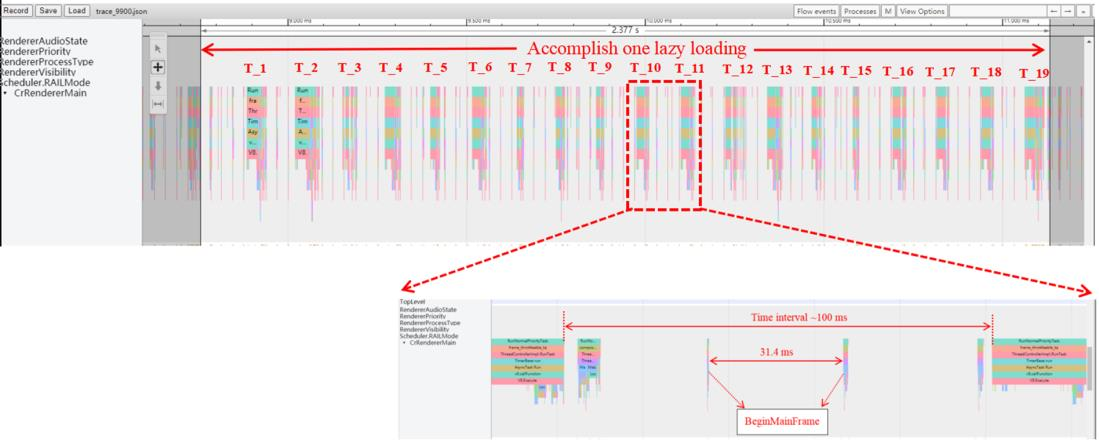

“Scroll-Word” scrolls a Word document from page 1 to a certain page, which triggers delayed content loading (lazy loading), and measures how much time it takes, indicating how fast a user can see all the content of a typical Word document.

We use the Chrome Tracing tool to investigate what Office Online does when the user scrolls a document. As Figure 5 shows, one lazy load (updating the DOM elements) is divided into several tasks, separated by time intervals initiated by a JS timer. During a complete lazy load, task execution occupies only about 10% of the time, while the time intervals occupy nearly 90%. This makes CPU utilization very low, and PGO can only improve the task execution time, not the interval time. The Office Online workload is a real-life workload, and in real-life usage Office Online operations may rely on these time intervals to wait for network responses. Our guidance is therefore to integrate only the profiles of sub-cases that benefit from Office Online PGO into the official profile, which achieves about 1% additional performance gain.
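The limit that idle intervals place on PGO can be estimated with a simple Amdahl-style calculation. This is a back-of-the-envelope sketch, not a measurement: the 10% busy fraction comes from the breakdown above, while the 12% reduction in busy time used in the example is an illustrative assumption, not a number measured for this sub-case.

```python
def overall_time_reduction(busy_fraction: float, busy_time_reduction: float) -> float:
    """Fraction of total time saved when only the busy part gets faster.

    busy_fraction: share of wall-clock time spent executing tasks (0..1).
    busy_time_reduction: fraction by which PGO shortens that busy time (0..1).
    """
    if not 0.0 <= busy_fraction <= 1.0 or not 0.0 <= busy_time_reduction <= 1.0:
        raise ValueError("both arguments must be fractions between 0 and 1")
    return busy_fraction * busy_time_reduction


if __name__ == "__main__":
    # 10% busy time, and an assumed 12% speed-up of that busy time.
    saved = overall_time_reduction(0.10, 0.12)
    print(f"total time saved: {saved:.1%}")
```

Under these assumptions, the total time shrinks by only about 1.2%, and even an infinitely fast task phase could save at most the 10% busy share, which is consistent with sub-cases dominated by timer intervals showing almost no gain.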

Figure 5. Lazy loading breakdown

Conclusion 1: most cases with <1% performance improvement likely share similar behavior: low CPU utilization, with long idle time intervals or heavy dependence on the network.

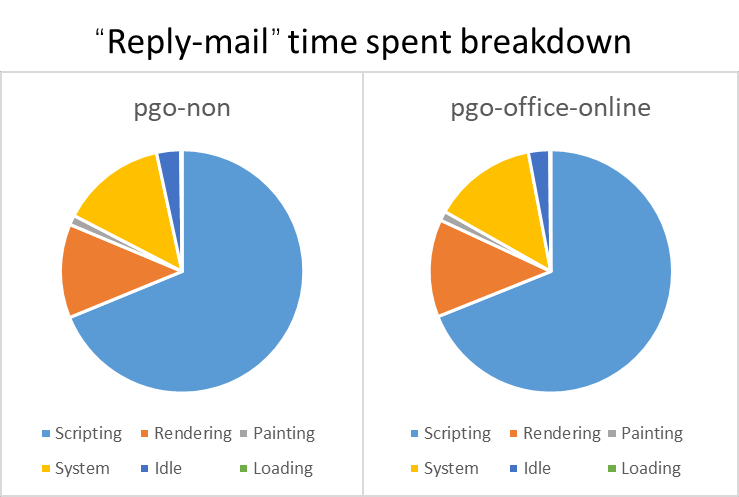

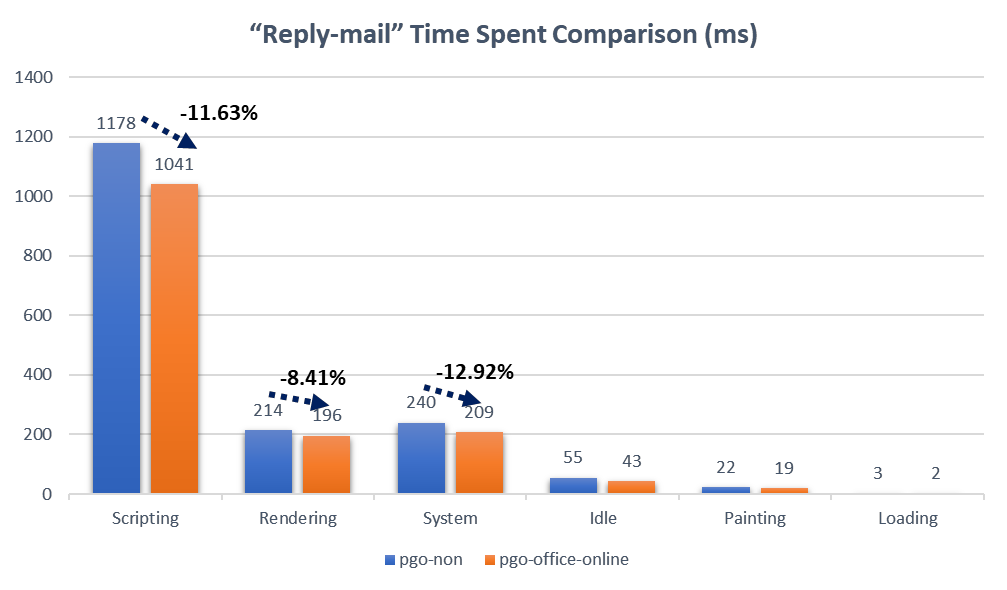

The “Reply-mail” case opens an email and replies with text and an attachment, and measures how much time it takes, indicating how fast a user can reply to an email.

We use Chrome DevTools to investigate where Office Online spends time when the user replies to an email. From Figure 6, we can see that the “Reply-mail” case spends most of its time on Scripting (JavaScript execution, including JIT-compiled code), Rendering and System calls in both “pgo-non” and “pgo-office-online”. This means the case has higher CPU utilization and less idle time. As Figure 7 shows, the “pgo-office-online” build spends 11.63% less time on Scripting, 8.41% less time on Rendering and 12.92% less time on System calls. This means PGO takes effect and gives the “pgo-office-online” build a higher score than “pgo-non” on this case.

Figure 6. “Reply-mail” time spent breakdown

Figure 7. “Reply-mail” time spent comparison

Conclusion 2: most cases with >10% performance improvement have less idle time and higher CPU utilization, and spend most of their time on JS scripting and rendering.

Improving PGO profile for Office Online

From Figure 4, we found that some cases perform worse with “pgo-office-online” than with “pgo-google”. To improve Office Online performance, we collected PGO profile data from the cases that improve more than with “pgo-google” and merged it with the Google PGO profile data to build Chrome with a new profile. With this new profile, the Office Online workload shows a 9.46% performance improvement over “pgo-non” and a 0.89% improvement over “pgo-google”.

To contrast with Google’s analysis of the Speedometer2 benchmark for popular web sites, we also ran the Office Online workload with a Chrome built with a Speedometer2 PGO profile. The result shows a 7.74% performance improvement over “pgo-non” and a 1.61% performance regression relative to “pgo-office-online”.
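The relative figures quoted in this section can be cross-checked from the improvements over “pgo-non”. The sketch below assumes that scores are higher-is-better and that every improvement is measured against the same “pgo-non” baseline; the function names are hypothetical. Because the published percentages are rounded, the derived values agree with the reported ones only to within about 0.01 to 0.02 percentage points.

```python
def relative_gain_pct(gain_a_pct: float, gain_b_pct: float) -> float:
    """Gain of build A over build B, given each build's gain over one baseline."""
    return ((1.0 + gain_a_pct / 100.0) / (1.0 + gain_b_pct / 100.0) - 1.0) * 100.0


def share_of_gain_pct(gain_a_pct: float, gain_b_pct: float) -> float:
    """How much of build B's gain over the baseline build A already delivers."""
    return 100.0 * gain_a_pct / gain_b_pct


if __name__ == "__main__":
    # Improvements over "pgo-non" as reported in this paper.
    office, google, merged, speedometer = 9.51, 8.49, 9.46, 7.74
    print(f"office vs google:      {relative_gain_pct(office, google):+.2f}%")
    print(f"merged vs google:      {relative_gain_pct(merged, google):+.2f}%")
    print(f"speedometer vs office: {relative_gain_pct(speedometer, office):+.2f}%")
    print(f"speedometer share:     {share_of_gain_pct(speedometer, office):.1f}%")
```

The last line shows where the statement that the benchmark profile delivers about 80% of the PGO gain comes from: 7.74 out of 9.51 percentage points.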

Summary

This paper presents a comparative analysis of benchmark profiles and real-world PWA profiles for optimizing the performance of the Office Online PWA with a common compiler optimization technology. We design measurable performance metrics and develop a reproducible automation infrastructure to test real-life PWA performance. We find that even though there are obvious user experience differences between PWAs and traditional web sites, PGO driven by a traditional web benchmark (such as Speedometer 2.0) can still deliver over 80% of the PGO improvement in PWA performance.

The overall score of “pgo-office-online” is 9.51% better than that of “pgo-non” and 0.93% better than that of “pgo-google”. To improve the performance of the “pgo-google” build of Chrome, we create a new profile that merges the “pgo-google” profile data with the profile data of the cases that improve more with “pgo-office-online” than with “pgo-google”. The new profile yields a 0.89% performance improvement over “pgo-google”. We also identify 13% of subcases that gain less with the Office Online profile than with Google’s benchmark profiles. The underlying cause might be that these test cases are not sensitive to PGO, or that the final profiling data is not accurate enough because of the execution time spent outside the workload scoring region. That time occupies around two thirds of the total workload running time, so PGO profiling restricted to the region of interest requires further exploration.

Acknowledgments

We would like to thank Wei Wang, Donna Wu, Ting Shao, Leon Han, Hong Zheng, Qi Zhang, Jonathan Ding, Xin Wang and his team, and Shiyu Zhang and his team for their support and guidance in our work.

References

- [1] LightHouse

- [2] WebXPRT

- [3] PGO

- [4] PCMark

- [5] SYSmark

- [6] Speedometer workload

- [7] JetStream workload

- [8] Real-world JavaScript Performance

- [9] Dehao Chen, David Xinliang Li, Tipp Moseley. AutoFDO: Automatic Feedback-Directed Optimization for Warehouse-Scale Applications. CGO’16, March 12-18, 2016, Barcelona, Spain. ACM.

- [10] Check if the Clang-profiling file pool index range should be increased for PGO builds. https://bugs.chromium.org/p/chromium/issues/detail?id=1059335