Get Top Performance on Modern Multicore Systems

Dmitri Goloubentsev, head of Automatic Adjoint Differentiation, MatLogica,

and Evgeny Lakshtanov, principal researcher, Department of Mathematics,

University of Aveiro, Portugal and MatLogica

@IntelDevTools

Get the Latest on All Things CODE

Sign Up

If you’re interested in high-performance computing, high-level, object-oriented languages aren’t the first things that come to mind. Object abstractions come with a runtime penalty and are often difficult for compilers to vectorize. Adapting your code for multithreading execution is a huge challenge, and the resulting code is often a headache to maintain.

You’re in luck if performance-critical parts of your code are localized and can be flattened and safely parallelized. However, many performance-critical problems can benefit from object-oriented programming abstractions. We propose a different programming model that lets you achieve top performance on single instruction multiple data (SIMD), non-uniform memory access (NUMA) multicore systems.

Operator Overloading for Valuation Graph Extraction

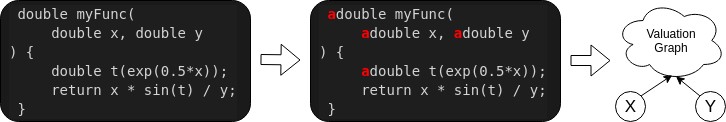

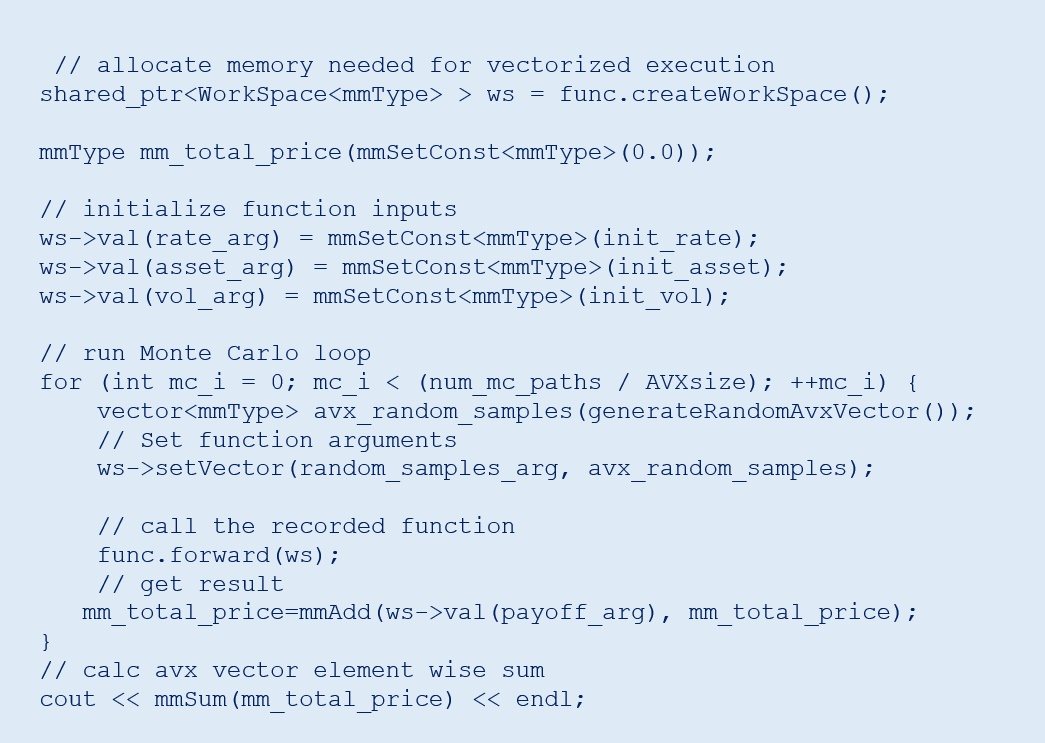

We focus on problems where the same function, F(1), needs to be run on a dataset X[i]. For example, let’s look at Monte Carlo simulations in the finance world where, X[i] is a random sample and F(.) is a pricing function (Figure 1). We use an operator overloading pattern to extract all primitive operations performed by F(.).

Figure 1. Example of operator overloading pattern

This pattern is common in automatic adjoint differentiation (AAD) libraries. Unlike traditional AAD libraries, we do not build a data structure to represent the valuation graph. Instead, we compile binary machine code instructions to replicate valuations as defined in the graph, which can be seen as a just-in-time (JIT) compilation. However, we don’t work with the source code directly. Instead, we compile a valuation graph produced by the user’s algorithm. Since we want to apply F(.) to a large set of data points, we can compile this code to expand all scalar operations to full SIMD vector operations and process four Intel® Advanced Vector Extensions 2 (Intel® AVX2) or eight Intel® Advanced Vector Extensions 512 (Intel® AVX-512) data samples in parallel.

Learn by Example

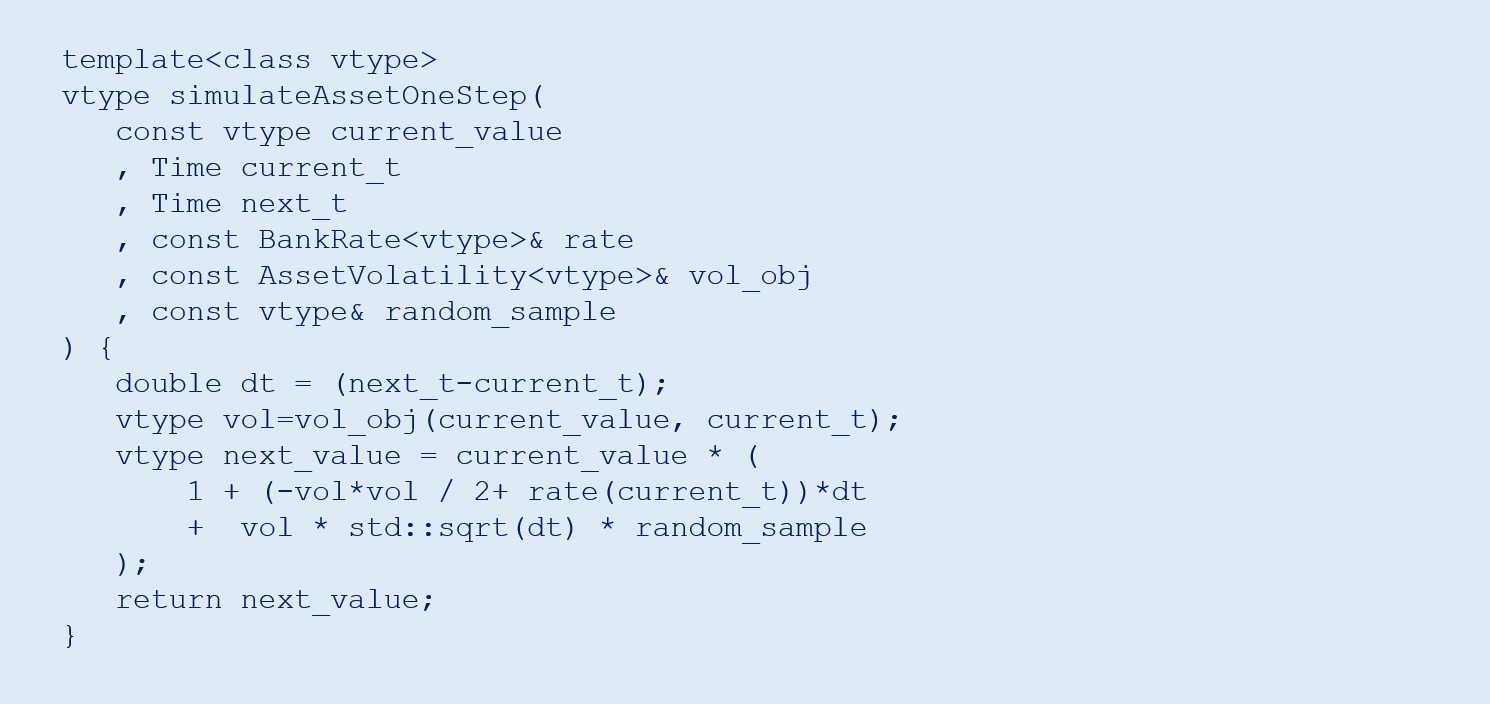

Let’s look at a simple option pricing framework where we use various abstract business objects. In this example, we simulate asset values as a random process.

The classes BankRate and AssetVolatility can define different ways of computing model parameters, and implementation can be done deep in the derived classes. This function can be used with the native double type. When applied along timepoints, t[i] can be used to simulate asset value at the option expiry.

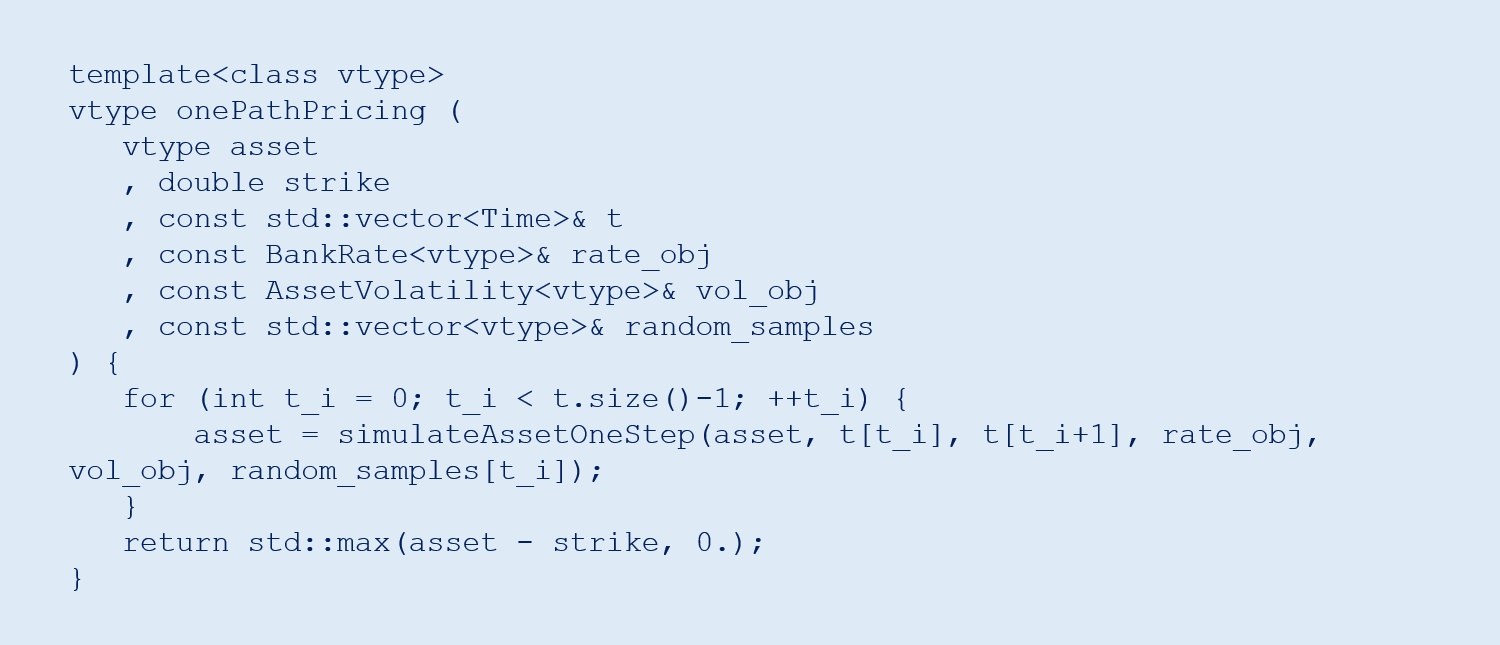

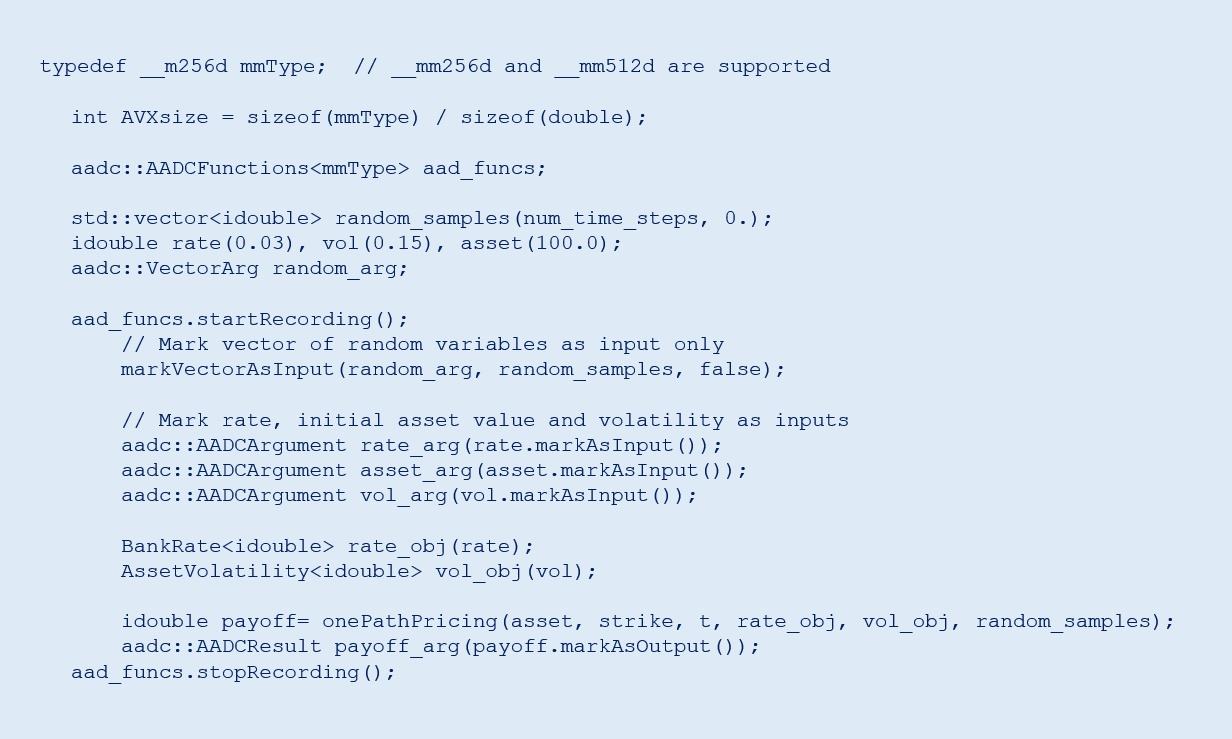

However, this leads to bad performance because the compiler can’t effectively vectorize the code and business objects may contain virtual function calls. Using the AAD runtime compiler, we can run the function, record one random path of asset evolution, and compute option intrinsic value at the expiry.

At this stage, the function object contains compiled, vectorized machine code that replicates valuations to produce the final payoff output value by giving the arbitrary random_samples vector as input. The function object remains constant after recording and requires memory context for execution.

Free Multithreading

Making efficient and safe multithreaded code can be difficult. Notice that the recording happens only for one input sample and can be run in the controlled, stable, single-threaded environment. The resulting recorded function, however, is thread-safe and only needs separate workspace memory allocated for each thread. This is a very attractive property, since it lets us turn non multithread-safe code into something that can be safely executed on multicore systems. Even optimal NUMA memory allocation becomes a trivial task. You can view the full code listing for the multithreaded example in GitHub*.

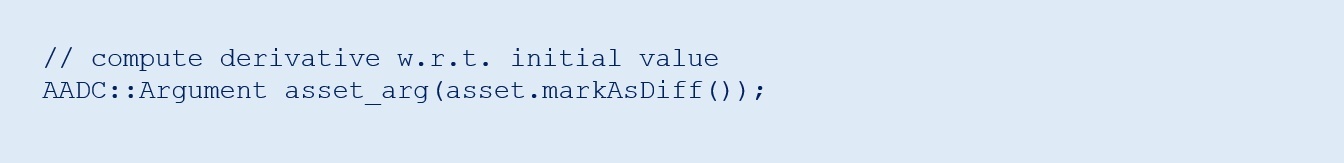

Automatic Differentiation

This technique not only accelerates your function, it can also create an adjoint function to compute derivatives of all inputs concerning all outputs. This process is similar to the back-propagation algorithm used for deep neural network (DNN) training. Unlike DNN training libraries, this approach works for almost any arbitrary C++ code. To record an adjoint function, mark which input variables are required for differentiation.

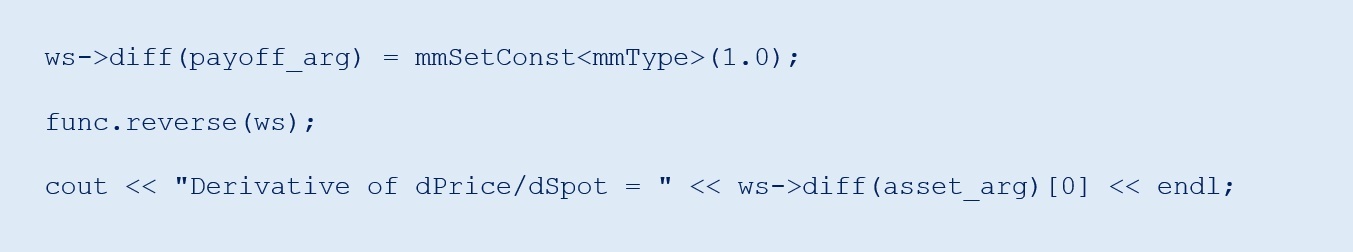

Finally, to run the adjoint function, initialize the gradient values of outputs and call the reverse() method on the function object.

Get Top Performance

Hardware is evolving towards increasing parallelism with more cores, wider vector registers, and accelerators. For object-oriented programmers, it’s hard to adapt single-threaded code to existing parallel methods like OpenMP* and CUDA*. Using the AADC tool from MatLogica, programmers can turn their object-oriented, single-threaded, scalar code into Intel® AVX2 and Intel® AVX-512 vectorized, multithreaded, and thread-safe lambda functions. Crucially, the AAD-C tool can also generate a lambda function for the adjoint method of computing with all required derivatives using the same interface. For more details and a demonstration version of AAD-C, visit MatLogica.

Acknowledgments

Evgeny Lakshtanov is partially supported by Portuguese funds through the Center for Research and Development in Mathematics and Applications (CIDMA) and the Portuguese Foundation for Science and Technology (FCT, Fundação para a Ciência e a Tecnologia), within project UIDP/04106/2020.,

______

You May Also Like

Measure the Impact of NUMA Migrations on Performance

Intel® oneAPI Base Toolkit

Get started with this core set of tools and libraries for developing high-performance, data-centric applications across diverse architectures.

Get It Now

See All Tools