We are introducing the latest work of Intel Graphics Research at SIGGRAPH 2023, the premier conference for computer graphics. We are delighted to bring together two fields known for stunning visual fidelity, connecting neural rendering and real-time path tracing in one unified framework.

Neural rendering techniques have recently revolutionized the quality of appearance representation across a vast range of visual details and scales. Path tracing on the GPU is quickly attaining the ability to dynamically synthesize imagery indistinguishable from real-world perceptions in real time, under a vast range of lighting and shadowing conditions.

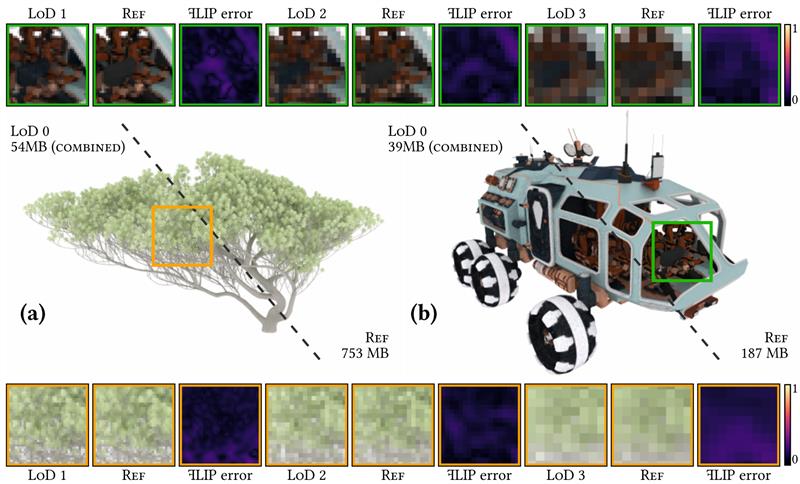

Bay Cedar tree asset from the Moana Island Scene dataset, courtesy of Walt Disney Animation Studios.

Rover asset courtesy of BlendSwap user vajrablue.

Realism by scalable tracing of light travelling through neural representations

Path tracing is an elegant algorithm that can simulate many of the complex ways that light travels and scatters in virtual scenes, just as it would in the real world. With classic computer graphics representations (e.g., triangle meshes), it uses ray tracing to determine visibility between scattering events. Integrating neural objects into a rendered scene requires efficient equivalent operations that determine the space occupied by their neural representations. Previous neural rendering applications commonly use various forms of ray marching for this. Unfortunately, ray marching quickly outgrows feasible frame time budgets when trying to correctly render and anti-alias many higher-resolution neural objects extending into the distance. In our latest work, we introduce a new multi-scale representation for both visibility and appearance. It allows taking larger steps in visibility tests as objects move into the distance, even for highly complex and detailed objects, without sacrificing the accuracy of their realistic appearance.
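To make the idea of distance-dependent step sizes concrete, here is a minimal sketch of a ray-marched visibility test over a multi-scale occupancy pyramid. It is not the representation from our work: the plain voxel sets stand in for the neural representation, and every name in it (`build_occupancy_pyramid`, `select_level`, `march_visibility`, `footprint_rate`) is a hypothetical helper chosen for illustration. It assumes that the pixel footprint grows linearly with distance and that a coarse cell counts as occupied when any fine voxel inside it is occupied.

```python
import math


def build_occupancy_pyramid(occupied_voxels, num_levels):
    """Return one set of occupied cells per level.

    A cell at level k spans 2**k fine voxels per axis and is marked occupied
    if any fine voxel inside it is occupied (a conservative assumption).
    Voxel coordinates are assumed to be non-negative integers.
    """
    return [
        {(x >> level, y >> level, z >> level) for (x, y, z) in occupied_voxels}
        for level in range(num_levels)
    ]


def select_level(distance, footprint_rate, num_levels):
    """Pick the coarsest level whose cell size fits within the pixel footprint.

    footprint_rate is the assumed growth of the pixel footprint, measured in
    fine-voxel units per unit of distance along the ray.
    """
    footprint = distance * footprint_rate
    if footprint <= 1.0:
        return 0
    return min(num_levels - 1, int(math.floor(math.log2(footprint))))


def march_visibility(origin, direction, t_max, pyramid, footprint_rate):
    """March along the ray and return (hit, t, steps).

    The step length equals the cell size of the level selected at the current
    distance, so steps grow as the ray travels farther from its origin.
    Fixed-size steps can skip cells that the ray only clips at a corner; an
    exact grid traversal would be needed to rule that out.
    """
    t = 0.0
    steps = 0
    while t < t_max:
        level = select_level(t, footprint_rate, len(pyramid))
        cell_size = float(1 << level)
        point = [origin[i] + t * direction[i] for i in range(3)]
        cell = tuple(int(math.floor(c / cell_size)) for c in point)
        steps += 1
        if cell in pyramid[level]:
            return True, t, steps
        t += cell_size
    return False, t_max, steps


if __name__ == "__main__":
    # A solid 8x8x8 block of voxels, 64 voxels away along the x axis.
    block = {(x, y, z) for x in range(64, 72) for y in range(8) for z in range(8)}
    pyramid = build_occupancy_pyramid(block, num_levels=4)
    origin, direction = (0.5, 0.5, 0.5), (1.0, 0.0, 0.0)

    # footprint_rate=0.0 keeps the march on the finest level for comparison.
    print(march_visibility(origin, direction, 200.0, pyramid, 0.0))
    print(march_visibility(origin, direction, 200.0, pyramid, 0.125))
```

In this toy setup, both marches find the block at the same distance, but the multi-scale march needs fewer occupancy lookups because it switches to coarser cells as the ray moves away from its origin.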

A versatile toolbox for real-time graphics ready to be unpacked

One of the oldest challenges and a holy grail of rendering is a method with constant rendering cost per pixel, regardless of the underlying scene complexity. While GPUs have allowed for acceleration and efficiency improvements in many parts of rendering algorithms, their limited amount of onboard memory can limit practical rendering of complex scenes, making compact high-detail representations increasingly important.

With our work, we expand the toolbox for compressed storage of high-detail geometry and appearance that integrates seamlessly with classic computer graphics algorithms. We note that neural rendering techniques typically fulfill the goal of uniform convergence, uniform computation, and uniform storage across a wide range of visual complexity and scales. With their good aggregation capabilities, they avoid both unnecessary sampling in pixels with simple content and excessive latency for complex pixels containing, for example, vegetation or hair. Previously, this uniform cost lay outside practical ranges for scalable real-time graphics. With our neural level of detail (LoD) representations, we achieve compression rates of 70–95% compared to classic source representations, while also improving quality over previous work. Frame rates range from interactive to real-time, depending on the distance at which the LoD technique is applied.

The beginning of an exciting journey, as the view on visual AI clears

Our work builds a bridge between fully neural and fully classic representations, used in a single unified path tracer. We provide one example of a classically hard problem that is solved elegantly by neural optimization tools. The utility of such tools is becoming undeniable for many such problems, and their real-time application is becoming technically possible on a growing base of consumer GPUs, so we are confident that this is just the beginning of an exciting journey of discovery. As the software and hardware ecosystem matures, developing neural solutions for real-time graphics and games becomes less of a challenge, with better integration into existing renderers, game engines, and creation workflows. We can't wait for the stunning discoveries to come as developers and researchers throughout the industry become familiar with the new tools. As we keep advancing the platforms for integration of AI and graphics, we expect many more bridges to be built and many more barriers to fall, and we are excited to keep pushing forward together on this journey towards the next level of real-time graphics.