As more users turn to Kubernetes* to manage complex workloads at the network edge, they’re finding its resource allocation model limiting. Because Kubernetes is currently equipped to handle only simple, node-local resources such as RAM and CPU, users can’t easily connect the kind of specialized hardware needed to process those workloads.

Enter Dynamic Resource Allocation (DRA), a new API that offers a more flexible way to describe and manage resources, enabling users to more easily leverage accelerators like GPUs.

Patrick Ohly, an Intel cloud software architect, leads DRA design and implementation efforts. He recently provided an update on the new alpha feature, covering how DRA manages more advanced resources, DRA’s limitations, and what’s needed to move DRA into beta.

What is DRA?

DRA introduces a new API for describing which hardware resources a pod needs, enabling support of more complex accelerators such as:

- Network-attached resources, for example, an IP camera, or devices with different types of interconnects, e.g. Compute Express Link*

- Resources with custom parameters, for instance a GPU that requires string feature flags in addition to RAM and CPU units

- Hardware resources that require the user to provide additional parameters for setup, like an FPGA

- Resources that can be shared between containers and pods

DRA uses Container Device Interface (CDI), a specification for JSON files that container runtimes can read. By introducing an abstract notion of a device as a resource, CDI enables you to set up a new device inside a container without having to modify Kubernetes.
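To make the CDI idea more concrete, here is a minimal sketch, in Python, of what such a JSON file might contain. The vendor `example.com`, the device name `gpu0`, the device path, and the environment variable are all hypothetical. The field layout (`cdiVersion`, `kind`, `devices`, `containerEdits`) follows the CDI specification as we understand it and should be checked against the version your container runtime supports.

```python
import json


def build_cdi_spec(vendor: str, device_class: str, devices: dict) -> dict:
    """Return a CDI-style spec listing each device and the container edits it needs."""
    return {
        "cdiVersion": "0.5.0",  # assumed version; adjust to what the runtime supports
        "kind": f"{vendor}/{device_class}",
        "devices": [
            {
                "name": name,
                "containerEdits": {
                    # Device node to expose inside the container (hypothetical path).
                    "deviceNodes": [{"path": path}],
                    # Hypothetical environment variable announcing the device.
                    "env": [f"EXAMPLE_DEVICE={name}"],
                },
            }
            for name, path in devices.items()
        ],
    }


def qualified_name(spec: dict, device_name: str) -> str:
    """Build the fully qualified device name in the form vendor/class=name."""
    return f"{spec['kind']}={device_name}"


spec = build_cdi_spec("example.com", "gpu", {"gpu0": "/dev/example-gpu0"})
print(json.dumps(spec, indent=2))
print(qualified_name(spec, "gpu0"))
```

A resource driver would write a file like this on the node, and the container runtime would apply the listed edits when it starts a container that requests the qualified device name.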

DRA is not intended to solve all Kubernetes resource issues. Here are three areas DRA does not impact:

- NUMA alignment: The Kubernetes scheduler recognizes whether a node is available to be assigned a pod; it does not manage the individual parts of a node, such as non-uniform memory access (NUMA) alignment.

- Kubelet architecture: While there are efforts to rethink how kubelet is implemented, DRA will not change kubelet’s architecture.

- Existing native and extended resources: DRA is intended for more-complex hardware and will not replace the Kubernetes scheduler or kubelet, which work well for managing single, linear resources such as RAM, CPU, and some extended resources.

Technical Overview

Taking inspiration from volume handling in Kubernetes, DRA’s resource class and resource claim directly correspond to Kubernetes’ storage class and persistent volume claim, respectively.

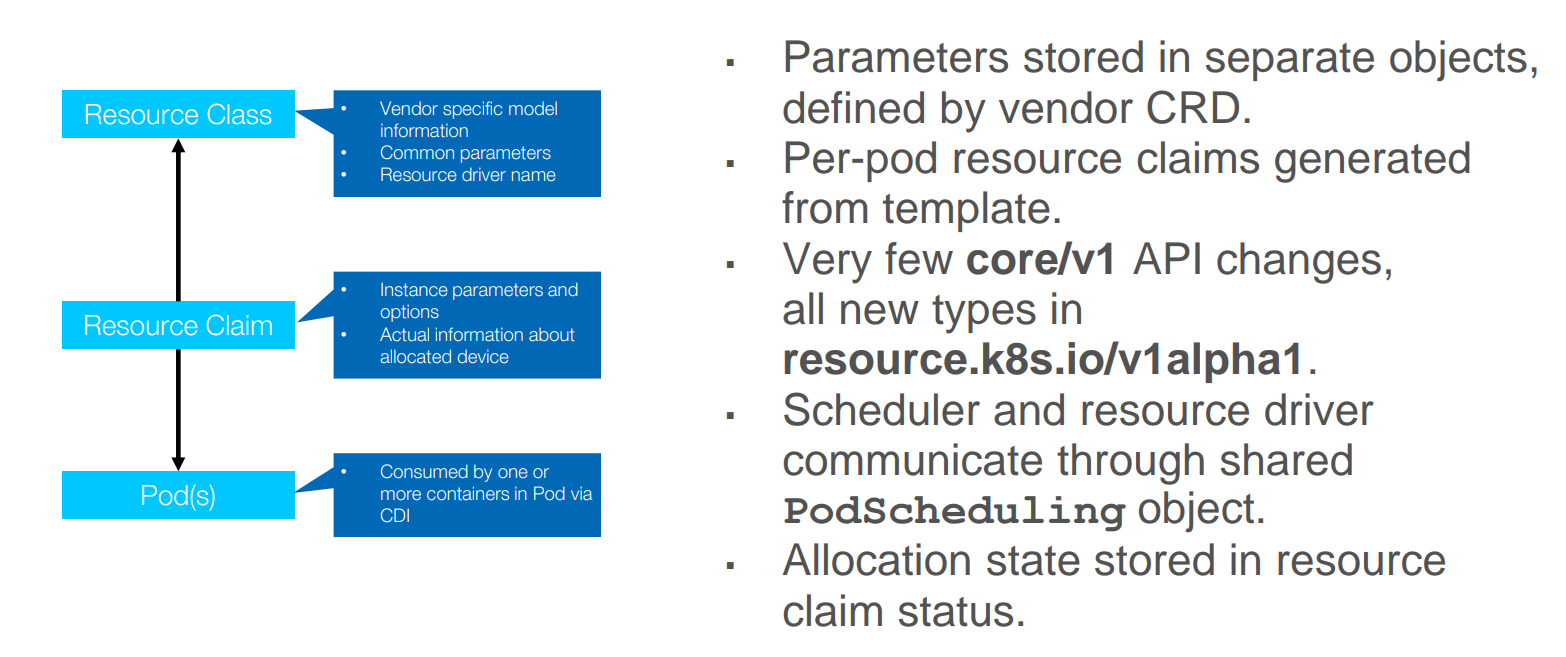

The technical details of DRA fall into three conceptually different levels: resource class, resource claim, and pod.

- Resource class is where the administrator can store privileged parameters and deploy them to a specific resource driver for hardware.

- Resource claim is what the user can use to manually describe a resource, often incorporating the parameters from a custom resource definition provided by the vendor.

- Pods reference resource claims to begin allocation. Multiple pods can reference the same resource claim when the hardware supports sharing; alternatively, a template can be used to generate a separate resource claim for each pod automatically.

This model offers improvements to pod scheduling. Because the Kubernetes scheduler cannot read custom parameters, only CPU and RAM requirements, it sometimes assigns a pod to a node on which it can’t run, causing the pod to become stuck. DRA avoids this issue by ensuring that all required resources are reserved before the pod is scheduled.
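The reserve-before-schedule idea can be illustrated with a toy model. This is not how the Kubernetes scheduler or any resource driver is implemented; the function name, the node names, and the device kinds are hypothetical and exist only to show why a pod is never bound to a node that cannot satisfy its claims.

```python
from typing import Dict, Optional, Set


def reserve_then_schedule(claims: Set[str], free: Dict[str, Set[str]]) -> Optional[str]:
    """Pick the first node that can satisfy every claim and reserve its devices.

    Returns the node name, or None if no node fits. In that case nothing is
    reserved and the pod simply stays pending instead of landing on a node
    where it cannot run.
    """
    for node, devices in free.items():
        if claims <= devices:  # every claimed device kind is free on this node
            devices.difference_update(claims)  # reserve before binding the pod
            return node
    return None


free_devices = {"node-a": set(), "node-b": {"gpu", "fpga"}}
print(reserve_then_schedule({"gpu"}, free_devices))  # node-b has a free GPU
print(reserve_then_schedule({"gpu"}, free_devices))  # the GPU is now reserved
```

The second call returns `None` because the only GPU was reserved by the first pod, so the second pod waits rather than being placed on a node that cannot serve it.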

This example shows how a resource claim is created manually. The pod definition, shown on the left-hand side of the image, lists the resource claims it needs and specifies which of its containers use each one. The resource claim itself has been manually created and named “external-claim.”

In this simple test resource driver demo, a resource claim is manually created.
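For readers without the image, the manual flow can be sketched as two manifests, written here as Python dictionaries and printed as JSON for inspection. Only the claim name `external-claim` comes from the example. The pod name, container name, image, resource class name, and the `resource.k8s.io/v1alpha2` API version are assumptions, and field names have changed between alpha releases, so treat this as illustrative rather than a copy of the demo.

```python
import json

# Manually created claim; "example-class" is a hypothetical resource class.
claim = {
    "apiVersion": "resource.k8s.io/v1alpha2",  # assumed alpha API version
    "kind": "ResourceClaim",
    "metadata": {"name": "external-claim"},
    "spec": {"resourceClassName": "example-class"},
}

# Pod that references the claim and hands it to one container.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "demo-pod"},
    "spec": {
        "resourceClaims": [
            {"name": "resource", "source": {"resourceClaimName": "external-claim"}}
        ],
        "containers": [
            {
                "name": "app",
                "image": "busybox",
                "command": ["sleep", "3600"],
                "resources": {"claims": [{"name": "resource"}]},
            }
        ],
    },
}


def unresolved_claims(pod: dict) -> list:
    """Return claim names used by containers but not declared at pod level."""
    declared = {c["name"] for c in pod["spec"].get("resourceClaims", [])}
    used = [
        c["name"]
        for container in pod["spec"]["containers"]
        for c in container.get("resources", {}).get("claims", [])
    ]
    return [name for name in used if name not in declared]


print(json.dumps(claim, indent=2))
print(json.dumps(pod, indent=2))
print("unresolved:", unresolved_claims(pod))
```

The small check at the end mirrors the two-step reference in the model: the pod declares which claims it needs, and each container then names the pod-level entries it uses.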

Kubernetes Components That Will Be Changed or Added

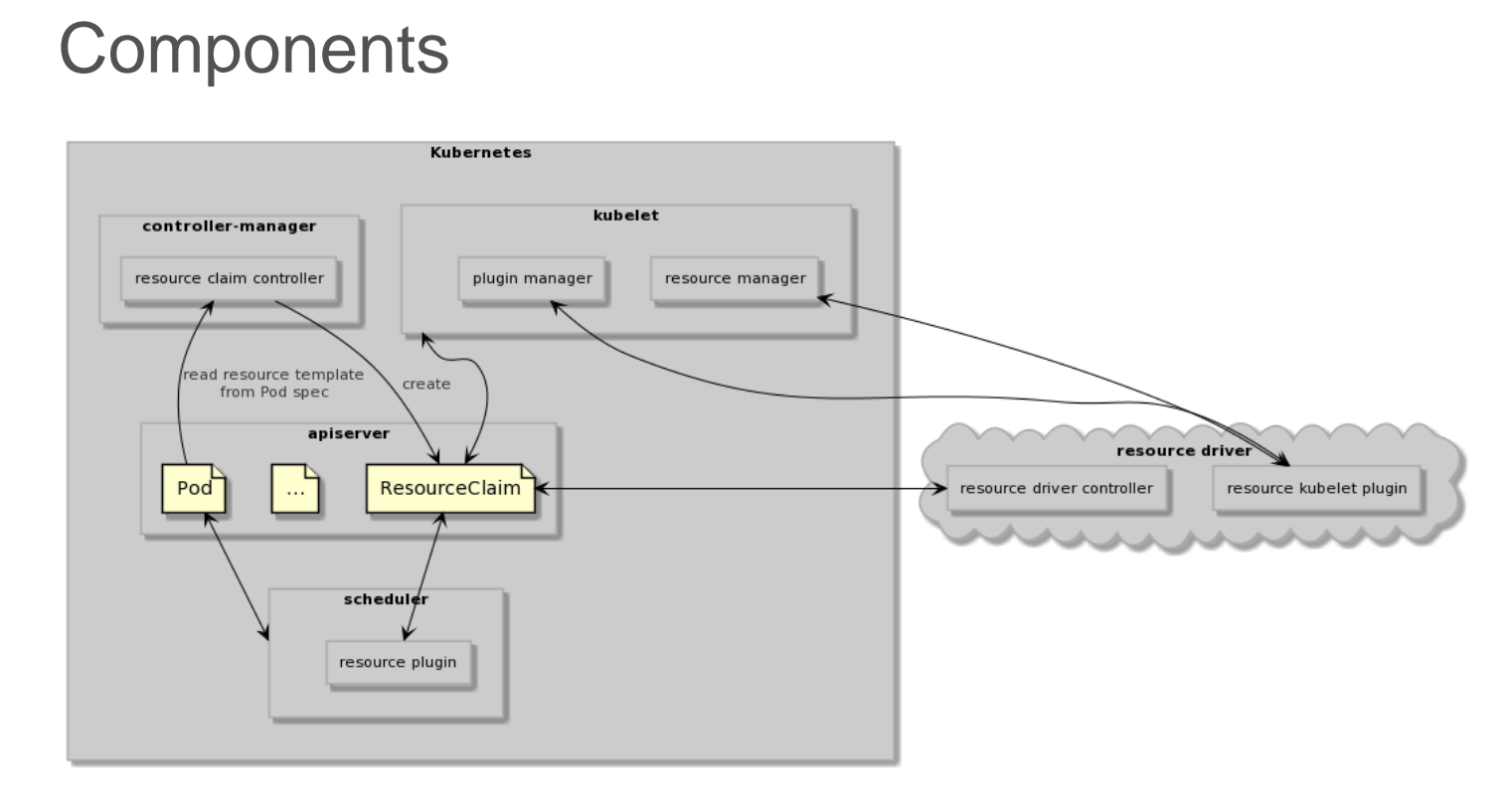

Several Kubernetes components will need to be modified or added.

Most of the new features are implemented through the new DRA API group, but the following Kubernetes components change as well:

- The apiserver will be extended with the new built-in resource claim types.

- A new controller will be added to controller-manager to monitor pods and create a new resource claim for each pod that uses a template.

- The scheduler will be modified to monitor which pods are using cooperative scheduling and determine which nodes might be suitable before the pod’s resources are allocated.
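The template-handling controller described above can be sketched as a small function. This is a simplified model, not the controller-manager code: templates are reduced to a plain mapping from template name to claim spec, and the generated-name pattern (pod name plus the pod-level claim name) is an assumption made for illustration.

```python
import copy
from typing import Dict, List


def claims_from_templates(pod: dict, templates: Dict[str, dict]) -> List[dict]:
    """Create one ResourceClaim per template-backed entry in the pod spec."""
    generated = []
    for entry in pod["spec"].get("resourceClaims", []):
        template_name = entry.get("source", {}).get("resourceClaimTemplateName")
        if template_name is None:
            continue  # entry points at an existing claim; nothing to generate
        generated.append(
            {
                "kind": "ResourceClaim",
                # Assumed naming scheme: <pod name>-<pod-level claim name>.
                "metadata": {"name": f"{pod['metadata']['name']}-{entry['name']}"},
                # Copy so that claims created for different pods stay independent.
                "spec": copy.deepcopy(templates[template_name]),
            }
        )
    return generated


templates = {"gpu-template": {"resourceClassName": "example-class"}}  # hypothetical
pod_a = {
    "metadata": {"name": "pod-a"},
    "spec": {
        "resourceClaims": [
            {"name": "gpu", "source": {"resourceClaimTemplateName": "gpu-template"}},
            {"name": "shared", "source": {"resourceClaimName": "external-claim"}},
        ]
    },
}
for generated_claim in claims_from_templates(pod_a, templates):
    print(generated_claim["metadata"]["name"], generated_claim["spec"])
```

Only the template-backed entry produces a new claim; the entry that names `external-claim` is left alone because that claim already exists and may be shared with other pods.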

The resource driver will need two new components.

- The resource driver controller will interface with the control plane in Kubernetes via the scheduler to determine which resources are available, match them with available resource claims, and update their status when they have been allocated.

- Once a resource has been marked as allocated, the resource kubelet plugin matches the resource with available nodes.

Get Involved

This project has been underway for years, but it’s vitally important that DRA receives interest from the wider community and support from multiple companies to help move DRA into beta.

This work is open to the public. You can follow progress and open pull requests through the SIG Node: Dynamic Resource Allocation project board, join the Kubernetes Slack channel to ask questions, or join discussions about the CDI standard in the Cloud Native Computing Foundation (CNCF) container-orchestrated device working group.

You can also join the weekly meeting (Mondays at 11 a.m. CET) by emailing Patrick Ohly.

About the Presenter

Patrick Ohly is a cloud software architect at Intel. Most recently in his nearly 20-year tenure at Intel, he has led efforts to enable additional hardware in Kubernetes, working with SIG Storage, SIG Instrumentation, SIG Node, and SIG Testing.