Intel continuously works with industry leaders and innovators to optimize the performance of its AI solutions for cutting-edge models. Today, we are excited to announce that Intel AI solutions, from the datacenter to the client and edge, are optimized for the global launch of Qwen2, developed by Alibaba Cloud.

“Today, Alibaba Cloud launched their Qwen2 large language models. Our launch day support provides customers and developers with powerful AI solutions that are optimized for the industry’s latest AI models and software.”

Pallavi Mahajan, Corporate Vice President and General Manager, Datacenter & AI Software, Intel

Peter Chen, Vice President and General Manager, Datacenter & AI China, Intel

Software Optimization

To maximize the efficiency of LLMs such as Alibaba Cloud* Qwen2, a comprehensive suite of software optimizations is essential. These range from high-performance fused kernels to advanced quantization techniques that balance precision and speed. Key-value (KV) caching, PagedAttention, and tensor parallelism further improve inference efficiency. Intel hardware is accelerated by software frameworks and tools such as PyTorch* and Intel® Extension for PyTorch, the OpenVINO™ Toolkit, DeepSpeed*, Hugging Face* libraries, and vLLM, which together deliver optimized LLM inference performance.
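KV caching is the easiest of these optimizations to see in isolation. The sketch below is a minimal, framework-free illustration that assumes a single attention head, toy random weights, and pure-Python lists. The helper names (`KVCache`, `decode_with_cache`, and so on) are hypothetical and do not reflect how PyTorch, Intel Extension for PyTorch, or vLLM implement caching internally. It checks that reusing cached keys and values produces the same outputs as recomputing them for the whole prefix at every decoding step.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def project(x, w):
    """Multiply row vector x (length n) by matrix w (n rows, m columns)."""
    return [sum(xi * w[i][j] for i, xi in enumerate(x)) for j in range(len(w[0]))]


def attend(q, keys, values):
    """Scaled dot-product attention of one query over all keys/values seen so far."""
    scale = math.sqrt(len(q))
    scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
    weights = softmax(scores)
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]


class KVCache:
    """Hypothetical per-head cache of keys and values for tokens already processed."""

    def __init__(self):
        self.keys = []
        self.values = []

    def append(self, k, v):
        self.keys.append(k)
        self.values.append(v)


def decode_with_cache(xs, wq, wk, wv):
    """Autoregressive decoding: only the newest token's K/V are computed per step."""
    cache = KVCache()
    outputs = []
    for x in xs:
        cache.append(project(x, wk), project(x, wv))
        outputs.append(attend(project(x, wq), cache.keys, cache.values))
    return outputs


def decode_without_cache(xs, wq, wk, wv):
    """Baseline: recompute K/V for the entire prefix at every step."""
    outputs = []
    for t in range(len(xs)):
        keys = [project(x, wk) for x in xs[: t + 1]]
        values = [project(x, wv) for x in xs[: t + 1]]
        outputs.append(attend(project(xs[t], wq), keys, values))
    return outputs


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
d = 4  # toy hidden size
wq, wk, wv = (random_matrix(d, d, rng) for _ in range(3))
tokens = random_matrix(6, d, rng)  # six toy token embeddings

cached = decode_with_cache(tokens, wq, wk, wv)
full = decode_without_cache(tokens, wq, wk, wv)
```

With the cache, step t computes one key/value projection instead of t + 1 while producing the same outputs. The trade-off is cache memory that grows with sequence length, which PagedAttention (used in vLLM) manages by allocating the cache in fixed-size, non-contiguous blocks.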

Alibaba Cloud and Intel collaborate on AI software for datacenter, client, and edge platforms, fostering an environment that drives innovation; examples include ModelScope, Alibaba Cloud PAI, and OpenVINO. As a result, Alibaba Cloud's AI models can be optimized across a range of computing environments.

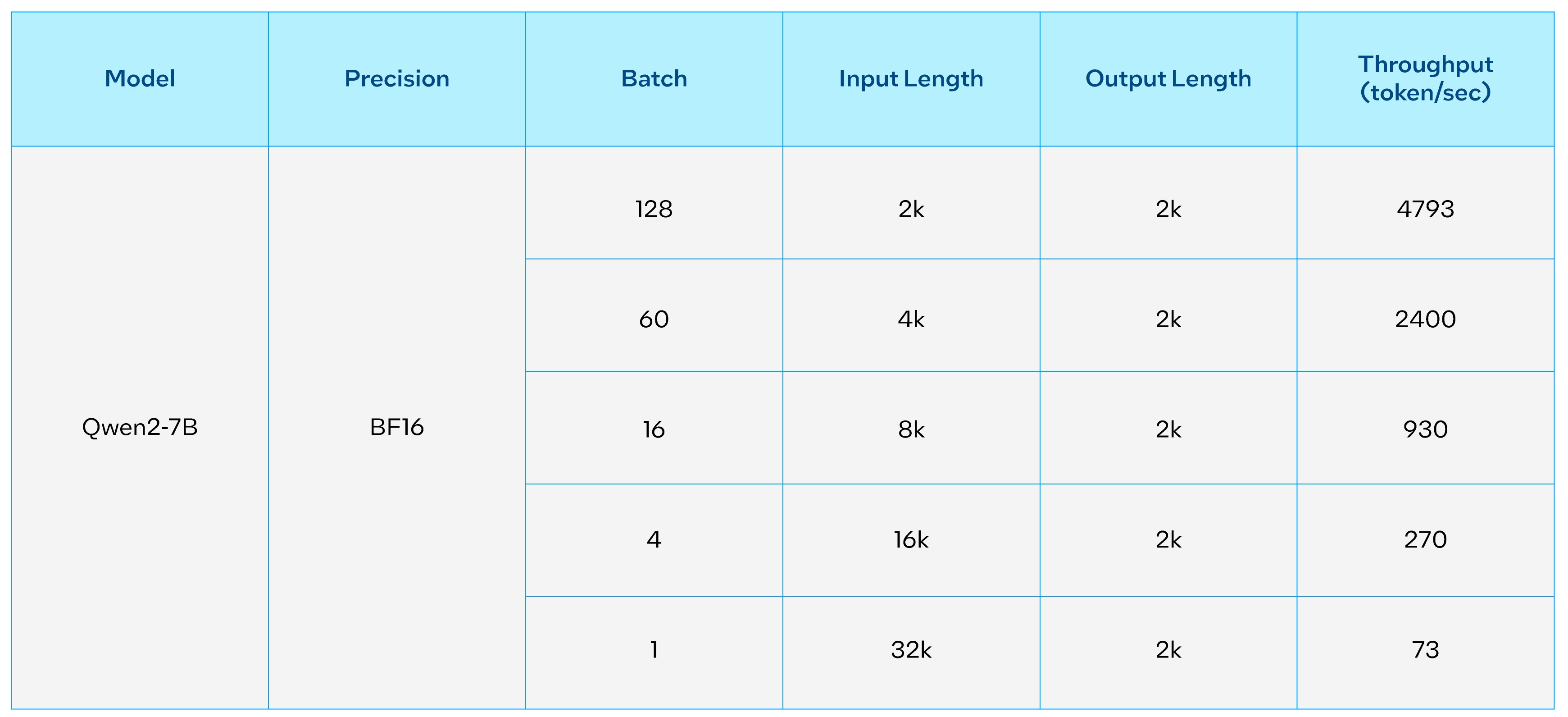

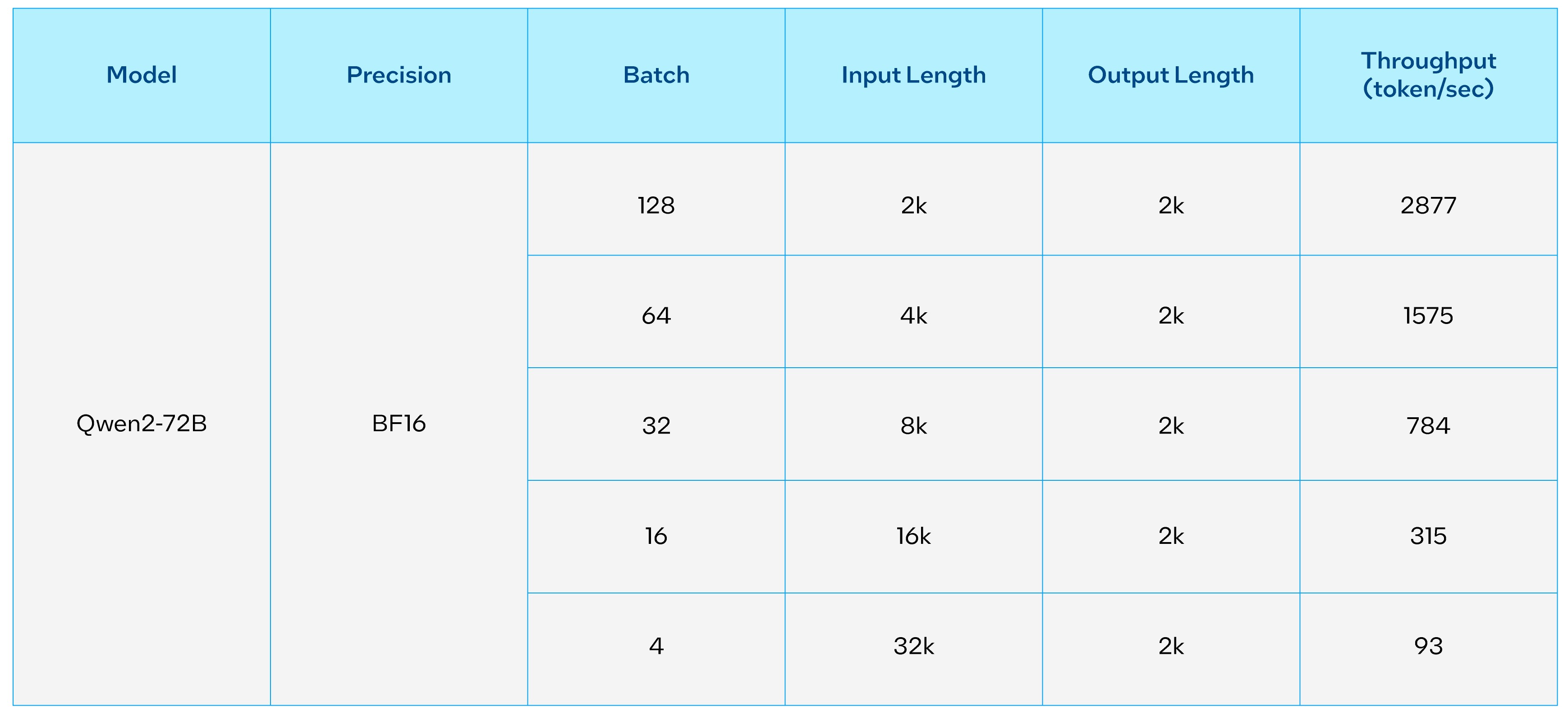

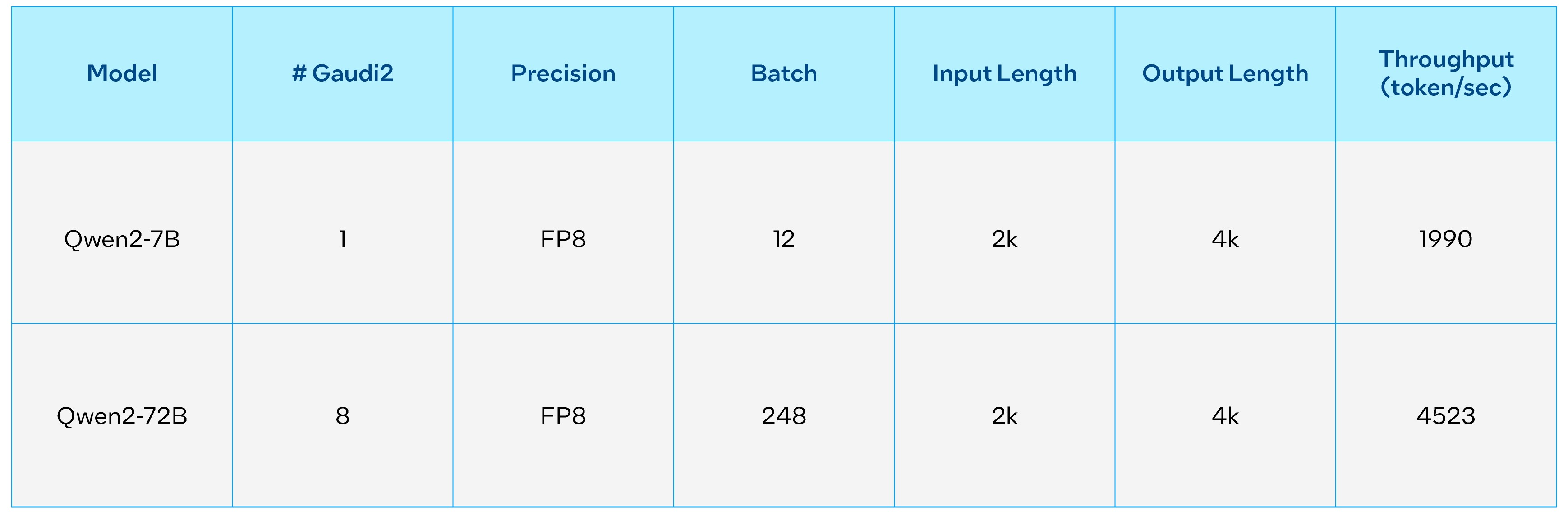

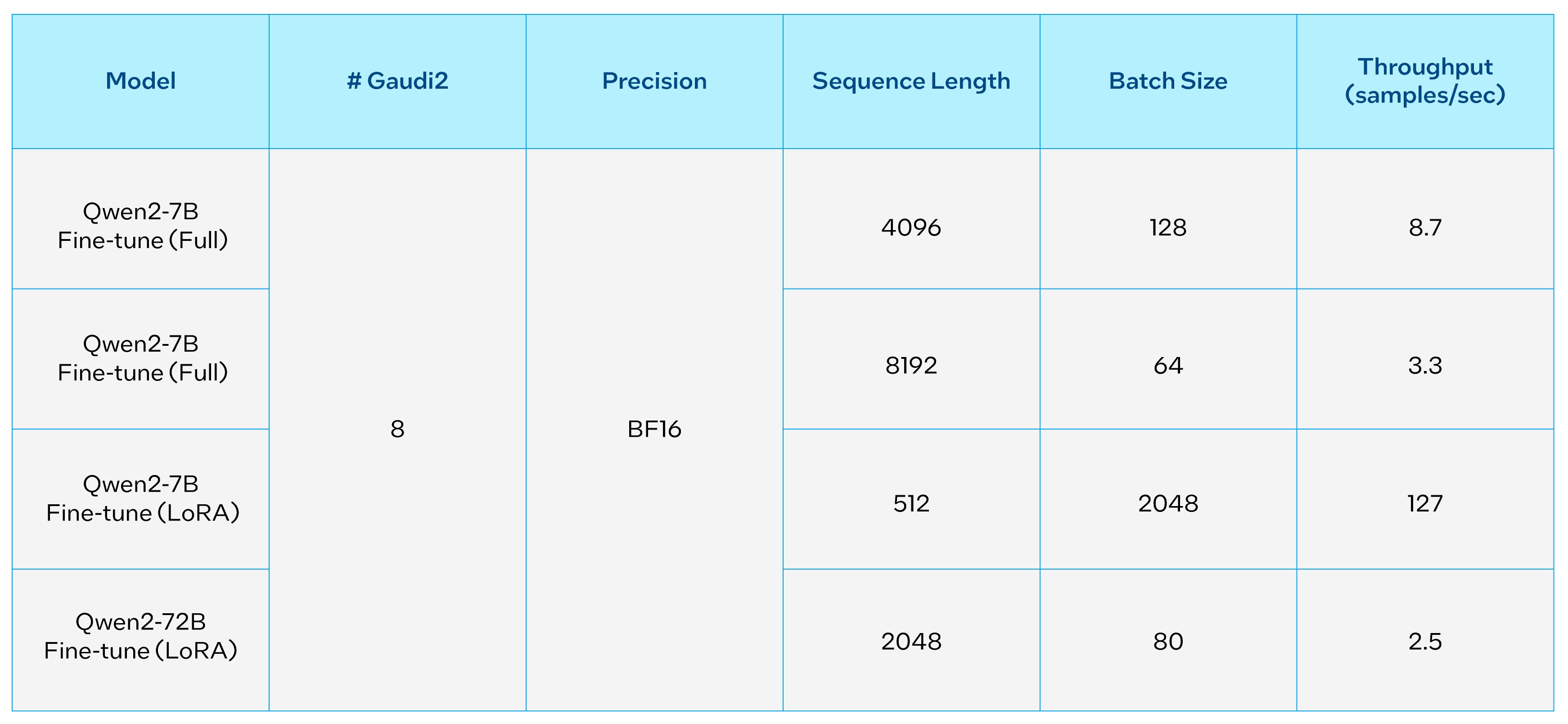

Benchmarking of Intel® Gaudi® AI Accelerators

The Intel® Gaudi® AI accelerators are designed for high-performance acceleration of generative AI and LLMs. With the latest version of Optimum for Intel Gaudi, new LLMs can be deployed easily. We benchmarked inference and fine-tuning throughput for the Qwen2 7B and 72B parameter models on Intel Gaudi 2. The performance metrics are detailed below.

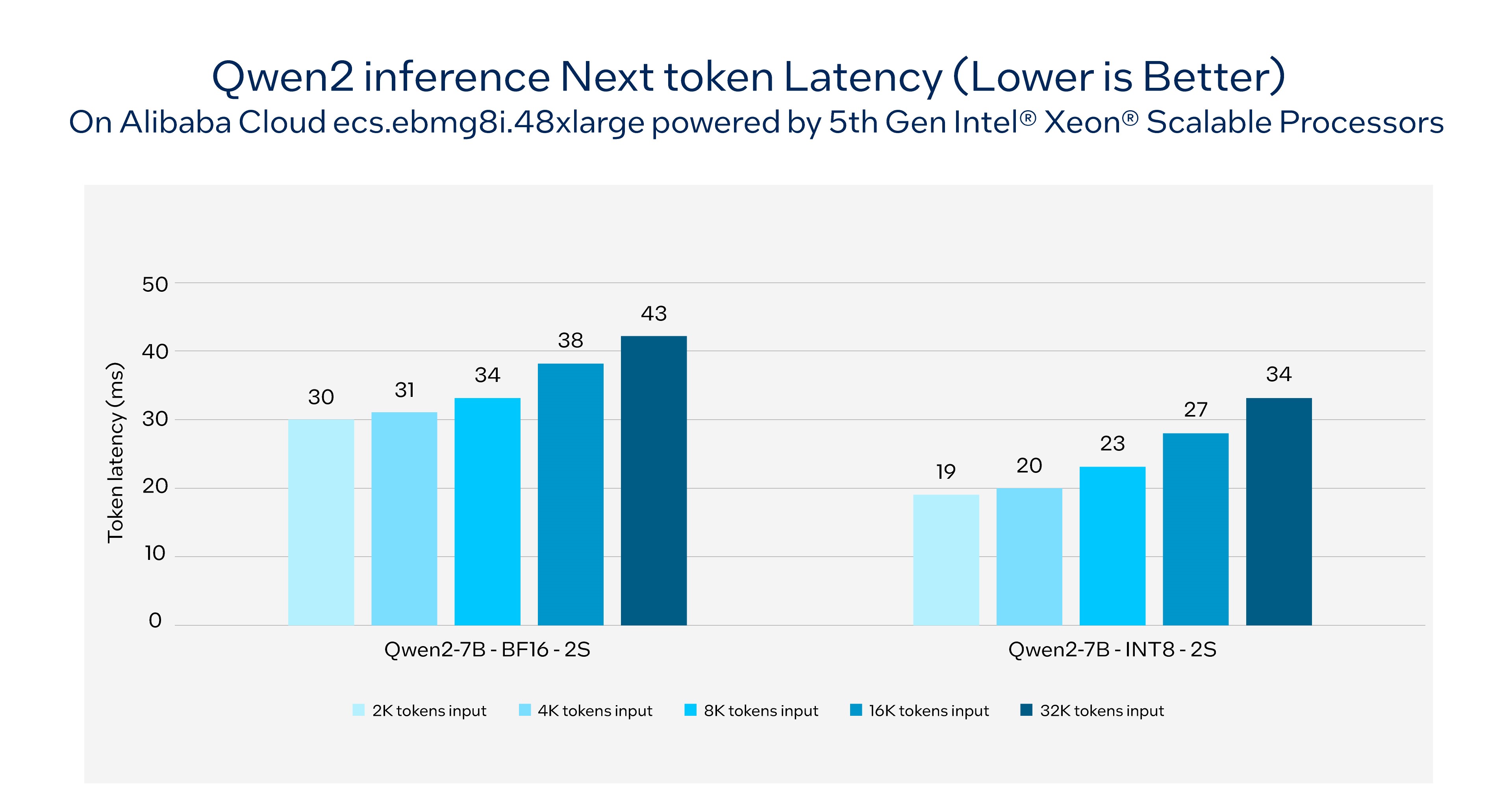

Benchmarking of Intel® Xeon® Processors

Intel® Xeon® processors are the ubiquitous backbone of general compute, offering easy access to powerful computing resources across the globe. They are widely available in data centers of all sizes, making them an ideal choice for organizations that want to deploy AI solutions quickly without specialized infrastructure. Each core of 4th and 5th Gen Intel Xeon Scalable processors includes Intel® Advanced Matrix Extensions (Intel® AMX), a built-in accelerator that speeds up AI inference across a wide spectrum of workloads. Figure 1 shows Intel Xeon delivering latency that meets the requirements of multiple production use cases.

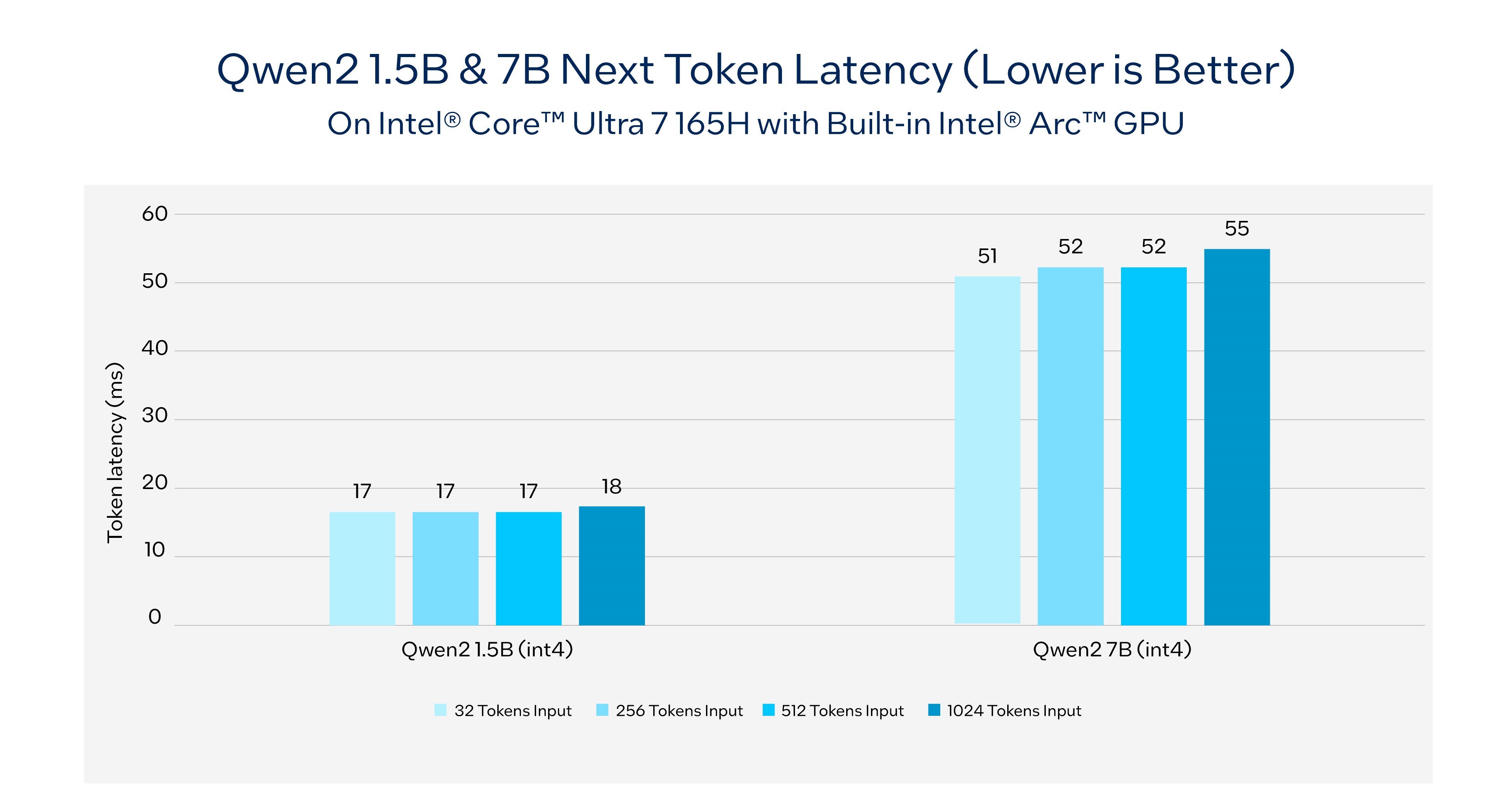

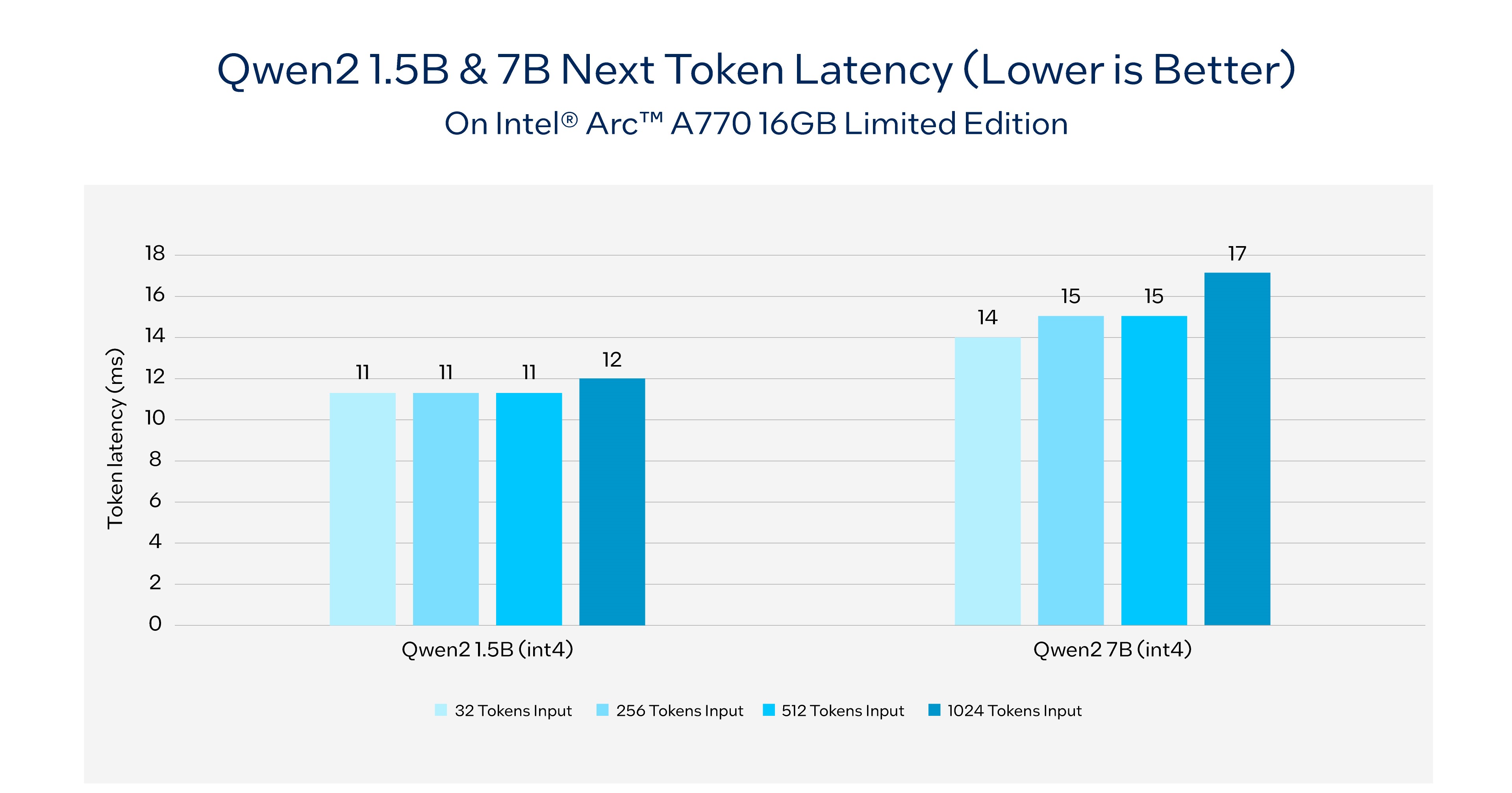

AI PCs

AI PCs powered by the latest Intel® Core™ processors and Intel® Arc™ graphics bring the power of AI to the client and edge, enabling developers to deploy LLMs locally. To handle demanding AI tasks at the edge, AI PCs are equipped with specialized AI hardware such as Neural Processing Units (NPUs) and, where available, built-in Arc™ GPUs, or Intel® Arc™ A-Series Graphics with Intel® Xᵉ Matrix Extensions (Intel® XMX) acceleration. This local processing enables personalized AI experiences, enhanced privacy, and fast response times, which are critical for interactive applications.

See below for performance results and a demo of Qwen2 1.5B running on Intel® Core™ Ultra-based AI PCs available in the market today.

Get Started

Here are the resources for getting started with Intel AI solutions.

- Quick start on Gaudi2

- PyTorch Get Started on Intel Xeon

- PyTorch Get Started on Intel GPUs

- OpenVINO Get Started example for Qwen2 (for AI PCs, Arc GPUs, and Intel Xeon)

Product and Performance Information

Intel Gaudi 2 AI Accelerator: Measurement on System HLS-Gaudi2 with eight Habana Gaudi2 HL-225H Mezzanine cards, two Intel® Xeon® Platinum 8380 CPUs @ 2.30GHz, and 1TB of system memory. Common software: Ubuntu 22.04, Habana Synapse AI 1.15.1. PyTorch: models run with PyTorch v2.2.0 use this Docker image. Environment: these workloads are run using the Docker images running directly on the host OS. Performance was measured on June 5, 2024.

Intel Xeon Processor: Measurement on 5th Gen Intel® Xeon® Scalable processor (formerly codenamed: Emerald Rapids) using: 2x Intel® Xeon® Platinum 8575C, 48 cores, HT On, Turbo On, NUMA 2, 1024GB (16x64GB DDR5 5600 MT/s [5600 MT/s]), BIOS 3.0.ES.AL.P.087.05, microcode 0x21000200, Alibaba Cloud Elastic Block Storage 1TB, Alibaba Cloud Linux 3, 5.10.134-16.1.al8.x86_64, Models run with PyTorch v2.3 and IPEX. Test by Intel on June 4, 2024. Repository here.

Intel® Core™ Ultra: Measurement on a Microsoft Surface Laptop 6 with Intel Core Ultra 7 165H platform using 32GB LP5x 7467MHz total memory, Intel graphics driver 101.5534, Windows* 11 Pro version 22631.3447, Performance power policy, and core isolation enabled. Intel® Arc™ graphics only available on select H-series Intel® Core™ Ultra processor-powered systems with at least 16GB of system memory in a dual-channel configuration. OEM enablement required; check with OEM or retailer for system configuration details. Test by Intel on June 4, 2024. Repository here.

Intel® Arc™ A-Series Graphics: Measurement on Intel Arc A770 16GB Limited Edition graphics using Intel Core i9-14900K, ASUS ROG MAXIMUS Z790 HERO motherboard, 32GB (2x 16GB) DDR5 5600MHz and Corsair MP600 Pro XT 4TB NVMe SSD. Software configurations include Intel graphics driver 101.5534, Windows 11 Pro version 22631.3447, Performance power policy, and core isolation disabled. Test by Intel on June 4, 2024. Repository here.

Notices & Disclaimers

Performance varies by use, configuration and other factors. Learn more on the Performance Index site. Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. See backup for configuration details. No product or component can be absolutely secure. Your costs and results may vary. Intel technologies may require enabled hardware, software or service activation.

Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.

AI disclaimer:

AI features may require software purchase, subscription or enablement by a software or platform provider, or may have specific configuration or compatibility requirements. Details at www.intel.com/AIPC. Results may vary.