Abstract

High Dynamic Range (HDR) technology brings natural scenes to life with vivid colors, keeps content visible even under challenging lighting conditions, and provides higher contrast, thereby allowing better artistic representation. This paper introduces HDR and explains how to deliver it in media delivery solutions on Intel® Graphics via the Intel® Video Processing Library (VPL).

As video technology evolves from high definition (HD) to ultra-high definition (UHD), new characteristics such as higher resolution, higher frame rate, and higher dynamic range (HDR) are refining video. A marked improvement over high-definition video technology, HDR increases perceived quality by letting each pixel better represent the full range of brightness we see. This lets the viewer see a more realistic image and have an immersive visual experience.

Intel Video Processing Library (VPL) is a public programming interface which delivers fast, high-quality, real-time video decoding, encoding, transcoding, and processing on CPUs, GPUs, and other accelerators for broadcasting, live streaming and VOD, cloud gaming, and more. This paper explains the powerful advantages of HDR and shows how we use VPL to deliver these advantages.

Generally speaking, HDR technology (e.g., HDR video recording and HDR TV display) is a combination of several components: High Dynamic Range, Wide Color Gamut, Higher Bit-Depth (10/12-bit sampling), and OETF/EOTF Transfer Functions. Together, these components improve each pixel: they bring natural scenes to life with vivid colors, enable higher contrast for better artistic representation, and keep content visible even under challenging lighting conditions.

High Dynamic Range

The dynamic range of video refers to its luminance range, that is, the span between its minimum and maximum levels. Luminance is measured in candelas per square meter (cd/m²), also called "nits". The human eye can adapt to an enormous range of light intensity levels; it can detect a luminance range from 10⁻⁶ cd/m² to 10⁸ cd/m². Conventional video, now referred to as Standard Dynamic Range (SDR), supports luminance values only in the range of 0.01 to 100 cd/m². High Dynamic Range is the capability to represent a large luminance variation in the video signal, i.e., from very dark values (less than 0.01 cd/m²) to very bright values (10000 cd/m²). HDR creates brighter whites, darker blacks, and brighter colors that better match the images we see in the real world. The enhanced image quality due to HDR is appreciable under virtually any viewing condition.

Figure 1 – Luminance in Nits HDR VS SDR
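To put the luminance figures above on a common scale, the ranges can be expressed in stops (doublings of luminance). The following is a minimal sketch; the function name is illustrative, and using 0.01 cd/m² as the HDR lower bound is a conservative assumption, since the text only says HDR reaches below that value.

```python
import math

def dynamic_range_stops(min_nits: float, max_nits: float) -> float:
    """Return the luminance range in stops (each stop is a doubling)."""
    if min_nits <= 0 or max_nits <= min_nits:
        raise ValueError("expected 0 < min_nits < max_nits")
    return math.log2(max_nits / min_nits)

# SDR range quoted in the text: 0.01 to 100 cd/m2.
sdr_stops = dynamic_range_stops(0.01, 100.0)
# HDR range: assumed lower bound of 0.01 cd/m2, upper bound of 10000 cd/m2.
hdr_stops = dynamic_range_stops(0.01, 10000.0)

print(f"SDR: {sdr_stops:.1f} stops, HDR: {hdr_stops:.1f} stops")
```

Under these assumptions the HDR range is roughly six to seven stops wider than the SDR range.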

Wide Color Gamut

Wide Color Gamut (WCG) is the capability to represent a wider range of colors than has previously been supported. Traditional video has been based on the Rec.709 color space, which captures only a relatively small percentage (35.9%) of all visible chromaticity values, according to CIE 1931. Wider color spaces, such as Rec.2020, can represent a much larger percentage of visible chromaticities (~75.8%).

Figure 2 – Wider Color Gamut HDR vs SDR

Higher Bit-Depth

Conventional video uses 8-bit sample precision, which does not provide sufficient brightness or color levels and can produce artifacts such as visible banding in the picture. This limitation is exacerbated with WCG and HDR. Higher sample precision, such as 10-bit or 12-bit, represents more colors and brightness levels and greatly improves the ability to represent smooth transitions between hues or brightness levels, which reduces banding.
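The effect of sample precision on banding can be illustrated by quantizing a subtle gradient at different bit depths and counting how many distinct levels survive. This is a sketch only; the gradient (5% of the signal range, such as a patch of sky) and the helper names are assumptions made for illustration.

```python
def code_values(bit_depth: int) -> int:
    """Number of distinct code values available at a given bit depth."""
    return 1 << bit_depth

def quantize(value: float, bit_depth: int) -> float:
    """Quantize a normalized [0, 1] sample to the given bit depth."""
    max_code = code_values(bit_depth) - 1
    return round(value * max_code) / max_code

def distinct_levels(samples, bit_depth: int) -> int:
    """Count how many different quantized levels a set of samples uses."""
    return len({quantize(s, bit_depth) for s in samples})

# A smooth ramp that covers only 5% of the signal range.
ramp = [0.50 + 0.05 * i / 999 for i in range(1000)]

for bits in (8, 10, 12):
    print(f"{bits}-bit: {code_values(bits)} code values, "
          f"{distinct_levels(ramp, bits)} levels across the ramp")
```

Fewer levels across the same ramp means larger steps between neighboring shades, which is what the viewer perceives as banding.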

OETF/EOTF Transfer Function

The OETF (Opto-Electronic Transfer Function) describes how the optical signal is translated into an electrical signal (voltage) on the capture side (e.g., in a camera), and the EOTF (Electro-Optical Transfer Function) describes how the electrical signal is translated back into an optical signal on the display side. These transfer functions are based on gamma and power functions, logarithmic functions, and perceptual models that mimic the non-linear behavior of the human visual system. Examples of OETF/EOTF transfer functions are Standard Gamma (the transfer function for Rec.709/Rec.601), Hybrid Log-Gamma (HLG, developed by the BBC and NHK), and Perceptual Quantizer (PQ, SMPTE ST.2084).
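As an illustration of two of the transfer functions named above, the sketch below implements the Rec.709 gamma OETF and the HLG OETF. The constants are the ones commonly published for ITU-R BT.709 and ITU-R BT.2100 and should be checked against those documents; the function names are illustrative. Inputs are normalized scene light in [0, 1].

```python
import math

def bt709_oetf(scene_light: float) -> float:
    """Rec.709 gamma OETF: linear segment near black, power law above."""
    if scene_light < 0.018:
        return 4.5 * scene_light
    return 1.099 * scene_light ** 0.45 - 0.099

# HLG constants as published in ITU-R BT.2100 (b and c derive from a).
HLG_A = 0.17883277
HLG_B = 1 - 4 * HLG_A
HLG_C = 0.5 - HLG_A * math.log(4 * HLG_A)

def hlg_oetf(scene_light: float) -> float:
    """HLG OETF: square-root segment for dark tones, logarithmic above."""
    if scene_light <= 1 / 12:
        return math.sqrt(3 * scene_light)
    return HLG_A * math.log(12 * scene_light - HLG_B) + HLG_C

for light in (0.0, 0.01, 1 / 12, 0.5, 1.0):
    print(f"E={light:.4f}  Rec.709={bt709_oetf(light):.4f}  HLG={hlg_oetf(light):.4f}")
```

The lower part of the HLG curve follows a square-root (gamma-like) shape, which is why HLG is generally described as backward compatible with SDR displays.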

HDR vs SDR Comparison

Table 1 compares HDR with SDR.

| Component | Standard Dynamic Range | High Dynamic Range |
| --- | --- | --- |
| Luminance range | Up to 100 nits | Up to 10000 nits |
| Color Gamut | Rec. 709 in general | DCI-P3, Rec. 2020 in general |
| Bit Depth | Usually 8-bit | 10-bit, 12-bit |
| Transfer Function | Standard Gamma | PQ ST2084, HLG |

Table 1 - HDR vs SDR comparison

Considerable effort is going into defining new standards for delivering HDR content. The following sections give an overview of the standards used in HDR technology.

SMPTE ST.2084

SMPTE ST.2084: High Dynamic Range Electro-Optical Transfer Function of Mastering Reference Displays

This standard specifies an EOTF characterizing high-dynamic-range reference displays used primarily for mastering non-broadcast content. This standard also specifies an Inverse-EOTF derived from the EOTF.
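The EOTF and inverse EOTF described above are often referred to as the PQ curve. The following sketch uses the constants commonly published for SMPTE ST.2084; they should be verified against the standard itself, and the function names are illustrative rather than part of any library.

```python
# PQ constants as commonly published for SMPTE ST.2084.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0

def pq_eotf(signal: float) -> float:
    """Non-linear PQ signal in [0, 1] -> display luminance in cd/m2."""
    p = signal ** (1 / M2)
    return PEAK_NITS * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)

def pq_inverse_eotf(nits: float) -> float:
    """Display luminance in cd/m2 -> non-linear PQ signal in [0, 1]."""
    y = (nits / PEAK_NITS) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2

for nits in (0.01, 100.0, 1000.0, 10000.0):
    signal = pq_inverse_eotf(nits)
    print(f"{nits:>8} cd/m2 -> PQ signal {signal:.4f} -> {pq_eotf(signal):.2f} cd/m2")
```

Note that roughly half of the PQ signal range is spent on luminance below 100 cd/m², which reflects the perceptual model behind the curve.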

SMPTE ST.2086

SMPTE ST.2086: Mastering Display Color Volume Metadata Supporting High Luminance and Wide Color Gamut Images

This standard specifies the metadata items that describe the color volume (the color primaries, white point, and luminance range) of the display that was used in mastering video content. The metadata is specified as a set of values independent of any specific digital representation.
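Because the standard is independent of any digital representation, each codec defines its own integer coding for these values. The sketch below assumes the scaling used by the HEVC mastering display colour volume SEI message (chromaticity in increments of 0.00002, luminance in increments of 0.0001 cd/m²); other carriers may use different units, so check the relevant specification. The class, the field names, and the 1000-nit example display are hypothetical.

```python
from dataclasses import dataclass

CHROMA_STEPS = 50000  # assumed coding: increments of 0.00002
LUMA_STEPS = 10000    # assumed coding: increments of 0.0001 cd/m2

@dataclass(frozen=True)
class MasteringDisplay:
    primaries: dict      # name -> (x, y) CIE 1931 chromaticity
    white_point: tuple   # (x, y) CIE 1931 chromaticity
    max_nits: float
    min_nits: float

def encode_xy(xy):
    """Convert an (x, y) chromaticity pair to integer units."""
    return tuple(round(v * CHROMA_STEPS) for v in xy)

def to_integer_units(md: MasteringDisplay) -> dict:
    """Convert floating-point mastering metadata to integer units."""
    return {
        "primaries": {name: encode_xy(xy) for name, xy in md.primaries.items()},
        "white_point": encode_xy(md.white_point),
        "max_luminance": round(md.max_nits * LUMA_STEPS),
        "min_luminance": round(md.min_nits * LUMA_STEPS),
    }

# Hypothetical mastering display: Rec.2020 primaries, D65 white, 0.005 to 1000 nits.
bt2020_1000nit = MasteringDisplay(
    primaries={"red": (0.708, 0.292), "green": (0.170, 0.797), "blue": (0.131, 0.046)},
    white_point=(0.3127, 0.3290),
    max_nits=1000.0,
    min_nits=0.005,
)
encoded = to_integer_units(bt2020_1000nit)
print(encoded)
```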

SMPTE ST.2094

SMPTE ST.2094: Dynamic Metadata for Color Volume Transform

The color transformation process can be optimized through the use of content dependent, dynamic color transform metadata rather than using only display color volume metadata.

HDR Coding

Signaling for SMPTE ST.2084 and ST.2086 was added to HEVC in a 2014 extension update, carried as supplemental enhancement information (SEI), so this information is included in the compressed HEVC stream. The more recent AV1 codec carries it as well.

HDR Types

There is no single, final definition of HDR types. Two formats are widely used today: HDR10 and Dolby Vision. In addition, the newer HDR10+ format adds dynamic metadata to the HDR10 standard while staying royalty-free. The BBC (UK) and NHK (Japan) have jointly proposed the HLG HDR type, which is optimized for real-time broadcast production and is also royalty-free. UVA (UHD Video Alliance in China) has proposed HDR Vivid, a royalty-free type aimed mainly at China. The following table compares a few parameters of these HDR types.

| Types | Transfer Function | Metadata | Max Luminance |
| --- | --- | --- | --- |
| HDR10 | ST2084 | Static | Typically up to 4000 nits |
| HDR10+ | ST2084 | Dynamic | Up to 10000 nits |
| Dolby Vision | ST2084 | Dynamic | Up to 10000 nits |
| HLG | Hybrid Log Gamma (HLG) | N/A | N/A |
| HDR Vivid | ST2084 or HLG | Dynamic | Up to 10000 nits |

Table 2 – HDR types comparison

Overview

Intel® Video Processing Library (VPL) is a programming interface for video decoding, encoding, and processing that is used to build portable media pipelines on CPUs, GPUs, and other accelerators. The VPL API is used to develop quality, performant video applications that can leverage Intel® hardware accelerators. It provides device discovery and selection for media-centric and video analytics workloads, and API primitives for zero-copy buffer sharing. VPL is backward-compatible with Intel® Media SDK and cross-architecture compatible to ensure optimal execution on current and next-generation hardware without source code changes. For more details, please visit Intel® Video Processing Library. The following diagram shows where VPL sits in the Intel® Graphics Stack (Linux*).

Figure 3 - Intel® software stack to build media pipelines on an Intel® GPU (Linux)

HDR in VPL

As introduced in the previous section, HDR technology combines the following components: high luminance dynamic range, wide color gamut, high bit depth, and OETF/EOTF transfer functions. To support HDR signaling, the HEVC and AV1 video specifications add several supplemental enhancement information (SEI) messages that describe these components (for SEI details, please see the HEVC and AV1 specifications). VPL defines a few structures that map to these video specification (HEVC/AV1) semantics.

- API structure mfxExtContentLightLevelInfo indicates the content light level for HDR and maps to the video specification (HEVC/AV1) semantics.

- API structure mfxExtMasteringDisplayColourVolume indicates the mastering display colour volume for HDR and maps to the video specification (HEVC/AV1) semantics.

- API structure mfxExtVideoSignalInfo indicates the video signal information and maps to the video specification (HEVC/AV1) semantics. The application needs to program the correct colour primaries and transfer function for HDR.

- API structure mfxExtVPP3DLut is used for LUT-based HDR Tone Mapping.
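To make the content light level in the list above more concrete, the sketch below computes the two values usually associated with it: the maximum content light level (MaxCLL) and the maximum frame-average light level (MaxFALL). It follows the commonly cited definition based on the largest of R, G, and B per pixel in linear light. The frame representation and function name are assumptions, and how the results are placed into mfxExtContentLightLevelInfo should be taken from the VPL specification.

```python
def content_light_levels(frames):
    """Return (MaxCLL, MaxFALL) in cd/m2 for an iterable of frames.

    Each frame is a sequence of (R, G, B) tuples holding linear-light
    values in cd/m2 (an assumed, simplified frame representation).
    """
    max_cll = 0.0
    max_fall = 0.0
    for frame in frames:
        # Per-pixel light level: the brightest of the three components.
        peaks = [max(pixel) for pixel in frame]
        if not peaks:
            continue
        max_cll = max(max_cll, max(peaks))
        max_fall = max(max_fall, sum(peaks) / len(peaks))
    return round(max_cll), round(max_fall)

# Two tiny hypothetical frames of two pixels each.
frames = [
    [(100.0, 50.0, 20.0), (400.0, 300.0, 900.0)],
    [(1000.0, 10.0, 10.0), (20.0, 20.0, 20.0)],
]
max_cll, max_fall = content_light_levels(frames)
print(f"MaxCLL={max_cll} cd/m2, MaxFALL={max_fall} cd/m2")
```

In practice these values are normally produced during mastering and simply passed through by a transcoder; recomputing them is only needed when the pixel data changes.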

HDR Transcoding

Here, HDR transcoding covers three scenarios: HDR input stream -> HDR output stream, HDR input stream -> SDR output stream, and SDR input stream -> HDR output stream.

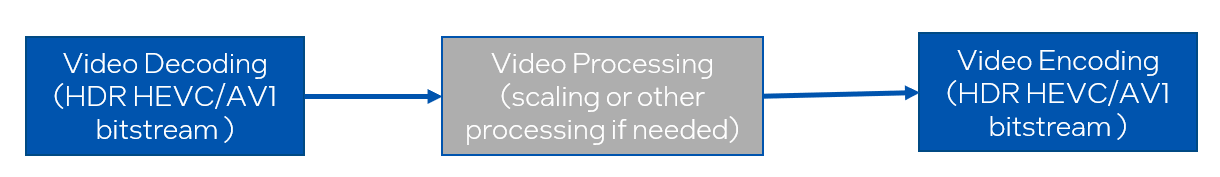

HDR->HDR Transcoding

HDR->HDR transcoding includes HDR decoding and HDR encoding. In this scenario, video processing is optional and depends on the need (e.g., scaling may be needed). HDR decoding and encoding are described in more detail in the sections that follow.

Figure 4 – HDR->HDR transcoding

HDR->SDR Transcoding

HDR->SDR transcoding includes HDR decoding, HDR Tone Mapping, and SDR encoding. In this scenario, HDR Tone Mapping is required; other video processing features are optional and depend on the need (e.g., scaling may be needed). HDR decoding and tone mapping are described in more detail in the sections that follow.

Figure 5 – HDR->SDR transcoding

SDR->HDR Transcoding

SDR->HDR transcoding includes SDR decoding, HDR Tone Mapping, and HDR encoding. In this scenario, HDR Tone Mapping is required; other video processing features are optional and depend on the need (e.g., scaling may be needed). HDR encoding and tone mapping are described in more detail in the sections that follow.

Figure 6 – SDR->HDR transcoding
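The three scenarios above differ only in whether a tone mapping stage sits between decode and encode. A minimal sketch of that decision logic follows; the function and stage names are hypothetical and are not VPL API calls.

```python
def plan_transcode(src: str, dst: str, scale: bool = False) -> list:
    """List the pipeline stages for a transcode between dynamic ranges.

    src and dst are "HDR" or "SDR"; scale requests optional scaling.
    """
    for name in (src, dst):
        if name not in ("HDR", "SDR"):
            raise ValueError(f"unknown dynamic range: {name!r}")
    stages = [f"{src} decode"]
    if src != dst:
        # Tone mapping is required whenever the dynamic range changes.
        stages.append(f"tone mapping ({src}->{dst})")
    if scale:
        # Other video processing, such as scaling, is optional.
        stages.append("scaling")
    stages.append(f"{dst} encode")
    return stages

for src, dst in (("HDR", "HDR"), ("HDR", "SDR"), ("SDR", "HDR")):
    print(f"{src}->{dst}:", " -> ".join(plan_transcode(src, dst)))
```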

HDR Decoding

VPL provides HDR decoding functionality. For HEVC/AV1 HDR decoding, the application should extract the HDR SEI information from the bitstream via the VPL API. Please see the API structures mfxExtContentLightLevelInfo, mfxExtMasteringDisplayColourVolume, and mfxExtVideoSignalInfo for more details.

HDR Encoding

VPL provides HDR encoding functionality. For HEVC/AV1 HDR encoding, the application should add the HDR SEI information to the bitstream via the VPL API. Please see the API structures mfxExtContentLightLevelInfo, mfxExtMasteringDisplayColourVolume, and mfxExtVideoSignalInfo for more details. The application should pay particular attention to mfxExtVideoSignalInfo and set the correct "ColourPrimaries" and "TransferCharacteristics" (for example, Rec.2020 and SMPTE ST.2084).
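The colour primaries and transfer characteristics are signaled as integer code points. The sketch below lists the values that HEVC and AV1 share through ITU-T H.273 for the formats discussed in this paper; treat the table as a convenience and confirm the values against the specification. The helper function is hypothetical, and "MatrixCoefficients" is included as an assumption because it is usually set together with the other two fields.

```python
# Code points as defined in ITU-T H.273 (verify against the specification).
COLOUR_PRIMARIES = {"BT.709": 1, "BT.2020": 9}
TRANSFER_CHARACTERISTICS = {"BT.709": 1, "PQ": 16, "HLG": 18}
MATRIX_COEFFICIENTS = {"BT.709": 1, "BT.2020-NCL": 9}

def signal_info(primaries: str, transfer: str, matrix: str) -> dict:
    """Build the colour description values for a given video format."""
    return {
        "ColourPrimaries": COLOUR_PRIMARIES[primaries],
        "TransferCharacteristics": TRANSFER_CHARACTERISTICS[transfer],
        "MatrixCoefficients": MATRIX_COEFFICIENTS[matrix],
    }

hdr10 = signal_info("BT.2020", "PQ", "BT.2020-NCL")
hlg = signal_info("BT.2020", "HLG", "BT.2020-NCL")
sdr = signal_info("BT.709", "BT.709", "BT.709")
print("HDR10:", hdr10)
print("HLG:  ", hlg)
print("SDR:  ", sdr)
```

An encoder that leaves these at SDR defaults still produces a decodable stream, but displays may then interpret the HDR content with the wrong transfer function.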

HDR Tone Mapping

Tone mapping is a technique used in image processing to map one set of colors to another. Correct tone mapping is necessary for a good user experience (please see the comparison in Figure 7a and 7b). Currently, VPL provides 2 approaches for HDR Tone Mapping.

Figures 7a & 7b – with correct tone mapping (7a) & without correct tone mapping (7b)

Look-Up Table (LUT)-Based HDR Tone Mapping

This approach is flexible: the application can customize the tone mapping effect through the look-up table that it supplies. However, this approach requires the application developer to have image-processing knowledge and expertise. Let's take HLG input -> SDR output as an example to explain how it works.

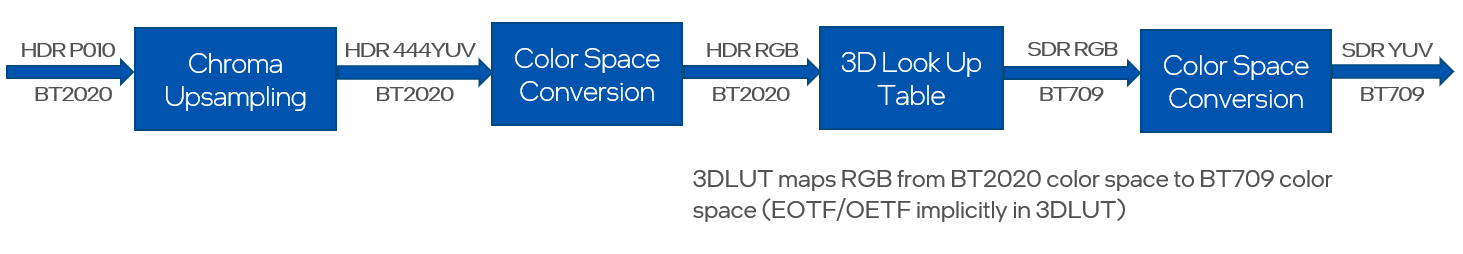

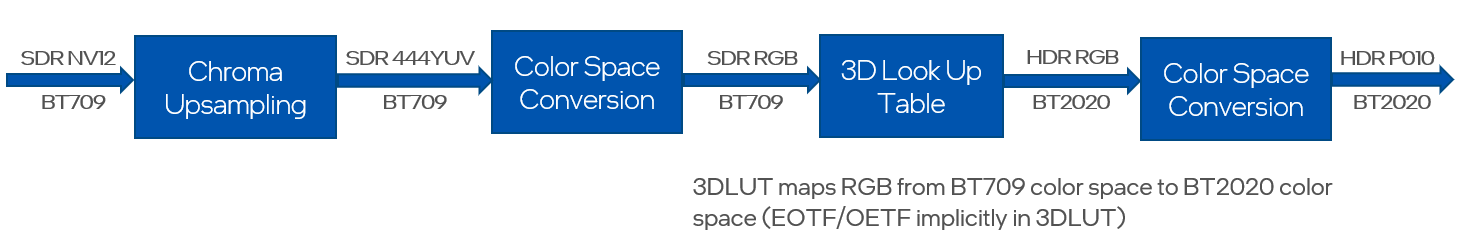

As the HDR Transcoding section introduced, there are two typical conversions: HDR input signal -> SDR output signal and SDR input signal -> HDR output signal. Take an HDR signal in P010 format and an SDR signal in NV12 format as the example to explain how to use LUT-based Tone Mapping.

Figure 8 shows the detailed steps of HDR->SDR Tone Mapping. All of these steps can be completed in a single VPP API call, MFXVideoVPP_RunFrameVPPAsync, but the application needs to program the correct input and output (in this case, HDR P010 BT2020 input and SDR NV12 BT709 output) and the 3DLUT parameters (video-processing-3dlut). In this pipeline, VPL adds Chroma Upsampling and Color Space Conversion automatically when it builds the data-processing pipeline, so the application does not need to handle them.

Figure 8 – HDR->SDR Tone Mapping
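To illustrate what the application-supplied table contains, the sketch below builds a small 3D LUT that maps PQ-coded HDR values to gamma-coded SDR values and samples it with trilinear interpolation. It is a simplified illustration under several assumptions: the tone curve (an extended Reinhard curve with a 1000-nit peak), the 2.2 output gamma, and the 17-point grid are arbitrary choices; no BT.2020 to BT.709 gamut conversion is included; and the in-memory layout that mfxExtVPP3DLut expects is not reproduced here.

```python
M1, M2 = 2610 / 16384, 2523 / 4096 * 128
C1, C2, C3 = 3424 / 4096, 2413 / 4096 * 32, 2392 / 4096 * 32

def pq_to_nits(signal):
    """PQ signal in [0, 1] -> luminance in cd/m2 (ST.2084 constants)."""
    p = signal ** (1 / M2)
    return 10000.0 * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)

def tone_map(nits, sdr_white=100.0, hdr_peak=1000.0):
    """Illustrative extended Reinhard curve; hdr_peak maps to 1.0."""
    x = min(nits, hdr_peak) / sdr_white
    x_max = hdr_peak / sdr_white
    return x * (1 + x / (x_max * x_max)) / (1 + x)

def hdr_code_to_sdr_code(signal):
    """PQ code value -> SDR code value with an assumed 2.2 gamma."""
    return tone_map(pq_to_nits(signal)) ** (1 / 2.2)

def build_lut(size):
    """Build lut[r][g][b] -> (R', G', B') on a size^3 grid."""
    curve = [hdr_code_to_sdr_code(i / (size - 1)) for i in range(size)]
    return [[[(curve[r], curve[g], curve[b]) for b in range(size)]
             for g in range(size)] for r in range(size)]

def sample_lut(lut, rgb):
    """Look up an RGB triple in [0, 1] using trilinear interpolation."""
    size = len(lut)
    pos = [min(max(c, 0.0), 1.0) * (size - 1) for c in rgb]
    lo = [min(int(p), size - 2) for p in pos]
    frac = [p - l for p, l in zip(pos, lo)]
    out = [0.0, 0.0, 0.0]
    for dr in (0, 1):
        for dg in (0, 1):
            for db in (0, 1):
                weight = ((frac[0] if dr else 1 - frac[0])
                          * (frac[1] if dg else 1 - frac[1])
                          * (frac[2] if db else 1 - frac[2]))
                entry = lut[lo[0] + dr][lo[1] + dg][lo[2] + db]
                for c in range(3):
                    out[c] += weight * entry[c]
    return tuple(out)

lut = build_lut(17)
print(sample_lut(lut, (0.58, 0.50, 0.42)))
```

Because this toy mapping treats each channel independently, it could be expressed with 1D curves; a 3D table becomes necessary once the mapping mixes channels, as gamut conversion does.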

Figure 9 shows the detailed steps of SDR->HDR Tone Mapping. As with HDR->SDR Tone Mapping, the application needs to program the correct input and output (in this case, SDR NV12 BT709 input and HDR P010 BT2020 output) and the 3DLUT parameters (video-processing-3dlut).

Figure 9 – SDR->HDR Tone Mapping

Regarding 3DLUT-based Tone Mapping, we investigated the 3DLUT mapping accuracy. With a 65^3 (65x65x65) LUT, the delta E is below 2, which preserves color accuracy.
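For reference, delta E is a distance between two colors in a perceptual color space. The sketch below uses the simplest variant, CIE76, which is the Euclidean distance in CIELAB. The text does not state which delta E formula was used in the investigation, so this choice, the function names, and the sample colors are assumptions.

```python
import math

def delta_e_cie76(lab1, lab2) -> float:
    """CIE76 color difference: Euclidean distance between (L*, a*, b*)."""
    return math.dist(lab1, lab2)

def within_tolerance(lab_reference, lab_result, threshold=2.0) -> bool:
    """Check a mapped color against the delta E < 2 target from the text."""
    return delta_e_cie76(lab_reference, lab_result) < threshold

reference = (50.0, 10.0, -20.0)   # hypothetical exact result
from_lut = (50.5, 10.8, -19.4)    # hypothetical LUT-interpolated result
print(f"delta E = {delta_e_cie76(reference, from_lut):.2f}, "
      f"ok = {within_tolerance(reference, from_lut)}")
```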

HDR Metadata-Based HDR Tone Mapping

VPL also provides HDR metadata-based Tone Mapping. In this approach, the application only needs to program the HDR metadata, as described in the HDR Tone Mapping section of the programming guide. Currently, this approach supports only HDR10 to SDR conversion.

Sample Code

For HDR transcoding sample code, please visit sample_multi_transcode.

Intel Video Processing Library provides the open programming interface for fast, high-quality, real-time HDR decoding, tone mapping, and encoding which delivers the HDR advantages. With VPL, the application developer can develop quality, performant video applications that can leverage Intel hardware accelerators.

- VPL Specification

- Introduction to delta E

- CIE system

- SMPTE ST.2084, SMPTE ST.2086, SMPTE ST.2094

No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document.

Intel disclaims all express and implied warranties, including without limitation, the implied warranties of merchantability, fitness for a particular purpose, and non-infringement, as well as any warranty arising from course of performance, course of dealing, or usage in trade.

This document contains information on products, services and/or processes in development. All information provided here is subject to change without notice. Contact your Intel representative to obtain the latest forecast, schedule, specifications, and roadmaps.

The products described in this document may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request.
