Sometimes, the Compiler Can Help You Write Faster Code

Mayank Tiwari, Technical Consulting Engineer, and Rama Malladi, Graphics Performance Modeling Engineer, Intel Corporation

Improving the performance of an application typically takes two types of optimizations: algorithmic and architectural.

Most programmers are familiar with algorithmic optimizations, which are taught in computer science programs. But architectural optimizations aren't as straightforward. They require knowledge of the underlying architecture—plus support from tools like compilers, profilers, and libraries—to use the hardware as efficiently as possible. Modern compilers are powerful and can perform a lot of code transformations and optimizations to improve application performance. They can also give programmers useful feedback to tune the code for additional performance.

In this article, we'll discuss one such feature in the Intel® C, C++, and Fortran Compilers known as Optimization Reports. Each report is a text file generated alongside the compiled object/binary file that can give insights into code generated by the compiler. The report includes information on loop transformations, vectorization, inlining, and other optimizations.

Generating the Optimization Report

To generate the optimization report in Linux and macOS, use the compiler option -qopt-report[=n]. In Windows, use /Qopt-report[:n]. The n is optional and indicates the level of detail in the report. Valid values are 0 (no report) through 5 (detailed). For levels n=1 through n=5, each level includes all the information of the previous level, and potentially some additional information. We'll discuss reports generated using level n=5 and only focus on loop optimizations performed by Intel C and C++ compilers. (In future articles, we'll discuss other opt-report topics.)

Loop Transformations

Loops are one of the most important control flow statements in programming languages. They're often great targets for optimization, since a small optimization can impact a large number of instructions and improve overall performance. Compilers specifically target loops with transformations like loop unrolling, loop fusion, and loop interchange. You can see all these transformations in the compiler reports and use that feedback to guide the compiler toward better performance.

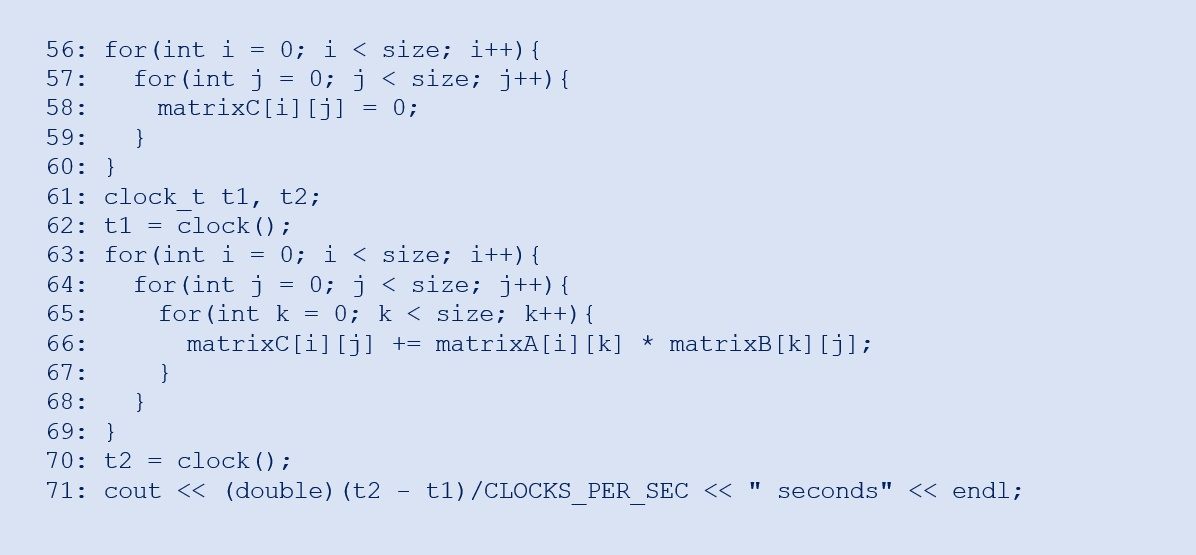

Figure 1 shows a matrix multiplication code snippet with matrixA, matrixB, and matrixC of dimension (size * size). For illustration, size is set to 256 and matrixA and matrixB are populated with random elements between 1 and 10. To begin, we compile this example using Intel® C++ Compiler 2020.
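For readers who can't see the figure, a minimal sketch of such a kernel might look like the following. The function names `fill_random` and `multiply_ijk`, the flat row-major `std::vector<double>` storage, and the explicit seed are assumptions made for illustration. The line numbers quoted from the optimization report later in this article refer to the authors' original source file, not to this sketch.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical helper: build a size x size matrix filled with random
// integer values between 1 and 10 (stored as doubles).
std::vector<double> fill_random(std::size_t size, unsigned seed) {
    std::mt19937 gen(seed);
    std::uniform_int_distribution<int> dist(1, 10);
    std::vector<double> m(size * size);
    for (double& x : m) x = dist(gen);
    return m;
}

// Naive i-j-k matrix multiplication: matrixC = matrixA * matrixB.
// All matrices are size x size, stored row-major in flat vectors.
void multiply_ijk(const std::vector<double>& matrixA,
                  const std::vector<double>& matrixB,
                  std::vector<double>& matrixC,
                  std::size_t size) {
    assert(matrixA.size() == size * size);
    assert(matrixB.size() == size * size);
    assert(matrixC.size() == size * size);

    // Clear the result matrix before accumulating into it.
    for (std::size_t i = 0; i < size; ++i)
        for (std::size_t j = 0; j < size; ++j)
            matrixC[i * size + j] = 0.0;

    // Innermost loop walks a row of matrixA and a column of matrixB.
    for (std::size_t i = 0; i < size; ++i)
        for (std::size_t j = 0; j < size; ++j)
            for (std::size_t k = 0; k < size; ++k)
                matrixC[i * size + j] +=
                    matrixA[i * size + k] * matrixB[k * size + j];
}
```

With `size` set to 256, calling `fill_random(256, seed)` twice and passing the results to `multiply_ijk` gives a workload comparable in shape to the one described here, although timings will differ from the authors' measurements.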

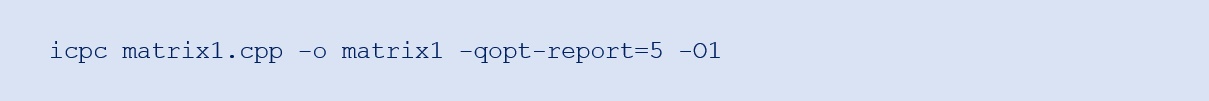

Windows:

Linux:

The generated binary was named matrix1 and the corresponding optimization report matrix1.optrpt, which includes all the information provided by the compiler as part of the optimization report at level n=5 and at optimization level O1.

Figure 1. Matrix multiplication code

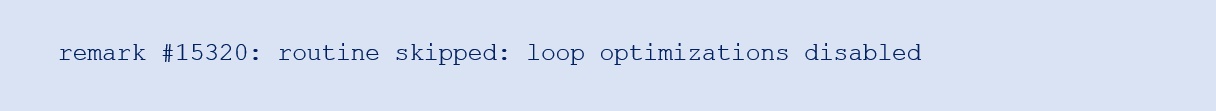

Since this is the first compiler optimization report we've generated, we'll break it up into parts to understand it. The very first thing we see in the optimization report is the compiler version and compiler options set:

This is followed by some inlining metrics (which we'll cover in future articles). In the section Report from: Loop nest, Vector & Auto-parallelization optimizations [loop, vec, par] we can review the loop optimizations done by the compiler. Since we compiled our code with the /O1 option, which tells the compiler to disable all loop-related optimizations, the optimization report shows this message:

The rest of the optimization report contains details on code generation (CG) optimizations, inlining, interprocedural optimizations (IPO), and offload (if any).

We encourage you to run the code snippet in Figure 1 and measure the execution time. On our test system, we observed approximately 15 ms using the /O1 compiler option. Now let's try the default compiler option /O2.

Windows:

Linux:

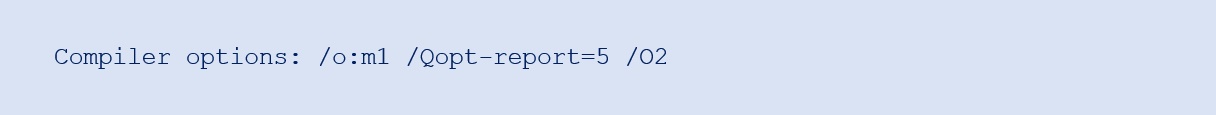

With /O2, the optimization report gets updated with some extra information. The very first change we can see is the updated compiler options listing, which is now /O2:

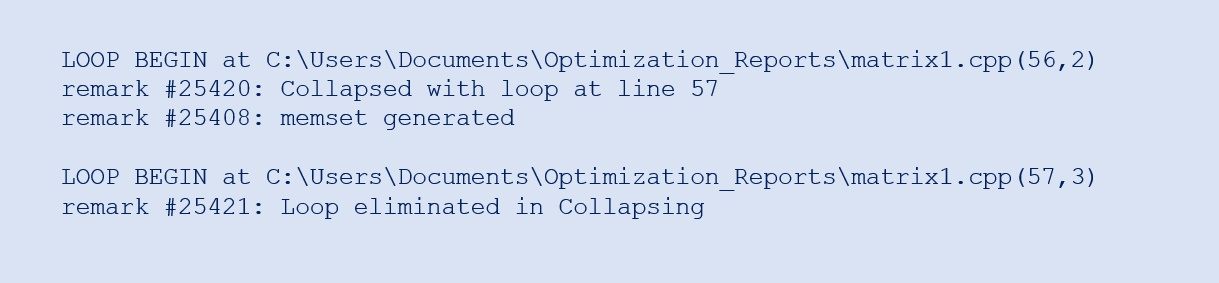

Another big change in the report is the inclusion of the loop optimization report. For example, the loops at lines 56 and 57 are collapsed/fused and replaced with a call to memset() that sets matrixC to zero. memset() is much more efficient than the for loop, since it uses vector or rep string instructions to initialize the memory.
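As a rough illustration of this remark, the two functions below zero a matrix in the two ways being compared. The names `zero_with_loops` and `zero_with_memset` and the `kSize` constant are assumptions for this sketch. The compiler performs the replacement internally, so you would not normally need to write the second form by hand.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>

constexpr std::size_t kSize = 256;  // assumed dimension, matching size = 256

// Element-by-element initialization, as it is typically written in source.
void zero_with_loops(double (*matrixC)[kSize]) {
    assert(matrixC != nullptr);
    for (std::size_t i = 0; i < kSize; ++i)
        for (std::size_t j = 0; j < kSize; ++j)
            matrixC[i][j] = 0.0;
}

// What the collapsed loop nest amounts to: a single call over the whole block.
// This is valid because the rows are contiguous in memory and an all-zero
// bit pattern represents 0.0 for IEEE 754 doubles.
void zero_with_memset(double (*matrixC)[kSize]) {
    assert(matrixC != nullptr);
    std::memset(matrixC, 0, kSize * kSize * sizeof(double));
}
```

Both functions leave the matrix in the same state; the second simply hands the whole job to a library routine that is already tuned for the target processor.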

If we scroll further, we see the following optimization remark for the outermost loop at line 63:

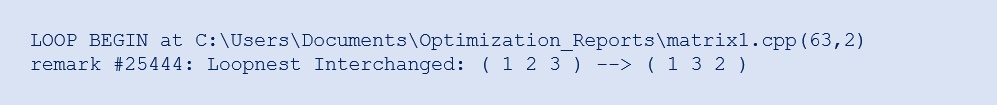

This optimization remark shows a very clever loop transformation by the compiler. Called loop interchange or loop-nest interchange, this optimization exchanges the order of two loops in a nest, for example an inner loop with an outer loop. With a loop variable used as an index into an array, this transformation can, in some cases, improve the locality of reference (cache hits). The remark ( 1 2 3 ) --> ( 1 3 2 ) conveys that the compiler changed the nesting order of the loops at lines 63, 64, and 65 to 63, 65, and 64. This means that matrix multiplication is now being computed as shown in Figure 2.
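In source form, the interchanged nest might look like the sketch below. The function name `multiply_ikj` and the flat row-major storage are assumptions; the loop order follows the ( 1 3 2 ) permutation reported in the remark.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// i-k-j ordering: the two inner loops of an i-j-k nest are swapped.
// The innermost loop now walks matrixB and matrixC along a row (unit stride)
// instead of striding down a column of matrixB.
void multiply_ikj(const std::vector<double>& matrixA,
                  const std::vector<double>& matrixB,
                  std::vector<double>& matrixC,
                  std::size_t size) {
    assert(matrixA.size() == size * size);
    assert(matrixB.size() == size * size);
    assert(matrixC.size() == size * size);

    for (std::size_t i = 0; i < size; ++i)
        for (std::size_t j = 0; j < size; ++j)
            matrixC[i * size + j] = 0.0;

    for (std::size_t i = 0; i < size; ++i) {            // loop 1
        for (std::size_t k = 0; k < size; ++k) {        // loop 3 (was innermost)
            const double a_ik = matrixA[i * size + k];  // invariant in the j loop
            for (std::size_t j = 0; j < size; ++j)      // loop 2 (now innermost)
                matrixC[i * size + j] += a_ik * matrixB[k * size + j];
        }
    }
}
```

Each element of matrixC still accumulates its products in the same k order, so the result matches the original nest; only the memory access pattern changes.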

Figure 2. Matrix multiplication code after loop interchange applied by the compiler

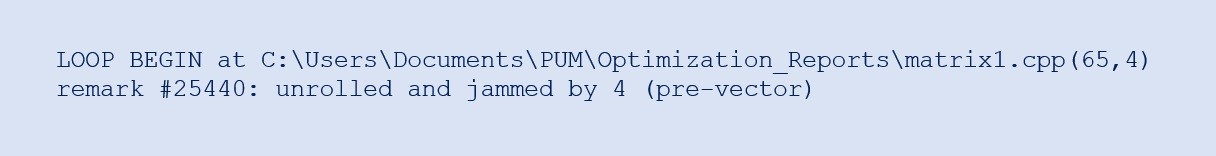

On our test system, the execution time with the /O2 option was approximately 4 ms, which translates to a speedup of approximately 4x compared to the /O1 option. This highlights the benefit of compiler optimizations (for example, better cache locality). Using compiler option /O3, we see more aggressive optimizations being applied and more loop transformations in the code. The updated optimization report has this information:

With /O3, the compiler unrolled and jammed the loop at line 65 by a factor of 4. Loop unrolling and jamming enlarges the loop body and reduces the number of loop iterations. This increases processor utilization and reduces the number of conditional and increment operations executed by the loop, often resulting in higher performance. However, the execution time after /O3 optimization was still about 4 ms, so this loop transformation didn't provide a measurable benefit for this code example.
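The report doesn't show the code the compiler generated, but the idea of unroll-and-jam can be sketched by hand. The version below assumes that the unrolled loop is the k loop of the interchanged i, k, j nest, that `size` is a multiple of 4 (so no remainder loop is needed), and that the matrices use flat row-major storage. The function name `multiply_unroll_jam4` is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Unroll the k loop by 4 and "jam" the four copies of the inner j loop
// into a single j loop with a four-statement body.
void multiply_unroll_jam4(const std::vector<double>& matrixA,
                          const std::vector<double>& matrixB,
                          std::vector<double>& matrixC,
                          std::size_t size) {
    assert(size % 4 == 0);  // sketch only: no remainder handling
    assert(matrixA.size() == size * size);
    assert(matrixB.size() == size * size);
    assert(matrixC.size() == size * size);

    for (std::size_t idx = 0; idx < size * size; ++idx)
        matrixC[idx] = 0.0;

    for (std::size_t i = 0; i < size; ++i) {
        for (std::size_t k = 0; k < size; k += 4) {  // a quarter of the iterations
            const double a0 = matrixA[i * size + k];
            const double a1 = matrixA[i * size + k + 1];
            const double a2 = matrixA[i * size + k + 2];
            const double a3 = matrixA[i * size + k + 3];
            for (std::size_t j = 0; j < size; ++j) {
                // Four updates per load/store of matrixC[i][j],
                // applied in the same order as the rolled loop.
                double c = matrixC[i * size + j];
                c += a0 * matrixB[k * size + j];
                c += a1 * matrixB[(k + 1) * size + j];
                c += a2 * matrixB[(k + 2) * size + j];
                c += a3 * matrixB[(k + 3) * size + j];
                matrixC[i * size + j] = c;
            }
        }
    }
}
```

The jammed body reads and writes each matrixC element once per four k values instead of once per k value, which is where the reduction in loop overhead and memory traffic comes from.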

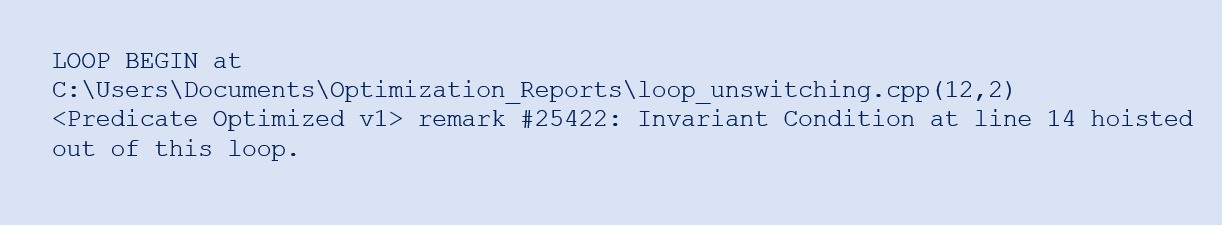

Loop Unswitching

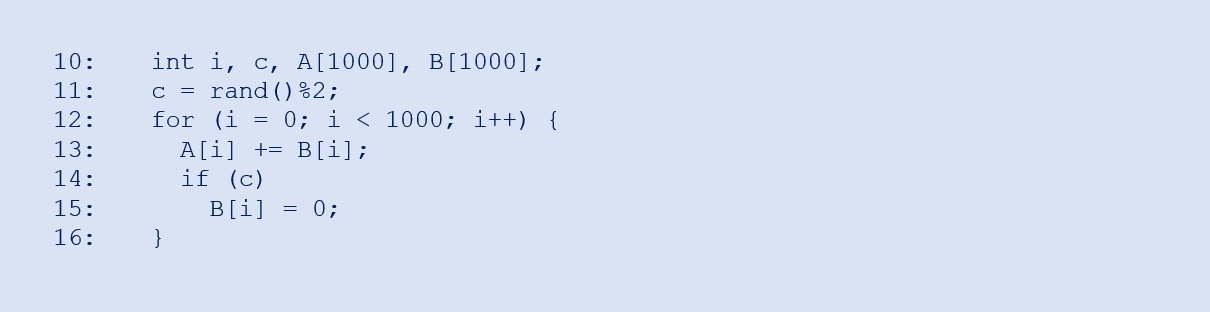

Let's look at another code example, where the compiler implements loop unswitching. In this transformation, a conditional statement inside a loop (Figure 3) whose condition doesn't change between iterations is moved outside the loop as an if-else clause, with a copy of the loop body in each branch. The transformed loops are easier for the compiler to optimize.

Figure 3. Example code to illustrate the loop unswitching optimization

If c is left uninitialized or is initialized with a random value, the compiler can't resolve the condition at compile time and generates two loops:

- One for the if branch

- One for the else branch

We can verify this with the opt-report remark stating that a conditional statement has been hoisted out of the loop:

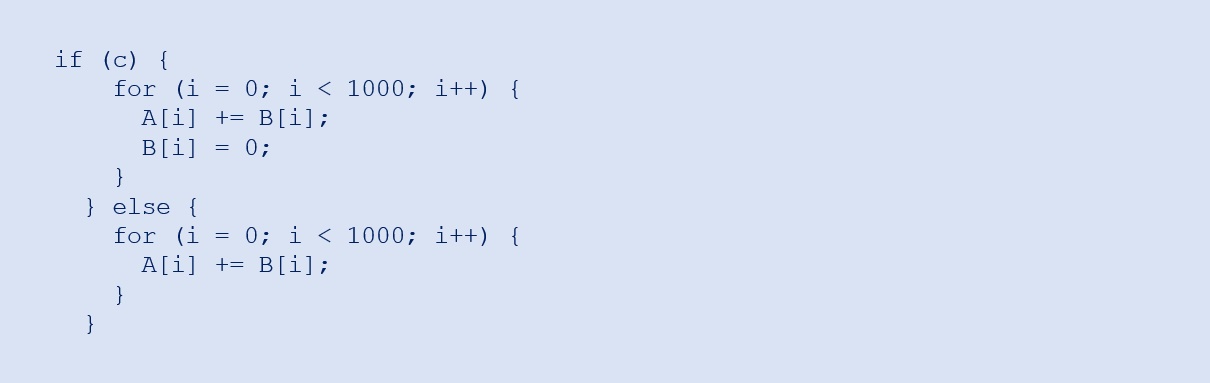

Figure 4 shows the transformed code.

Figure 4. Loop unswitching optimization applied to a loop body

When c is initialized to 0 or 1, no new version is generated, since the value was resolved at compile time. You can verify this as an exercise.
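The unswitching transformation can also be written out by hand. The sketch below is not the code from the figures; the function names `update_original` and `update_unswitched` and the add/subtract loop bodies are assumptions chosen to keep the example short.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Before: the test on c is repeated on every iteration, even though c
// never changes inside the loop.
void update_original(std::vector<int>& a, const std::vector<int>& b, int c) {
    assert(a.size() == b.size());
    for (std::size_t i = 0; i < a.size(); ++i) {
        if (c != 0)
            a[i] = a[i] + b[i];
        else
            a[i] = a[i] - b[i];
    }
}

// After: the loop-invariant test is hoisted out and the loop is duplicated,
// one copy per branch. Each copy has a straight-line body.
void update_unswitched(std::vector<int>& a, const std::vector<int>& b, int c) {
    assert(a.size() == b.size());
    if (c != 0) {
        for (std::size_t i = 0; i < a.size(); ++i)
            a[i] = a[i] + b[i];
    } else {
        for (std::size_t i = 0; i < a.size(); ++i)
            a[i] = a[i] - b[i];
    }
}
```

Both functions compute the same result for any value of c. The second form trades some code size for loop bodies without branches, which are simpler candidates for vectorization.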

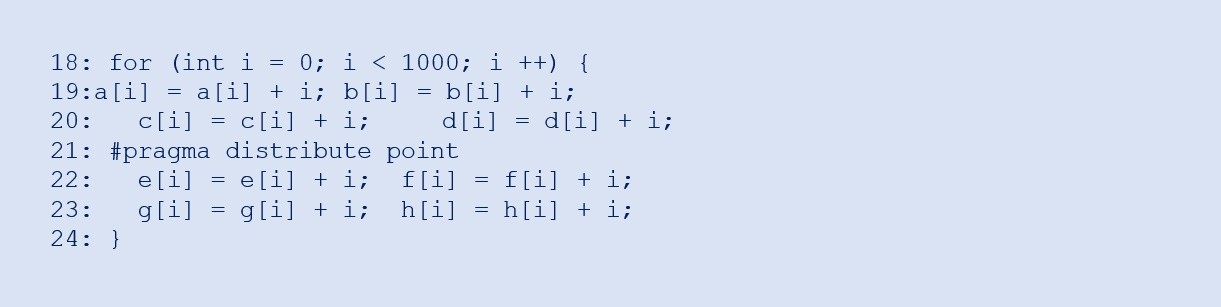

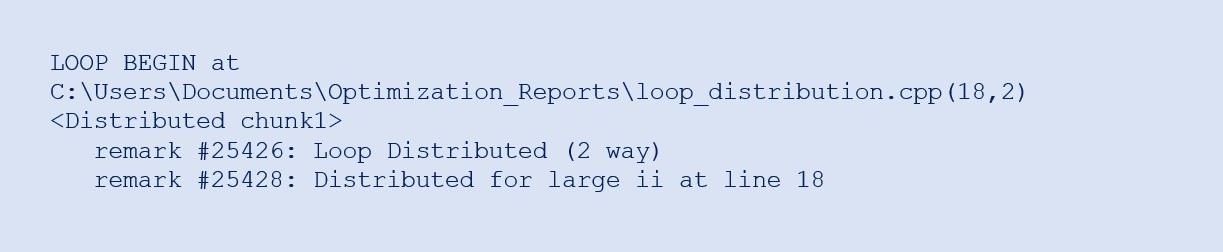

Loop Distribution

Another example of loop optimization by the compiler is loop distribution. If a loop body is big and has independent statements, the compiler can distribute the loop into several loops. We can force such an optimization by using #pragma distribute_point. When this pragma is placed inside the loop, the compiler attempts to distribute the loop at that point in the code and ignores all loop-carried dependencies in the loop body, so it's up to the programmer to make sure the split is safe. Figure 5 shows an example of code for loop distribution.

Figure 5. Loop distribution optimization applied to a loop body

In the compiler report, we can see that the loop has been distributed into two loops, and the loop details are presented separately for each chunk.
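A hedged sketch of loop distribution follows. The function names and the two independent statements are invented for illustration and are not the code from Figure 5. The pragma is guarded so that it is only seen by the classic Intel compiler (detected here through the `__INTEL_COMPILER` macro); other compilers build the first function as an ordinary loop.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Original loop, with the Intel-specific pragma marking the requested split.
void scale_and_offset(std::vector<double>& a, std::vector<double>& b,
                      const std::vector<double>& c) {
    assert(a.size() == c.size() && b.size() == c.size());
    const std::size_t n = c.size();
    for (std::size_t i = 0; i < n; ++i) {
        a[i] = c[i] * 2.0;
#if defined(__INTEL_COMPILER)
#pragma distribute_point
#endif
        b[i] = c[i] + 1.0;
    }
}

// What the distributed form corresponds to: one loop per chunk.
// This is safe here because the two statements touch different arrays.
void scale_and_offset_distributed(std::vector<double>& a,
                                  std::vector<double>& b,
                                  const std::vector<double>& c) {
    assert(a.size() == c.size() && b.size() == c.size());
    const std::size_t n = c.size();
    for (std::size_t i = 0; i < n; ++i)
        a[i] = c[i] * 2.0;
    for (std::size_t i = 0; i < n; ++i)
        b[i] = c[i] + 1.0;
}
```

Splitting the loop gives each chunk a smaller body, so each one can be analyzed and optimized on its own.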

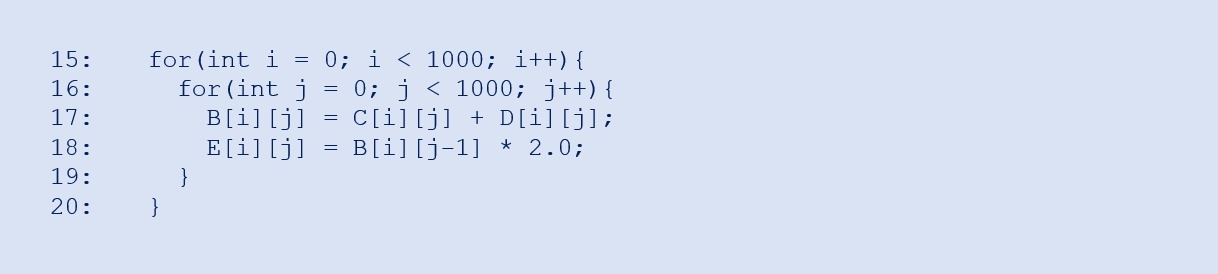

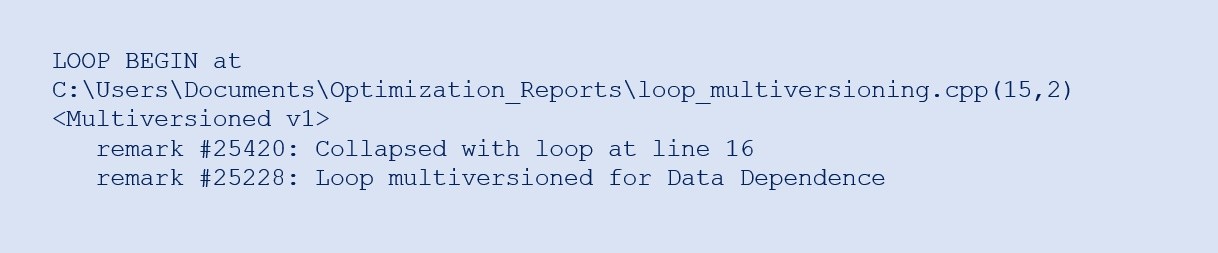

Loop Multi-Versioning

Loop multi-versioning is another optimization the compiler can perform when it cannot prove at compile time that the vectorized loop will give the same results as the scalar one, for example because of a possible data dependence or pointer aliasing. Here, the compiler generates two versions of the loop, one scalar and one vectorized, and inserts a runtime check that selects between them. The vectorized version runs only if the check shows that its results will match those of the scalar version, and the compiler generates it only if it can predict a benefit from vectorization. The programmer can suppress this multi-versioning either by resolving the potential mismatch issue (for instance, by removing any aliasing concern or dependencies) or by forcibly vectorizing the loop. In those scenarios, no multi-versioning optimization will be applied.

Figure 6. Loop multi-versioning optimization applied to a loop body

In the code shown in Figure 6, because of an assumed data dependence, the compiler applies a multi-versioning optimization to the loop, as noted in the compiler report.
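Conceptually, a multi-versioned loop behaves like the hand-written sketch below. This is an illustration of the idea rather than the compiler's actual output; the names `ranges_disjoint` and `add_one_multiversioned` and the specific overlap test are assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Runtime check: true when [p, p + n) and [q, q + n) share no bytes.
bool ranges_disjoint(const float* p, const float* q, std::size_t n) {
    const auto pb = reinterpret_cast<std::uintptr_t>(p);
    const auto qb = reinterpret_cast<std::uintptr_t>(q);
    const std::uintptr_t bytes = n * sizeof(float);
    return pb + bytes <= qb || qb + bytes <= pb;
}

void add_one_multiversioned(float* dst, const float* src, std::size_t n) {
    assert(dst != nullptr && src != nullptr);
    if (ranges_disjoint(dst, src, n)) {
        // Version 1: no overlap, so iterations are independent and this
        // copy of the loop is the one that can be vectorized.
        for (std::size_t i = 0; i < n; ++i)
            dst[i] = src[i] + 1.0f;
    } else {
        // Version 2: the arrays may overlap, so a store can feed a later
        // load. This copy must run in strict scalar order.
        for (std::size_t i = 0; i < n; ++i)
            dst[i] = src[i] + 1.0f;
    }
}
```

The two loop bodies are textually identical; what differs is what may be assumed about them. Telling the compiler up front that the pointers never alias removes the need for the check and for the second version.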

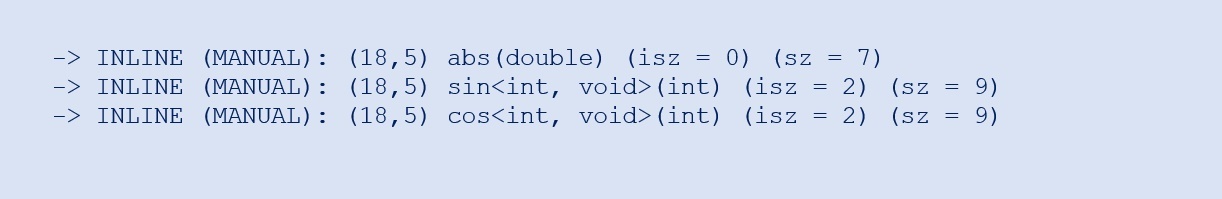

Inline Function Expansion

Another optimization performed by many modern compilers is inline function expansion. For example, the math functions abs(), sin(), and cos() are inlined by the compiler. Loops that contain function calls often can't be vectorized, so the compiler prefers to replace the calls with the inlined function bodies. This optimization is also listed in the optimization report (as shown below).

Here, sz is the size of the routine and isz is the size of the routine when inlined, which indicates the code bloat that inlining it adds. The smaller a routine's size, the more likely it will be inlined.
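To make the effect of inlining concrete, the sketch below shows a loop that calls a small routine next to the same loop with the routine's body expanded in place. The names `clamp_to_unit`, `clamp_all_with_call`, and `clamp_all_inlined` are hypothetical, and the second function only approximates what the compiler produces internally.

```cpp
#include <cassert>
#include <cstddef>

// A small routine: its size makes it a likely inlining candidate.
double clamp_to_unit(double x) {
    return x < 0.0 ? 0.0 : (x > 1.0 ? 1.0 : x);
}

// Loop as written: one function call per element.
void clamp_all_with_call(double* data, std::size_t n) {
    assert(data != nullptr || n == 0);
    for (std::size_t i = 0; i < n; ++i)
        data[i] = clamp_to_unit(data[i]);
}

// Loop after inline expansion: the call is gone and the body is plain
// arithmetic, which later loop optimizations can work on directly.
void clamp_all_inlined(double* data, std::size_t n) {
    assert(data != nullptr || n == 0);
    for (std::size_t i = 0; i < n; ++i) {
        const double x = data[i];
        data[i] = x < 0.0 ? 0.0 : (x > 1.0 ? 1.0 : x);
    }
}
```

Both loops produce the same values; the inlined form costs a little extra code at each call site in exchange for removing the call overhead.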

Code Generation, High-Level, and Loop Optimizations

In this article, we've dealt with several loop optimizations done by the Intel C/C++ Compiler and used the generated optimization reports to understand them better. Some details not dealt with here include code generation optimizations such as instruction scheduling, register allocation, and data-flow analysis and tuning. We encourage you to examine compiler optimization reports and try to apply the recommendations for better performance.

