Introduction

This white paper discusses how the Intel® Data Center GPU Flex Series 170 on the ASUS* ESC4000-E11 AI server can accelerate federated learning for real-world medical applications. In our previous study, we explored the potential of Intel CPUs to optimize federated learning for AlexNet and Visual Geometry Group 19 (VGG19) models. Building on that research, we now examine how the Intel Data Center GPU Flex Series 170 further accelerates the training of a proprietary AI model designed for a real-world medical application.

Our focus is detecting hand joint erosion, a common symptom of rheumatoid arthritis (RA). To achieve this, we employed a cutting-edge, EfficientNetV2-based model named modified total Sharp score (mTSS). In terms of model parameters, this model's complexity falls between the well-known AlexNet and VGG19 architectures.

Our findings are compelling. The Intel Data Center GPU Flex Series 170 demonstrated a clear performance advantage over CPUs while maintaining comparable accuracy. Training was nearly three times faster, which shows the GPU's efficiency in handling the computational demands of federated learning. This outcome highlights the benefit of combining Intel's software optimizations with the hardware capabilities of the Intel Data Center GPU Flex Series 170.

These results underscore the potential of Intel Data Center GPU Flex Series 170 to revolutionize federated learning in real-world medical applications. By accelerating the training process, we can expedite the development and deployment of AI models that can more effectively assist healthcare professionals in diagnosing and treating diseases like RA.

Background

RA is a chronic autoimmune disease that causes joint inflammation and pain. Early detection and diagnosis are crucial for effective treatment and management. Medical imaging techniques, such as X-rays and MRIs, are commonly used to diagnose RA, but interpreting these images can be time-consuming and require specialized expertise.

Federated learning is a decentralized approach that enables training machine learning models on edge devices while preserving data privacy. Because data is a key factor in determining AI model capability, federated learning is naturally becoming a platform on which multiple medical units collaborate on an AI model, each using its own authorized medical data. A well-trained AI model of this kind can be particularly beneficial in supporting the image inspection performed by a human doctor.

Problem Statement

The current approach to diagnosing RA using medical imaging techniques has several limitations:

- Time-consuming and requires specialized expertise

- Limited accuracy due to human error

- Data privacy concerns due to centralized data storage

Proposed Solution

To address these limitations, we propose a federated learning approach with Intel Data Center GPU Flex Series 170 on ASUS ESC4000-E11 to accelerate training and inference for a real-world medical scenario, specifically detecting hand joint erosion caused by RA.

Why ASUS* ESC4000-E11?

The general-purpose ASUS ESC4000-E11 server is designed to excel in federated learning applications that require efficient and secure handling of distributed AI workloads. Federated learning involves training machine learning models across multiple decentralized devices or servers without sharing raw data, ensuring data privacy and security. Here's how the ASUS ESC4000-E11, powered by 4th gen Intel® Xeon® Scalable processors and Intel Data Center GPU Flex Series 170 accelerators, enhances federated learning:

- Enhanced AI Acceleration: 4th gen Intel Xeon Scalable processors include built-in AI acceleration features, such as Intel® Deep Learning Boost. This technology enhances the performance of AI inference and training tasks, allowing the server to handle complex machine learning models more efficiently.

- Improved Security: Federated learning requires robust security measures to protect data privacy. 4th gen Intel Xeon Scalable processors come with advanced security features, including Intel® Software Guard Extensions and Intel® Total Memory Encryption. These features help safeguard data throughout the processing pipeline and ensure secure computation even in a distributed environment.

- Superior Scalability: Federated learning environments often involve scaling across multiple nodes to manage extensive datasets and model complexities. The Intel Xeon Scalable processors are designed for high scalability, supporting large memory capacities and high-speed interconnects. This helps the server expand to meet growing federated learning demands.

- Optimized Performance and Power Consumption: The combination of Intel Data Center GPU Flex Series 170 accelerators and Intel Xeon Scalable processors provides a balanced approach to performance and power efficiency. The accelerators offload specific tasks from the CPU, reducing the overall power consumption while maintaining high-performance levels. This balance is crucial for federated learning, where prolonged and intensive computational tasks are common.

- Efficient Data Handling: Federated learning involves frequent data exchanges between nodes to update the global model. The server's architecture, supported by Intel technologies, ensures efficient data handling and high-speed communication, minimizing latency and maximizing throughput.

By using these advanced features, the general-purpose ASUS ESC4000-E11 server offers a highly optimized and secure platform for federated learning, enabling organizations to harness the power of AI while maintaining data privacy and operational efficiency.

Methodology

We used three ASUS ESC4000-E11 servers, two of which acted as federated clients and one of which acted as a federated server. The federated clients were equipped with XPUs for Intel Data Center GPU Flex Series 170, while the federated server was equipped with Intel Xeon CPUs. We trained an EfficientNetV2-based model named Modified Total Sharp Score (mTSS) on the federated clients and evaluated its performance on the federated server.

- Data Preparation: We collected a dataset of hand X-ray images from patients with RA. The images were preprocessed and annotated to detect hand joint erosion.

- Model Architecture: The mTSS model was designed to be computationally efficient while maintaining high accuracy for RA detection. It is based on the EfficientNetV2 architecture and incorporates modifications to improve performance for medical image analysis.

- Federated Learning Framework: We implemented a federated learning framework using TensorFlow* Federated, which enables distributed training across multiple devices while ensuring data privacy.

- Experimentation: We conducted experiments using Intel CPUs and Intel Data Center GPU Flex Series 170 to evaluate the performance of federated learning with different hardware configurations.

The Test Setup

The testing environment was built using three ASUS ESC4000-E11 server machines. Two of these servers acted as federated clients, while the third ASUS ESC4000-E11 served as the federated server responsible for aggregating models based on the data held by each federated client. Various studies have explored different aggregation methods and their potential impact on the final model in federated learning setups. By default, this test applied the averaging method to merge the gradients from the federated clients.
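The averaging step described above can be sketched as follows. This is a minimal illustration and not the code used in the test: the function name `federated_average`, the flattened list representation of each client update, and the weighting by local sample count are all assumptions made for the example. With equal sample counts, the result reduces to a plain mean of the client updates.

```python
from typing import List, Sequence


def federated_average(
    client_updates: Sequence[Sequence[float]],
    client_sizes: Sequence[int],
) -> List[float]:
    """Average flattened client updates, weighted by local sample count.

    Hypothetical helper for illustration; the weighting scheme is an assumption.
    """
    if not client_updates or len(client_updates) != len(client_sizes):
        raise ValueError("need exactly one sample count per client update")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    length = len(client_updates[0])
    if any(len(update) != length for update in client_updates):
        raise ValueError("all client updates must have the same length")

    averaged = [0.0] * length
    for update, size in zip(client_updates, client_sizes):
        weight = size / total  # share of the overall data held by this client
        for i, value in enumerate(update):
            averaged[i] += weight * value
    return averaged


# Two clients, as in the test setup. The values are made up for illustration.
client_a = [0.2, -0.4, 1.0]
client_b = [0.6, 0.0, 2.0]
merged = federated_average([client_a, client_b], client_sizes=[100, 300])
print(merged)
```

Only the update vectors and sample counts reach the aggregation function, which mirrors the point that the federated server never needs the clients' raw images.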

The primary focus of this test is to provide performance insights on the federated client hardware—specifically, the ASUS ESC4000-E11 equipped with Intel Data Center GPU Flex Series 170 for acceleration. The key metrics evaluated in this setup include:

- Model training time

- Model accuracy

- Training loss

These metrics were then compared to results obtained from Intel Xeon CPUs, providing a comprehensive view of which hardware is better suited for deploying federated learning in a real-world medical context.

Note: NTUNHS provided all medical data and images used in this test for research purposes only.

Model Inference and RA Erosion Detection

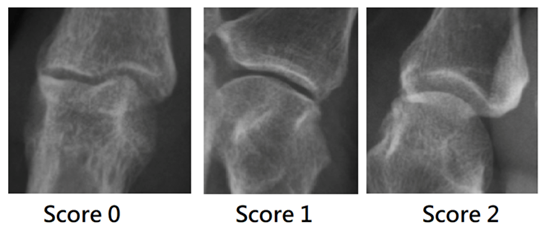

After the federated learning process and the final model aggregation, we also tested the model’s inference performance to assess its capability to correctly detect different levels of RA erosion. The designed mTSS model was employed to classify RA erosion severity into three distinct levels:

- Level 0: No erosion

- Level 1: Mild erosion

- Level 2: Severe erosion

This classification system allows for accurate diagnosis of RA progression based on medical imaging, aiding in effective treatment planning.

Figure 1. Classification of hand joint erosion
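As a rough illustration of the three-level classification, the sketch below maps a vector of per-class scores to one of the levels listed above. It assumes the model emits one score per level and that the highest score wins; the function name `predict_erosion_level` and the example scores are hypothetical and are not output from the mTSS model.

```python
from typing import Sequence, Tuple

# Labels taken from the three severity levels listed above.
EROSION_LEVELS = {0: "No erosion", 1: "Mild erosion", 2: "Severe erosion"}


def predict_erosion_level(class_scores: Sequence[float]) -> Tuple[int, str]:
    """Return (level, label) for the highest-scoring class (assumed argmax rule)."""
    if len(class_scores) != len(EROSION_LEVELS):
        raise ValueError("expected one score per erosion level")
    level = max(range(len(class_scores)), key=lambda i: class_scores[i])
    return level, EROSION_LEVELS[level]


# Hypothetical scores for one joint image, for illustration only.
example_scores = [0.10, 0.65, 0.25]
print(predict_erosion_level(example_scores))
```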

Key Findings

The performance comparison between the XPUs for Intel Data Center GPU Flex Series 170 and Intel Xeon CPUs revealed substantial improvements in model training and inference speed for federated AI workloads.

Model Training Time

The XPUs for Intel Data Center GPU Flex Series 170 demonstrated a significant speed advantage over CPUs. Training the AI model on XPUs for Intel Data Center GPU Flex Series 170 was nearly three times faster than training on the Intel® Xeon® Gold 6430 processor, while maintaining the same convergence trend.

Figure 2. Model training time comparison

Inference Time

After completing the federated learning process, the final aggregated model was tested for inference on new hand joint images (256 x 256).

- The Intel Xeon Gold 6430 CPU took 0.2111 seconds to evaluate a single image.

- In comparison, the Intel Data Center GPU Flex Series 170 took 0.0531 seconds, making it nearly four times faster for image classification.
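The "nearly four times" figure follows directly from the two reported latencies. The short calculation below reproduces it; the helper names are illustrative, and the throughput values assume images are processed one at a time with no batching, which the source does not specify.

```python
# Per-image inference latencies reported above, in seconds.
CPU_SECONDS_PER_IMAGE = 0.2111  # Intel Xeon Gold 6430
GPU_SECONDS_PER_IMAGE = 0.0531  # Intel Data Center GPU Flex Series 170


def speedup(baseline_seconds: float, accelerated_seconds: float) -> float:
    """Ratio of baseline latency to accelerated latency."""
    if baseline_seconds <= 0 or accelerated_seconds <= 0:
        raise ValueError("latencies must be positive")
    return baseline_seconds / accelerated_seconds


def images_per_second(seconds_per_image: float) -> float:
    """Sequential throughput implied by a per-image latency (assumes no batching)."""
    if seconds_per_image <= 0:
        raise ValueError("latency must be positive")
    return 1.0 / seconds_per_image


ratio = speedup(CPU_SECONDS_PER_IMAGE, GPU_SECONDS_PER_IMAGE)
print(f"GPU speedup over CPU: {ratio:.2f}x")
print(f"CPU throughput: {images_per_second(CPU_SECONDS_PER_IMAGE):.2f} images/s")
print(f"GPU throughput: {images_per_second(GPU_SECONDS_PER_IMAGE):.2f} images/s")
```

The ratio works out to about 3.98, consistent with the "nearly four times" description.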

Model Accuracy

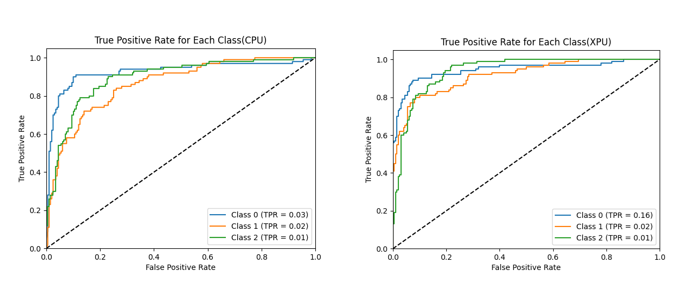

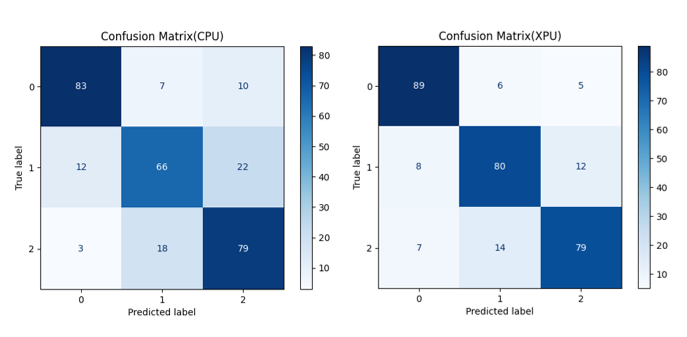

Regarding accuracy, both the Intel CPUs and GPUs delivered similar performance. The receiver operating characteristic (ROC) plots and confusion matrices showed that the final aggregated model achieved approximately 76%–82% accuracy in classifying new image data.

The high true positive rate indicated that the mTSS model was an effective classifier for detecting hand joint erosion in RA patients.

Figure 3. ROC comparison

Figure 4. Confusion matrix comparison
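For readers less familiar with confusion matrices, the sketch below shows how overall accuracy and the per-class true positive rate are typically derived from one. The matrix values are placeholders chosen for illustration and are not the study's results; the function names and the convention that rows hold true levels and columns hold predicted levels are also assumptions.

```python
from typing import List, Sequence


def accuracy(confusion: Sequence[Sequence[int]]) -> float:
    """Fraction of all samples that lie on the diagonal (correct predictions)."""
    total = sum(sum(row) for row in confusion)
    if total == 0:
        raise ValueError("confusion matrix is empty")
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    return correct / total


def true_positive_rates(confusion: Sequence[Sequence[int]]) -> List[float]:
    """Per-class recall: correct predictions divided by true samples of that class."""
    rates = []
    for i, row in enumerate(confusion):
        row_total = sum(row)
        rates.append(row[i] / row_total if row_total else 0.0)
    return rates


# Placeholder counts for illustration only; NOT the study's confusion matrix.
# Rows are true levels 0-2, columns are predicted levels 0-2.
placeholder_matrix = [
    [8, 1, 1],
    [2, 7, 1],
    [0, 2, 8],
]
print(f"accuracy: {accuracy(placeholder_matrix):.3f}")
print("true positive rate per level:", true_positive_rates(placeholder_matrix))
```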

These results underscore the significant speed advantage of using Intel Data Center GPU Flex Series 170 for AI model inference, achieving nearly 4x faster classification times while maintaining similar accuracy levels compared to CPU-based solutions.

The analysis further highlights that different real-world problems demand varied computational capabilities. The findings presented in this paper demonstrate that the ASUS AI server equipped with the Intel Data Center GPU Flex Series 170 is a highly effective solution for addressing medical AI use cases, particularly those requiring scalable model training and high-speed inference.

Product and Performance Information

Hardware Configuration

- Federated Learning Clients:

  - 2 ASUS ESC4000-E11 servers equipped with the following:

    - 1 XPU for Intel Data Center GPU Flex Series 170

    - 2 Intel Xeon Gold 6430 CPUs

    - 256 GB RAM

    - Operating System: Ubuntu* 22.04.4 LTS (Server)

- Federated Learning Server:

  - 1 ASUS ESC4000-E11 server equipped with the following:

    - 2 Intel® Xeon® Platinum 8352Y CPUs

    - 189 GB RAM

    - Operating System: Debian 12

Software Configuration

- Programming Language: Python* 3.10

- Environment Management: Miniconda*

- Federated Learning Framework: Flower*

This setup provides an ideal hardware-software combination for federated learning, especially in medical scenarios requiring secure, decentralized AI model training across multiple institutions. It uses high-performance hardware for faster results.

Results

The XPUs for Intel Data Center GPU Flex Series 170 demonstrated a significant speed improvement over CPU-based model training. Measured training time on XPUs for Intel Data Center GPU Flex Series 170 was nearly three times shorter than on Intel Xeon Gold 6430 CPUs, with the same convergence trends.

Upon completing the federated learning process, the final aggregated AI model was tested on new hand joint images to evaluate its performance. Key findings include:

- Inference Time:

- Intel Xeon Gold 6430 CPU took approximately 0.2111 seconds to evaluate a single 256 x 256 image.

- In contrast, the Intel Data Center GPU Flex Series 170 completed the same evaluation in 0.0531 seconds, nearly four times faster than the CPU.

- Model Accuracy:

The accuracy of the final aggregated model was evaluated using ROC plots and confusion matrices. The results showed that accuracy remained similar across the Intel CPU and GPU configurations. Specifically:

- The model achieved 76%–82% accuracy in classifying new image data.

- The high true positive rate indicated that the mTSS model is an effective classifier for detecting hand joint erosion caused by RA.

These findings confirm that the Intel Data Center GPU Flex Series 170 provides a significant speed advantage in inference while maintaining similar accuracy levels compared to the CPU. The nearly 4x improvement in classification speed underscores the GPU’s capability for accelerating AI model deployment in real-world scenarios.
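The source does not describe how the per-image latency was measured. One common approach, sketched below under that caveat, is to time repeated calls with a monotonic clock after a short warm-up. The function `mean_seconds_per_item` and the stand-in `fake_infer` workload are hypothetical; on an accelerator, the timed callable would also need to block until the result is ready, because device work is often launched asynchronously.

```python
import time
from typing import Callable, Sequence


def mean_seconds_per_item(
    infer: Callable[[object], object],
    items: Sequence[object],
    warmup: int = 1,
) -> float:
    """Average wall-clock seconds per call to `infer` over `items`."""
    if not items:
        raise ValueError("need at least one item to time")
    for item in items[:warmup]:
        infer(item)  # warm-up calls are excluded from the measurement
    start = time.perf_counter()
    for item in items:
        infer(item)
    elapsed = time.perf_counter() - start
    return elapsed / len(items)


def fake_infer(image: Sequence[Sequence[float]]) -> float:
    """Stand-in workload; a real test would call the trained model here."""
    return sum(sum(row) for row in image)


# Ten blank 256 x 256 "images", matching the input size quoted above.
images = [[[0.0] * 256 for _ in range(256)] for _ in range(10)]
print(f"{mean_seconds_per_item(fake_infer, images):.6f} s per image")
```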

Impact of ASUS ESC4000-E11 on Federated AI Applications

The general-purpose ASUS ESC4000-E11 server, equipped with 4th gen Intel Xeon Scalable processors and XPUs for Intel Data Center GPU Flex Series 170, is critical in enhancing federated AI capabilities across various industries. This server's architecture is designed to optimize distributed AI workloads, making it ideal for sectors that prioritize privacy, scalability, and performance, such as healthcare and finance.

Key Benefits for Federated AI

- Enhanced Data Security: The ASUS ESC4000-E11 supports federated learning, ensuring that sensitive data remains decentralized. This decentralization is vital for maintaining data privacy, especially in compliance-heavy sectors. The server’s design reinforces privacy by processing data locally, which reduces the risks of data breaches and ensures adherence to stringent data protection regulations.

- Scalability and Flexibility: The server's architecture allows it to scale efficiently as federated learning networks expand. This scalability supports larger datasets and increasing model complexity, enabling organizations to grow their AI capabilities while maintaining optimal performance across multiple edge devices or institutions.

- Reduced Latency: With its powerful processing capabilities, the ASUS ESC4000-E11 minimizes latency during model training and updates. This reduction in latency is particularly crucial in real-time applications, such as medical diagnostics, where quick decision-making can significantly impact outcomes.

- Energy Efficiency: The integration of XPUs for Intel Data Center GPU Flex Series 170 ensures high performance while optimizing power consumption. This energy efficiency results in cost savings and environmental benefits, making it a sustainable solution for large-scale AI deployments.

By using the ASUS ESC4000-E11, organizations can achieve faster, more secure, and more efficient federated learning setups, driving innovation in AI-driven sectors.

Case Study: Federated AI in Medical Imaging Diagnostics

In a real-world federated AI scenario involving medical imaging diagnostics, multiple hospitals collaborate to improve the accuracy of AI models that diagnose diseases from medical images such as MRI, CT scans, and X-rays. Each hospital retains its data locally, ensuring compliance with privacy regulations while collectively training a shared AI model.

Infrastructure Setup

Each hospital deploys the ASUS ESC4000-E11, equipped with 4th gen Intel Xeon Scalable processors and XPUs for Intel Data Center GPU Flex Series 170, to handle intensive AI workloads while facilitating federated learning. This setup allows the hospitals to collaborate without sharing raw data.

Federated Learning Process

- Data Preparation: Each hospital preprocesses its local medical imaging data in-house, ensuring it never leaves a secure environment.

- Local Model Training: Hospitals use ASUS ESC4000-E11 servers to train the AI models on local datasets, using XPUs for Intel Data Center GPU Flex Series 170 for accelerated training. The training process remains within each hospital’s infrastructure, ensuring privacy.

- Model Aggregation: The locally trained models are shared with a central server, where the models are aggregated to form a global model. This aggregation process only involves model parameters; no raw data is exchanged.

- Global Model Updates: The global model, which now benefits from the collective intelligence of all hospitals, is redistributed back to each hospital. The cycle continues with further local training and iterations.
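The four steps above form a loop that can be illustrated with a toy simulation. The sketch below is an assumption-laden stand-in, not the system described in this paper: each "hospital" holds a few private (feature, target) pairs, the "model" is a single weight fitted by gradient descent, and the server combines local weights by a sample-weighted average. All names and data are hypothetical.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (feature, target) pairs held by one site


def train_locally(weight: float, data: Dataset, lr: float = 0.05, steps: int = 5) -> float:
    """Local model training: a few gradient-descent steps on y = weight * x."""
    for _ in range(steps):
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight


def aggregate(weights: List[float], sizes: List[int]) -> float:
    """Model aggregation: sample-weighted average of the local weights."""
    return sum(w * n for w, n in zip(weights, sizes)) / sum(sizes)


def run_federated_rounds(sites: List[Dataset], rounds: int = 20) -> float:
    """Repeat train -> aggregate -> redistribute; only weights leave each site."""
    global_weight = 0.0
    for _ in range(rounds):
        # Each site starts from the current global model and trains on local data.
        local_weights = [train_locally(global_weight, data) for data in sites]
        # The server sees model parameters and sample counts, never raw data.
        global_weight = aggregate(local_weights, [len(data) for data in sites])
    return global_weight


# Two hypothetical hospitals whose private data both follow y = 3x.
hospital_a = [(1.0, 3.0), (2.0, 6.0)]
hospital_b = [(0.5, 1.5), (1.5, 4.5), (3.0, 9.0)]
print(run_federated_rounds([hospital_a, hospital_b]))
```

In this toy setup the global weight approaches 3, the slope shared by both sites' data, even though neither dataset is ever passed to the aggregation step.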

Performance and Efficiency Gains

- Faster Training Times: The ASUS ESC4000-E11’s powerful hardware significantly reduces training times, allowing hospitals to converge on a highly accurate global model quickly.

- Energy-Efficient Training: Training is completed in an energy-efficient way using XPUs for Intel Data Center GPU Flex Series 170, reducing operational costs and environmental footprint.

- Enhanced Data Security: 4th gen Intel Xeon processors have advanced security features that ensure patient data remains secure throughout the federated learning process.

Outcomes and Benefits for Medical Diagnostics

The federated AI system, supported by the ASUS ESC4000-E11 servers, results in:

- An accurate and robust AI model capable of precise diagnosis of diseases from medical images

- A collaborative framework that enables hospitals to benefit from a diverse dataset, improving the model’s generalizability without compromising data privacy

- Faster model iterations, leading to quick deployment of diagnostic tools, directly benefiting patient care through more timely and accurate diagnoses

By using ASUS ESC4000-E11 servers in federated learning, medical institutions can collaboratively enhance their AI capabilities, improving healthcare outcomes while ensuring data privacy and security.

Conclusion

This white paper has demonstrated the potential of the Intel Data Center GPU Flex Series 170 on the ASUS ESC4000-E11 AI server to significantly accelerate federated learning for real-world medical applications. The technology shortens model training times, reduces latency, and supports compliance with data privacy regulations, making it a strong solution for advancing AI in healthcare. Medical institutions can improve diagnostic capabilities and patient care outcomes by employing this hardware.