Recent developments in generative artificial intelligence have broadened both the possibilities for digital art creation and access to it.

At the core of tools such as DALL·E or Stable Diffusion is a recent family of methods known as diffusion models. Diffusion models aim to generate content starting from noise.

These models were built on complex mathematical concepts such as Langevin diffusion and score matching. Both require advanced knowledge of statistics and practice with it, which puts them beyond the reach of most undergraduate students.

Everyone Can Understand Diffusion Models!

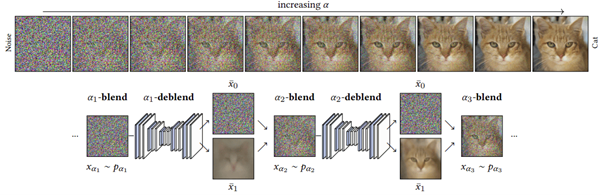

At SIGGRAPH this summer, researchers from the Graphics Research team at Intel will present a reformulation of diffusion models that does not require such complexity. Indeed, they managed to derive an equivalent model from simpler concepts: the mixing and de-mixing of images.

Furthermore, it removes a constraint that most methods place on their inputs: that they must be images of one specific kind of noise, Gaussian noise.
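To make "mixing and de-mixing" concrete, here is a minimal sketch in plain Python. It assumes that mixing means a linear blend between a noise image and a content image, and that de-mixing means walking back from the noise toward the content in small steps. The names `blend`, `deblend`, and `oracle_direction` are hypothetical and chosen for illustration; this is not the researchers' implementation. In particular, a real model would use a trained neural network to estimate the step direction, whereas this toy uses an oracle that already knows the answer. The noise here is uniform rather than Gaussian, to reflect the relaxed input constraint.

```python
import random


def blend(x0, x1, alpha):
    """Mix two same-sized images: alpha=0 returns x0, alpha=1 returns x1."""
    return [(1.0 - alpha) * a + alpha * b for a, b in zip(x0, x1)]


def deblend(x0, direction_fn, steps):
    """Move from x0 (alpha=0) toward alpha=1 in `steps` equal increments.

    direction_fn(x, alpha) returns the estimated direction from the noise
    image to the content image at the current blend level.
    """
    x = list(x0)
    for t in range(steps):
        alpha = t / steps
        direction = direction_fn(x, alpha)
        x = [xi + di / steps for xi, di in zip(x, direction)]
    return x


rng = random.Random(0)
# Toy 16-pixel "images" stored as flat lists of floats.
noise = [rng.uniform(-1.0, 1.0) for _ in range(16)]  # uniform, not Gaussian
image = [i / 15 for i in range(16)]                  # a simple gradient


def oracle_direction(x, alpha):
    # Assumption for illustration only: a perfect estimator that knows the
    # target. A trained network would have to predict this from (x, alpha).
    return [b - a for a, b in zip(noise, image)]


halfway = blend(noise, image, 0.5)                   # equal mix of both
recovered = deblend(noise, oracle_direction, steps=10)
max_error = max(abs(r - b) for r, b in zip(recovered, image))
print(f"max reconstruction error: {max_error:.2e}")
```

With a perfect direction estimate, the iterative de-mixing lands on the content image up to floating-point rounding. The hard part in practice is learning that estimate from data.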

But Can Everyone Use It?

Still, diffusion models are computationally demanding and remain inaccessible to most people. Fine-tuning and specializing these models still requires access to cloud computing or multi-GPU hardware. Our hope is that simpler models will open the road to alternative ways of training and using diffusion models that are more efficient and less time-consuming.

Read the detailed blog

Read the paper

Access the code
