Background

Challenges associated with data privacy, limited data availability, data labeling, ineffective data governance, high cost, and the need for a high volume of data are driving the use of synthetic data to fulfill the high demand for AI solutions across industries.

Synthetic text generators can be used for many purposes, including building intelligent chatbots and generating artificial data for calibrating models.

Solution

In collaboration with Accenture*, Intel developed an unstructured synthetic text data AI reference kit to help you create and tune a large language model to generate synthetic text.

For this reference implementation, unstructured synthetic text data, in the form of news headlines, is generated with a deep learning technique: a transformer-based solution that fine-tunes a pretrained Generative Pretrained Transformer 2 (GPT-2) large language model on a provided source dataset, so that its output resembles that dataset while drawing on the model's preexisting knowledge.

To focus the model on generating similar headlines, it is fine-tuned on a headline dataset. Once the model is fine-tuned, it is used to generate new pieces of data that look and feel like the provided dataset without being the same.
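The requirement that generated data resemble the source dataset "without being the same" can be checked after generation. The following is a minimal sketch of one such check, not the reference kit's own code: the helper names (`normalize`, `keep_novel`) and the sample headlines are hypothetical, and the normalization rule (lowercase, punctuation removed) is an assumption.

```python
import re


def normalize(headline: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace so trivial variants compare equal."""
    text = re.sub(r"[^a-z0-9\s]", "", headline.lower())
    return " ".join(text.split())


def keep_novel(generated, training):
    """Return generated headlines that copy neither a training headline nor an earlier output."""
    seen = {normalize(h) for h in training}
    novel = []
    for headline in generated:
        key = normalize(headline)
        if key and key not in seen:
            seen.add(key)
            novel.append(headline)
    return novel


# Hypothetical sample data, for illustration only.
training_headlines = ["council votes on water plan", "rain eases drought fears"]
generated_headlines = [
    "Council votes on water plan!",  # copy of a training headline
    "council backs new rail plan",
    "council backs new rail plan",   # repeated generation
]

novel_headlines = keep_novel(generated_headlines, training_headlines)
print(novel_headlines)
```

An exact-match filter like this only catches verbatim or near-verbatim copies; stricter similarity checks would need a different approach.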

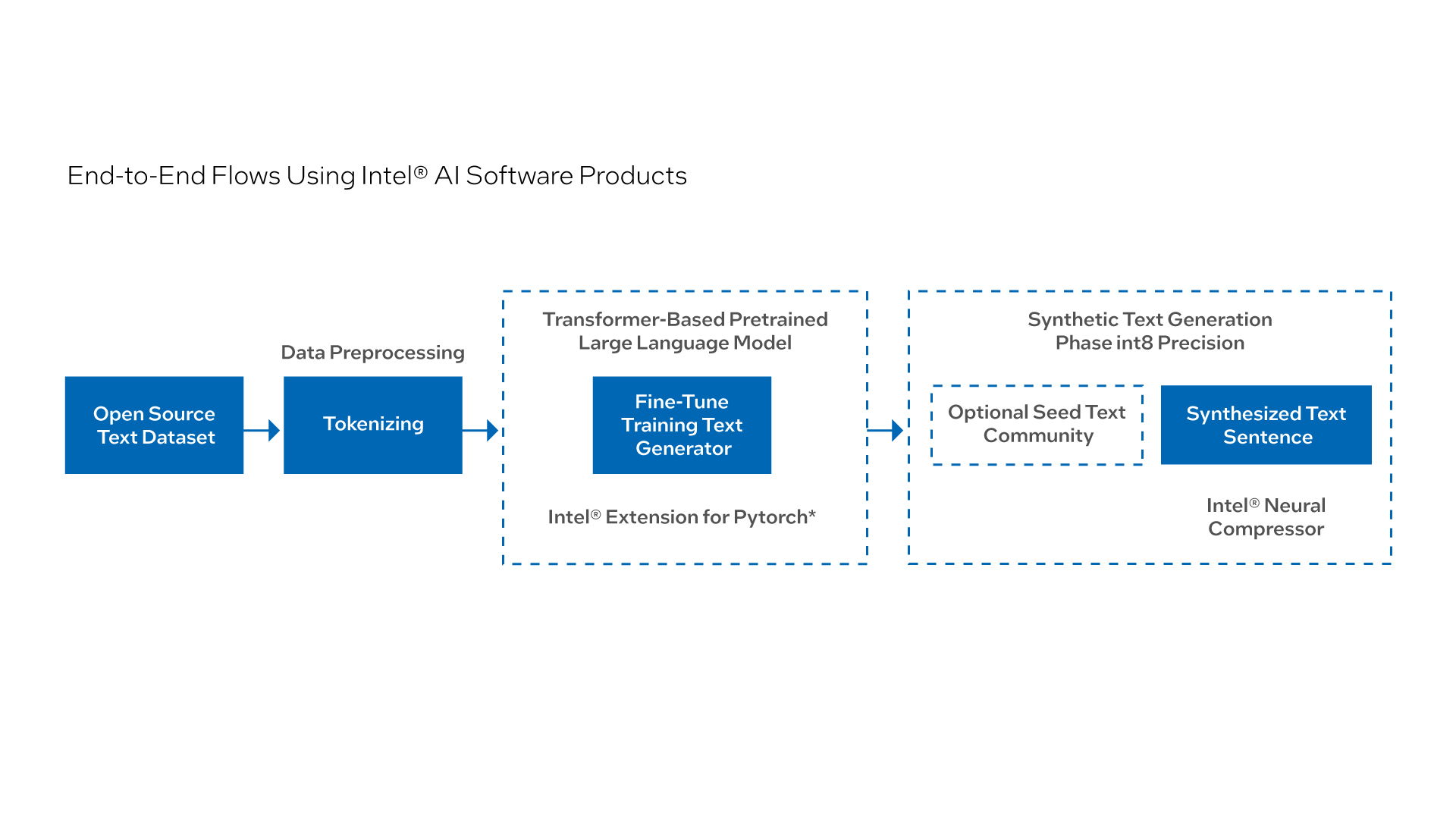

End-to-End Flow Using Intel® AI Software Products

One approach selected was to build a synthetic text generation model using a small pretrained transformer-based large language model (LLM), GPT-2. Through fine-tuning, the model was oriented to generate data matching the dataset used while retaining the knowledge gained during pretraining. This same underlying approach powers many of today's large language model technologies.

This reference kit includes:

- Training data

- An open source, trained model

- Libraries

- User guides

- Intel® AI software products

At a Glance

- Industry: Cross-industry

- Task: Fine-tune a transformer-based GPT-2 model on the dataset to produce more domain-specific, relevant text

- Dataset: Kaggle [1]: 1,048,576 rows of news headlines sourced from the reputable Australian news source ABC (Australian Broadcasting Corporation)

- Type of Learning: Method 1: Deep learning with a recurrent neural network (RNN); Method 2: Transfer learning with a causal language modeling objective

- Models: Method 1: Long short-term memory (LSTM) RNN model; Method 2: Transformer-based model

- Output: Unstructured text headlines generated

- Intel AI Software Products:

  - Method 1: Intel® Optimization for TensorFlow* v2.11, Intel® Neural Compressor v1.14

  - Method 2: Intel® Extension for PyTorch* v1.13.0, Intel® Neural Compressor v1.14

[1] A Million News Headlines, https://www.kaggle.com/datasets/therohk/million-headlines. See this dataset's applicable license for terms and conditions. Intel Corporation does not own the rights to this dataset and does not confer any rights to it.
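The causal language modeling objective listed for Method 2 trains the model to predict each token from the tokens before it. The loss is the average negative log-probability assigned to the actual next token. The sketch below works through that calculation in plain Python on a toy three-token vocabulary. The function names and the logit values are made up for illustration; a real GPT-2 fine-tuning run would compute the same quantity over its full vocabulary inside the training framework.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities (shifted by the max for numerical stability)."""
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def causal_lm_loss(logits_per_position, token_ids):
    """Mean next-token cross-entropy.

    logits_per_position[t] holds the scores the model produced after reading
    tokens 0..t; the target for that position is token_ids[t + 1].
    """
    losses = []
    for t in range(len(token_ids) - 1):
        probs = softmax(logits_per_position[t])
        losses.append(-math.log(probs[token_ids[t + 1]]))
    return sum(losses) / len(losses)


# Toy sequence over a vocabulary of 3 token ids (illustrative values only).
token_ids = [0, 2, 1]

# A model with no preference between tokens: loss equals log(vocabulary size).
uniform_logits = [[0.0, 0.0, 0.0] for _ in token_ids]
loss_uniform = causal_lm_loss(uniform_logits, token_ids)

# A model that strongly favors the correct next token at each position.
confident_logits = [[0.0, 0.0, 5.0], [0.0, 5.0, 0.0], [0.0, 0.0, 0.0]]
loss_confident = causal_lm_loss(confident_logits, token_ids)

print(loss_uniform, loss_confident)
```

Fine-tuning on headlines lowers this loss on headline text, which is what shifts the model's output toward the style of the source dataset.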

Technology

Optimized with Intel AI Software Products for Better Performance

The text data generation model was optimized with Intel Extension for PyTorch (v1.13.0). Intel Neural Compressor, with accuracy-aware dynamic quantization, was used to quantize the FP32 model to an INT8 model.
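To make the idea of FP32-to-INT8 quantization concrete, here is a minimal sketch in plain Python. It does not use or reproduce the Intel Neural Compressor API. It assumes symmetric per-tensor quantization, and the accuracy gate is a simplified stand-in for accuracy-aware tuning, with a hypothetical 1% tolerance and made-up metric values.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the int8 representation."""
    return [q * scale for q in quantized]


def accept_if_accurate(fp32_metric, int8_metric, max_relative_drop=0.01):
    """Keep the quantized model only if its metric drop stays within the tolerance."""
    return (fp32_metric - int8_metric) <= max_relative_drop * abs(fp32_metric)


# Illustrative weights only; real models quantize whole weight tensors.
weights = [0.5, -1.27, 0.003, 1.0]
q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q_weights, scale, max_error)
print(accept_if_accurate(0.90, 0.895))  # small drop, within tolerance
print(accept_if_accurate(0.90, 0.85))   # large drop, outside tolerance
```

In dynamic quantization, weights are converted ahead of time as shown, while activation scales are computed at inference time. The rounding error per weight is bounded by half the scale, which is why very small weights such as 0.003 collapse to zero here.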

Intel Extension for PyTorch and Intel Neural Compressor allow you to reuse your model development code with minimal code changes for training and inferencing.

Performance benchmark tests for the optimized solution were run on Microsoft Azure* Standard_D8_v5 instances with 3rd Generation Intel® Xeon® processors.

Benefits

Synthetic text generators can be used for many purposes, including building intelligent chatbots and generating artificial data for calibrating models.

The AI unstructured synthetic text data reference kit helps you create and tune a large language model to generate synthetic text with significant increases in performance.

With Intel® oneAPI components, little to no code change is required to attain the performance boost.