Overview

This reference implementation helps you quickly set up and tune the core concurrent video analysis workload through a configuration file, so that you can get the best video codec, post-processing and inference performance from the integrated Graphics Processing Unit (GPU) from Intel. It can shorten the performance evaluation and implementation cycle of video appliances on Intel platforms.

Set up the core workloads for products such as:

- Network Video Recorder (NVR) / Recording & Broadcasting (R&B) System

- Video Conference Terminal / Multipoint Control Unit (MCU)

- AI Box/Video Analytics

Select Configure & Download to download the reference implementation and the software listed below.

- Time to Complete: 30 minutes

- Programming Language: C++

- Available Software:

  - Intel® Media SDK 21.1.3

  - Intel® Distribution of OpenVINO™ toolkit 2021.4

Recommended Hardware

The hardware below is recommended for use with this reference implementation. See the Recommended Hardware page for other suggestions.

- ASRock* NUC BOX-1165G7

- Uzel* US-M5520

- Portwell* PCOM-B656VGL

- AAEON* PICO-TGU4-SEMI

- AOPEN* DEX5750

- DFI* EC70A-TGU

- NexAIoT* NISE 70-T01

- Vecow* SPC-7000 Series

- Lex System* SKY 3 3I110HW

- GIGAIPC* QBiX-Pro TGLA1115G4EH-A1

- GIGAIPC* QBiX-Lite-TGLA1135G7-A1

- ADLINK* AMP-300

- ADLINK* AMP-500

- ASRock* iBOX-1100 Series

- TinyGo* AI-5033

- TinyGo* AI-7702

- Seavo* SV-U1170

- Seavo* SV-U1150

- Uzel* Bluzer Developer Kit

- Uzel* US-M5422

Target System Requirements

- 7th - 11th Generation Intel® Core™ processors.

- Intel Atom® processors.

- Ubuntu* 20.04.3.

- Platforms supported by the Intel® Media SDK 21.1.3 and Intel® Distribution of OpenVINO™ toolkit 2021.4.

- See GitHub* for major platform dependencies for the back-end media driver.

- 250 GB Hard Drive.

- At least 16 GB RAM.

How It Works

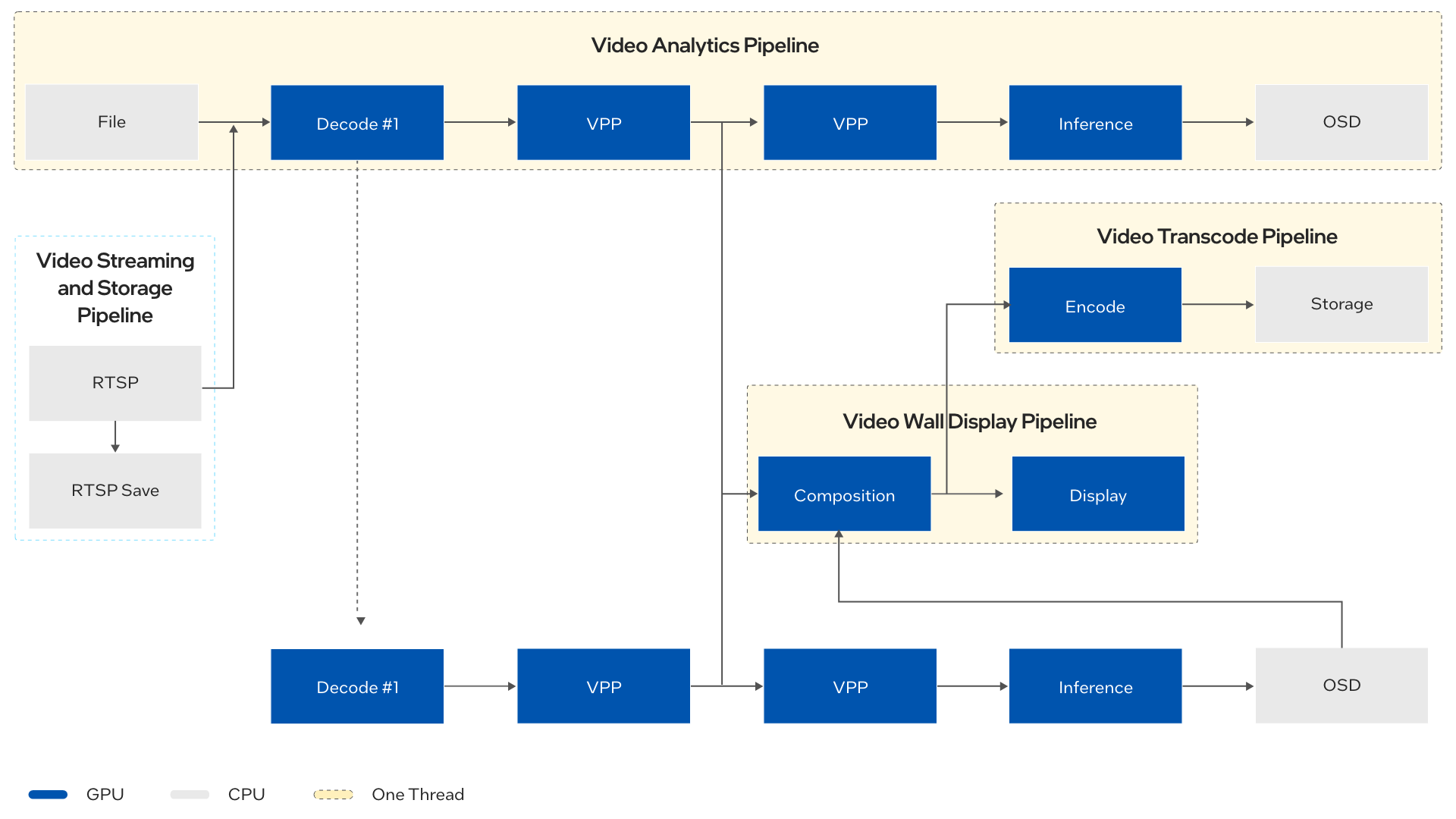

This reference implementation includes a concurrent end-to-end video-based media and AI workload along with the source code and software dependencies.

You can build an end-to-end workload by combining components such as the following:

- Retrieving video stream from network and local storage

- Decoding

- Post-processing

- Transcoding

- Video stream forwarding

- Composition

- Screen display

- AI inferencing

The components are developed with:

- Intel® Distribution of OpenVINO™ toolkit’s inference engine for high performance AI inference on decoded frames.

- Intel® Media SDK for hardware-accelerated codec, transcoding, video processing, and other media workloads.

Using the Par file within the reference implementation, you can easily customize the components above to set up the core pipelines. This can significantly accelerate the evaluation and implementation cycle of edge video appliances (Smart Network Video Recorder, Video Wall Controller, Video Conference, AI Box, etc.) on heterogeneous platforms consisting of CPUs and integrated GPUs from Intel.

By fully utilizing the available computing resources and fixed-function hardware, this reference implementation helps you achieve the best performance on Intel Atom® processors and Intel® Core™ processors with graphics. For example, the reference implementation uses the GPU fixed-function units so that the GPU Execution Units (EUs) stay free for other workloads, such as inference. An optimized system configuration in BIOS/OS, switching the scaling algorithm from AVS to bilinear, and rendering NV12 hardware planes under Linux instead of RGB32 all make far more efficient use of memory bandwidth.

Get Started

NOTE: Before you proceed to the next sections to run workloads for NVR/R&B, Video Conference/MCU or AI Box/Video Analytics, complete the steps below.

Step 1: Install the Reference Implementation

Select Configure & Download to download the reference implementation and then follow the steps below to install it.

- Open a new terminal, go to the download folder and unzip the downloaded reference implementation package:

- Go to the smart_video_and_ai_workload_reference_implementation directory:

- Change permission of the executable edgesoftware file:

- Run the command below to install the Reference Implementation:

- During the installation, you will be prompted for the Product Key. The Product Key is contained in the email you received from Intel confirming your download.

Figure 3: Product key

- When the installation is complete, you see the message “Installation of package complete” and the installation status for each module.

Figure 4: Install Success

- Once the installation has completed successfully, open a new terminal to verify and run the reference implementation.

Step 2: Verify Application Dependency

Follow the steps below to verify the installation.

1. Go to the application directory:

2. Set the Environment Variables:

NOTE: Before executing this reference implementation, run the two commands above to set up the environment variables every time you open a new terminal.

3. Run vainfo. If the installation was successful, you will see the output below:

NOTE: If you do not see the output above, use the command below to check if there are any missing libraries:

NOTE: If any libraries are reported as not found, the installation did not complete. Contact your account manager from Intel and send the output of the command above in an email.

Run the Application to Visualize the Output

1. Go to the application directory. (Skip this step if you are already in the directory.)

2. Set the Intel® Distribution of OpenVINO™ toolkit Environment Variables:

3. Two sample videos are provided with the application for testing: svet_intro.h264 and car_1080p.h264. Both files are in the application directory. They are in .h264 format because they are elementary streams extracted from their base files. Use svet_intro.h264 to test n16_face_detection_1080p.par, and car_1080p.h264 to test n4_vehicle_detect_1080p.par. Learn more about these par files in Step 4.

- Optional: If you are using a custom video file in .mp4 format (for example, classroom.mp4), use the command below to extract the elementary stream from the MP4 file, and then use the par files for inferencing:

- classroom.mp4 is a sample video provided with the package, so you can find it in the application directory. You can also place any other MP4 file (for example, sample.mp4) in the directory and run the command above on it. For classroom.mp4, the extracted elementary stream is named classroom.h264 and is written to the same location as classroom.mp4 (the application directory).

4. From the current working directory, navigate to the inference directory. It contains par files for different models and different numbers of channels.

- This example uses the face detection model with 16 channels for the sample video svet_intro.h264: choose the file named n16_face_detection_1080p.par. If you are using car_1080p.h264, choose the file named n4_vehicle_detect_1080p.par instead. Then continue with Step 5.

5. Open the par file n16_face_detection_1080p.par in any editor (for example, vi or vim). In each line, the text after -i::h264 is the video path for that channel. Replace it with the absolute path of the converted video or elementary stream in every line of the par file, and then follow Step 6.

- This example uses n16_face_detection_1080p.par. Depending on your use case, you can use any of the par files listed there. Make sure you use the absolute path of the elementary stream extracted from the sample video clip in every line so that it is rendered to the channel. In this case, give the absolute path of svet_intro.h264 (for example, /home/user/video/svet_intro.h264) in each line wherever /<path>/<filename>.h264 is found.

6. Modify the video path. In each line, the text after -i::h264 is the video path for that channel. Replace it with the absolute path of the converted video or elementary stream in every line of the selected par file, and then follow Step 7.

NOTE: Make sure to use the absolute path of the elementary stream extracted from the sample video clip in every line so that it is rendered to the channel.

- If you are using svet_intro.h264 and the path of svet_intro.h264 is /home/user/video/ then give the absolute path of svet_intro.h264 as /home/user/video/svet_intro.h264 in each line wherever /<path>/<filename>.h264 is found.

- If you are using car_1080p.h264 and the path of car_1080p.h264 is /home/user/video/ then give the absolute path of car_1080p.h264 as /home/user/video/car_1080p.h264 in each line wherever /<path>/<filename>.h264 is found.

NOTE: Each line which starts with -i::h264 represents a video channel.

- For the par file n16_face_detection_1080p.par you can see 16 lines that start with -i::h264 and for the par file n4_vehicle_detect_1080p.par you can see 4 lines that start with -i::h264. This is the default number of channels supported for each of these par files. Likewise, there is a default number of channels supported for all other par files.

- If you plan to run the application with a custom number of channels that is lower than the default, remove the surplus lines that start with -i::h264, and update the line that starts with -vpp_comp <number_of_channels> and the line that starts with -vpp_comp_only <number_of_channels>, where <number_of_channels> is the number of channels you want to run.
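The par-file edits described in Step 6 can also be scripted. The following is a minimal Python sketch and is not part of the reference implementation. It assumes one session per line, whitespace-separated tokens, and a video path without spaces. The function names set_input_path and limit_channels are hypothetical.

```python
import posixpath


def set_input_path(par_text: str, video_path: str) -> str:
    """Put video_path after -i::h264 on every line that has that option."""
    if not posixpath.isabs(video_path):
        raise ValueError("the par file needs an absolute video path")
    result = []
    for line in par_text.splitlines():
        tokens = line.split()
        if "-i::h264" in tokens[:-1]:
            # The token right after -i::h264 is the video path.
            tokens[tokens.index("-i::h264") + 1] = video_path
            line = " ".join(tokens)
        result.append(line)
    return "\n".join(result)


def limit_channels(par_text: str, channels: int) -> str:
    """Keep the first `channels` input lines and update the composition count."""
    kept = 0
    result = []
    for line in par_text.splitlines():
        tokens = line.split()
        if tokens and tokens[0] == "-i::h264":
            if kept == channels:
                continue  # drop channel lines beyond the requested number
            kept += 1
        elif len(tokens) > 1 and tokens[0] in ("-vpp_comp", "-vpp_comp_only"):
            tokens[1] = str(channels)  # <number_of_channels>
            line = " ".join(tokens)
        result.append(line)
    if kept < channels:
        raise ValueError("par file has fewer channels than requested")
    return "\n".join(result)
```

For example, limit_channels(set_input_path(text, "/home/user/video/svet_intro.h264"), 4) would return a 4-channel variant of the par text held in text. The sketch only performs the edits named above; it does not touch any other option in the file.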

7. There are two ways you can run the application: using -rx11 and using -rdrm-DisplayPort:

- Run the application with -rx11

- Use this method if you want to run video_e2e_sample as a normal user or with an X11 display.

- After making the changes in the par file, move to the last line and replace -rdrm-DisplayPort with -rx11 and save the file. Then follow the instructions from Step 8.

NOTE: This method will pop up a video screen showing the detections. X11 rendering isn’t as efficient as DRM direct rendering.

- Run the application with -rdrm-DisplayPort

NOTE: Before you complete this step, make sure you are not using a remote system. You will switch your system to text mode, so you will not be able to use the graphical desktop. You must be physically in front of the system, because the output of this method is shown on the monitor of the system where you installed the application. If there are active Virtual Network Computing (VNC) sessions, close them first. The -p option of the su command used below keeps the current user's environment variable settings.

- By default, the par files come with this option.

- Make the necessary changes to the chosen par file, make sure the last line contains -rdrm-DisplayPort, and save the file.

- To run with -rdrm-DisplayPort in the par file, you must switch Ubuntu to text mode by pressing Ctrl + Alt + F3.

- After switching to text mode, log in with your username (if prompted).

- Move to the application directory (the path mentioned in Step 1).

- Source the Intel® Distribution of OpenVINO™ toolkit environment as mentioned in Step 2.

- Run su -p to switch to the root user while preserving the environment settings. DRM direct rendering requires root permission and no running X clients.

- If you are using this method, since you are already in the application directory, skip Step 8 and proceed with Step 9.

- You can see output on the monitor.

8. After making the changes in the respective par file, navigate to the application directory and set up the Intel® Media SDK environment.

If you have not run the following command to set the Intel® Media SDK environment for your current bash, run it first before going to the next step.

NOTE: Set up the Intel® Media SDK and OpenVINO™ toolkit environment variables every time you open a new terminal.

9. Execute the following command to run the application using the par file you have edited.

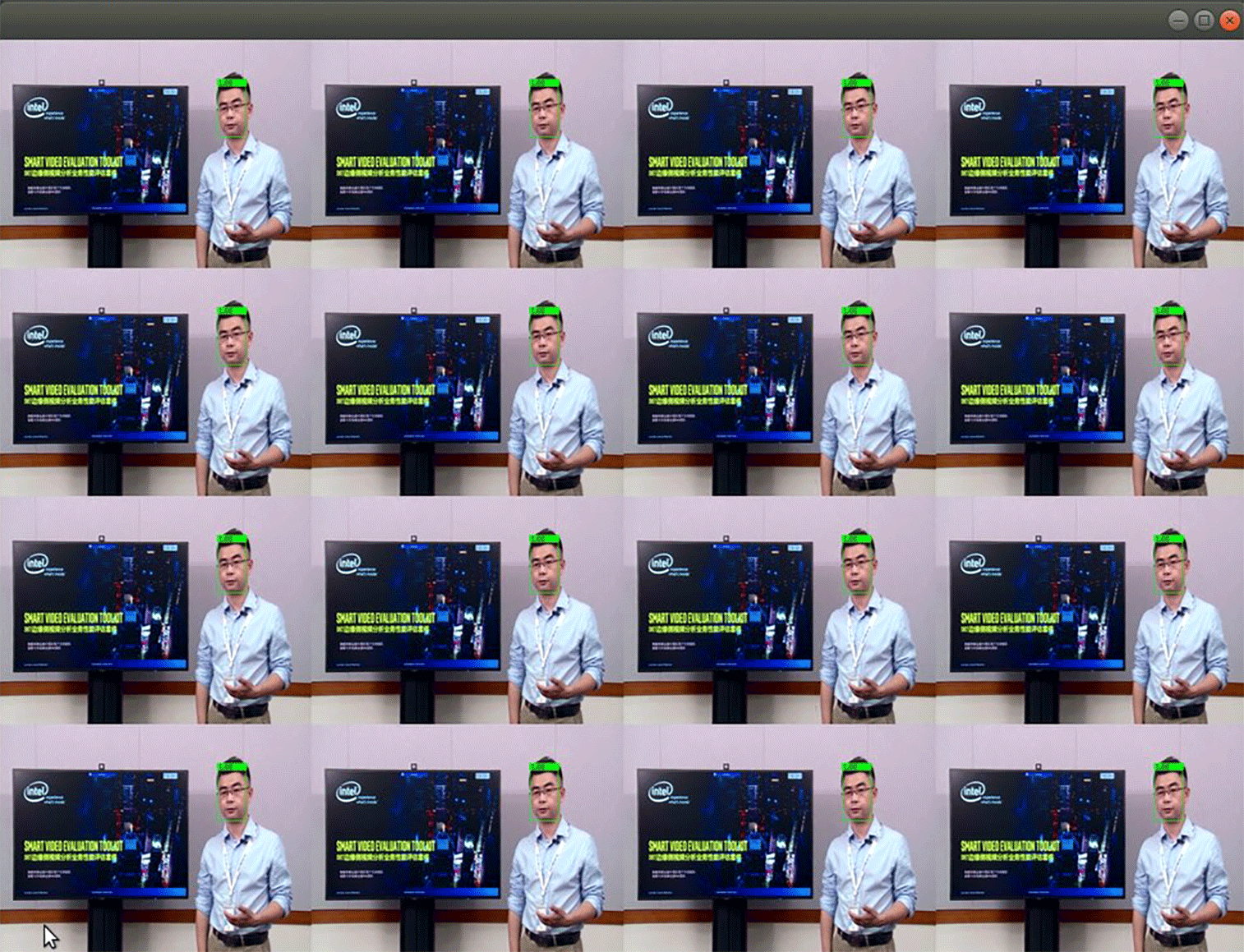

10. If you have edited the par file n16_face_detection_1080p.par for svet_intro.h264, execute the command below to run the application and you will see the output below:

11. If you have edited the par file n4_vehicle_detect_1080p.par for car_1080p.h264, execute the command below to run the application.

NOTE: If you want to stop the application, press Ctrl + c in the bash shell.

To learn more about running other par files, refer to GitHub.

The following four sections provide more examples on how to run the core video workload for specific vertical use cases and performance optimizations features in this reference implementation.

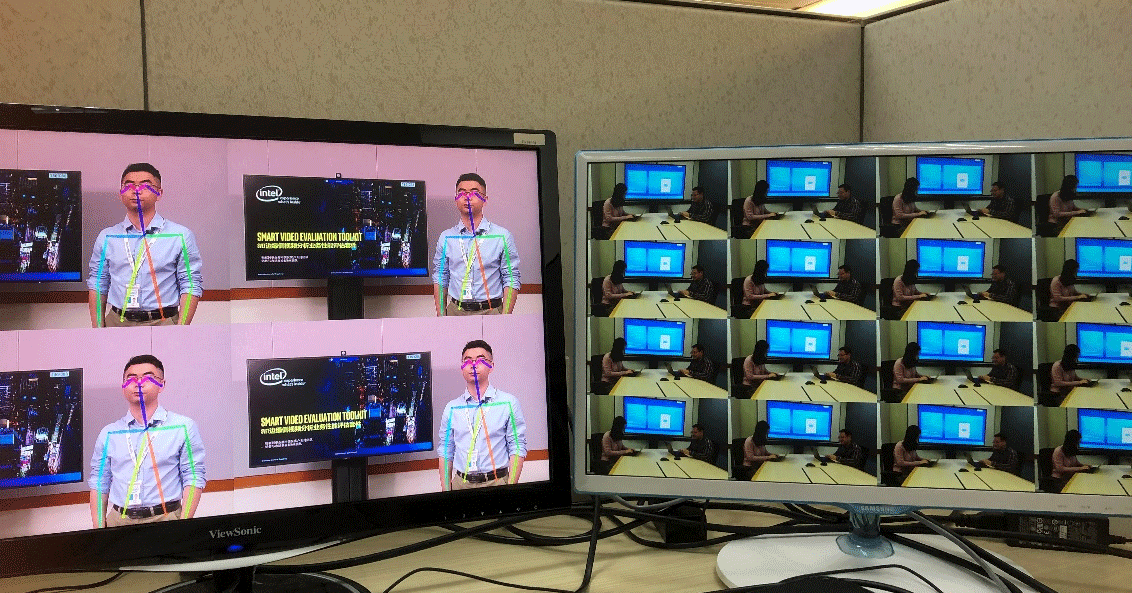

Run NVR/R&B Workload

The reference implementation provides software functionality for multi-channel Real Time Streaming Protocol (RTSP) video streaming, RTSP video streaming and storage, and high-performance multi-channel video decoding, processing, composition and encoding. You can use it to set up core workloads for a variety of NVR and R&B systems.

With the .par file in the reference implementation, you can customize the workload to support a wide variety of NVR workloads, ranging from basic decoding, composition, display and encoding, through multiple-display video walls, to RTSP streaming and pure RTSP storage.

NOTE: Before you proceed to the next step, make sure you have finished the steps in the Get Started section to set up the Intel® Media SDK and OpenVINO™ toolkit environment variables correctly.

Basic Video Decoding, Composition and Display

In the reference implementation directory, par files are available under par_file/basic for you to configure different workloads. Below is part of the par file n16_1080p_videowall.par, which is configured to run 16-channel decoding, composition and display.

The par file contains the following parameters:

-i::h264 video/1080p.h264 -join -hw -async 4 -dec_postproc -o::sink -vpp_comp_dst_x 0 -vpp_comp_dst_y 0 -vpp_comp_dst_w 1920 -vpp_comp_dst_h 1080 -ext_allocator

-vpp_comp_only 1 -w 1920 -h 1080 -async 4 -threads 2 -join -hw -i::source -ext_allocator -ec::rgb4 -rdrm-DisplayPort

- Where -i::h264 | h264 sets the input file and decoder type.

- -join joins the session with other session(s). If there are several transcoding sessions, any number of sessions can be joined. Each session includes decoding and, optionally, preprocessing.

- -hw uses the GPU for hardware-accelerated video decoding, encoding and post-processing.

- -vpp_comp_only <sourcesNum> enables composition from several decoding sessions. The result is shown on screen.

- -ec::nv12 | rgb4 forces the encoder input to use the provided chroma mode.
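To make the structure of a session line easier to inspect, the sketch below splits one par-file line into options and their values. It is a reading aid written in Python, not the parser used by video_e2e_sample. It assumes that every token starting with "-" is an option and that every other token is a value of the preceding option. The name parse_session is hypothetical.

```python
def parse_session(line: str):
    """Split one par-file line into (option, [values]) pairs, in order."""
    options = []
    for token in line.split():
        if token.startswith("-"):
            options.append((token, []))   # a new option begins
        elif options:
            options[-1][1].append(token)  # a value of the latest option
    return options


session = parse_session(
    "-i::h264 video/1080p.h264 -join -hw -async 4 -dec_postproc -o::sink "
    "-vpp_comp_dst_x 0 -vpp_comp_dst_y 0 -vpp_comp_dst_w 1920 "
    "-vpp_comp_dst_h 1080 -ext_allocator"
)
for option, values in session:
    print(option, values)
```

Flags such as -join and -hw come back with an empty value list, while options such as -vpp_comp_dst_w carry a single value.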

Use the command below with the par file to run video_e2e_sample application:

Video Decoding, Composition, Display and One Channel Encoding

Once the video decoding, composition and display workload is in place, some use cases also require an encoding workload. The par file n16_1080p_videowall_encoding.par is configured to run 16-channel video decoding, composition, display and one-channel encoding.

The par file contains the following parameters:

-vpp_comp 16 -w 1920 -h 1080 -async 4 -threads 2 -join -hw -threads 3 -i::source -ext_allocator -ec::nv12 -o::h264 comp_out_1080p.h264

-vpp_comp_only 16 -w 1920 -h 1080 -async 4 -threads 2 -join -hw -i::source -ext_allocator -ec::rgb4 -rdrm-DisplayPort

- Where -o::h264 | h264 sets the output file and encoder type.

- In this example, the encoded output is written to comp_out_1080p.h264.

Use the command below to run video_e2e_sample application:

Video Path and RTSP Streaming

Apart from the workload examples above for video decoding, composition, display and encoding, you might need different input sources for your pipeline. By default, build_and_install.sh downloads test video clips into the video folder. If you want to use your own test clip, modify the video path (the text following -i::h264) in every line of the par file.

- Use par_file/inference/n16_1080p_face_detect_30fps.par as an example. The video path is the text that follows -i::h264: -i::h264 ./video/1080p.h264 -join -hw -async 4 -dec_postproc -o::sink -vpp_comp_dst_x 480 -vpp_comp_dst_y 540 -vpp_comp_dst_w 480 -vpp_comp_dst_h 270 -ext_allocator -infer::fd ./model -fps 30

- Or, if you would like to use an RTSP video stream instead of a local video file, modify the par file and replace the local video file path with the RTSP URL: -i::h264 rtsp://192.168.0.8:1554/simu0000 -join -hw -async 4 -dec_postproc -o::sink -vpp_comp_dst_x 0 -vpp_comp_dst_y 0 -vpp_comp_dst_w 480 -vpp_comp_dst_h 270 -ext_allocator -infer::fd ./model

Pure RTSP Streaming and Storage Mode

For some use cases, only RTSP streaming and storage is needed. When there are only -i and -rtsp_save parameters in the par file, the session will only save the specified RTSP stream to local file instead of running decode, inference or display.

Use the command below to run only RTSP streaming and storage:

NOTE: Such sessions must be put into one separate par file. If you’d like to run RTSP stream storage sessions together with other decoding and inference sessions, you can run with two par files. See the command below as an example:

Pure Decoding Mode

By using the option -fake_sink, you can run concurrent video decoding with a fake sink instead of display or encoding. In this mode, composition of the decoded frames or inference results is disabled. Refer to the example par files n16_1080p_decode_fakesink.par under par_file/misc and n16_1080p_face_detection_fakesink.par under par_file/inference. The pure decoding mode has only a decoding workload. As an alternative to -fake_sink, you can also use -o::raw /dev/null to specify the pure decoding mode.

The two options for pure decoding are listed in the table below. For details, refer to n16_1080p_decode_fakesink.par and n64_1080p_decode_fakesink.par.

| Option | Description |
| --- | --- |
| -o::raw /dev/null | When -o::raw is used with the output file name /dev/null, the application drops the decoded output frames instead of encoding them or saving them to a local file. Use it for pure video decoding tests. |
| -fake_sink <number of sources> | Uses a fake sink instead of display (-vpp_comp_only) or encoding (-vpp_comp). The fake sink does not compose the sources. The number of sources must equal the number of decoding sessions. See n16_1080p_decode_fakesink.par and n16_1080p_infer_fd_fakesink.par for examples. The -o option must be used together with this option, but it does not generate an output file. |
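The rule that the -fake_sink source count must equal the number of decoding sessions can be checked before a run. The Python sketch below is one way to do that and is not part of the reference implementation. It assumes that a decoding session is a line whose first token starts with -i:: (other than -i::source) and that -fake_sink is followed directly by its count. Both function names are hypothetical.

```python
def fake_sink_counts(par_text: str):
    """Return (declared -fake_sink sources, number of decoding sessions)."""
    declared = None
    decoding_sessions = 0
    for line in par_text.splitlines():
        tokens = line.split()
        if not tokens:
            continue
        # Assumption: decoding sessions start with -i::<codec>, while
        # sink lines take their input from -i::source.
        if tokens[0].startswith("-i::") and tokens[0] != "-i::source":
            decoding_sessions += 1
        if "-fake_sink" in tokens[:-1]:
            declared = int(tokens[tokens.index("-fake_sink") + 1])
    return declared, decoding_sessions


def fake_sink_is_consistent(par_text: str) -> bool:
    """True when a -fake_sink count exists and matches the decoding sessions."""
    declared, decoding_sessions = fake_sink_counts(par_text)
    return declared is not None and declared == decoding_sessions
```

A par file without -fake_sink makes fake_sink_is_consistent return False, so the check is only meaningful for pure decoding par files.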

Multiple Display Support

Below is an example to run 16-channel 1080p decode sessions on one display and run 16-channel 1080p decode and inference sessions on another display.

- If the two par files specify different display resolutions, for example 1080p and 4K, and one 1080p monitor and one 4K monitor are connected to the device, this command line can fail because the 4K par file selects the 1080p monitor. In this case, try switching the order of the par files passed to video_e2e_sample.

- In the current implementation, -rdrm-XXXX options are ignored. The sample application chooses the first unused display enumerated from the DRM for each par file. The order follows the CRTC id shown in /sys/kernel/debug/dri/0/i915_display_info. A display with a smaller CRTC id is enumerated earlier.

- Generally, the first par file in the command gets the display with the smallest CRTC id. However, because a separate thread is created for each par file, the actual order in which displays are assigned may not strictly match the order of the par files in the command.

Run the command below to run video_e2e_sample application:

Run Video Conference MCU Workload

In Multipoint Control Unit (MCU) mode, the reference implementation sample application can be used to test multiple-channel video decoding, video composition and video encoding at the same time. This mode is often used in video conference use cases.

NOTE: Before you proceed to the next step, make sure you have finished the steps in the Get Started section to set up the Intel® Media SDK and OpenVINO™ toolkit environment variables correctly.

Configure MCU mode

As an example, the command below can be used to test 8-channel 1080p AVC decode, 8-channel 1080p composition and 8-channel 1080p AVC encoding workload:

Run AI Box/Video Analytics Workload

This reference implementation helps you set up an optimized video analytics pipeline for AI Box use cases.

Based on the general multimedia framework and Intel® Distribution of OpenVINO™ toolkit Inference Engine at the backend, it ensures pipeline interoperability and optimized performance across Intel® architecture: CPU, iGPU and Intel® Movidius™ VPU.

With the .par file in this reference implementation, you can customize the workload and AI model for inferencing to support a wide variety of AI Box or Video Analytics use cases, such as face detection, object tracking, vehicle attribute detection, etc.

NOTE: Before you proceed to the next step, make sure you have finished the steps in the Get Started section to set up the Intel® Media SDK and OpenVINO™ toolkit environment variables correctly.

16-Channel Video Streaming, Decoding, Face Detection, Composition and Display

Use the command below to run video_e2e_sample application:

- The model used for face detection inference is specified by -infer::fd ./model in the par file. ./model is the directory that stores the Intermediate Representation (IR) files of face detection model. The IR is the model format used for OpenVINO™ toolkit Inference Engine.

- The first time the face detection models are loaded to the GPU, loading is slow, and you might need to wait for the video to appear on the display. With cl_cache enabled, subsequent runs load the models much faster, in about 10 seconds on Coffee Lake (CFL).

- If you want to stop the application, press Ctrl + c in the bash shell.

- If you want to play 200 frames in each decoding session, append -n 200 to the parameter lines starting with -i in the par files.

- By default, the pipeline runs as fast as it can. If you want to limit the FPS to a certain number, add -fps FPS_number to every decoding session, that is, to every line that starts with -i in the par files. Refer to par_file/inference/n16_1080p_face_detect_30fps.par.
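Appending -n or -fps to every decoding session by hand is repetitive for 16-channel par files. The following Python sketch shows one way to script it. It is an illustration rather than a tool shipped with the package, and it assumes that decoding session lines start with -i:: and that sink lines do not. The name add_session_option is hypothetical.

```python
def add_session_option(par_text: str, option: str, value) -> str:
    """Append `option value` to each decoding session line that lacks it."""
    result = []
    for line in par_text.splitlines():
        tokens = line.split()
        is_decoding_session = bool(tokens) and tokens[0].startswith("-i::")
        if is_decoding_session and option not in tokens:
            line = f"{line.rstrip()} {option} {value}"
        result.append(line)
    return "\n".join(result)
```

For example, add_session_option(text, "-fps", 30) limits every decoding session in text to 30 FPS, and add_session_option(text, "-n", 200) plays 200 frames per session. Lines that already contain the option are left unchanged.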

4-Channel Video Decoding, Vehicle and Vehicle Attributes Detection, Composition, Encode and Display

Use the command below to run video_e2e_sample application:

The models for vehicle and vehicle attributes detection inference are specified by -infer::vd ./model in the par file. ./model is the directory that stores the IR files of vehicle and vehicle attributes detection models.

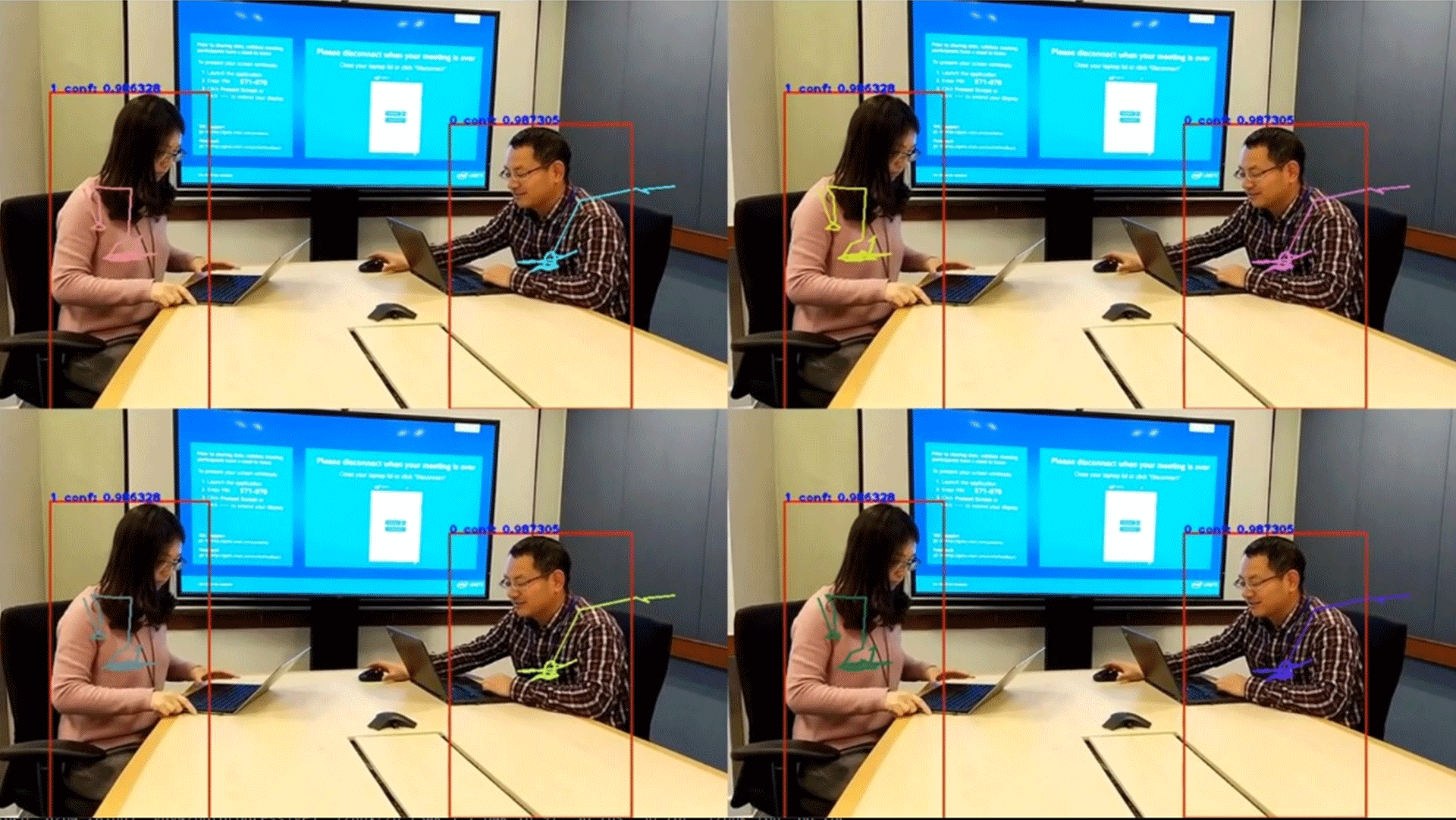

4-Channel Video Decoding, Multi Objects Detection/Tracking, Composition and Display

Use the command below to run video_e2e_sample application:

The models for object detection and motion tracking inference are specified by -infer::mot ./model in the par file. ./model is the directory that stores the IR files of object detection and motion tracking models.

2-Channel Video Decoding, Yolov3 Detection, Composition, and Display

To convert Yolov3 model with OpenVINO™ toolkit, refer to https://docs.openvinotoolkit.org/latest/openvino_docs_MO_DG_prepare_model_convert_model_tf_specific_Convert_YOLO_From_Tensorflow.html.

Edit par_file/inference/n2_yolo_h264.par and replace yolofp16/yolo_v3.xml with the yolov3 IR file path in your system.

Use the command below to run video_e2e_sample application:

Optimization Tips

Offline Inference Mode

The results of inference are rendered to the composition by default. To disable this, add the parameter -infer::offline after -infer::fd ./model. The inference results are then not rendered.
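As a small illustration of where the parameter goes, the Python sketch below inserts -infer::offline directly after -infer::fd and its model directory in one session line. It is a hedged example, not part of the package, and the name make_inference_offline is hypothetical.

```python
def make_inference_offline(line: str) -> str:
    """Insert -infer::offline right after '-infer::fd <model dir>'."""
    tokens = line.split()
    if "-infer::offline" in tokens or "-infer::fd" not in tokens[:-1]:
        return line  # already offline, or no face detection option present
    position = tokens.index("-infer::fd") + 2  # skip the flag and its directory
    tokens.insert(position, "-infer::offline")
    return " ".join(tokens)
```

Applied to a line that ends in -infer::fd ./model -fps 30, the result ends in -infer::fd ./model -infer::offline -fps 30.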

Shared Inference Network Instance

Starting from R3, the sessions that use the same network IR files and the same inference device share one inference network instance. The benefit is that when GPU plugin is used, the network loading time decreases by 93% for 16-channel inference.
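The sharing idea can be pictured as a cache keyed by IR path and device. The Python sketch below is purely conceptual: the sample application is written in C++ and its actual code is not shown here. The class SharedNetworkCache and its loader argument are hypothetical stand-ins for whatever loads a network onto a device.

```python
class SharedNetworkCache:
    """Hand out one network instance per (IR path, device) pair."""

    def __init__(self, loader):
        self._loader = loader   # callable(ir_path, device) -> network instance
        self._instances = {}

    def get(self, ir_path: str, device: str):
        key = (ir_path, device)
        if key not in self._instances:
            # Only the first session with this key pays the loading cost.
            self._instances[key] = self._loader(ir_path, device)
        return self._instances[key]
```

With such a cache, 16 sessions that request ("./model", "GPU") trigger a single load, while a session that targets a different device gets its own instance.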

Configure the Inference Target Device, Inference Interval and Maximum Object Number

By default, the GPU is used as the inference target device. You can also use the option -infer::device HDDL to specify HDDL as the target device, or -infer::device CPU to specify the CPU.

In one par file, you can use a different device for each session.

If HDDL is used as the inference target device, make sure the HDDL device has been set up successfully. See n4_vehicle_detect_hddl_1080p.par for inference.

- The option -infer::interval sets the distance between two consecutive inference frames. For example, -infer::interval 3 means frames 1, 4, 7, 10… are sent to the inference device and the other frames are skipped. For face detection and human pose estimation, the default interval is 6. For vehicle detection, the default interval is 1, which means inference runs on every frame.

- The option -infer::max_detect sets the maximum number of detected objects passed on for further classification or labeling. By default, the number of detected objects is not limited.
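The interval rule above can be written out as a short Python sketch. It only reproduces the frame arithmetic described in the text (1-based frame numbers, starting with frame 1). The function name frames_sent_to_inference is hypothetical.

```python
def frames_sent_to_inference(total_frames: int, interval: int):
    """List the 1-based frame numbers that reach the inference device."""
    if interval < 1:
        raise ValueError("interval must be at least 1")
    return list(range(1, total_frames + 1, interval))


print(frames_sent_to_inference(10, 3))  # [1, 4, 7, 10]
print(frames_sent_to_inference(5, 1))   # [1, 2, 3, 4, 5]
```

With the default interval of 6, the same function gives frames 1, 7, 13 for a 13-frame clip.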

Refer to example par file n1_infer_options.par.

Performance Optimization

Use Fixed Function Unit Inside GPU to do VPP

If system performance is limited by the computation resources available for VPP tasks, you can add -dec_postproc to run VPP on the fixed function units inside the GPU instead of on the EUs. This frees the EUs for other critical tasks such as inference and other codec workloads.

Modify the Par file and run VPP using -dec_postproc on fixed function unit inside GPU instead of EU.

Use Fixed Function Unit Inside GPU to do Encoding

Below is an example to run encoding sessions on fixed function unit inside GPU. Using fixed function units to run encoding could offload EU in GPU for other critical tasks like inference and other codec workloads.

Modify the par file and run encoding using -qsv-ff on fixed function unit inside GPU instead of EU.

Use Hybrid Rendering with Both LibDRM and XSERVER

In the default display architecture, this reference implementation uses libDRM to render the video directly to display. Therefore, it needs to act as master of the DRM display, which is not allowed when X client is running.

However, in some configuration settings, hybrid rendering mode with both libDRM and XSERVER operating simultaneously is provided. If your corporation has an assigned FAE from Intel, contact your FAE for special requests for customized configuration for hybrid rendering.

Disable the Power Management of GPU to Increase Performance

The GPU has its own power management. In use cases with many small workloads, such as QCIF decoding, the GPU enters power save mode between these workloads, which introduces a lot of latency. One remedy is to disable the power management of the GPU. This can be controlled in the BIOS, but the procedure may differ between BIOS versions. Contact your FAE.

If this occurs, but full threading on GPU usage is still desired, contact your FAE for help on special requests for customized OS and BIOS setup.

Summary and Next Steps

This reference implementation supports core video analytics workloads for digital surveillance and retail use cases.

Learn More

To continue learning, see the following guides and software resources:

- Smart Video and AI Workload User Guide

- Video Acceleration Development Guide on Intel Platforms is a comprehensive development guide for this reference implementation to help you further debug and optimize. It summarizes common issues from customer engagements in areas such as media, display, and system-level performance optimization.

Known Issues

OpenVINO™ Toolkit Runtime Installation Error

This reference implementation uses OpenVINO™ toolkit 2021.4. If any other version of the OpenVINO™ toolkit is installed on the system where you are installing this reference implementation, you might get a Failed to verify openvino runtime error. This is caused by the OpenVINO™ toolkit version mismatch.

Follow the instructions below for the installation to continue:

- Make sure you are using a fresh machine, where there is no OpenVINO™ toolkit installed.

- If a version of OpenVINO™ toolkit is installed other than OpenVINO 2021.4, uninstall the other version and execute rm -rf $HOME/Downloads/YOLOv3 to remove the dependency of the older OpenVINO™ toolkit.

- Move to the application download folder and execute the installation command below.

If you follow the above steps correctly, the installation will be successful.

Failed to Install OpenCL NEO Driver Error

If the OpenCL NEO driver installation fails with an Installation failed: opencl neo drivers error, the cause is typically unstable access to GitHub from the PRC network. Install the driver manually by running the command below, repeating it until it succeeds:

Support Forum

If you're unable to resolve your issues, contact the Support Forum.