Highlights

Researchers from the Intel Visual Compute & Graphics (VCG) Lab will present papers at SIGGRAPH and High-Performance Graphics (HPG) 2024, held July 26 through August 1 in Denver, Colorado, US. They will explain how N-dimensional Gaussian mixtures can fit high-dimensional functions, making scene dynamics, variable lighting, and more possible within the same efficient framework. At HPG and in a SIGGRAPH course talk, the researchers will also present a concurrent binary tree implementation for extremely large-scale tessellated scenes, enabling bigger worlds for gamers to explore.

The VCG Lab's mission is to drive Intel's graphics and GPU ecosystem by guiding GPU hardware design and by providing new technologies and building blocks for the broader ecosystem. Over the last year, the researchers have investigated challenging problems in graphics, such as optimization for content creation and more efficient geometric and material representations, and have opened Intel® technologies and libraries to the broader ecosystem of graphics and AI practitioners.

- Researchers from the VCG Lab will present papers and talks at SIGGRAPH and HPG.

- The VCG Lab shares progress and contributions to the graphics community, including better representations and rendering techniques, as well as tools for graphics and 3D generative AI (GenAI).

- The lab also provides updates on Intel's open ecosystem of libraries that help the graphics and machine learning communities achieve higher-fidelity visuals in 3D experiences.

SIGGRAPH and HPG Highlights

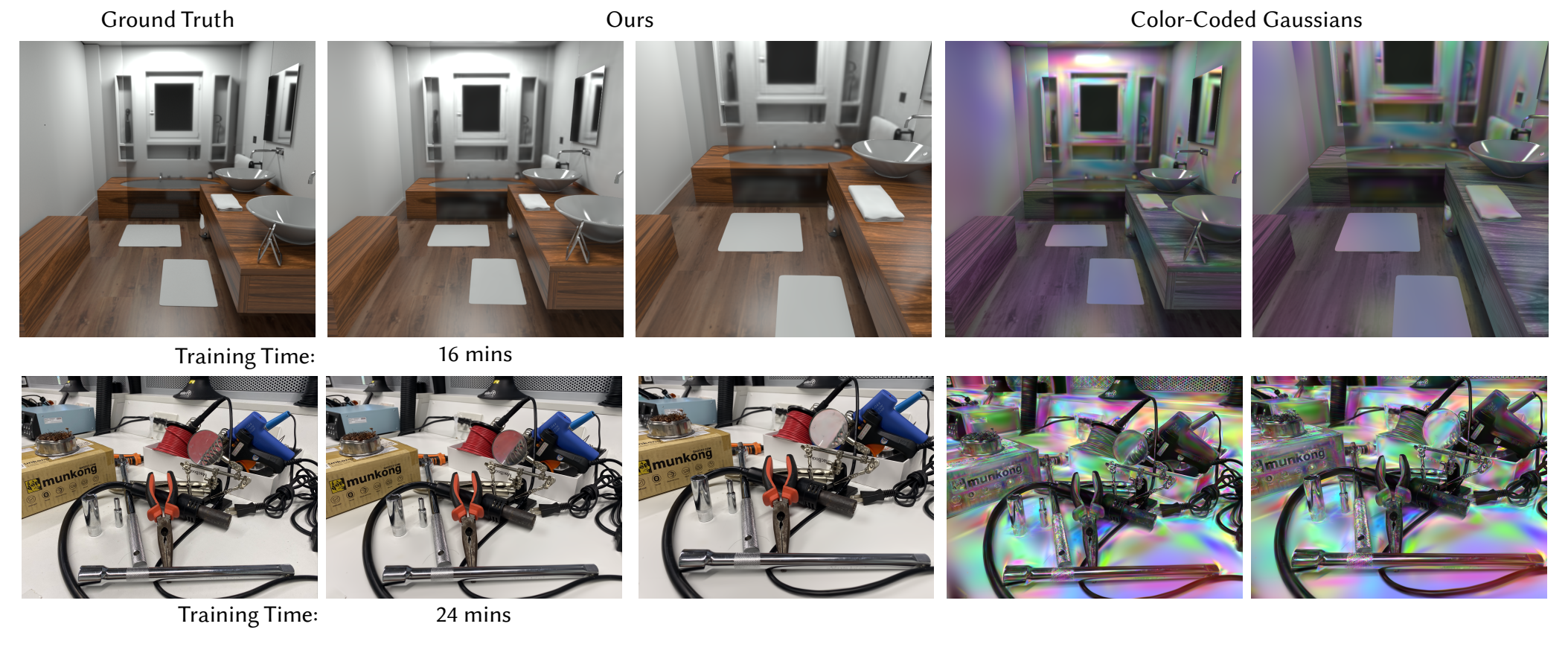

Novel, rich scene and material representations are driving the next wave of asset generation and scanning methods. Neural radiance fields (NeRFs) and, more recently, Gaussian splats have proven to be powerful and efficient representations that can close loops such as sensing-to-pixels and generation-to-pixels. Gaussian splats, a representation for efficient 3D reconstruction, are convenient and amenable to localized optimization, yet they remain too static for production use: some fundamental variables are baked into them, which limits variation in lighting, materials, scene dynamics, and animation. The team's work, N-Dimensional Gaussians for Fitting of High Dimensional Functions, extends Gaussian splats to overcome these limitations. Stavros Diolatzis (Intel) will present it with an interactive demo at SIGGRAPH 2024; his coauthors are Tobias Zirr and Alexandr Kuznetsov (Intel), Georgios Kopanas (Inria and Université Côte d'Azur), and Anton Kaplanyan (Intel).

Figure 1. N-dimensional Gaussians for fitting high-dimensional functions.

In this work, N-dimensional Gaussian mixtures are used to fit high-dimensional functions, making scene dynamics, variable lighting, global illumination, and complex anisotropic effects possible within the same efficient framework. The method trains orders of magnitude faster without sacrificing the quality achieved by implicit methods. The presentation and the demo will be part of the SIGGRAPH Radiance Field Processing session.
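The post does not say how an N-dimensional Gaussian becomes something renderable. One standard operation such a method can build on is conditioning: fix the extra dimensions (for example, time, view direction, or light position) to their current values and obtain a lower-dimensional Gaussian over the remaining dimensions, with the component's mixture weight rescaled by the marginal density of the fixed values. The Python/NumPy sketch below shows only this textbook conditioning step, under that assumption; the function names are hypothetical and this is not the paper's implementation.

```python
import numpy as np


def condition_gaussian(mean, cov, idx_a, idx_b, x_b):
    """Condition an N-D Gaussian on dimensions idx_b taking the values x_b.

    Returns (mean_a, cov_a, density_b): the conditional mean and covariance
    over the remaining dimensions idx_a, plus the marginal density of x_b,
    which can rescale the component's mixture weight.
    """
    mean = np.asarray(mean, dtype=float)
    cov = np.asarray(cov, dtype=float)
    x_b = np.asarray(x_b, dtype=float)
    mu_a, mu_b = mean[idx_a], mean[idx_b]
    s_aa = cov[np.ix_(idx_a, idx_a)]
    s_ab = cov[np.ix_(idx_a, idx_b)]
    s_bb = cov[np.ix_(idx_b, idx_b)]
    diff = x_b - mu_b
    # gain = S_ab S_bb^{-1}, computed with a solve instead of an explicit inverse.
    gain = np.linalg.solve(s_bb, s_ab.T).T
    mean_a = mu_a + gain @ diff
    cov_a = s_aa - gain @ s_ab.T
    # Marginal density N(x_b; mu_b, S_bb).
    k = len(idx_b)
    maha = diff @ np.linalg.solve(s_bb, diff)
    norm = np.sqrt((2.0 * np.pi) ** k * np.linalg.det(s_bb))
    density_b = np.exp(-0.5 * maha) / norm
    return mean_a, cov_a, density_b


def condition_mixture(weights, means, covs, idx_a, idx_b, x_b):
    """Condition every component of a Gaussian mixture on the same x_b.

    Returns a list of (weight, mean_a, cov_a) for the lower-dimensional mixture.
    """
    result = []
    for w, m, c in zip(weights, means, covs):
        mean_a, cov_a, density_b = condition_gaussian(m, c, idx_a, idx_b, x_b)
        result.append((w * density_b, mean_a, cov_a))
    return result
```

For example, conditioning a 2D Gaussian with mean (0, 0) and covariance [[1, 0.5], [0.5, 1]] on its second coordinate being 1 yields a 1D Gaussian with mean 0.5 and variance 0.75.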

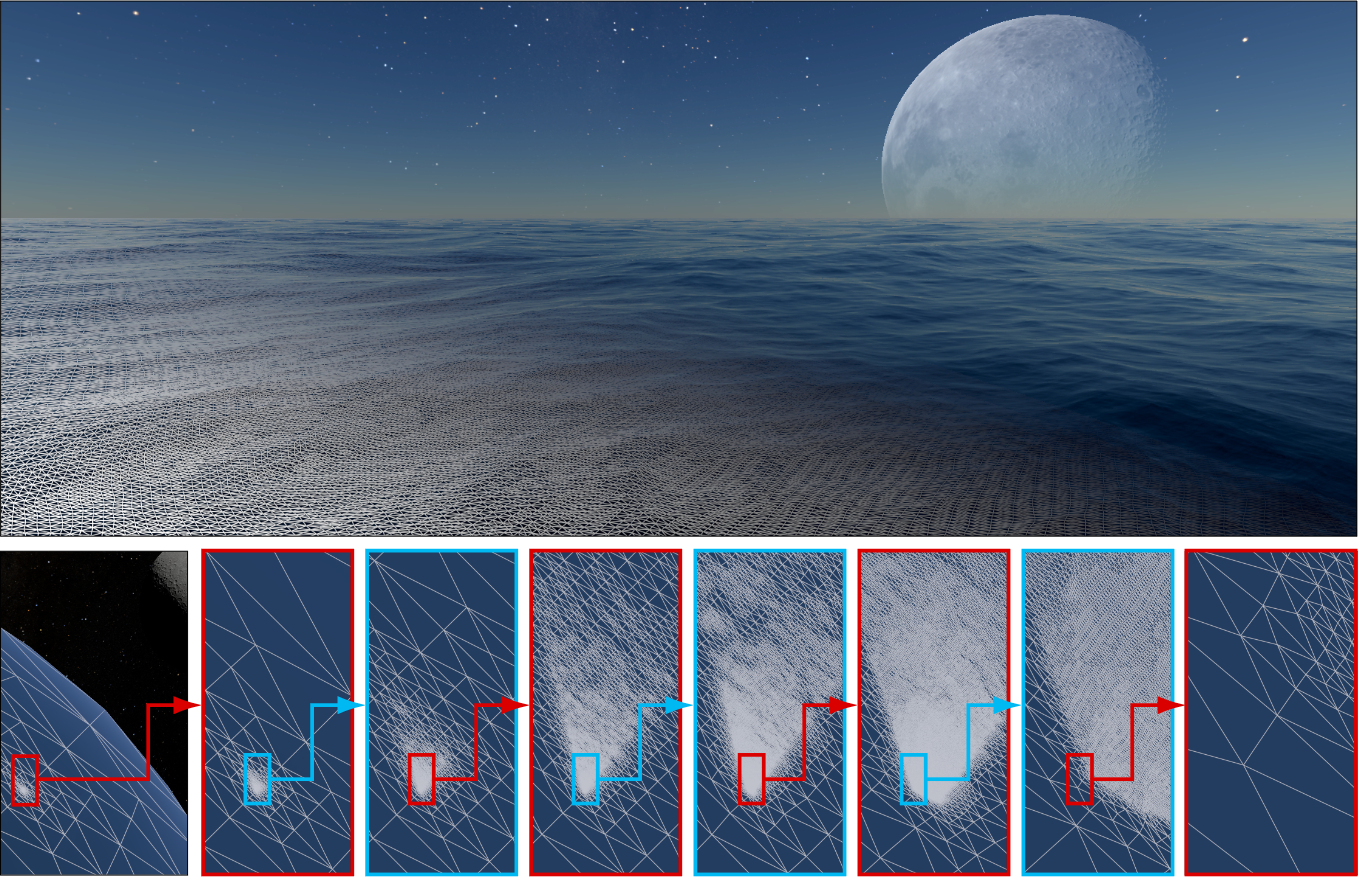

Another paper to be presented at HPG 2024, Concurrent Binary Trees for Large-Scale Game Components, introduces a concurrent binary tree implementation suitable for extremely large-scale tessellated scenes, such as planet-scale worlds, enabling bigger and better worlds for players to explore. Intel's Anis Benyoub and Jonathan Dupuy will also expand on this topic in a talk at the SIGGRAPH course Advances in Real-Time Rendering in Games, Part II, which covers state-of-the-art, proven techniques for fast, real-time, and interactive rendering of complex worlds.

Figure 2. Concurrent binary trees for large-scale game components.
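At their core, concurrent binary trees as described in prior work pair a bitfield, whose bits mark the leaves in use, with a sum-reduction tree stored as an implicit heap. The partial sums let a thread locate the k-th set bit in O(depth) steps without scanning the whole bitfield. The serial Python sketch below illustrates only that idea; the class and method names are hypothetical, and the HPG paper's GPU memory layout and concurrent update scheme are not reproduced here.

```python
class SumReductionTree:
    """A bitfield of 2**depth leaves with a heap of partial sums above it.

    Heap layout: node 1 is the root, the children of node k are 2k and 2k+1,
    and the leaf bits occupy indices [2**depth, 2**(depth+1)).
    """

    def __init__(self, depth):
        self.n = 1 << depth
        self.heap = [0] * (2 * self.n)

    def set_bit(self, i, value):
        """Set leaf bit i, then refresh the partial sums on its path to the root."""
        k = self.n + i
        self.heap[k] = 1 if value else 0
        k //= 2
        while k >= 1:
            self.heap[k] = self.heap[2 * k] + self.heap[2 * k + 1]
            k //= 2

    def count(self):
        """Number of set bits, read directly from the root."""
        return self.heap[1]

    def find_kth_set_bit(self, k):
        """Return the index of the k-th (0-based) set bit in O(depth) steps."""
        node = 1
        while node < self.n:
            left = self.heap[2 * node]
            if k < left:
                node = 2 * node          # the k-th set bit is in the left subtree
            else:
                k -= left                # skip the set bits of the left subtree
                node = 2 * node + 1
        return node - self.n
```

In a GPU setting, threads typically set or clear many bits in parallel, and the partial sums are then rebuilt with one parallel reduction pass per tree level instead of the per-bit upward walk used here for clarity.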

Graphics Building Blocks for Creators and GenAI

As production costs keep shifting toward creating high-quality assets, it becomes increasingly important for the ecosystem to have efficient tools for creating and generating assets. To this end, we are both advancing state-of-the-art methods and providing existing high-quality tools to the community.

More Accessible Differentiable Rendering

One building block for content creation is differentiable rendering. It inverts the image rendering process to answer the question: What do we need to change in the scene to match the reference image? Differentiable rendering is widely used in asset scanning, optimization, and generation. The challenging part is making existing renderers differentiable. The work Transform a Nondifferentiable Rasterizer into a Differentiable Rasterizer with Stochastic Gradient Estimation shows how to drastically simplify the computations in the differentiable rendering machinery, making it applicable even to large black-box renderers and game engines that were not engineered with differentiable rendering in mind. In this work, which won both the Best Presentation award and the second-place Best Paper award at Interactive 3D Graphics and Games (I3D) 2024, Intel's Thomas Deliot, Eric Heitz, and Laurent Belcour show how to transform any existing rendering engine into a differentiable one with only a few compute shaders, amounting to minimal engineering effort and no external dependencies.

Figure 3. Transform a nondifferentiable rasterizer into a differentiable rasterizer with stochastic gradient estimation.
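The post does not detail the paper's estimator. To illustrate the general idea of estimating gradients from forward renders alone, the sketch below swaps in a classic simultaneous-perturbation (SPSA-style) finite-difference estimator, which is not the authors' method: all parameters are perturbed at once by random ±1 signs, the black-box loss is evaluated twice, and the difference is projected back onto the perturbation. `toy_render` is a hypothetical stand-in for a renderer that is only ever called forward.

```python
import numpy as np


def spsa_gradient(loss, theta, eps=1e-2, num_samples=16, rng=None):
    """Estimate the gradient of `loss` at `theta` from forward evaluations only."""
    rng = np.random.default_rng() if rng is None else rng
    theta = np.asarray(theta, dtype=float)
    grad = np.zeros_like(theta)
    for _ in range(num_samples):
        # Perturb every parameter at once by a random +/-1 sign.
        delta = rng.choice([-1.0, 1.0], size=theta.shape)
        diff = loss(theta + eps * delta) - loss(theta - eps * delta)
        # Since each delta_i is +/-1, dividing by delta_i equals multiplying by it.
        grad += diff / (2.0 * eps) * delta
    return grad / num_samples


XS = np.linspace(0.0, 1.0, 32)


def toy_render(theta):
    """Hypothetical stand-in for a non-differentiable renderer: a 1D 'image'."""
    return theta[0] * XS + theta[1]


reference = toy_render(np.array([0.7, 0.2]))


def loss(theta):
    """Mean squared error between the rendered and reference images."""
    return float(np.mean((toy_render(theta) - reference) ** 2))


# Plain gradient descent driven only by the stochastic estimates.
rng = np.random.default_rng(0)
theta = np.zeros(2)
for _ in range(1000):
    theta -= 0.2 * spsa_gradient(loss, theta, rng=rng)
```

After the loop, `theta` ends up close to the reference parameters (0.7, 0.2). Each estimate costs 2 × `num_samples` forward renders however many parameters there are, which is what makes this family of estimators attractive for black-box renderers; the price is noise in each estimate.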

Fast and Accessible Ray Tracing in Python* with Intel® Embree Coming Soon

Another challenge for content creation lies deeper in the rendering process: accelerating the fundamental ray tracing operation. For example, several recent works on accelerating 3D representations rely on various acceleration structures and complex PyTorch* code. For ray tracing, we have Intel Embree, an Oscar*-winning, thoroughly tested library that is highly optimized for ray tracing, and we are happy to expose it as a building block to the community of machine learning practitioners.

A new Python* API for Intel® Embree is under development. It will allow AI practitioners and the wider Python ecosystem to use the high-performance ray tracing capabilities of Intel Embree directly in their Python-based AI research and development. Key abstractions were also added to the low-level Intel Embree API to ensure good performance by limiting transitions between Python and C/C++. For more details, see the development branch on GitHub*.

The Python API for Intel Embree will be released in the second half of 2024. It will enable efficient ray tracing in the Python and PyTorch ecosystem for geometric optimization, differentiable rendering, and 3D GenAI training.
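The Embree Python API is still in development and is not shown in this post, so the sketch below does not use Embree at all. It only illustrates, in plain NumPy, the fundamental operation Embree accelerates: intersecting a batch of rays with a triangle, here with the standard Möller–Trumbore test. Handling rays as whole arrays per call echoes the idea of limiting Python-to-C/C++ transitions; unlike Embree, this brute-force version has no acceleration structure. The function name is hypothetical.

```python
import numpy as np


def intersect_rays_triangle(origins, dirs, v0, v1, v2, eps=1e-9):
    """Möller–Trumbore test of a batch of rays against a single triangle.

    origins, dirs: arrays of shape (R, 3); v0, v1, v2: triangle vertices (3,).
    Returns an array of shape (R,) with the hit distance t, or np.inf on a miss.
    """
    origins = np.asarray(origins, dtype=float)
    dirs = np.asarray(dirs, dtype=float)
    v0, v1, v2 = (np.asarray(v, dtype=float) for v in (v0, v1, v2))
    e1, e2 = v1 - v0, v2 - v0
    p = np.cross(dirs, e2)
    det = p @ e1
    ok = np.abs(det) > eps                        # False for rays parallel to the plane
    inv_det = np.where(ok, 1.0 / np.where(ok, det, 1.0), 0.0)
    s = origins - v0
    u = np.einsum("ij,ij->i", s, p) * inv_det     # first barycentric coordinate
    q = np.cross(s, e1)
    v = np.einsum("ij,ij->i", dirs, q) * inv_det  # second barycentric coordinate
    t = (q @ e2) * inv_det                        # distance along each ray
    hit = ok & (u >= 0.0) & (v >= 0.0) & (u + v <= 1.0) & (t > eps)
    return np.where(hit, t, np.inf)


hits = intersect_rays_triangle(
    origins=[[0.2, 0.2, 1.0], [2.0, 2.0, 1.0]],
    dirs=[[0.0, 0.0, -1.0], [0.0, 0.0, -1.0]],
    v0=[0.0, 0.0, 0.0], v1=[1.0, 0.0, 0.0], v2=[0.0, 1.0, 0.0],
)
# hits[0] is 1.0 (the first ray hits the triangle), hits[1] is inf (a miss).
```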

Recent Papers from the Visual Compute & Graphics Lab

Good building blocks for new asset generation methods are important, but the group also works on a wider range of graphics problems, such as frame extrapolation, perception, and large-scale visualization.

Advanced materials are as important as geometric representations. A recent Eurographics Symposium on Rendering (EGSR) 2024 paper, Nonorthogonal Reduction for Rendering Fluorescent Materials in Nonspectral Engines, by Alban Fichet and Laurent Belcour (Intel) in collaboration with Pascal Barla (Inria*), presents a method to accurately handle fluorescence in a nonspectral (for example, tristimulus) rendering engine, reproducing effects such as color shifts and increased luminance. Core to the method is a principled reduction technique that encodes the re-radiation into a low-dimensional matrix working in the space of the renderer's color-matching functions (CMFs). This reduction enables support for fluorescence in RGB renderers, including real-time applications.

Figure 4. Nonorthogonal reduction for rendering fluorescent materials in nonspectral engines.
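To make the idea of such a reduction concrete, here is a generic linear-algebra sketch, not the paper's derivation. Assume the renderer's CMFs are sampled at $n$ wavelengths and stored as the columns of $C \in \mathbb{R}^{n \times 3}$, and that the fluorescent material's re-radiation is an $n \times n$ matrix $R$ whose entry $R_{ij}$ gives the light emitted at wavelength $i$ per unit of light absorbed at wavelength $j$. A renderer that stores only tristimulus values $c = C^\top s$ must reconstruct a spectrum from $c$; any reconstruction basis $B \in \mathbb{R}^{n \times 3}$ with $C^\top B = I_3$ then yields a $3 \times 3$ matrix $M$:

$$
c_{\text{out}} = C^\top R\, s_{\text{in}} \approx C^\top R\, B\, c_{\text{in}} = M\, c_{\text{in}}, \qquad M = C^\top R\, B, \qquad C^\top B = I_3 .
$$

Choosing $B = C\,(C^\top C)^{-1}$ makes $B C^\top$ an orthogonal projection onto the span of the CMFs; any other $B$ satisfying $C^\top B = I_3$ gives an oblique, that is nonorthogonal, projection. Which basis the paper uses, and how it is derived, is not described in this post.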

These contributions to the state of the art in graphics, together with new building blocks for the graphics and machine learning communities, will help achieve higher-quality visuals.

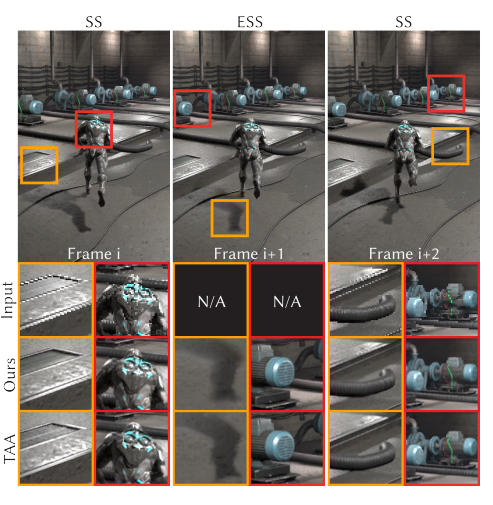

A recent SIGGRAPH Asia 2023 work, ExtraSS: A Framework for Joint Spatial Super Sampling and Frame Extrapolation, is a collaboration between Intel and the University of California, Santa Barbara. It introduces a framework that combines spatial supersampling and frame extrapolation to raise real-time rendering performance while balancing speed and quality. Because it generates temporally stable, high-quality results, the framework opens new possibilities for real-time rendering applications, pushing the boundaries of performance and photorealistic rendering in various domains.

Figure 5. ExtraSS: A framework for joint spatial supersampling and frame extrapolation.

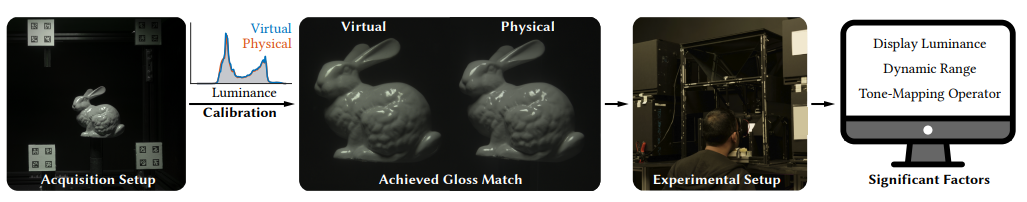

Another SIGGRAPH Asia 2023 paper, The Effect of Display Capabilities on the Gloss Consistency Between Real and Virtual Objects, is a collaboration between Intel and scientists across the research community. The work investigates how display capabilities affect gloss appearance relative to a real-world reference object. The authors conducted a series of gloss-matching experiments to study how gloss perception degrades as a function of individual factors: object albedo, display luminance, dynamic range, stereopsis, and tone mapping.

Figure 6. The effect of display capabilities on the gloss consistency between real and virtual objects.

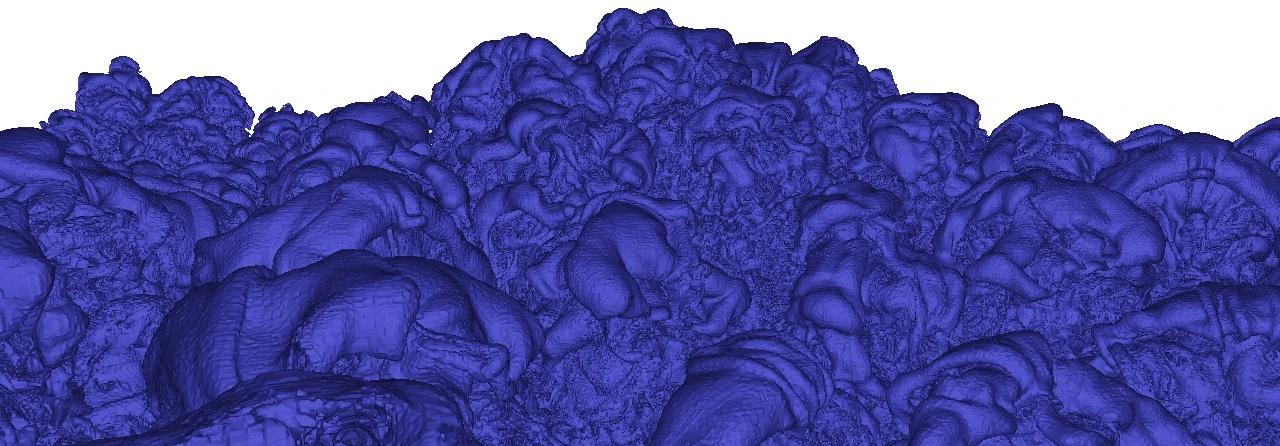

Speculative Progressive Raycasting for Memory Constrained Isosurface Visualization of Massive Volumes, published at IEEE Large Data Analysis and Visualization (LDAV), presents Intel's collaborative research with the University of Illinois at Chicago. It demonstrates a novel implicit isosurface rendering algorithm for interactive visualization of massive volumes within a small memory footprint. This addresses the limited memory of lightweight end-user devices when visualizing the massive data sets produced by today's simulations and data acquisition systems.

Figure 7. Speculative progressive raycasting for memory-constrained isosurface visualization of massive volumes.
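The paper's speculative, progressive scheme targets memory-constrained devices and is not reproduced here. As background, the sketch below shows the basic implicit isosurface raycasting that such methods build on: march along a ray through a scalar field, detect the first sign change of field(p) - iso, and refine the crossing by bisection. The function name and the analytic `field` callable are hypothetical; a real volume renderer would sample a (possibly compressed) voxel grid instead.

```python
import numpy as np


def first_isosurface_hit(field, origin, direction, iso, t_max, step=1e-2, refine_iters=30):
    """Return the first t in [0, t_max] where field(origin + t*direction) crosses iso.

    Returns None when no sign change of field - iso is found along the ray.
    Crossings thinner than `step` can be missed, as with any fixed-step marcher.
    """
    origin = np.asarray(origin, dtype=float)
    direction = np.asarray(direction, dtype=float)
    t0, f0 = 0.0, field(origin) - iso
    while t0 < t_max:
        t1 = min(t0 + step, t_max)
        f1 = field(origin + t1 * direction) - iso
        if f0 * f1 <= 0.0:
            # Bracketed a crossing in [t0, t1]: refine it by bisection.
            for _ in range(refine_iters):
                tm = 0.5 * (t0 + t1)
                fm = field(origin + tm * direction) - iso
                if f0 * fm <= 0.0:
                    t1 = tm
                else:
                    t0, f0 = tm, fm
            return 0.5 * (t0 + t1)
        t0, f0 = t1, f1
    return None
```

For example, with `field` returning the distance to the origin and `iso=1.0`, a ray starting at (-3, 0, 0) and marching along +x hits the unit sphere at t ≈ 2.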

A Rich and Open Ecosystem of Rendering Libraries

Beyond research papers, we have also improved our ecosystem of rendering libraries.

Opening the Development of Intel® Rendering Toolkit to Broader Ecosystem Contributions

Based on community feedback, we are making the libraries in the Intel® Rendering Toolkit truly open to community contributions. In particular, we opened our internal development and feature branches, which are now hosted directly on GitHub. We hope this will let the community contribute features and functionality directly and effectively. This new chapter brings more community-funded features and functionality, development transparency, channels for early development feedback, and cross-platform continuous integration (CI) for validating contributions. The components are:

- Intel® Embree

- Intel® Open Image Denoise

- Intel® Open Volume Kernel Library (Intel® Open VKL)

- Intel® Open Path Guiding Library (Intel® Open PGL)

- Intel® OSPRay

- Intel® OSPRay Studio

We invite the rendering community to help make the Intel Rendering Toolkit the industry's leading open source, cross-platform, cross-architecture graphics stack.

Enabling the 3D Creator and Rendering Markets with Intel® Open Image Denoise Becoming the Default Choice in Blender*

Intel Open Image Denoise provides full cross-vendor GPU support, running on Intel, NVIDIA*, AMD*, and Apple* GPUs. Its compelling quality and performance have led to its selection as the default denoiser in Blender* on all these platforms. In denoising benchmarks, the Intel® Arc™ A770 GPU outperforms the NVIDIA GeForce RTX 3090 and matches the performance and price of the higher-end NVIDIA GeForce RTX 4070. The integration into Blender establishes Intel GPUs as a viable platform for Blender and allows Intel to keep improving the Blender experience on current and future Intel platforms, as well as on other vendors' platforms.

Path Guiding Research with Intel® Open PGL in the Physically Based Rendering (PBRT) Research Framework

We released an official example integration of Intel Open PGL into a fork of the commonly used PBRT research framework. The integration shows the practical details of robustly integrating a production-ready path-guiding framework into a rendering system, and it lets researchers build upon and compare against the current state-of-the-art path-guiding algorithms used in production. Through continuous updates, new Intel Open PGL features will be directly accessible to the rendering and research communities, allowing them to work on open challenges more effectively. The integration of Intel Open PGL into PBRT is available on GitHub.

Conclusion

We are excited to share the progress and contributions of the Intel Visual Compute & Graphics (VCG) Lab to graphics technologies and their ecosystem. We hope that the tools for optimization, the new representations and materials, and the other high-profile technologies presented here, together with the progress in our open ecosystem of graphics libraries, will inspire researchers and help practitioners in the graphics and machine learning communities achieve higher-fidelity visuals.