It's no exaggeration to say that when developers start building an AI application, their go-to starting point is almost always an open source project. We rely on open frameworks like PyTorch or TensorFlow, Python libraries like scikit-learn, pandas, and NumPy, and, more recently, projects built specifically for large language models (LLMs), such as LangChain, LlamaIndex, or Hugging Face. All these open projects helped democratize AI, enabling developers to learn, develop, contribute, and create their own AI applications. Given this reliance on open source, it was hardly surprising to learn in Intel’s annual open source community survey that the majority of respondents (82%) rated open source in AI as “extremely or very important.”

Nevertheless, AI requires one thing other technologies don’t: a model. A model is not pre-built; it learns from data. To create, train, and use AI models, developers need data, sophisticated algorithms, and specialized tools. This raises some important questions for developers, such as: How can I train my model if I don’t have access to the data? Can I download a model to use it, or do I have to access it through a paid API? As a researcher, how can I investigate the architecture of the model I’ll be using and how it was trained?

Stories from the Community

At the Open Source Summit in Seattle, we spoke with community leaders who are having discussions around some of the biggest questions facing the industry today. We asked them about their thoughts on the importance of open source in AI and how each of their initiatives is helping to move the conversation forward, with a focus on accessibility, collaboration, and standards.

Accessible and Transparent

"Accessible" is often the first word that comes to mind for many of us when we think of open source. From computer vision models like YOLO and the famous ResNet, which recognize objects in images, to LLMs that enable language interaction, developers and researchers use and explore these models because they are readily available. It started with early open models like VGG-16, which enabled us to collaborate and make changes, such as adding layers to customize the model for specific tasks like improved image segmentation.

For Mer Joyce, founder of the co-design firm Do Big Good, openness in AI is crucial for collaboration. “Open source AI matters because it is an incredibly powerful tool,” she said, “and we want to make sure that it is accessible and transparent.” Joyce is the process facilitator for the Open Source AI Definition (OSAID), an initiative driven by the Open Source Initiative (OSI). Her job is to use co-design methods to ensure that the OSAID is itself created in a manner that is accessible, transparent, and collaborative. (You can read more about that on the project forum.)

This is one of the most important principles of open source. By maintaining openness and transparency, AI development becomes a collective effort, accelerating innovation and ensuring that the technology benefits a wide range of people. This approach improves the quality and reliability of AI while democratizing its advancements, allowing more individuals and organizations to contribute and benefit.

Ofer Hermoni, chairperson of Education & Outreach for LF AI & Data GenAI Commons, agrees. “I think it's important to have open source AI because we want everyone to be able to enjoy this very, maybe most, important technology that was invented in the history of humanity.”

“It’s also very important to be able to fine-tune and modify it to adjust to different cultures,” Hermoni added. It’s widely recognized that the majority of AI development is carried out in a small number of countries, as argued in The Limits of Global Inclusion in AI Development, which states that “the full lists of the top 100 universities and top 100 companies by publication index include no companies or universities based in Africa or Latin America.”

As the lead for LF AI & Data GenAI Commons’ education and outreach committee, Hermoni heads one of the five working groups of the Generative AI Commons. The others are Models and Data, Applications, Frameworks, and Responsible AI.

The Path to Open Collaboration

Should AI be open or closed? The debate has proponents on both sides, with some saying that openness enables necessary transparency while others argue that it opens the door to greater risk. “I know there are different camps in the AI conversation about open being the right path or being more closed being the right path,” said Open Source Science (OSSci) Community Lead Tim Bonnemann. “I personally think that open tends to be the better approach and that we need to develop these tools and approaches together to create safe, responsible, and trustworthy AI systems.”

Bonnemann leads the community for OSSci, which was launched in 2022 with the goal of accelerating scientific research by improving the open source infrastructure for scientists. “Unlike in the private sector, where open source really is very mature and robust,” said Bonnemann, “we’re seeing a lot of issues that are holding scientists back.” These issues include technological as well as social, governance, and policy challenges.

“It can be very difficult to know what open source tools even exist in a specific scientific domain,” said Bonnemann. “So, for many different reasons, what happens a lot is that people keep reinventing the wheel versus, in a good open source way, pooling their resources and building tools over time that are much more capable than each individual contributor could come up with on their own.”

OSSci is working to change that by helping science professionals understand what resources are out there. The Map of Open Source Science (MOSS) is a project from OSSci that aims to help researchers avoid duplication of work. “It's about creating a new interface that lets you, for example, as an individual scientist, discover the existing open source tools in your specific corner of the science world,” said Bonnemann, “and also discover where these tools have been cited, mentioned, or referenced in published research.” Bonnemann added that MOSS will also let scientists see the people behind the papers, research, and projects, enabling greater transparency and collaboration.

Standard Definitions

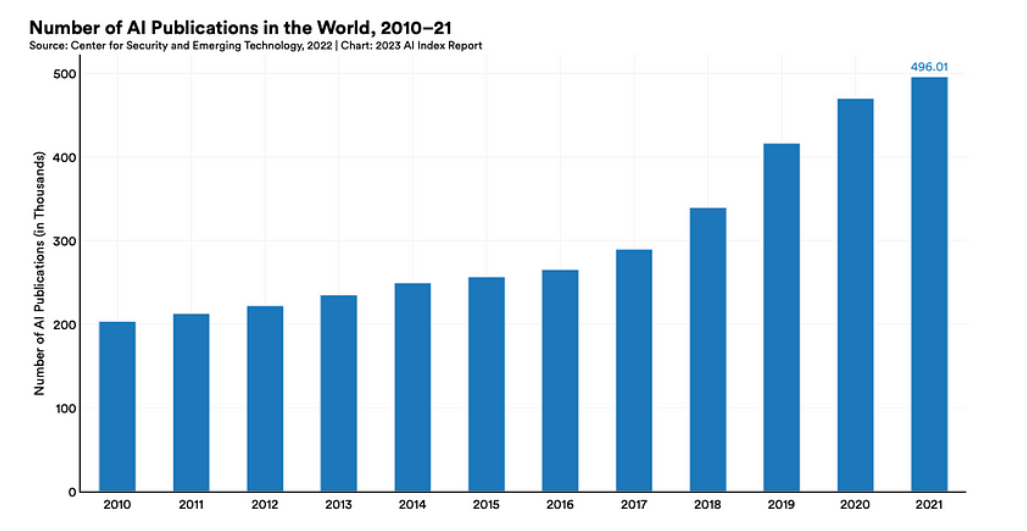

With AI dominating news headlines and product roadmaps, research has increased (just look at the rise in the number of published papers), but implementation at the enterprise level has lagged. In our annual open source community survey, the majority of respondents (61%) weren’t yet using AI in their workflow. Why? Almost everyone needs the benefits that this technology promises: to make us more effective, help us with daily tasks, offer better service to customers... the list is virtually endless.

The Artificial Intelligence Index Report from Stanford University shows how the number of papers published on AI is growing exponentially.

But what this rapidly evolving industry lacks is standards, something the Open Source Initiative (OSI) is working to correct, starting with defining open source AI. Mer Joyce, who also serves as the OSI’s co-design facilitator for the open source AI definition, highlighted the importance of having common definitions. “It is important to define open source AI because it is already out there,” she said. “It is already being used and defined by various actors. There's no stability, there's no consistency. And we want open source AI to be a consistent, stable part of the technological infrastructure for all actors.”

Joyce explained that the OSI’s goal is to create a definition for open source AI like the open source definition that the OSI currently stewards and make it a part of the stable public infrastructure that everyone can benefit from. “Our goal is to consult with global stakeholders and co-design this definition of open source AI to ensure that it is representative of the needs of the vast range of stakeholders,” said Joyce, “and also to integrate and weave together the real variety of different perspectives on what open source AI should mean.”

Moving Forward Together

There's still a ton of work ahead to achieve the goals of trustworthy, transparent, and truly collaborative AI. Open source AI remains a hot topic, and those goals are achievable if everyone gets involved, from big companies to developers in the community. The three initiatives mentioned earlier, OSSci (and MOSS), the Generative AI Commons (LF AI & Data), and the Open Source AI Definition, are crucial for keeping AI open. Don't hesitate to get involved.

Join us to advance this critical conversation and drive positive change in the open source ecosystem. Follow us on LinkedIn and X, and engage in the dialogue!

About the Author

Ezequiel Lanza is an open source AI evangelist on Intel’s Open Ecosystem team, passionate about helping people discover the exciting world of AI. He’s also a frequent AI conference presenter and creator of use cases, tutorials, and guides to help developers adopt open source AI tools. He holds an MS in data science. Find him on X at @eze_lanza and LinkedIn at /eze_lanza.