I am pleased to announce the 2022 version of the award-winning Intel® oneAPI tools. These enhanced tools offer expanded cross-architecture features that give developers greater utility and architectural choice to accelerate computing. The tools offer high-performance support for programming in C, C++, Fortran, Python, SYCL, OpenMP, and MPI, as well as through a wide collection of oneAPI libraries.

New capabilities include performance acceleration for AI workloads and ray tracing visualization workloads; expanded support for a wider range of devices; ever-expanding support for standards; new microarchitecture optimizations; over 900 new features since the launch of the 2021 version; LLVM-based compilers that span C, C++, Fortran, SYCL, and OpenMP in a common, unified manner; data parallel Python for CPUs and GPUs; and advanced accelerator performance modeling and tuning.

These development tools are available now as downloads from Intel® Developer Zone and through apt-get, Anaconda, other repositories, and containers; they also come pre-installed on Intel® DevCloud for easy use. Using Intel® DevCloud, you can try out oneAPI on CPUs, GPUs, and FPGAs!

oneAPI Acceleration: Speeding AI

oneAPI helps supercharge your AI workflow by offering seamless software acceleration. Libraries such as oneDNN, oneDAL, oneCCL, and oneMKL form the foundation that drives the performance and productivity of Intel’s portfolio of AI tools and framework optimizations.

The Intel® oneAPI AI Analytics Toolkit accelerates end-to-end data science and machine learning pipelines, and it now includes the Intel® Neural Compressor to help simplify post-training optimization across multiple deep learning frameworks. With low-precision quantization, pruning, knowledge distillation, and other compression techniques, AI developers can now achieve higher inference performance and engineering productivity on Intel platforms.
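To make low-precision quantization concrete, here is a minimal C++ sketch of symmetric, per-tensor int8 quantization. It is not the Intel Neural Compressor API or its algorithm; the scale rule (the largest absolute value maps to ±127) and the names `quantize_int8`, `dequantize`, and `QuantizedTensor` are assumptions chosen for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

// Hypothetical container: int8 codes plus the single float scale that decodes them.
struct QuantizedTensor {
    std::vector<std::int8_t> values;
    float scale;
};

// Symmetric per-tensor quantization: q = round(x / scale), clamped to [-127, 127],
// with scale = max|x| / 127 so the largest-magnitude value maps to +/-127.
QuantizedTensor quantize_int8(const std::vector<float>& x) {
    float max_abs = 0.0f;
    for (float v : x) max_abs = std::max(max_abs, std::fabs(v));
    // Avoid dividing by zero for an all-zero tensor.
    float scale = max_abs > 0.0f ? max_abs / 127.0f : 1.0f;
    QuantizedTensor q{{}, scale};
    q.values.reserve(x.size());
    for (float v : x) {
        float r = std::round(v / scale);
        r = std::max(-127.0f, std::min(127.0f, r));
        q.values.push_back(static_cast<std::int8_t>(r));
    }
    return q;
}

// Approximate reconstruction: x ~= q * scale.
std::vector<float> dequantize(const QuantizedTensor& q) {
    std::vector<float> out;
    out.reserve(q.values.size());
    for (std::int8_t v : q.values) out.push_back(static_cast<float>(v) * q.scale);
    return out;
}
```

Each value is stored in one byte instead of four, at the cost of a rounding error of at most half a scale step. Production tools layer calibration and accuracy-aware tuning on top of this basic idea.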

Using oneAPI libraries, optimizations added to TensorFlow have shown 10x performance gains compared to prior versions without our optimizations. Machine learning algorithms in Python run an average of 27 times faster in training and 36 times faster in inference with the optimized scikit-learn, which you can download from Anaconda and elsewhere. Given these real, delivered results, achieved with very little effort, it is easy to see why our optimizations are being incorporated into many of the most popular AI frameworks, distributions (including Anaconda), and applications to help power AI and machine learning.

oneAPI also helps power new workstations that greatly enhance AI development, with enormous memory capacities thanks to Intel® Optane™ memory. Dell, HP, and Lenovo are offering numerous Linux laptops, desktops, and workstations that feature select Intel® Core™ and Intel® Xeon® processors and validated pre-loads of the Intel oneAPI AI Analytics Toolkit. The result is a seamless, easy-to-use solution that enables data scientists to iterate, visualize, and analyze complex data at scale.

The VentureBeat article "Scaling AI and data science – 10 smart ways to move from pilot to production" is an excellent place to learn more about how the open, interoperable, and extensible AI compute platform that we are building will help you scale your AI projects to production. Also read about Software AI Accelerators and how they can boost your AI performance at no cost on the same hardware configuration.

oneAPI Approach Matters

Our implementation of oneAPI, the Intel® oneAPI tools, is based on decades of Intel software products that have been widely used to build many of the world’s applications, and on multiple open source projects to which Intel contributes heavily. The tools are true to the oneAPI vision of supporting multiple device types and architectures, with constantly expanding support for CPUs, GPUs, FPGAs, and more.

Embree: Path Tracing for Photorealistic Rendering

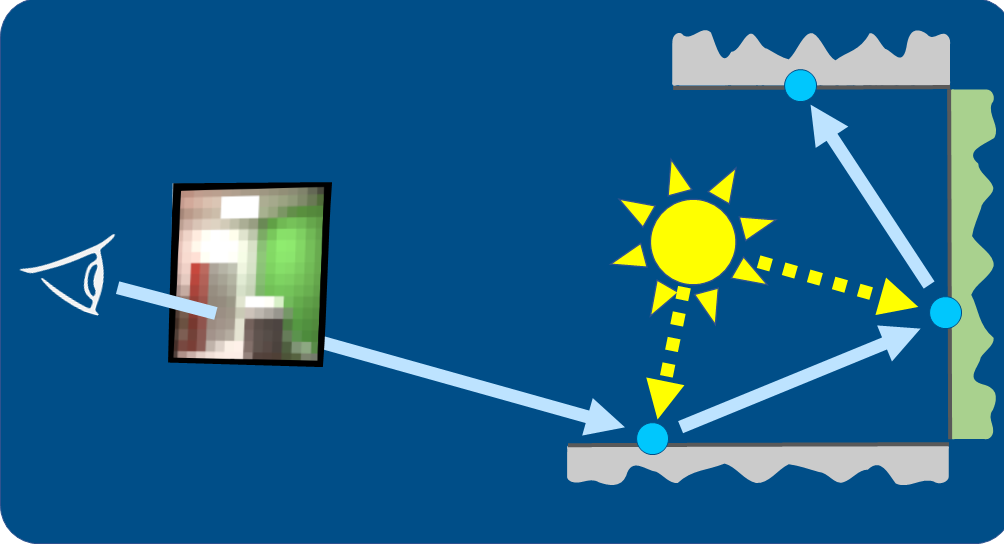

Path tracing is an algorithm that simulates light transport in a three-dimensional scene to produce an image. Rendering complex photorealistic scenes with many real-world elements (e.g., trees, cars, people, tables) places heavy demands on both memory size and computation, which has kept path tracing primarily in the professional realm of film, detailed product design, and scientific visualization on supercomputers, where CPUs have been the best processing platform. Recent technology developments, notably ray tracing acceleration on GPUs, are bringing path tracing’s eye-popping photorealistic imagery to everyone.
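At its core, a path tracer estimates integrals of incoming light by random sampling, and that is where both its realism and its noise come from. The toy below is not Embree code: it estimates the irradiance at a point under a uniform sky of radiance L, whose exact value is πL, from uniformly sampled hemisphere directions. The function name `estimate_irradiance` and the uniform-sky setup are assumptions for illustration.

```cpp
#include <cassert>
#include <cmath>
#include <random>

// Monte Carlo estimate of irradiance E = integral over the hemisphere of L * cos(theta) dw
// at a surface point under a uniform sky of radiance L. The exact answer is pi * L.
// Uniform hemisphere sampling has pdf 1 / (2*pi), and for uniformly distributed
// directions cos(theta) (the z-component) is itself uniform on [0, 1].
double estimate_irradiance(double sky_radiance, int samples, std::mt19937& rng) {
    assert(samples > 0);
    const double pi = 3.14159265358979323846;
    std::uniform_real_distribution<double> uniform(0.0, 1.0);
    const double pdf = 1.0 / (2.0 * pi);
    double sum = 0.0;
    for (int i = 0; i < samples; ++i) {
        double cos_theta = uniform(rng);        // z of a uniform hemisphere direction
        sum += sky_radiance * cos_theta / pdf;  // weight each sample by 1 / pdf
    }
    return sum / samples;                       // unbiased: expected value is pi * L
}
```

With one sample, the estimate can land anywhere in [0, 2πL); averaging N samples shrinks the error roughly as 1/sqrt(N). That slow convergence is why one-sample-per-pixel images look noisy and why denoising matters so much.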

Adding ray tracing hardware on GPUs is transforming the graphics industry, but it creates a challenge for software developers: more platforms to program. The oneAPI approach of encouraging and supporting programming with an xPU mindset helps avoid the extreme case of having separate code bases for CPUs and GPUs.

We have a solution now: an xPU path tracer built with two oneAPI Rendering Toolkit libraries, the Academy Award-winning Embree and the cutting-edge AI denoiser Open Image Denoise.

Intel® Open Image Denoise: ML Algorithms to Quiet the Noise

This video shows real-time screen captures of path tracing at one sample per pixel, with temporal anti-aliasing, using Intel® Embree and Intel® Open Image Denoise, all running on an upcoming Intel® Xe architecture-based system.

Watch this video to learn more about Embree.

Path tracing with Intel software helped clean up noise in the visual effects of MGM’s animated feature film The Addams Family 2. An in-depth article on Forbes explores the details of how this works and why it matters.
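Why does a denoiser help so much at one sample per pixel? The toy below swaps in a plain 3x3 box filter, a far cruder technique than the machine-learning filters in Intel Open Image Denoise, and applies it to a hypothetical 1-spp image of a flat grey wall whose pixels are single random samples with expected value 0.5. Averaging nine independent neighbours cuts the variance roughly ninefold. All helper names are illustrative only.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical stand-in for a 1-spp render of a flat grey wall:
// each pixel is one random sample whose expected value is 0.5.
std::vector<float> noisy_one_spp_image(int width, int height, unsigned seed) {
    std::mt19937 rng(seed);
    std::uniform_real_distribution<float> sample(0.0f, 1.0f);
    std::vector<float> img(static_cast<std::size_t>(width) * height);
    for (float& p : img) p = sample(rng);
    return img;
}

// 3x3 box filter over a row-major, single-channel image.
// Edge pixels average only the neighbours that lie inside the image.
std::vector<float> box_filter_3x3(const std::vector<float>& img, int width, int height) {
    assert(static_cast<int>(img.size()) == width * height);
    std::vector<float> out(img.size());
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            float sum = 0.0f;
            int count = 0;
            for (int dy = -1; dy <= 1; ++dy) {
                for (int dx = -1; dx <= 1; ++dx) {
                    int nx = x + dx, ny = y + dy;
                    if (nx < 0 || ny < 0 || nx >= width || ny >= height) continue;
                    sum += img[ny * width + nx];
                    ++count;
                }
            }
            out[y * width + x] = sum / count;
        }
    }
    return out;
}

// Mean squared error of every pixel against a known reference value.
double mse_vs(const std::vector<float>& img, float reference) {
    double sum = 0.0;
    for (float p : img) {
        double d = static_cast<double>(p) - reference;
        sum += d * d;
    }
    return sum / img.size();
}
```

A box filter also blurs edges and texture, which is exactly what learned denoisers are designed to avoid, so treat this only as intuition for the variance reduction, not as a model of how Open Image Denoise works.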

oneAPI offers incredible capabilities and performance while reducing the burden of maintaining multiple code bases.

FPGAs: Supporting More Than Just GPUs

FPGA programming via SYCL support in the DPC++ compiler keeps getting richer, with features such as arbitrary precision integer, fixed point, floating point, and complex data types; pipes for kernel-to-kernel and kernel-to-IO communication; and the buffer_location accessor property for control over memory allocations in deployments with multiple memory types (e.g., HBM, DRAM, and/or Optane). We will continue to add features that provide more control over FPGA designs in the upcoming year.
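For readers new to fixed-point types, this C++ sketch shows the arithmetic they perform: a value is stored as an integer scaled by 2^FracBits, addition is plain integer addition, and multiplication needs a wider intermediate followed by a shift. The `Fixed` template is a hypothetical illustration, not the fixed-point type provided for FPGA kernels; on an FPGA the chosen bit widths become hardware resources, which is why fine control over them matters.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical signed fixed-point type: FracBits fractional bits in a 32-bit integer.
template <int FracBits>
struct Fixed {
    static_assert(FracBits > 0 && FracBits < 31, "FracBits must leave room for the integer part");
    std::int32_t raw;  // real value = raw / 2^FracBits

    static Fixed from_double(double v) {
        // Round to the nearest representable step of 2^-FracBits.
        return Fixed{static_cast<std::int32_t>(std::lround(v * (1 << FracBits)))};
    }
    double to_double() const { return static_cast<double>(raw) / (1 << FracBits); }

    // Same scale on both sides, so addition is plain integer addition.
    Fixed operator+(Fixed o) const { return Fixed{static_cast<std::int32_t>(raw + o.raw)}; }

    Fixed operator*(Fixed o) const {
        // Widen to 64 bits so the product cannot overflow, then drop the extra
        // FracBits of scale (the shift truncates toward negative infinity).
        std::int64_t prod = static_cast<std::int64_t>(raw) * o.raw;
        return Fixed{static_cast<std::int32_t>(prod >> FracBits)};
    }
};
```

For example, `Fixed<16>::from_double(1.5) * Fixed<16>::from_double(2.25)` yields exactly 3.375, while a value like 0.1 in `Fixed<8>` can only be approximated to within half a step of 1/256.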

Intel DevCloud offers access to Intel® Arria® and Intel® Stratix® FPGAs. Early next year, we will add Intel® Agilex™ FPGAs to the Intel DevCloud; they offer up to 40% higher performance (geomean vs. Intel® Stratix® 10) or up to 40% lower power for data center, networking, and edge computing applications. Using Intel DevCloud, you can try out oneAPI on CPUs, GPUs, or FPGAs. Get started today, and enjoy 2022 as we grow with additional hardware.
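The Agilex comparison above is quoted as a geometric mean ("geomean") across workloads. As a quick illustration with hypothetical speedup ratios, the geometric mean treats a 2x gain on one workload and a 2x loss on another as cancelling out, which an arithmetic mean would not. The function name `geomean` is our own.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Geometric mean of per-workload speedup ratios (new_perf / old_perf).
// Computed as exp(mean(log(r))) so long lists of ratios cannot overflow.
double geomean(const std::vector<double>& ratios) {
    assert(!ratios.empty());
    double log_sum = 0.0;
    for (double r : ratios) {
        assert(r > 0.0);  // ratios must be positive for the logarithm
        log_sum += std::log(r);
    }
    return std::exp(log_sum / ratios.size());
}
```

With ratios {2.0, 0.5} the geomean is 1.0 (no net change), whereas the arithmetic mean would report a misleading 1.25.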

Industry Standards

Here is an update on our aggressive support of industry language standards:

- All Intel® C/C++/Fortran compilers support OpenMP 4.5 and most of 5.0 and 5.1.

- Our LLVM-based compilers are our compilers for the long haul; we are aggressively enhancing their status as the first truly xPU-oriented compilers for C, C++, and Fortran:

- The Intel C “icx” compiler is built on Clang, so it fully supports C99 and C11, as well as some of C17 and (parts proposed for) C23, with more improvements coming in future updates.

- The Intel C++ “icpx” compiler fully supports C++11, C++14, and C++17, some of C++20 (with improvements underway now), some of (parts proposed for) C++23, and much of SYCL 2020, with more improvements coming in future releases.

- Intel Fortran “ifx” supports Fortran 2003 except parameterized derived types (PDTs) and Fortran 2008 except coarrays, and it supports OpenMP 5.1 compute offload to GPUs. The LLVM-based Fortran compiler will close the feature gap with the classic Fortran compiler in upcoming releases while continuing to expand its unique advantage of being a truly xPU Fortran compiler.

- Our classic Intel C/C++ compiler supports almost all of C99, C11, C17, C++11, C++14, C++17, and only a little of C++20, on CPUs only.

- Our classic Intel Fortran “ifort” supports all of Fortran 2003, 2008, and 2018 on CPUs only, plus all of OpenMP 4.5 and most of 5.0 and 5.1.
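As a small example of the OpenMP offload support described above, here is SAXPY written with OpenMP 4.5 `target` constructs. With the LLVM-based compilers, GPU offload is typically enabled with flags such as `-fiopenmp -fopenmp-targets=spir64`; please check your compiler's documentation for your target. The function name `saxpy_offload` is our own.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// SAXPY (y = a*x + y) offloaded with OpenMP 4.5 target constructs.
// If OpenMP is not enabled at compile time, the pragma is ignored and
// the loop simply runs serially on the host with the same result.
void saxpy_offload(float a, const std::vector<float>& x, std::vector<float>& y) {
    assert(x.size() == y.size());
    const std::size_t n = x.size();
    const float* xp = x.data();
    float* yp = y.data();
    // map(to:) copies x to the device; map(tofrom:) copies y there and back.
    #pragma omp target teams distribute parallel for map(to: xp[0:n]) map(tofrom: yp[0:n])
    for (std::size_t i = 0; i < n; ++i) {
        yp[i] = a * xp[i] + yp[i];
    }
}
```

By default, if no offload device is available at run time, the OpenMP runtime executes the target region on the host, so the same source works on CPU-only systems.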

The Intel® MPI Library conforms to MPI 3.1, including support for GPU-to-rank pinning, GPU memory allocations (USM), and performance optimizations for the Google Cloud Platform fabric. We anticipate conforming to MPI 4.0 in the upcoming year.

Our Python support optimizes the performance of Python 3.x.

Advanced Microarchitecture Support

- Intel® VTune Profiler has support for the Intel® microarchitecture code named Alder Lake in the Microarchitecture Exploration and Memory Access analyses.

- Intel® oneAPI Deep Neural Network Library supports Intel® Advanced Matrix Extensions (Intel® AMX) instructions for int8 inference and bfloat16 training AI workloads.

- Countless compiler and library optimizations for the latest microarchitectures.
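bfloat16, the format behind the AMX training path mentioned above, keeps float32's sign bit and full 8-bit exponent but only 7 mantissa bits, so it is simply the upper half of a float32: same range, less precision. This scalar sketch converts between the two with round-to-nearest-even; it is an illustration only, not how oneDNN or AMX hardware perform the conversion, and the function names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

// float32 -> bfloat16 (upper 16 bits), rounding the discarded 16 bits to nearest, ties to even.
std::uint16_t float_to_bf16(float f) {
    std::uint32_t bits;
    std::memcpy(&bits, &f, sizeof bits);  // well-defined type punning
    if ((bits & 0x7F800000u) == 0x7F800000u && (bits & 0x007FFFFFu) != 0) {
        // NaN: truncate, but keep a mantissa bit set so the result stays a quiet NaN.
        return static_cast<std::uint16_t>((bits >> 16) | 0x0040u);
    }
    std::uint32_t lsb = (bits >> 16) & 1u;  // lowest bit that survives truncation
    bits += 0x7FFFu + lsb;                  // round half to even
    return static_cast<std::uint16_t>(bits >> 16);
}

// bfloat16 -> float32 is exact: the missing low mantissa bits become zero.
float bf16_to_float(std::uint16_t h) {
    std::uint32_t bits = static_cast<std::uint32_t>(h) << 16;
    float f;
    std::memcpy(&f, &bits, sizeof f);
    return f;
}
```

For example, 1.0f maps to 0x3F80, and 1.00390625f (exactly halfway between two bfloat16 values) rounds to the even neighbour, 0x3F80.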

You Asked, We Deliver

Here is a sample of the over 900 new features Intel oneAPI 2022 tools deliver:

- Visual Studio Code extensions help with common developer tasks for better productivity.

- With this oneAPI release, we are introducing support for Windows Subsystem for Linux 2 (WSL2), expanding the ways oneAPI can be used. With WSL2 in Windows 10 and Windows 11, you can use a native Linux distribution of the Intel oneAPI compilers and libraries on Windows for CPU and GPU workflows. In a future oneAPI release, we will expand this support to analysis tools and communication libraries. I will dive into this in a future blog, because you can do even more than these Microsoft and Intel blogs show!

- Intel® MPI Library includes new performance optimizations for the Google Cloud Platform fabric (OFI/tcp).

- IoT developers can now use the tools via the meta-intel layer provided through OpenEmbedded or the Yocto Project to accelerate development of optimized Yocto Project Linux kernels and applications.

- Intel® Inspector has improved memory and threading error analysis for DPC++ and OpenMP offloaded code executed on CPU targets. It adds a correctness check for memcpy() function arguments on Windows, improves C++ stack frame visualization, and increases the accuracy of libc library reporting.

- Intel® Embree ray tracing libraries now include ARM support, in addition to colored transparency, colored shadows, and cone and cylinder geometries, which have been re-added to help with scientific visualization and general rendering.

- Intel® Open Volume Kernel Library (Open VKL) version 1.0 supports native use on ARM CPUs and adds new API features, including interval and hit iterator contexts; iteration on any volume attribute; configurable background values; tricubic filtering; VDB multi-attribute volumes; faster sampling of structured and VDB volumes; quicker interval iteration on structured, VDB, and unstructured volumes; support for structured FP16, VDB FP16, VDB motion blur, and VDB cubic filtering; and the capability to handle multiple volumes in the same scene.

- Intel® Open Image Denoise includes a new denoising mode that provides higher-quality renderings for final frames, plus support for directional lightmaps and Apple Silicon.

- Intel® OSPRay now supports transform motion blur and adds support for the MPI Module library.

- Intel® OSPRay Studio’s graphical user interface includes an improved lights editor for easy scene lighting; glTF loader improvements that enable animation and skinning of triangle meshes, punctual lights, materials, and textures; more camera states; and better scene file loading and saving that includes model transforms, materials, lights, and camera state. Additional new features include Python bindings for the Studio Scene Graph, parameters to control Intel OSPRay’s transformation and camera motion blur effects, and UDIM texture workflow support.

- Intel® VTune Profiler adds flame graph visualization for enhanced productivity.

- Intel® Advisor delivers breakthrough GPU modeling and roofline analysis.
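The classic roofline model, which roofline analysis in tools such as Intel Advisor builds on, bounds a kernel's attainable performance by the lesser of the machine's peak compute rate and its memory bandwidth multiplied by the kernel's arithmetic intensity (FLOPs per byte moved). A minimal sketch of that bound, with hypothetical function names and hardware numbers, looks like this:

```cpp
#include <algorithm>
#include <cassert>

// Classic roofline bound:
//   attainable GFLOP/s = min(peak_gflops, arithmetic_intensity * bandwidth_gbs)
// where arithmetic_intensity is FLOPs performed per byte moved to/from memory.
double roofline_gflops(double peak_gflops, double bandwidth_gbs, double arithmetic_intensity) {
    assert(peak_gflops > 0.0 && bandwidth_gbs > 0.0 && arithmetic_intensity >= 0.0);
    return std::min(peak_gflops, arithmetic_intensity * bandwidth_gbs);
}

// The "ridge point": the intensity above which a kernel is compute-bound
// rather than memory-bound.
double ridge_point(double peak_gflops, double bandwidth_gbs) {
    assert(bandwidth_gbs > 0.0);
    return peak_gflops / bandwidth_gbs;
}
```

For example, single-precision SAXPY performs 2 FLOPs per 12 bytes moved (load x, load y, store y), an intensity of about 0.17, so on a hypothetical 1000 GFLOP/s, 100 GB/s machine it is capped near 17 GFLOP/s, far below the ridge point of 10 FLOPs per byte. Real tools measure these quantities per kernel and across memory levels rather than taking them as inputs.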

Get the Tools Now

You can get the updates in the same places you got your original downloads: the Intel oneAPI tools on Intel Developer Zone, containers, apt-get, Anaconda, and other distributions.

The tools are also accessible on the Intel DevCloud which includes very useful oneAPI training resources. This in-the-cloud environment is a fantastic place to develop, test and optimize code, for free, across an array of Intel CPUs and accelerators without having to set up your own systems.

Download Today the Best in oneAPI

oneAPI makes programming truly open in this heterogeneous computing era, so that common tooling (including libraries, compilers, debuggers, analyzers, and frameworks) exists to support systems regardless of vendor or architecture.

I encourage you to update and get the best that Intel oneAPI tools have to offer. When you care about top performance, we are here to help.

Be sure to post your questions to the community forums or check out the options for paid Priority Support if you desire more personal support.

Visit my blog for more encouragement on learning about the heterogeneous programming that is possible with oneAPI.

oneAPI is an attitude, a joy, and a journey - to help all software developers.

About oneAPI

oneAPI is an open, unified, and cross-architecture programming model for heterogeneous computing. oneAPI embraces an open, multivendor, multiarchitecture approach to programming tools (libraries, compilers, debuggers, analyzers, frameworks, etc.). Based on standards, the programming model simplifies software development and delivers uncompromised performance for accelerated compute without proprietary lock-in, while enabling the integration of legacy code. A recent post by Sanjiv Shah discussed the oneAPI 1.1 specification and the upcoming 1.2 specification. Learn more at oneapi.io.